yolo不多做介绍,请参相关博客和论文

本文主要是使用pytorch来对yolo中每一步进行实现 参考:https://blog.paperspace.com/tag/series-yolo/

需要了解:

- 卷积神经网络原理及pytorch实现

- yolo等目标检测算法的检测原理,相关概念如 anchor(锚点)、ROI(感兴趣区域)、IOU(交并比)、NMS(非极大值抑制)、LR softmax分类、边框回归等

本文主要分为四个部分:

- yolo网络层级的定义

- 向前传播

- 置信度阈值和非极大值抑制

- 输入和输出流程

yolo网络层级的定义

Darknet 是 yolo 对目标特征提取的框架,从yolov1的darknet 到 v2,v3的darknet-19,darknet-53

可以看到yolov3的时候已经没有全连接层了,最后只有一个池化层,还加入了resnet的机制。

Demo

layer filters size input output

0 conv 32 3 x 3 / 1 416 x 416 x 3 -> 416 x 416 x 32 0.299 BFLOPs

1 conv 64 3 x 3 / 2 416 x 416 x 32 -> 208 x 208 x 64 1.595 BFLOPs

2 conv 32 1 x 1 / 1 208 x 208 x 64 -> 208 x 208 x 32 0.177 BFLOPs

3 conv 64 3 x 3 / 1 208 x 208 x 32 -> 208 x 208 x 64 1.595 BFLOPs

4 res 1 208 x 208 x 64 -> 208 x 208 x 64

5 conv 128 3 x 3 / 2 208 x 208 x 64 -> 104 x 104 x 128 1.595 BFLOPs

6 conv 64 1 x 1 / 1 104 x 104 x 128 -> 104 x 104 x 64 0.177 BFLOPs

7 conv 128 3 x 3 / 1 104 x 104 x 64 -> 104 x 104 x 128 1.595 BFLOPs

8 res 5 104 x 104 x 128 -> 104 x 104 x 128

9 conv 64 1 x 1 / 1 104 x 104 x 128 -> 104 x 104 x 64 0.177 BFLOPs

10 conv 128 3 x 3 / 1 104 x 104 x 64 -> 104 x 104 x 128 1.595 BFLOPs

11 res 8 104 x 104 x 128 -> 104 x 104 x 128

12 conv 256 3 x 3 / 2 104 x 104 x 128 -> 52 x 52 x 256 1.595 BFLOPs

13 conv 128 1 x 1 / 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BFLOPs

14 conv 256 3 x 3 / 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BFLOPs

15 res 12 52 x 52 x 256 -> 52 x 52 x 256

16 conv 128 1 x 1 / 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BFLOPs

17 conv 256 3 x 3 / 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BFLOPs

18 res 15 52 x 52 x 256 -> 52 x 52 x 256

19 conv 128 1 x 1 / 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BFLOPs

20 conv 256 3 x 3 / 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BFLOPs

21 res 18 52 x 52 x 256 -> 52 x 52 x 256

22 conv 128 1 x 1 / 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BFLOPs

23 conv 256 3 x 3 / 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BFLOPs

24 res 21 52 x 52 x 256 -> 52 x 52 x 256

25 conv 128 1 x 1 / 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BFLOPs

26 conv 256 3 x 3 / 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BFLOPs

27 res 24 52 x 52 x 256 -> 52 x 52 x 256

28 conv 128 1 x 1 / 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BFLOPs

29 conv 256 3 x 3 / 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BFLOPs

30 res 27 52 x 52 x 256 -> 52 x 52 x 256

31 conv 128 1 x 1 / 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BFLOPs

32 conv 256 3 x 3 / 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BFLOPs

33 res 30 52 x 52 x 256 -> 52 x 52 x 256

34 conv 128 1 x 1 / 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BFLOPs

35 conv 256 3 x 3 / 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BFLOPs

36 res 33 52 x 52 x 256 -> 52 x 52 x 256

37 conv 512 3 x 3 / 2 52 x 52 x 256 -> 26 x 26 x 512 1.595 BFLOPs

38 conv 256 1 x 1 / 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BFLOPs

39 conv 512 3 x 3 / 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BFLOPs

40 res 37 26 x 26 x 512 -> 26 x 26 x 512

41 conv 256 1 x 1 / 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BFLOPs

42 conv 512 3 x 3 / 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BFLOPs

43 res 40 26 x 26 x 512 -> 26 x 26 x 512

44 conv 256 1 x 1 / 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BFLOPs

45 conv 512 3 x 3 / 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BFLOPs

46 res 43 26 x 26 x 512 -> 26 x 26 x 512

47 conv 256 1 x 1 / 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BFLOPs

48 conv 512 3 x 3 / 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BFLOPs

49 res 46 26 x 26 x 512 -> 26 x 26 x 512

50 conv 256 1 x 1 / 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BFLOPs

51 conv 512 3 x 3 / 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BFLOPs

52 res 49 26 x 26 x 512 -> 26 x 26 x 512

53 conv 256 1 x 1 / 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BFLOPs

54 conv 512 3 x 3 / 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BFLOPs

55 res 52 26 x 26 x 512 -> 26 x 26 x 512

56 conv 256 1 x 1 / 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BFLOPs

57 conv 512 3 x 3 / 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BFLOPs

58 res 55 26 x 26 x 512 -> 26 x 26 x 512

59 conv 256 1 x 1 / 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BFLOPs

60 conv 512 3 x 3 / 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BFLOPs

61 res 58 26 x 26 x 512 -> 26 x 26 x 512

62 conv 1024 3 x 3 / 2 26 x 26 x 512 -> 13 x 13 x1024 1.595 BFLOPs

63 conv 512 1 x 1 / 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BFLOPs

64 conv 1024 3 x 3 / 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BFLOPs

65 res 62 13 x 13 x1024 -> 13 x 13 x1024

66 conv 512 1 x 1 / 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BFLOPs

67 conv 1024 3 x 3 / 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BFLOPs

68 res 65 13 x 13 x1024 -> 13 x 13 x1024

69 conv 512 1 x 1 / 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BFLOPs

70 conv 1024 3 x 3 / 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BFLOPs

71 res 68 13 x 13 x1024 -> 13 x 13 x1024

72 conv 512 1 x 1 / 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BFLOPs

73 conv 1024 3 x 3 / 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BFLOPs

74 res 71 13 x 13 x1024 -> 13 x 13 x1024

75 conv 512 1 x 1 / 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BFLOPs

76 conv 1024 3 x 3 / 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BFLOPs

77 conv 512 1 x 1 / 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BFLOPs

78 conv 1024 3 x 3 / 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BFLOPs

79 conv 512 1 x 1 / 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BFLOPs

80 conv 1024 3 x 3 / 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BFLOPs

81 conv 255 1 x 1 / 1 13 x 13 x1024 -> 13 x 13 x 255 0.088 BFLOPs

82 detection

83 route 79

84 conv 256 1 x 1 / 1 13 x 13 x 512 -> 13 x 13 x 256 0.044 BFLOPs

85 upsample 2x 13 x 13 x 256 -> 26 x 26 x 256

86 route 85 61

87 conv 256 1 x 1 / 1 26 x 26 x 768 -> 26 x 26 x 256 0.266 BFLOPs

88 conv 512 3 x 3 / 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BFLOPs

89 conv 256 1 x 1 / 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BFLOPs

90 conv 512 3 x 3 / 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BFLOPs

91 conv 256 1 x 1 / 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BFLOPs

92 conv 512 3 x 3 / 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BFLOPs

93 conv 255 1 x 1 / 1 26 x 26 x 512 -> 26 x 26 x 255 0.177 BFLOPs

94 detection

95 route 91

96 conv 128 1 x 1 / 1 26 x 26 x 256 -> 26 x 26 x 128 0.044 BFLOPs

97 upsample 2x 26 x 26 x 128 -> 52 x 52 x 128

98 route 97 36

99 conv 128 1 x 1 / 1 52 x 52 x 384 -> 52 x 52 x 128 0.266 BFLOPs

100 conv 256 3 x 3 / 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BFLOPs

101 conv 128 1 x 1 / 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BFLOPs

102 conv 256 3 x 3 / 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BFLOPs

103 conv 128 1 x 1 / 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BFLOPs

104 conv 256 3 x 3 / 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BFLOPs

105 conv 255 1 x 1 / 1 52 x 52 x 256 -> 52 x 52 x 255 0.353 BFLOPs

106 detection

1.具体性能比较暂不多提,实现方法如下:

darknet 网络的 config 从官网中可以下载: https://pjreddie.com/darknet/yolo/

每个config包含如下一些模块:

1.1.卷积网络模型,步长有1、2。步长为2做上采样

1.2.yolo3中shortcut 类似于resnet,跨通道连接,

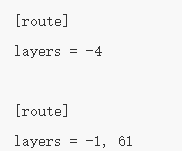

1.3.route层。当层级有两个值时,它将返回由这两个值索引的拼接特征图。实验中为-1 和 61,因此该层级将输出从前一层级(-1)到第 61 层的特征图,并将它们按深度拼接。最后检测时像FPN中对不同层次的特征一个结合,更好检测小目标

1. 4.上采样层

1.5.net网络训练的参数层

1.6.yolo层,设置一些参数,anchor的尺寸是由k-means聚类得到的

所以我们要解析config文件来构建我们的网络。首先创建darknet.py 文件(读取的是yolo3的darknet-53)

from __future__ import division

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.autograd import Variable

import numpy as np

def parse_cfg(cfgfile):

#解析cfg,将每个块存储为词典(键 值),然后添加到列表blocks中

file = open(cfgfile, 'r')

lines = file.read().split('\n') #按行存储

lines = [x for x in lines if len(x) > 0] #不读空行

lines = [x for x in lines if x[0] != '#'] #不读注释

lines = [x.rstrip().lstrip() for x in lines]#去除边缘空白

#遍历列表 得到块

block = {}

blocks = []

for line in lines:

if line[0] == '[': #起始mark 标记

if len(block) != 0: #判断是否为空

blocks.append(block)

block = {} #重新初始化block

block["type"] = line[1:-1].rstrip()

else:

key, value = line.split('=')

block[key.rstrip()] = value.lstrip()

blocks.append(block)

print(blocks)

return blocksblocks 是以字典的形式保存信息,输出为:

{'type': 'convolutional', 'batch_normalize': '1', 'filters': '32', 'size': '3', 'stride': '1', 'pad': '1', 'activation': 'leaky'}, {'type': 'convolutional', 'batch_normalize': '1', 'filters': '64', 'size': '3', 'stride': '2', 'pad': '1', 'activation': 'leaky'},

{'type': 'shortcut', 'from': '-3', 'activation': 'linear'},

{'type': 'route', 'layers': '-4'},

{'type': 'upsample', 'stride': '2'},

{'type': 'net', 'batch': '1', 'subdivisions': '1', 'width': '416', 'height': '416', 'channels': '3', 'momentum': '0.9', 'decay': '0.0005', 'angle': '0', 'saturation': '1.5', 'exposure': '1.5', 'hue': '.1', 'learning_rate': '0.001', 'burn_in': '1000', 'max_batches': '500200', 'policy': 'steps', 'steps': '400000,450000', 'scales': '.1,.1'},

{'type': 'yolo', 'mask': '0,1,2', 'anchors': '10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326', 'classes': '80', 'num': '9', 'jitter': '.3', 'ignore_thresh': '.5', 'truth_thresh': '1', 'random': '1'},

然后利用字典中的信息构建网络层:

def create_modules(blocks):

net_info = blocks[0]#存储网络信息

module_list = nn.ModuleList()

prev_fileters = 3 #初始化图像通道 但是卷积深度由上一层决定

output_filters =[]#追踪之前的层用来路由

#读取bloks中的元素

for index, x in enumerate(blocks[1:]):

module = nn.Sequential()

#读取convolutional块中的信息

if (x['type'] == 'convolutional'):

activation = x['activation']

try:

batch_normalize = int(x["batch_normalize"])

bias = False

except:

batch_normalize = 0

bias = True

filters = int(x["filters"]) #卷积核数量(维度)

padding = int(x["pad"]) #边框填补

kernel_size = int(x["size"]) #卷积核大小

stride = int(x["stride"]) #步长

if padding:

pad = (kernel_size - 1)//2

else:

pad = 0

###

conv = nn.Conv2d(prev_fileters,filters,kernel_size,

stride,pad,bias=bias)

module.add_module("conv_{0}".format(index),conv)

if batch_normalize:

bn = nn.BatchNorm2d(filters)

module.add_module("batch_norm_{0}".format(index),bn)

if activation == "leaky":

activn = nn.LeakyReLU(0.1,inplace=True)

module.add_module("leaky_{0}".format(index),activn)

###

#读取上采样块

elif (x["type"] == "upsample"):

stride = int(x["stride"])

upsample = nn.Upsample(scale_factor=2, mode="nearest")

module.add_module("upsample_{}".format(index),upsample)

#读取路由块

elif (x["type"] == "route"):

x["layers"] = x["layers"].split(',')

start = int(x["layers"][0])

try:

end = int(x["layers"][1])

except:

end = 0

if start > 0:

start = start - index

if end > 0:

end = end - index

route = EmptyLayer()

module.add_module("route_{0}".format(index),route)

if end < 0:

filters = output_filters[index + start] + output_filters[index + end]

else:

filters = output_filters[index + start]

#读取shortcut

elif x["type"] == "shortcut":

shortcut = EmptyLayer()

module.add_module("shortcut_{}".format(index),shortcut)

#读取yolo

elif x["type"] == "yolo":

mask = x["mask"].split(",")

mask = [int(x) for x in mask]

#读取anchor的尺寸

anchors = x["anchors"].split(",")

anchors = [int(a) for a in anchors]

anchors = [(anchors[i], anchors[i+1]) for i in range(0, len(anchors),2)]

anchors = [anchors[i] for i in mask]

detection = DetectionLayer(anchors)

module.add_module("Detection_{}".format(index),detection)

module_list.append(module)

prev_fileters = filters

output_filters.append(filters)

return (net_info, module_list)

blocks = parse_cfg("cfg/yolov3.cfg")

print(create_modules(blocks))输出:

其中,需在此之前定义两个继承nn.Module的类

class EmptyLayer(nn.Module):

def __init__(self):

super(EmptyLayer,self).__init__()

class DetectionLayer(nn.Module):

def __init__(self, anchors):

super(DetectionLayer, self).__init__()

self.anchors = anchors前向传播

下一节介绍 然后根据读取的网络信息 定义一个前向传播

首先定义一个nn.Module的类 Darknet,获取blocks 和 module_list

class Darknet(nn.Module):

def __init__(self, cfgfile):

super(Darknet, self).__init__()

self.blocks = parse_cfg(cfgfile)

self.net_info, self.module_list = create_modules(self.blocks)然后定义向前传播

def forward(self, x, CUDA=False):

modules = self.blocks[1:]

outputs = {}

'''

write flag 表示我们是否遇到第一个检测。如果 write 是 0,则收集器尚未初始化。

如果 write 是 1,则收集器已经初始化,我们只需要将检测图与收集器级联起来即可。

'''

write = 0

#按顺序读modules 不同的层执行不同的操作

for i, module in enumerate(modules):

module_type = (module["type"])

if module_type == "convolutional" or module_type == "upsample":

x = self.module_list[i](x)

elif module_type == "route":

layers = module["layers"]

layers = [int(a) for a in layers]

if (layers[0]) > 0:

layers[0] = layers[0] - i

if len(layers) == 1:

x = outputs[i + (layers[0])]

else:

if (layers[1]) > 0:

layers[1] = layers[1] - i

map1 = outputs[i + layers[0]]

map2 = outputs[i + layers[1]]

x = t.cat((map1, map2), 1) #两个张量(tensor)拼接在一起

elif module_type == "shortcut":

from_ = int(module["from"])

x = outputs[i-1] + outputs[i+from_]

#现在可以将三个不同尺度的检测图级联成一个大的张量

elif module_type == "yolo":

anchors = self.module_list[i][0].anchors

inp_dim = int (self.net_info["height"])

num_classer = int (module["classes"])

x = x.data

x = predict_transform(x, inp_dim, anchors,

num_classer,CUDA)

if not write:

detections = x

write = 1

else:

detections = t.cat((detections, x), 1)

outputs[i] = x

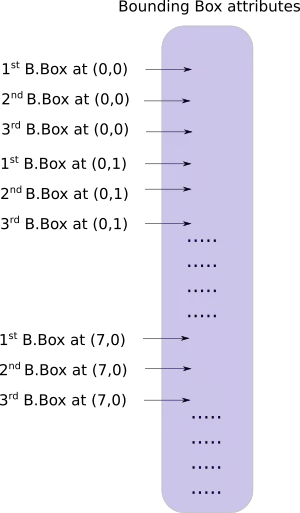

return detectionsYOLO 的输出是一个卷积特征图,包含沿特征图深度的边界框属性。边界框属性由彼此堆叠的单元格预测得出。因此,如果你需要在 (5,6) 处访问单元格的第二个边框,那么你需要通过 map[5,6, (5+C): 2*(5+C)] 将其编入索引。这种格式对于输出处理过程(例如通过目标置信度进行阈值处理、添加对中心的网格偏移、应用锚点等)很不方便。

另一个问题是由于检测是在三个尺度上进行的,预测图的维度将是不同的。虽然三个特征图的维度不同,但对它们执行的输出处理过程是相似的。如果能在单个张量而不是三个单独张量上执行这些运算,就太好了。

为了解决这些问题,我们引入了函数 predict_transform 在utils.py文件中

def predict_transform(prediction, inp_dim, anchors, num_classes, CUDA = True):

batch_size = prediction.size(0)

stride = inp_dim // prediction.size(2)

grid_size = inp_dim // stride

bbox_attrs = 5 + num_classes

num_anchors = len(anchors)

prediction = prediction.view(batch_size, bbox_attrs*num_anchors, grid_size*grid_size)

prediction = prediction.transpose(1,2).contiguous()

prediction = prediction.view(batch_size, grid_size*grid_size*num_anchors, bbox_attrs)

anchors = [(a[0]/stride, a[1]/stride) for a in anchors]

#Sigmoid the centre_X, centre_Y. and object confidencce

prediction[:,:,0] = torch.sigmoid(prediction[:,:,0])

prediction[:,:,1] = torch.sigmoid(prediction[:,:,1])

prediction[:,:,4] = torch.sigmoid(prediction[:,:,4])

#Add the center offsets

grid = np.arange(grid_size)

a,b = np.meshgrid(grid, grid)

x_offset = torch.FloatTensor(a).view(-1,1)

y_offset = torch.FloatTensor(b).view(-1,1)

if CUDA:

x_offset = x_offset.cuda()

y_offset = y_offset.cuda()

x_y_offset = torch.cat((x_offset, y_offset), 1).repeat(1,num_anchors).view(-1,2).unsqueeze(0)

prediction[:,:,:2] += x_y_offset

#log space transform height and the width

anchors = torch.FloatTensor(anchors)

if CUDA:

anchors = anchors.cuda()

anchors = anchors.repeat(grid_size*grid_size, 1).unsqueeze(0)

prediction[:,:,2:4] = torch.exp(prediction[:,:,2:4])*anchors

prediction[:,:,5: 5 + num_classes] = torch.sigmoid((prediction[:,:, 5 : 5 + num_classes]))

prediction[:,:,:4] *= stride

return predictionpredict_transform 函数把检测特征图转换成二维张量,张量的每一行对应边界框的属性,如下所示:

现在,在 darknet.py 文件的顶部定义以下函数

def get_test_input():

img = cv2.imread("dog-cycle-car.png")

img = cv2.resize(img, (416,416)) #Resize to the input dimension

img_ = img[:,:,::-1].transpose((2,0,1)) # BGR -> RGB | H X W C -> C X H X W

img_ = img_[np.newaxis,:,:,:]/255.0 #Add a channel at 0 (for batch) | Normalise

img_ = t.from_numpy(img_).float() #Convert to float

img_ = Variable(img_) # Convert to Variable

return img_测试代码

model = Darknet("cfg/yolov3.cfg")

inp = get_test_input()

pred = model(inp)

print (pred)输出

下载预训练权重

https://pjreddie.com/media/files/yolov3.weights

读取权重

def load_weights(self, weightfile):

fp = open(weightfile, "rb")

header = np.fromfile(fp, dtype = np.int32, count = 5)

self.header = t.from_numpy(header)

self.seen = self.header[3]

weights = np.fromfile(fp, dtype=np.float32)

ptr = 0

for i in range(len(self.module_list)):

module_type = self.blocks[i+1]["type"]

if module_type == "convolutional":

module = self.module_list[i]

try:

batch_normalize = int(self.blocks[i+1]["batch_normalize"])

except:

batch_normalize = 0

conv = module[0]

if (batch_normalize):

bn = module[1]

num_bn_biases = bn.bias.numel()

bn_biases = t.from_numpy(weights[ptr:ptr + num_bn_biases])

ptr += num_bn_biases

bn_weights = t.from_numpy(weights[ptr: ptr + num_bn_biases])

ptr += num_bn_biases

bn_running_mean = t.from_numpy(weights[ptr: ptr + num_bn_biases])

ptr += num_bn_biases

bn_running_var = t.from_numpy(weights[ptr: ptr + num_bn_biases])

ptr += num_bn_biases

#Cast the loaded weights into dims of model weights.

bn_biases = bn_biases.view_as(bn.bias.data)

bn_weights = bn_weights.view_as(bn.weight.data)

bn_running_mean = bn_running_mean.view_as(bn.running_mean)

bn_running_var = bn_running_var.view_as(bn.running_var)

#Copy the data to model

bn.bias.data.copy_(bn_biases)

bn.weight.data.copy_(bn_weights)

bn.running_mean.copy_(bn_running_mean)

bn.running_var.copy_(bn_running_var)

else:

#Number of biases

num_biases = conv.bias.numel()

#Load the weights

conv_biases = t.from_numpy(weights[ptr: ptr + num_biases])

ptr = ptr + num_biases

#reshape the loaded weights according to the dims of the model weights

conv_biases = conv_biases.view_as(conv.bias.data)

#Finally copy the data

conv.bias.data.copy_(conv_biases)

#Let us load the weights for the Convolutional layers

num_weights = conv.weight.numel()

#Do the same as above for weights

conv_weights = t.from_numpy(weights[ptr:ptr+num_weights])

ptr = ptr + num_weights

conv_weights = conv_weights.view_as(conv.weight.data)

conv.weight.data.copy_(conv_weights)测试:

model = Darknet("cfg/yolov3.cfg")

model.load_weights("yolov3.weights")

inp = get_test_input()

pred = model(inp)

print (pred)通过模型构建和权重加载,就可以开始进行目标检测了

下一章介绍如何利用 objectness 置信度阈值和非极大值抑制生成最终的检测结果。