A First Java Crawler: Scraping Zongheng's Free Novels with jsoup

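I had been studying Java EE, and last month I started a job in Shenzhen where I was hired to write Java crawlers. So I picked up jsoup and wrote a simple crawler of my own.

Since I enjoy reading novels, I chose Zongheng (纵横中文网) as the target.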

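I split the whole process into four steps:

1. Get the details of the novels listed on the current page

2. Move to the next page of the list

3. Get the content of the current chapter

4. Move to the next chapter and repeat step 3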

Getting the novel details on the current page and moving to the next list page

Code first, starting with the Book entity:

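public class Book {
    private String name;     // book title
    private String author;   // author
    private String classify; // category
    private String url;      // URL of the book's page
    private String path;     // root directory to save into

    // Constructor and getters as used by ZhongHeng and BookCrawl below
    public Book(String name, String author, String classify, String url, String path) {
        this.name = name;
        this.author = author;
        this.classify = classify;
        this.url = url;
        this.path = path;
    }

    public String getName() { return name; }
    public String getClassify() { return classify; }
    public String getUrl() { return url; }
    public String getPath() { return path; }
}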

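Open the first page of Zongheng's free, completed novels. Clicking through to the second page shows that only the URL changes,

from http://book.zongheng.com/store/c0/c0/b0/u0/p1/v0/s1/t0/u0/i1/ALL.html

to http://book.zongheng.com/store/c0/c0/b0/u0/p2/v0/s1/t0/u0/i1/ALL.html

so I replaced the page number with a placeholder:

http://book.zongheng.com/store/c0/c0/b0/u0/p<number>/v0/s1/t0/u0/i1/ALL.html

The maximum page count is then read from the pagination component of the crawled page, and the crawler iterates over the page numbers to fetch every page.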
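The placeholder idea can be tried without jsoup at all. This is only a minimal sketch: the class name `ListUrls` and its methods `forPage` and `upTo` are made up for illustration, and only the URL template comes from the post. It uses `String.replace`, which substitutes a literal string, rather than `replaceAll`, which treats its first argument as a regular expression (a pattern such as `"[number]"` would be read as a character class).

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper, not part of the original crawler.
class ListUrls {
    // URL template from the post; <number> marks the page number.
    static final String TEMPLATE =
            "http://book.zongheng.com/store/c0/c0/b0/u0/p<number>/v0/s1/t0/u0/i1/ALL.html";

    // Builds the list URL for one page by literal substitution.
    static String forPage(int page) {
        return TEMPLATE.replace("<number>", String.valueOf(page));
    }

    // Builds the URLs for pages 1..maxPage; in the crawler, maxPage would be
    // read from the pagination component of the first page.
    static List<String> upTo(int maxPage) {
        List<String> urls = new ArrayList<>();
        for (int page = 1; page <= maxPage; page++) {
            urls.add(forPage(page));
        }
        return urls;
    }

    public static void main(String[] args) {
        for (String url : upTo(3)) {
            System.out.println(url);
        }
    }
}
```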

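Before moving to the next page, the crawler scrapes the novels on the current page. For each one I take the link behind the title, plus the title, author and category, store them in a Book entity, and put it into a BlockingQueue. Multiple threads are then started to crawl the individual novels.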

import org.jsoup.Connection;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;
import java.io.IOException;
import java.util.Iterator;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingDeque;

public class ZhongHeng {

    // List URL template; <number> is replaced by the page number
    private final String listUrl = "http://book.zongheng.com/store/c0/c0/b0/u0/p<number>/v0/s1/t0/u0/i1/ALL.html";
    // Books waiting to be crawled, shared with the worker threads
    private BlockingQueue<Book> books = new LinkedBlockingDeque<>();
    private String path; // root directory to save into
    private int num;     // maximum number of list pages to crawl (0 = no limit)

    // Crawls one list page, queues its books, then moves on to the next page.
    // Pass total = -1 on the first call so the page count is read from the page.
    private void getList(int page, int total) throws IOException {
        String url = listUrl.replace("<number>", String.valueOf(page));
        Connection con = Jsoup.connect(url);
        Document dom = con.header("Accept", "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8")
                .header("Accept-Encoding", "gzip, deflate")
                .header("Accept-Language", "zh-CN,zh;q=0.9")
                .header("Cache-Control", "max-age=0")
                .header("Connection", "keep-alive")
                .header("Upgrade-Insecure-Requests", "1")
                .header("User-Agent", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.110 Safari/537.36")
                .get();

        for (Element element : dom.getElementsByClass("bookbox")) {
            // Title and URL
            Elements bookNameA = element.select(".bookname").select("a");
            String bookUrl = bookNameA.attr("href");
            String bookName = bookNameA.text();
            // Author and category
            Elements bookilnkA = element.select(".bookilnk").select("a");
            String author = bookilnkA.get(0).text();
            String classify = bookilnkA.get(1).text();
            books.add(new Book(bookName, author, classify, bookUrl, path));
        }

        if (total == -1) {
            // Read the total page count from the pagination component
            String totalStr = dom.getElementsByClass("pagenumber").attr("count");
            total = Integer.parseInt(totalStr);
            if (num != 0 && total > num) {
                total = num;
            }
        }

        if (page >= total) {
            // Last page reached: start crawling the queued books
            getBook();
            return;
        }
        getList(page + 1, total);
    }

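    // Starts 10 crawlers (c1 to c10 in the original) that all read from the shared queue
    private void getBook() {
        ExecutorService service = Executors.newCachedThreadPool();
        for (int i = 0; i < 10; i++) {
            service.execute(new BookCrawl(books));
        }
        service.shutdown(); // accept no new tasks; running crawlers finish normally
    }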

    public int getNum() {
        return num;
    }

    public void setNum(int num) {
        this.num = num;
    }

    public String getPath() {
        return path;
    }

    public void setPath(String path) {
        this.path = path;
    }

    public static void main(String[] args) {
        ZhongHeng z = new ZhongHeng();
        z.setNum(100); // number of list pages to crawl
        z.setPath("D:\\纵横中文网爬取小说"); // save location
        try {
            z.getList(1, -1);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
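The hand-off through the queue can also be tried in isolation. The following is a rough sketch rather than the crawler's actual code: `QueueDemo` and `drain` are made-up names, and plain strings stand in for Book objects. Each worker keeps calling `poll()` until the queue is empty, so a fixed number of threads can work through any number of queued books.

```java
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class; strings stand in for Book objects.
class QueueDemo {
    // Starts `workers` threads that each keep taking items until the queue is empty.
    // Returns the items that were processed (order depends on thread scheduling).
    static List<String> drain(BlockingQueue<String> queue, int workers) {
        List<String> processed = new CopyOnWriteArrayList<>();
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        for (int i = 0; i < workers; i++) {
            pool.execute(() -> {
                String title;
                // poll() returns null instead of blocking once the queue is empty
                while ((title = queue.poll()) != null) {
                    processed.add(title); // a real worker would crawl the book here
                }
            });
        }
        pool.shutdown(); // no new tasks; already submitted workers keep running
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES); // wait for the workers to finish
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return processed;
    }

    public static void main(String[] args) {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        for (int i = 1; i <= 25; i++) {
            queue.add("book-" + i);
        }
        System.out.println(drain(queue, 10).size() + " books processed");
    }
}
```

Using `poll()` instead of an `isEmpty()` check followed by `take()` avoids a worker blocking forever when another thread grabs the last item in between.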

Getting the content of the current chapter

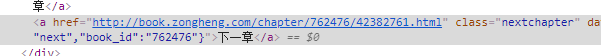

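As the screenshot shows, this is the page behind the URL obtained from the list earlier. Clicking "Start reading" leads to the novel's first chapter.

Inside the first chapter, the chapter title turns out to sit in a div with class=title_txtbox and the content in a div with class=content, with p tags marking the line breaks. We fetch both, iterate over the p tags inside content, append each one to a StringBuilder, and add a line separator after every line.

Once the chapter content has been collected, it is written to a text file.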
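The text-assembly step on its own looks roughly like this. It is a sketch under assumptions: `ChapterText` and `format` are hypothetical names, and the title and paragraphs are passed in as plain strings, where the crawler would take them from the title_txtbox div and the p tags.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper; in the crawler the paragraphs come from the <p> tags
// inside the content div.
class ChapterText {
    // Title, a blank line, then one paragraph per line.
    static String format(String title, List<String> paragraphs) {
        String nl = System.lineSeparator();
        StringBuilder builder = new StringBuilder();
        builder.append(title).append(nl).append(nl);
        for (String p : paragraphs) {
            builder.append(p).append(nl);
        }
        return builder.toString();
    }

    public static void main(String[] args) {
        System.out.print(format("Chapter 1",
                Arrays.asList("First paragraph.", "Second paragraph.")));
    }
}
```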

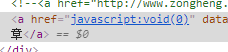

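Moving to the next chapter and repeating the fetch step

The "next chapter" button gives the URL of the next chapter, and the crawler recurses into it.

When the next chapter's href is javascript:void(0), the last chapter has been reached. The crawler then leaves this novel and takes another one from the queue.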
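The crawler here uses recursion. Since every chapter adds a stack frame, a very long novel could in principle exhaust the stack, so a plain loop is a possible alternative. The sketch below shows that variant, not the post's method: `ChapterWalker`, `walk` and the `nextLinkOf` function are hypothetical, and a small map stands in for fetching pages.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical sketch: a loop in place of the recursive call.
class ChapterWalker {
    // href of the next-chapter button on the last chapter
    static final String END = "javascript:void(0)";

    // Visits chapters starting at firstUrl. nextLinkOf stands in for
    // "fetch the page and read the next-chapter href".
    static List<String> walk(String firstUrl, Function<String, String> nextLinkOf) {
        List<String> visited = new ArrayList<>();
        String url = firstUrl;
        // Also stop on a missing or empty link so the loop cannot run on a bad URL
        while (url != null && !url.isEmpty() && !END.equals(url)) {
            visited.add(url); // a real crawler would save the chapter here
            url = nextLinkOf.apply(url);
        }
        return visited;
    }

    public static void main(String[] args) {
        // Made-up three-chapter novel
        Map<String, String> next = new HashMap<>();
        next.put("ch1", "ch2");
        next.put("ch2", "ch3");
        next.put("ch3", END);
        System.out.println(walk("ch1", next::get));
    }
}
```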

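Each novel is saved under the configured path plus its category. If a file for the same novel already exists, crawling of that novel is skipped.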
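The storage rule can be sketched with java.nio.file. The class `NovelFiles` and its methods `target` and `prepare` are assumptions made for illustration; the crawler itself uses java.io.File.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper for the storage rule.
class NovelFiles {
    // <root>/<category>/<title>.txt
    static Path target(String root, String category, String title) {
        return Paths.get(root, category, title + ".txt");
    }

    // Creates the file and its category directory if the novel is new.
    // Returns false when the file already exists, so the caller can skip the novel.
    static boolean prepare(Path file) {
        if (Files.exists(file)) {
            return false;
        }
        try {
            Files.createDirectories(file.getParent());
            Files.createFile(file);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return true;
    }

    public static void main(String[] args) {
        // A throwaway directory under the system temp dir, for the demo only
        String root = Paths.get(System.getProperty("java.io.tmpdir"),
                "novels-" + System.nanoTime()).toString();
        Path file = target(root, "fantasy", "demo");
        System.out.println(prepare(file)); // first call creates the file
        System.out.println(prepare(file)); // second call finds it and skips
    }
}
```

Building the path from segments avoids hard-coding the "\\" separator, which only works on Windows.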

import org.jsoup.Connection;
import org.jsoup.helper.StringUtil;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;
import java.io.BufferedWriter;
import java.io.File;
import java.io.FileWriter;
import java.io.IOException;
import java.util.HashMap;
import java.util.Iterator;
import java.util.Map;
import java.util.concurrent.BlockingQueue;

public class BookCrawl implements Runnable {

    private String firstUrl;
    private BlockingQueue<Book> books;
    private Map<String, String> cookieMap = new HashMap<>();
    private String filePath;

    public BookCrawl(BlockingQueue<Book> books) {
        this.books = books;
    }

    // Takes one book from the queue and crawls it
    public void controller() {
        // poll() returns null instead of blocking if another thread emptied the queue first
        Book book = books.poll();
        if (book == null) {
            return;
        }
        if (StringUtil.isBlank(book.getPath())) {
            System.out.println("Save path must not be empty");
            return;
        }
        if (StringUtil.isBlank(book.getUrl())) {
            System.out.println("Novel URL must not be empty");
            return;
        }
        // Saved as <path>/<category>/<title>.txt
        File dir = new File(book.getPath(), book.getClassify());
        File file = new File(dir, book.getName() + ".txt");
        this.filePath = file.getPath();
        try {
            if (file.exists()) {
                System.out.println("---- " + filePath + " already exists ----");
                return;
            }
            dir.mkdirs();
            file.createNewFile();
            index(book.getUrl());
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Novel's main page: collects cookies and finds the link to the first chapter
    private void index(String url) throws IOException {
        System.out.println("---- Entering the novel's main page ----");
        Connection con = org.jsoup.Jsoup.connect(url);
        Connection.Response response = con.header("Upgrade-Insecure-Requests", "1")
                .header("User-Agent", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.110 Safari/537.36")
                .header("Accept", "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8")
                .header("Accept-Language", "zh-CN,zh;q=0.9")
                .method(Connection.Method.GET)
                .execute();
        cookieMap.putAll(response.cookies());
        firstUrl = response.parse().getElementsByClass("read-btn").attr("href");
        System.out.println("---- Starting to crawl the novel ----");
        crawl(firstUrl);
    }

    // Crawls one chapter, appends it to the file, then recurses into the next chapter
    private void crawl(String url) throws IOException {
        Connection con = org.jsoup.Jsoup.connect(url);
        Connection.Response response = con.header("Upgrade-Insecure-Requests", "1")
                .header("User-Agent", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.110 Safari/537.36")
                .header("Accept", "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8")
                .header("Accept-Language", "zh-CN,zh;q=0.9")
                .header("Accept-Encoding", "gzip,deflate")
                .cookies(cookieMap)
                .method(Connection.Method.GET)
                .execute();
        if (response.statusCode() != 200) {
            throw new RuntimeException("Request failed");
        }
        cookieMap.putAll(response.cookies());

        String nl = System.lineSeparator();
        StringBuilder builder = new StringBuilder();
        Document dom = response.parse();
        // Chapter title followed by a blank line; text() keeps entities unescaped in the saved file
        String title = dom.getElementsByClass("title_txtbox").text();
        builder.append(title).append(nl).append(nl);
        // One line per <p> inside the content div
        for (Element p : dom.getElementsByClass("content").select("p")) {
            builder.append(p.text()).append(nl);
        }
        save(builder.toString(), title);

        String nextUrl = dom.getElementsByClass("nextchapter").attr("href");
        // On the last chapter the next-chapter link is javascript:void(0)
        if ("javascript:void(0)".equals(nextUrl)) {
            System.out.println("---- Novel finished ----");
            return;
        }
        crawl(nextUrl);
    }

    // Appends one chapter to the novel's file
    private void save(String text, String title) throws IOException {
        // try-with-resources closes the writer (and the FileWriter it wraps) even on failure
        try (BufferedWriter bw = new BufferedWriter(new FileWriter(filePath, true))) {
            bw.append(text);
        }
    }

    public String getFirstUrl() {
        return firstUrl;
    }

    public void setFirstUrl(String firstUrl) {
        this.firstUrl = firstUrl;
    }

    public String getFilePath() {
        return filePath;
    }

    public void setFilePath(String filePath) {
        this.filePath = filePath;
    }

    @Override
    public void run() {
        // Keep taking novels until the shared queue is empty,
        // so each thread crawls more than one book
        while (!books.isEmpty()) {
            controller();
        }
    }
}

//GET / HTTP/1.1

// Host: www.zongheng.com

// Connection: keep-alive

// Cache-Control: max-age=0

// Upgrade-Insecure-Requests: 1

// User-Agent: Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3538.110 Safari/537.36

// Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8

/*

Accept-Encoding: gzip, deflate

Accept-Language: zh-CN,zh;q=0.9

Cookie: ZHID=5462A809F0C59C25793CD34E1E890C5B; ver=2018; zh_visitTime=1546409556362; zhffr=www.baidu.com; v_user=https%3A%2F%2Fwww.baidu.com%2Flink%3Furl%3D-vpjW5-L5AhYC60PBUteUaj2YUi8kPMl6GZfG-EdFpkSKP-sDZqFtF_OvJPKmQT1%26wd%3D%26eqid%3Dac37315a00017d8e000000055c2c564e%7Chttp%3A%2F%2Fwww.zongheng.com%2F%7C2933297; UM_distinctid=1680d3139de16-0c7dbc28aefbb9-424e0b28-1fa400-1680d3139df819; CNZZDATA30037065=cnzz_eid%3D1886041116-1546408366-null%26ntime%3D1546408366; Hm_lvt_c202865d524849216eea846069349eb9=1546409557; Hm_lpvt_c202865d524849216eea846069349eb9=1546409557

*/

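After running it, I tried to crawl all the novels on 17 pages and was flagged for abnormal access and asked to enter a CAPTCHA. That did not happen earlier when I crawled only 2 pages, so I have run into an anti-crawling mechanism. Next I want to see whether the CAPTCHA can be recognized automatically. I have only just been given access to some CAPTCHA-recognition code, though, and after reading it I need to check whether sharing it would touch on company confidentiality. If it does not, I will post an update with the CAPTCHA code. Hopefully solving the CAPTCHA will not just be followed by an IP-based block. This is my first crawler, so suggestions for improvement are very welcome.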

Full code: javaJsoup初试爬取纵横中文网