Copyright notice: this is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/casgj16/article/details/82904592

MNIST handwritten digit recognition with a neural network, step by step:

1. Forward propagation module

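First, abstract the forward propagation process into a module that training and testing can share, named mnist_inference_5_5.py. This has two benefits. It keeps forward propagation identical during training and testing, which also improves code reuse. It also combines more cleanly with the moving average model and with model persistence, so a new model can be checked more flexibly.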

mnist_inference_5_5.py:

# -*- coding:utf-8 -*-
import tensorflow as tf

# Network structure: 784 input pixels, one hidden layer of 500 units, 10 classes.
INPUT_NODE = 784
OUTPUT_NODE = 10
LAYER1_NODE = 500


def get_weight_variable(shape, regularizer):
    # Create the "weights" variable in the current variable scope.
    weights = tf.get_variable(
        "weights", shape,
        initializer=tf.truncated_normal_initializer(stddev=0.1)
    )
    # When a regularizer is given, add its penalty to the 'losses' collection.
    if regularizer is not None:
        tf.add_to_collection('losses', regularizer(weights))
    return weights


def inference(input_tensor, regularizer):
    # Layer 1: fully connected layer with ReLU activation.
    with tf.variable_scope('layer1'):
        weights = get_weight_variable(
            [INPUT_NODE, LAYER1_NODE], regularizer
        )
        biases = tf.get_variable(
            "biases", [LAYER1_NODE],
            initializer=tf.constant_initializer(0.0)
        )
        layer1 = tf.nn.relu(tf.matmul(input_tensor, weights) + biases)

    # Layer 2: fully connected layer that returns raw logits (no softmax).
    with tf.variable_scope('layer2'):
        weights = get_weight_variable(
            [LAYER1_NODE, OUTPUT_NODE], regularizer
        )
        biases = tf.get_variable(
            "biases", [OUTPUT_NODE], initializer=tf.constant_initializer(0.0)
        )
        layer2 = tf.matmul(layer1, weights) + biases

    return layer2
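To make the arithmetic inside inference concrete, here is a minimal pure-Python sketch of the same two-layer computation, relu(x·W1 + b1)·W2 + b2, together with the L2 penalty that a regularizer would add to the 'losses' collection. The toy layer sizes, the helper names (relu, dense, l2_penalty, toy_inference) and the plain Gaussian initialization are assumptions made for illustration; the real module uses 784, 500 and 10 nodes and a truncated normal initializer.

```python
import random


def relu(values):
    """Element-wise max(0, v), the same operation tf.nn.relu applies."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Compute inputs @ weights + biases for a single example (a row vector)."""
    return [
        sum(x * weights[i][j] for i, x in enumerate(inputs)) + biases[j]
        for j in range(len(biases))
    ]


def l2_penalty(weights, rate):
    """rate * sum(w^2) / 2, the value an L2 regularizer yields for one matrix."""
    return rate * sum(w * w for row in weights for w in row) / 2.0


# Toy sizes, assumed for illustration only.
TOY_INPUT, TOY_HIDDEN, TOY_OUTPUT = 4, 3, 2

rng = random.Random(0)
# Plain Gaussian with stddev 0.1 (not truncated, unlike the TensorFlow initializer).
w1 = [[rng.gauss(0.0, 0.1) for _ in range(TOY_HIDDEN)] for _ in range(TOY_INPUT)]
b1 = [0.0] * TOY_HIDDEN
w2 = [[rng.gauss(0.0, 0.1) for _ in range(TOY_OUTPUT)] for _ in range(TOY_HIDDEN)]
b2 = [0.0] * TOY_OUTPUT


def toy_inference(x):
    """Hidden layer with ReLU, then a linear output layer returning logits."""
    layer1 = relu(dense(x, w1, b1))
    return dense(layer1, w2, b2)


logits = toy_inference([0.5, 0.0, 1.0, 0.25])
penalty = l2_penalty(w1, 0.0001) + l2_penalty(w2, 0.0001)
print("logits:", logits)
print("L2 penalty:", penalty)
```

The output layer deliberately returns logits rather than probabilities, because the softmax is applied later inside the cross-entropy loss.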

2. Training module

Next, extract the model training code into its own module, named mnist_train_5_5.py. In the code below, the model is saved once every 1000 steps.

mnist_train_5_5.py:

import os

import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

import mnist_inference_5_5

# Training hyperparameters.
BATCH_SIZE = 100
LEARNING_RATE_BASE = 0.8
LEARNING_RATE_DECAY = 0.99
REGULARAZTION_RATE = 0.0001
TRAINING_STEPS = 6000
MOVING_AVERAGE_DECAY = 0.99

# Directory and file name used for saved checkpoints.
MODEL_SAVE_PATH = "../Teansorflow_exam1/model/"
MODEL_NAME = "model.ckpt"


def train(mnist):
    x = tf.placeholder(
        tf.float32, [None, mnist_inference_5_5.INPUT_NODE], name='x-input'
    )
    y_ = tf.placeholder(
        tf.float32, [None, mnist_inference_5_5.OUTPUT_NODE], name='y-input'
    )
    regularizer = tf.contrib.layers.l2_regularizer(REGULARAZTION_RATE)
    y = mnist_inference_5_5.inference(x, regularizer)
    global_step = tf.Variable(0, trainable=False)

    # Define the moving averages, loss function, learning rate and training op.
    variable_average = tf.train.ExponentialMovingAverage(
        MOVING_AVERAGE_DECAY, global_step
    )
    variable_average_op = variable_average.apply(
        tf.trainable_variables()
    )
    # TensorFlow 1.x requires keyword arguments for this function.
    cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(
        logits=y, labels=tf.argmax(y_, 1)
    )
    cross_entropy_mean = tf.reduce_mean(cross_entropy)
    loss = cross_entropy_mean + tf.add_n(tf.get_collection('losses'))
    learning_rate = tf.train.exponential_decay(
        LEARNING_RATE_BASE,
        global_step,
        mnist.train.num_examples / BATCH_SIZE,
        LEARNING_RATE_DECAY
    )
    train_step = tf.train.GradientDescentOptimizer(learning_rate) \
        .minimize(loss, global_step=global_step)
    # Running train_op updates the weights and their moving averages together.
    with tf.control_dependencies([train_step, variable_average_op]):
        train_op = tf.no_op(name="train")

    # Initialize the TensorFlow persistence class.
    saver = tf.train.Saver()
    with tf.Session() as sess:
        # Replaces the deprecated tf.initialize_all_variables().
        tf.global_variables_initializer().run()
        for i in range(TRAINING_STEPS):
            xs, ys = mnist.train.next_batch(BATCH_SIZE)
            _, loss_value, step = sess.run([train_op, loss, global_step],
                                           feed_dict={x: xs, y_: ys})
            # Every 1000 steps, report the batch loss and save a checkpoint.
            if i % 1000 == 0:
                print("After %d training steps, loss on training"
                      " batch is %g" % (step, loss_value))
                saver.save(
                    sess, os.path.join(MODEL_SAVE_PATH, MODEL_NAME),
                    global_step=global_step
                )


# Guard the entry point so that importing this module (as mnist_eval_5_5.py
# does) does not start a training run.
if __name__ == '__main__':
    mnist = input_data.read_data_sets("/tmp/data", one_hot=True)
    train(mnist)
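The learning rate in the training module is not constant: tf.train.exponential_decay, in its default non-staircase mode, computes base_rate * decay_rate ** (global_step / decay_steps). The sketch below reproduces that formula in plain Python with the constants from the training module. The figure of 55000 training examples is an assumption (it is the default split produced by read_data_sets), and decayed_learning_rate is a hypothetical helper name.

```python
def decayed_learning_rate(base_rate, global_step, decay_steps, decay_rate):
    """Non-staircase exponential decay of the learning rate."""
    return base_rate * decay_rate ** (global_step / decay_steps)


LEARNING_RATE_BASE = 0.8
LEARNING_RATE_DECAY = 0.99
BATCH_SIZE = 100
NUM_TRAIN_EXAMPLES = 55000  # assumed: default training split of read_data_sets

# One pass over the training set takes this many steps.
decay_steps = float(NUM_TRAIN_EXAMPLES) / BATCH_SIZE

for step in (0, 550, 1100, 6000):
    rate = decayed_learning_rate(
        LEARNING_RATE_BASE, step, decay_steps, LEARNING_RATE_DECAY
    )
    print("step %4d: learning rate %.5f" % (step, rate))
```

Under these assumptions the rate is multiplied by 0.99 once per epoch (every 550 steps), so it falls only slightly over the 6000 training steps.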

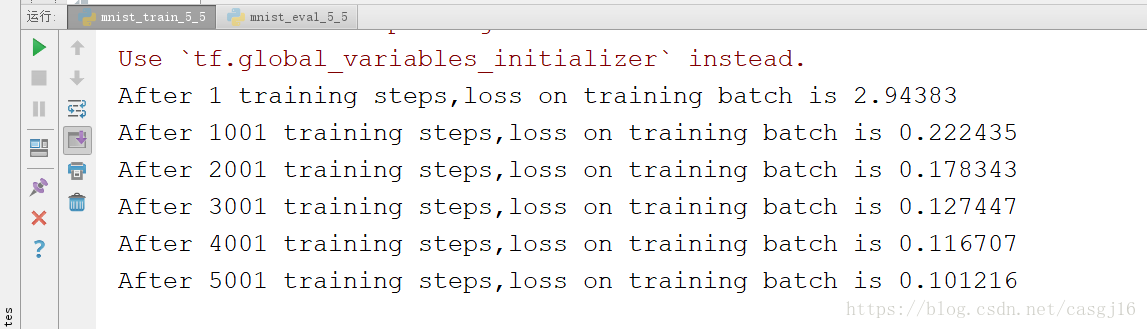

Result:

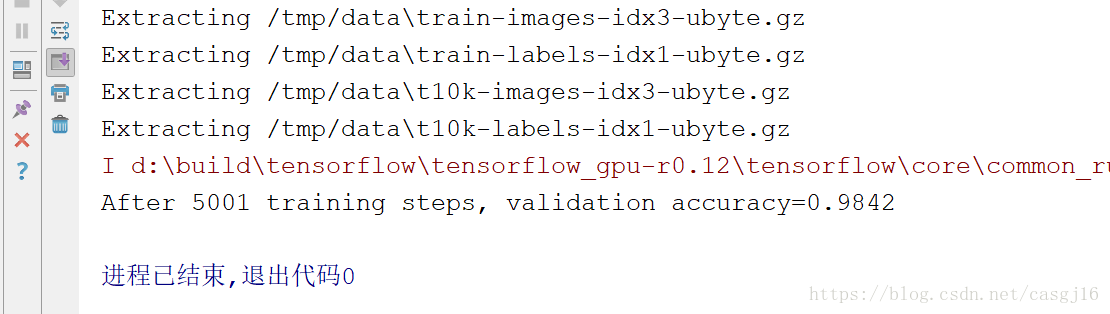
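The training module also keeps an exponential moving average (a "shadow" copy) of every trainable variable. Each update sets shadow = decay * shadow + (1 - decay) * variable, and because a step counter is passed to ExponentialMovingAverage, the decay actually used is min(decay, (1 + num_updates) / (10 + num_updates)), which lets the shadow follow the variable quickly early in training. The following is a small sketch of that rule on a single scalar; the trajectory of values and the helper names are made up for illustration.

```python
def effective_decay(decay, num_updates):
    """Decay actually applied when a step counter is supplied to the averager."""
    return min(decay, (1.0 + num_updates) / (10.0 + num_updates))


def update_shadow(shadow, value, decay):
    """One update: the shadow moves a fraction (1 - decay) toward the value."""
    return decay * shadow + (1.0 - decay) * value


MOVING_AVERAGE_DECAY = 0.99

# Toy trajectory of one scalar "variable" (values assumed for illustration).
variable_values = [0.0, 5.0, 8.0, 9.0, 9.5]

shadow = variable_values[0]  # the shadow starts at the variable's initial value
for step, value in enumerate(variable_values):
    decay = effective_decay(MOVING_AVERAGE_DECAY, step)
    shadow = update_shadow(shadow, value, decay)
    print("step %d: decay=%.4f variable=%.2f shadow=%.4f"
          % (step, decay, value, shadow))
```

With a counter of 0 the effective decay is 0.1, so the shadow tracks the variable closely. Once the counter is large enough that (1 + n) / (10 + n) exceeds 0.99, the configured decay of 0.99 takes over.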

3. Validation and test module

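The validation and test module loads a saved model and validates or tests it. The code below is built around checking the latest saved model at a 1-second interval (EVAL_INTERVAL_SECS); as listed, it evaluates the latest checkpoint once, and the periodic sleep is commented out. The benefit of this design is that training is separated from validation and testing, so they can run at the same time. This module is named mnist_eval_5_5.py.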
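Two details of the evaluation script are easier to follow in isolation. It recovers the training step from the checkpoint file name, since the saver appends "-<global_step>" to the name. It also restores each variable from its moving-average copy, which the checkpoint stores under the variable name plus "/ExponentialMovingAverage". The sketch below imitates both with plain strings. The example checkpoint path is hypothetical, and the helper names are assumptions for illustration, not part of TensorFlow.

```python
EMA_SUFFIX = "/ExponentialMovingAverage"


def step_from_checkpoint_path(path):
    """Return the text after the last '-' in the checkpoint file name."""
    return path.split('/')[-1].split('-')[-1]


def shadow_name(variable_name):
    """Name under which the moving average of a variable is saved."""
    return variable_name + EMA_SUFFIX


# Hypothetical checkpoint path, for illustration only.
example_path = "../Teansorflow_exam1/model/model.ckpt-5001"

# Variables created by the two variable scopes of the forward propagation module.
graph_variables = [
    "layer1/weights", "layer1/biases",
    "layer2/weights", "layer2/biases",
]

# Mapping in the spirit of variables_to_restore(): saved name -> graph variable.
restore_map = {shadow_name(name): name for name in graph_variables}

print(step_from_checkpoint_path(example_path))  # prints 5001
for saved_name, graph_name in sorted(restore_map.items()):
    print(saved_name, "->", graph_name)
```

Restoring through such a mapping means the evaluation graph never needs to call the moving-average op itself: the ordinary variables are simply filled with the averaged values.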

mnist_eval_5_5.py:

import time

import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

import mnist_inference_5_5
import mnist_train_5_5

# Interval, in seconds, between evaluations when run periodically.
EVAL_INTERVAL_SECS = 1


def evaluate(mnist):
    with tf.Graph().as_default() as g:
        x = tf.placeholder(
            tf.float32, [None, mnist_inference_5_5.INPUT_NODE], name='x-input'
        )
        y_ = tf.placeholder(
            tf.float32, [None, mnist_inference_5_5.OUTPUT_NODE], name='y-input'
        )
        validate_feed = {x: mnist.validation.images,
                         y_: mnist.validation.labels}

        # No regularizer is needed at evaluation time.
        y = mnist_inference_5_5.inference(x, None)
        correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

        # Restore each variable from its moving-average (shadow) value.
        variable_averages = tf.train.ExponentialMovingAverage(
            mnist_train_5_5.MOVING_AVERAGE_DECAY
        )
        variables_to_restore = variable_averages.variables_to_restore()
        saver = tf.train.Saver(variables_to_restore)

        # Evaluates the latest checkpoint once. To re-evaluate periodically,
        # change this to `while True:` and enable the time.sleep call below.
        if True:
            with tf.Session() as sess:
                ckpt = tf.train.get_checkpoint_state(
                    mnist_train_5_5.MODEL_SAVE_PATH
                )
                if ckpt and ckpt.model_checkpoint_path:
                    saver.restore(sess, ckpt.model_checkpoint_path)
                    # model_checkpoint_path is the path of the latest
                    # checkpoint; the step count is its file-name suffix.
                    global_step = ckpt.model_checkpoint_path \
                        .split('/')[-1].split('-')[-1]
                    accuracy_score = sess.run(accuracy, feed_dict=validate_feed)
                    print("After %s training steps, validation"
                          " accuracy=%g" % (global_step, accuracy_score))
                else:
                    print("No checkpoint file found")
                    return
            # time.sleep(EVAL_INTERVAL_SECS)


if __name__ == '__main__':
    mnist = input_data.read_data_sets("/tmp/data", one_hot=True)
    evaluate(mnist)

Result: