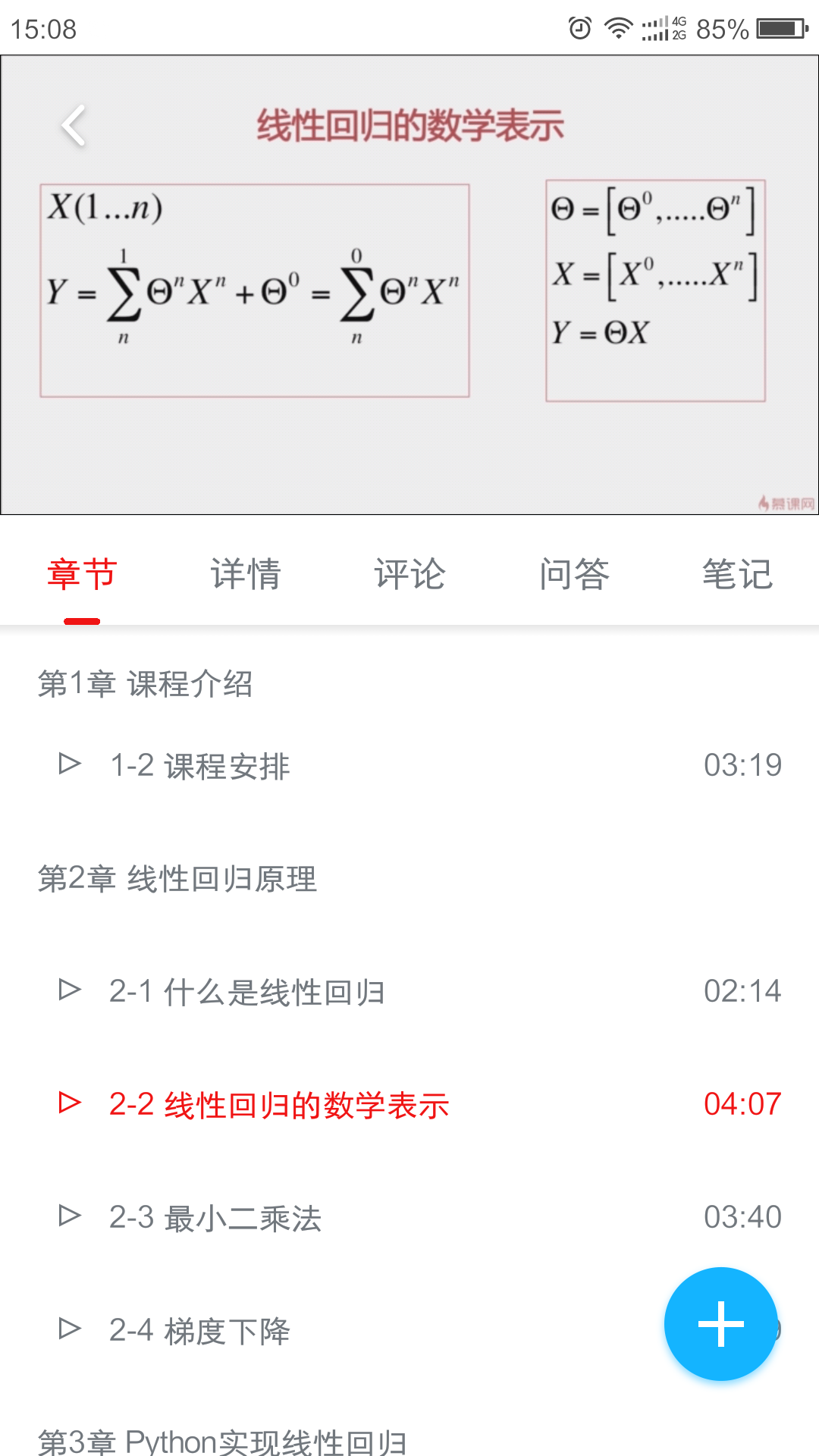

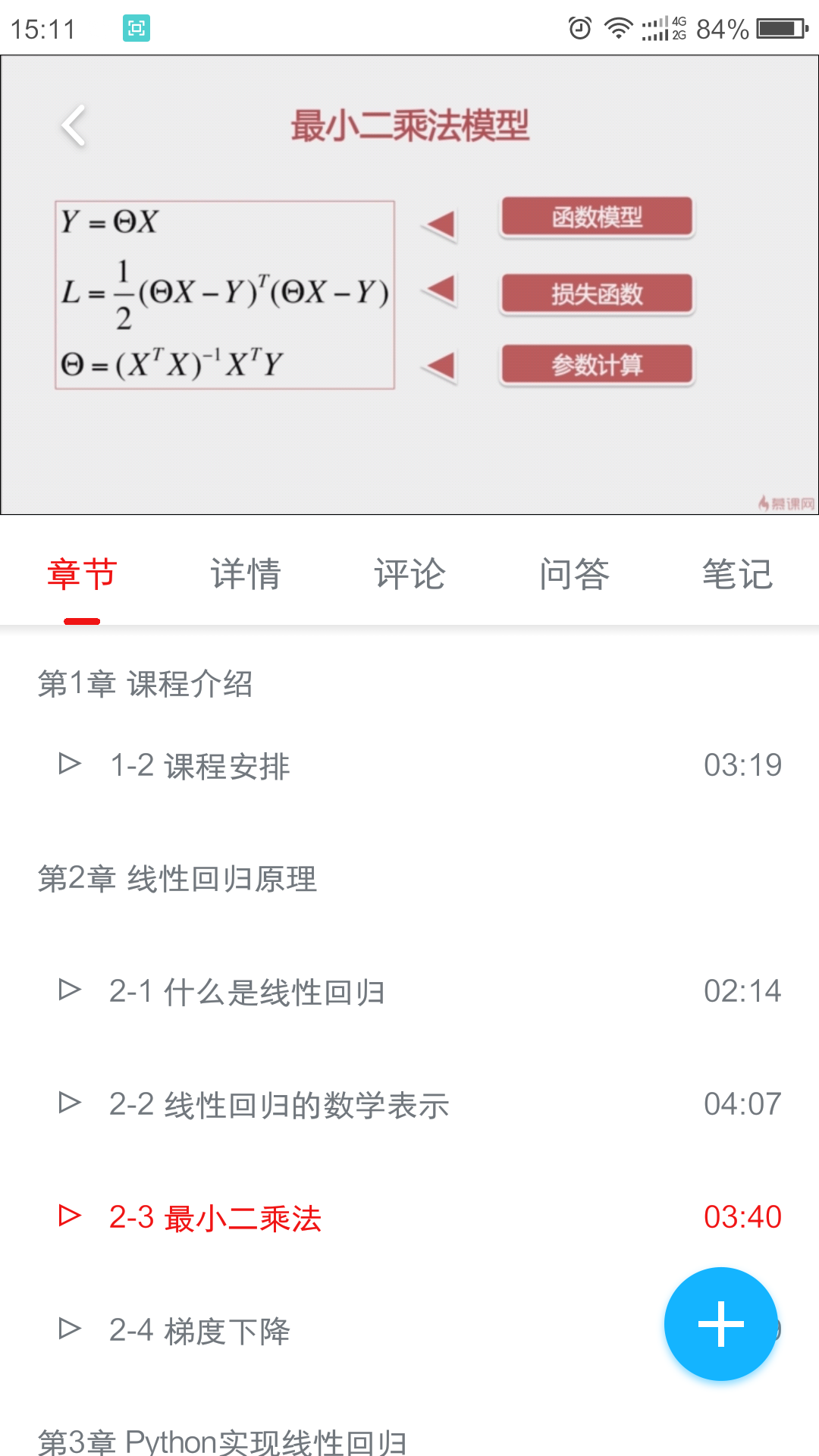

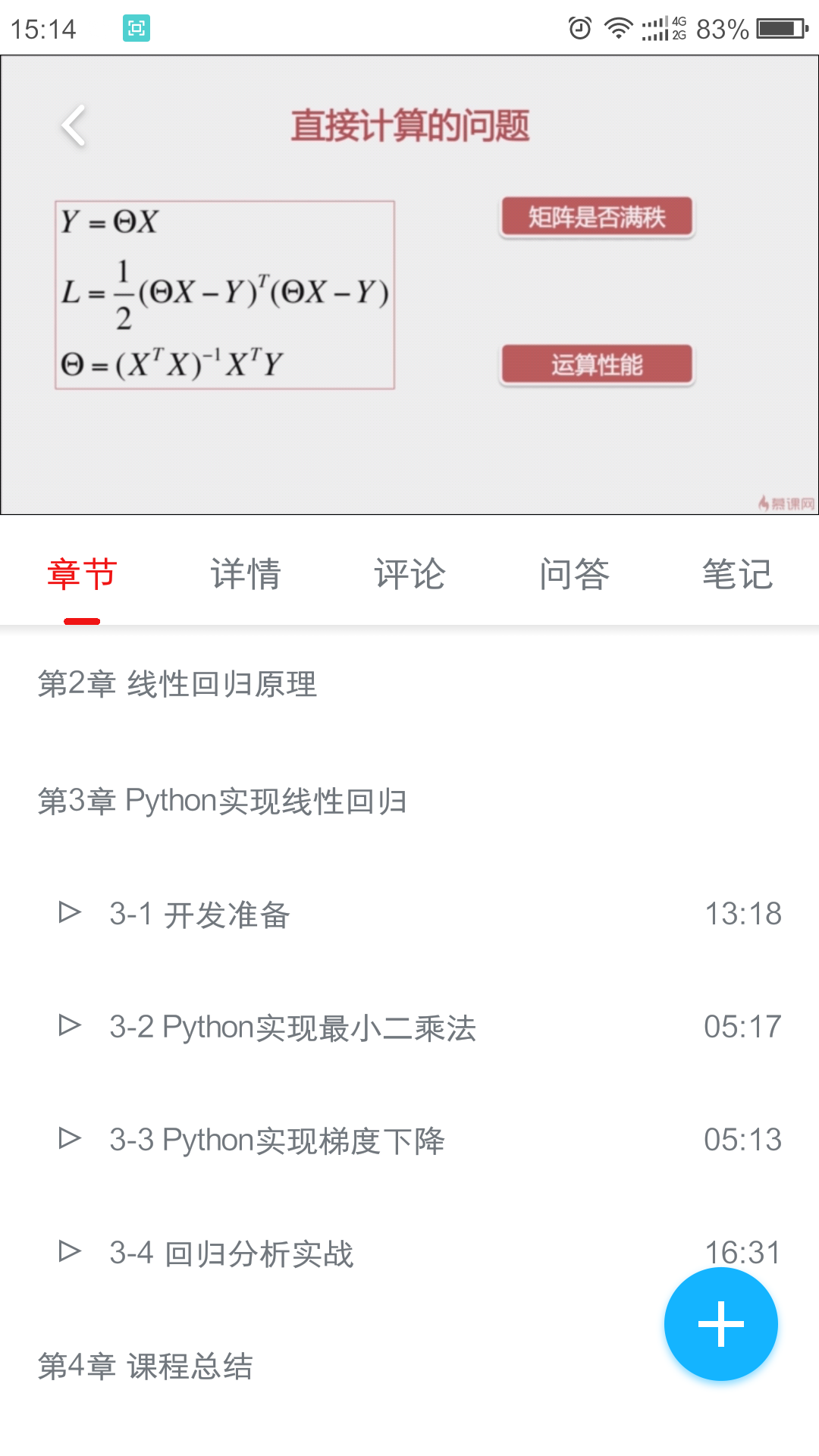

I. Principles of Linear Regression
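The code in part II implements the following formulas. The notation is an assumption chosen to match the variable names in the code: m samples, feature columns X0 = 1, X1, ..., Xn, targets Y, and learning rate α.

$$
h_\theta(x) = \theta_0 x_0 + \theta_1 x_1 + \cdots + \theta_n x_n = x^{T}\theta, \qquad x_0 = 1
$$

$$
J(\theta) = \frac{1}{2m}\sum_{i=1}^{m}\left(x^{(i)T}\theta - y^{(i)}\right)^2 = \frac{1}{2m}\lVert X\theta - Y\rVert^2
$$

Setting the gradient $\nabla J(\theta) = \frac{1}{m}X^{T}(X\theta - Y)$ to zero gives the least-squares (normal equation) solution. Gradient descent instead steps against the gradient, updating every $\theta_j$ simultaneously:

$$
\theta = (X^{T}X)^{-1}X^{T}Y
$$

$$
\theta_j := \theta_j + \frac{\alpha}{m}\sum_{i=1}^{m}\left(y^{(i)} - x^{(i)T}\theta\right)x_j^{(i)}
$$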

II. Implementing Linear Regression in Python

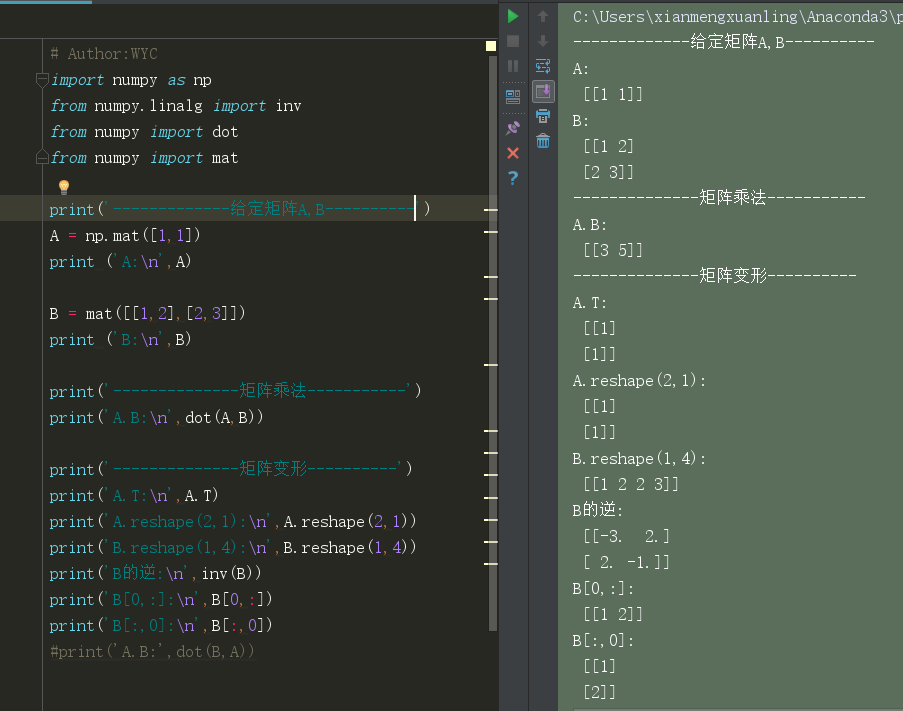

1. Basic Matrix Operations

pratice1.py:

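```python
# Author:WYC
import numpy as np
from numpy.linalg import inv
from numpy import dot

# np.mat is no longer available in NumPy 2.x; np.asmatrix creates the same matrix object
print('------------- Given matrices A and B -------------')
A = np.asmatrix([1, 1])             # 1x2 matrix
print('A:\n', A)
B = np.asmatrix([[1, 2], [2, 3]])   # 2x2 matrix
print('B:\n', B)

print('------------- Matrix multiplication -------------')
print('A.B:\n', dot(A, B))          # (1x2)(2x2) -> 1x2

print('------------- Matrix transformations -------------')
print('A.T:\n', A.T)                # transpose, 2x1
print('A.reshape(2,1):\n', A.reshape(2, 1))
print('B.reshape(1,4):\n', B.reshape(1, 4))
print('Inverse of B:\n', inv(B))
print('B[0,:]:\n', B[0, :])         # first row
print('B[:,0]:\n', B[:, 0])         # first column

# dot(B, A) raises a ValueError because the shapes (2,2) and (1,2) do not align.
# Multiply by the transpose instead: dot(B, A.T) gives a 2x1 result.
# print('B.A:', dot(B, A))
```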
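The NumPy documentation recommends regular 2-D arrays over the `np.matrix` class. As a sketch (not part of the original pratice1.py), the same operations with plain ndarrays and the `@` operator look like this. One difference to watch: with arrays, `B[0, :]` returns a 1-D array rather than a 1x2 matrix, so a list index such as `B[:, [0]]` is used to keep a column shape.

```python
import numpy as np
from numpy.linalg import inv

A = np.array([[1, 1]])             # 2-D array of shape (1, 2), like np.asmatrix([1, 1])
B = np.array([[1, 2], [2, 3]])

AB = A @ B                         # matrix product, shape (1, 2)
A_col = A.T                        # transpose, shape (2, 1)
B_row = B.reshape(1, 4)            # all four entries in one row
B_inv = inv(B)                     # inverse of B
first_row = B[0, :]                # 1-D array of shape (2,)
first_col = B[:, [0]]              # list index keeps a (2, 1) column
BAt = B @ A.T                      # (2, 2) @ (2, 1) -> (2, 1)

print(AB, A_col, B_row, B_inv, first_row, first_col, BAt, sep='\n')
```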

2. Implementing the Least Squares Method

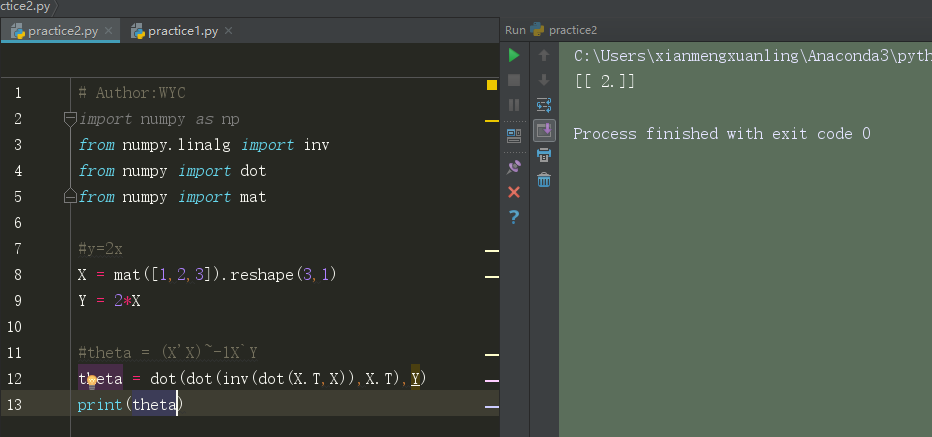
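For the toy data used in pratice2.py (x = 1, 2, 3 and y = 2x), the normal equation can be checked by hand:

$$
X = \begin{pmatrix}1\\2\\3\end{pmatrix},\quad
Y = \begin{pmatrix}2\\4\\6\end{pmatrix},\quad
X^{T}X = 1 + 4 + 9 = 14,\quad
X^{T}Y = 2 + 8 + 18 = 28,\quad
\theta = (X^{T}X)^{-1}X^{T}Y = \frac{28}{14} = 2
$$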

pratice2.py:

```python
# Author:WYC
import numpy as np
from numpy.linalg import inv
from numpy import dot

# Toy data: y = 2x, with X as a 3x1 column vector
X = np.array([1., 2., 3.]).reshape(3, 1)
Y = 2 * X

# Normal equation: theta = (X^T X)^(-1) X^T Y
theta = dot(dot(inv(dot(X.T, X)), X.T), Y)
print(theta)
```

3. Implementing Gradient Descent


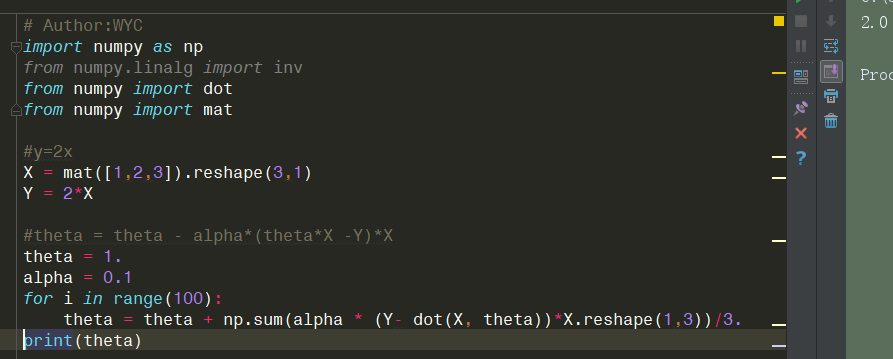
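For the same toy data, one step of the averaged update can be worked out by hand. With m = 3 and y_i = 2x_i, every residual is (2 - θ)x_i and the sum of x_i² is 14, so

$$
\theta_{k+1} = \theta_k + \frac{\alpha}{3}\sum_{i=1}^{3}(2 - \theta_k)\,x_i^2 = \theta_k + \frac{14\alpha}{3}(2 - \theta_k)
\quad\Longrightarrow\quad
2 - \theta_{k+1} = \left(1 - \frac{14\alpha}{3}\right)(2 - \theta_k)
$$

With α = 0.1 the error shrinks by a factor of about 0.533 per iteration, so 100 iterations starting from θ = 1 are more than enough to reach 2. The same relation shows that the update only converges when |1 - 14α/3| < 1, that is 0 < α < 3/7 ≈ 0.43; a larger learning rate makes θ oscillate with growing amplitude.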

pratice3.py:

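```python
# Author:WYC
import numpy as np

# Toy data: y = 2x, with X as a 3x1 column vector
X = np.array([1., 2., 3.]).reshape(3, 1)
Y = 2 * X

# Gradient step: theta = theta - alpha * mean((theta*X - Y) * X)
theta = 1.
alpha = 0.1
for i in range(100):
    # Element-wise product of residuals and X, averaged over the samples.
    # (Multiplying by X.reshape(1, 3) instead would form a 3x3 outer
    # product and sum the wrong terms.)
    theta = theta + alpha * np.sum((Y - X * theta) * X) / len(X)
print(theta)
```

4. Regression Analysis in Practice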

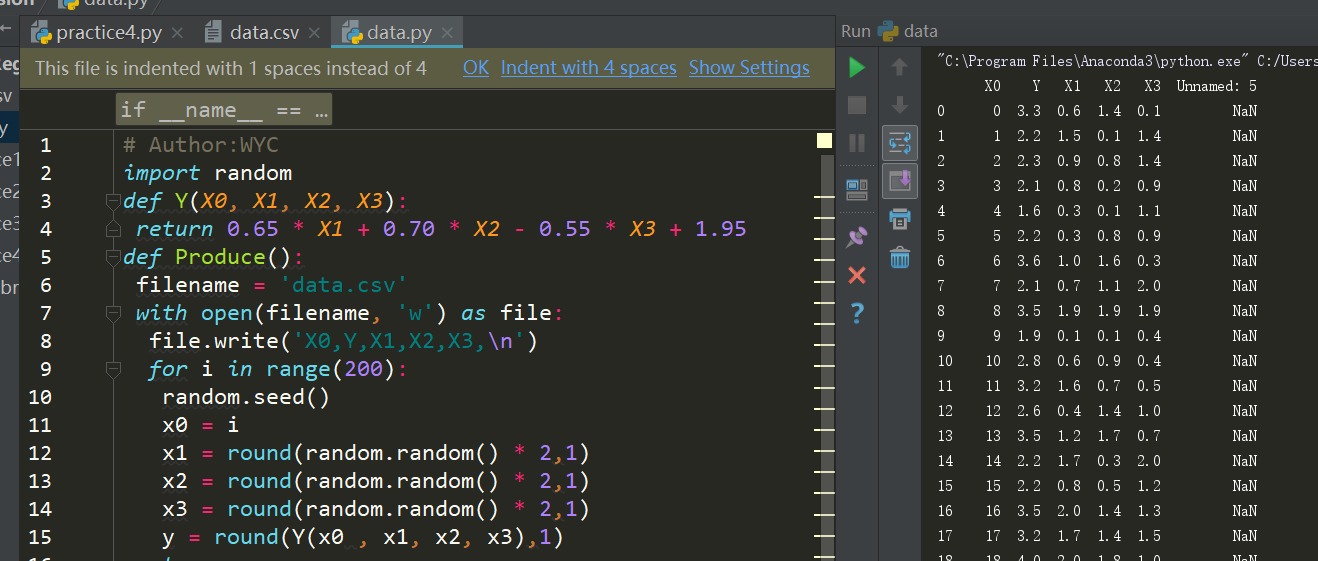

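Note: the data generator below started from a script by another user that I copied from my notes. It did not have enough columns (the y and x0 columns were missing), so I modified it. In many later attempts, solving for theta with gradient descent returned NaN. After debugging, it seemed the cause might be too many decimal places, so I used round to keep one decimal place, which also matches the data used in the lecture: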

data.py:

```python
# Author:WYC
import random

def Y(X0, X1, X2, X3):
    # True model: y = 0.65*x1 + 0.70*x2 - 0.55*x3 + 1.95 (X0 is accepted but unused)
    return 0.65 * X1 + 0.70 * X2 - 0.55 * X3 + 1.95

def Produce():
    filename = 'data.csv'
    random.seed()  # seed once, before generating the rows
    with open(filename, 'w') as file:
        # No trailing comma, so pandas does not add an empty extra column
        file.write('X0,Y,X1,X2,X3\n')
        for i in range(200):
            x0 = i  # row index; the regression scripts use a constant 1 instead
            x1 = round(random.random() * 2, 1)
            x2 = round(random.random() * 2, 1)
            x3 = round(random.random() * 2, 1)
            y = round(Y(x0, x1, x2, x3), 1)
            try:
                file.write(str(x0) + ',' + str(y) + ',' + str(x1) + ',' + str(x2) + ',' + str(x3) + '\n')
            except OSError as e:
                print('Write Error')
                print(str(e))

if __name__ == '__main__':
    Produce()
```

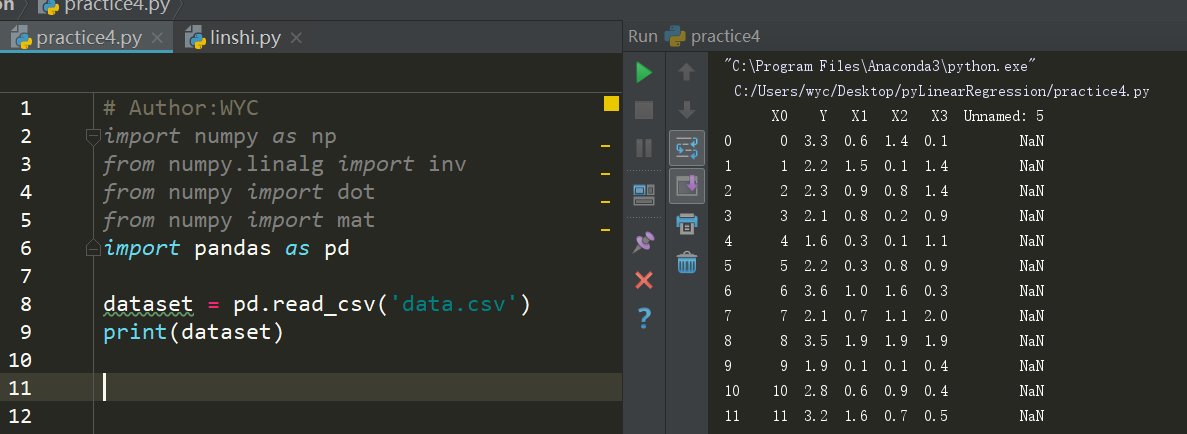

```python
# Print the CSV contents to check the format (optional)
import pandas as pd

dataset = pd.read_csv('data.csv')
print(dataset)
```

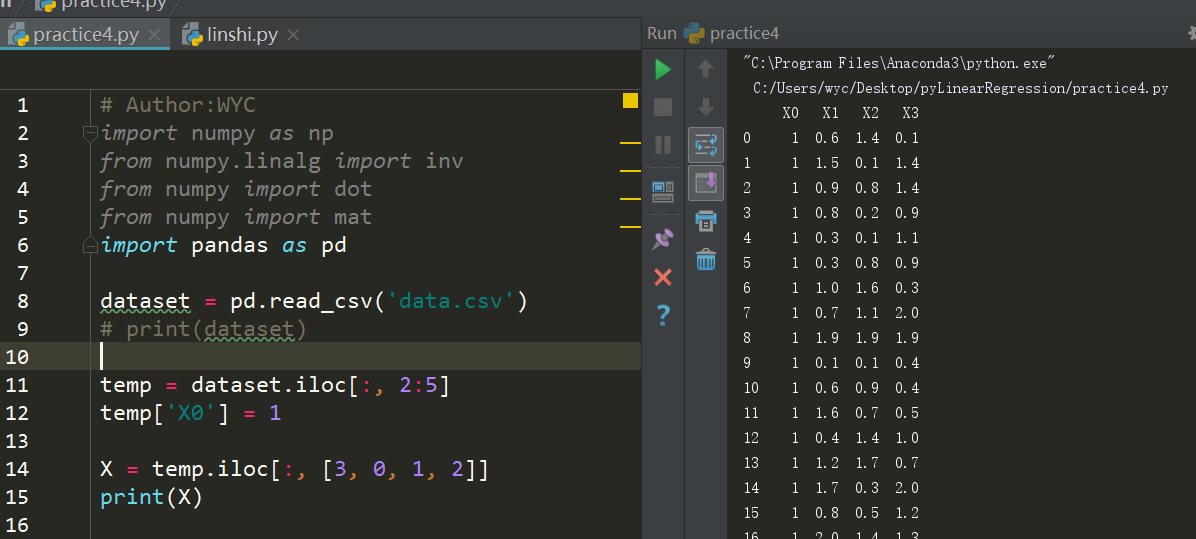
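The note above data.py attributes the earlier NaN results to the number of decimal places. Another common cause worth ruling out is divergence: batch gradient descent with a fixed learning rate only converges when alpha is small relative to the scale of the features; otherwise theta grows every step until it overflows to inf and then nan. The sketch below illustrates this on hypothetical synthetic data (not the author's original data, and the function name `gradient_descent` is illustrative): a feature in [0, 2], like X1 to X3, converges with alpha = 0.1, while a feature equal to the row index 0..199, like the X0 column that data.py writes, makes the same update blow up.

```python
import numpy as np

def gradient_descent(X, y, alpha=0.1, iters=1000):
    """Batch gradient descent for least squares; the result may be inf/nan if it diverges."""
    m, n = X.shape
    theta = np.ones(n)
    # Silence overflow/invalid warnings so the divergent run finishes quietly
    with np.errstate(over='ignore', invalid='ignore'):
        for _ in range(iters):
            theta = theta + alpha * X.T @ (y - X @ theta) / m
    return theta

rng = np.random.default_rng(0)
m = 200
ones = np.ones(m)

# Feature in [0, 2], like X1..X3: alpha = 0.1 converges
x_small = np.round(rng.uniform(0, 2, m), 1)
theta_small = gradient_descent(np.column_stack([ones, x_small]), 1.95 + 0.65 * x_small)

# Feature equal to the row index 0..199, like data.py's X0 column: the same alpha diverges
x_big = np.arange(m, dtype=float)
theta_big = gradient_descent(np.column_stack([ones, x_big]), 1.95 + 0.65 * x_big)

print(theta_small)   # close to [1.95, 0.65]
print(theta_big)     # entries are no longer finite (inf or nan)
```

Rescaling large features (for example dividing by their maximum or standardizing them) or lowering alpha are the usual remedies.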

Getting X

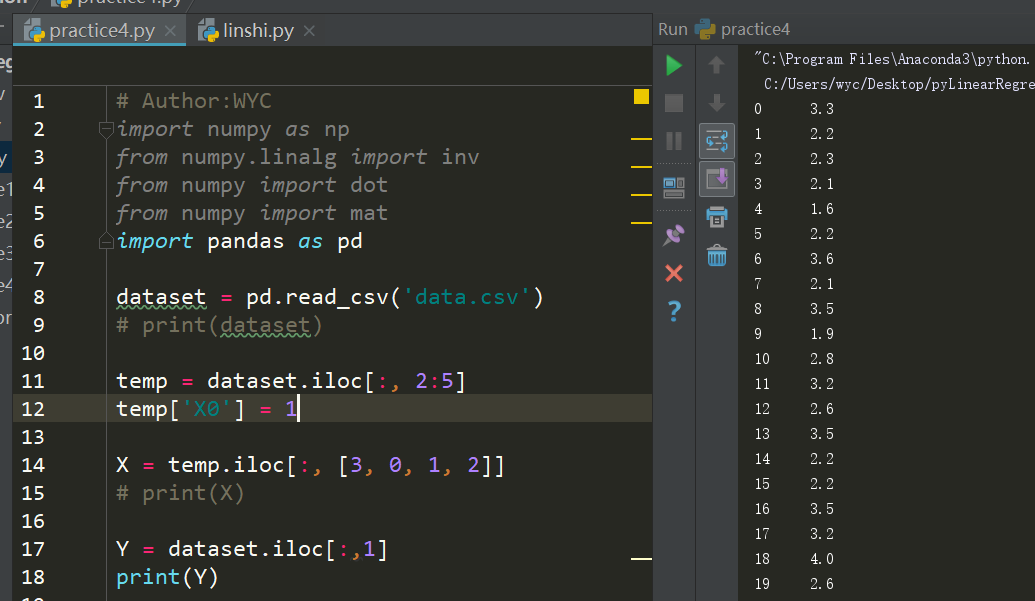

Getting Y

Computing theta with the least squares method

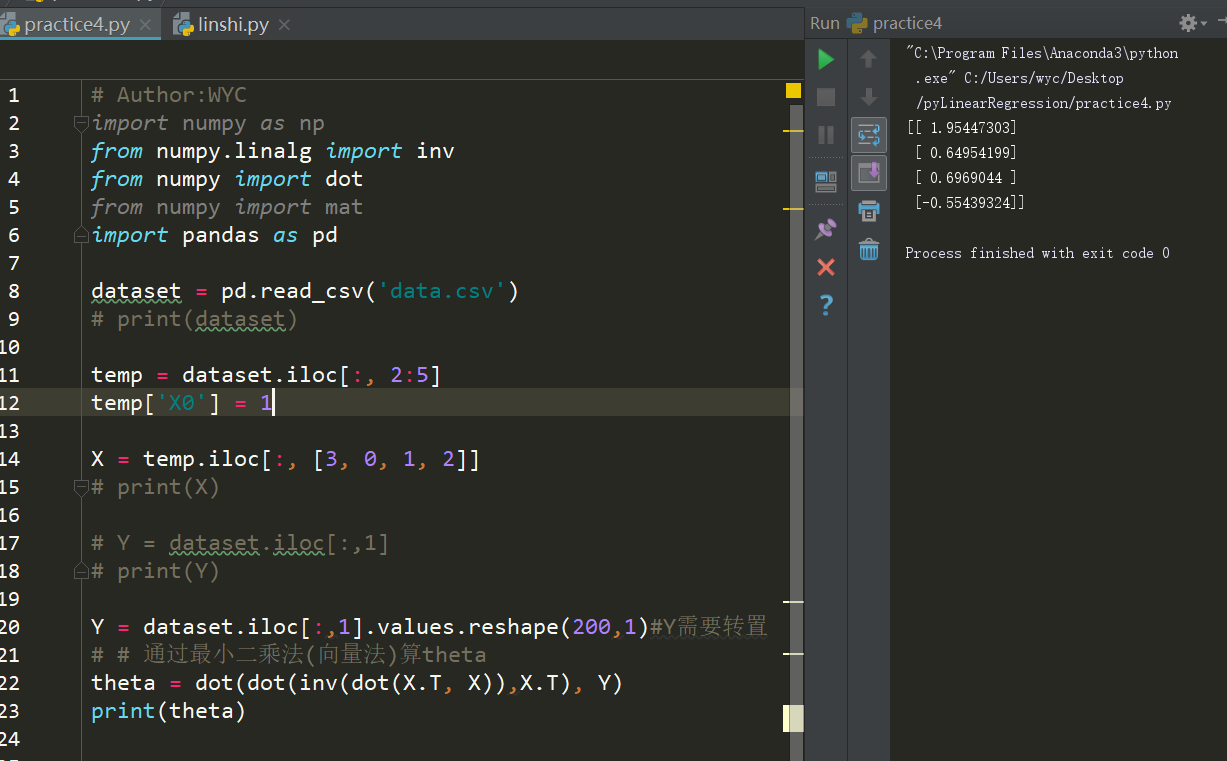

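```python
# Author:WYC
import numpy as np
from numpy.linalg import inv
from numpy import dot
import pandas as pd

dataset = pd.read_csv('data.csv')
# print(dataset)

# Columns 2..4 are X1, X2, X3; .copy() avoids pandas' SettingWithCopyWarning
temp = dataset.iloc[:, 2:5].copy()
temp['X0'] = 1                          # intercept column
# Reorder to X0, X1, X2, X3 and convert to a NumPy array
X = temp.iloc[:, [3, 0, 1, 2]].values
# print(X)

# Y as a column vector of shape (200, 1)
Y = dataset.iloc[:, 1].values.reshape(-1, 1)
# print(Y)

# Least squares (normal equation, vector form): theta = (X^T X)^(-1) X^T Y
theta = dot(dot(inv(dot(X.T, X)), X.T), Y)
print(theta)
```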
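Forming the inverse of X^T X explicitly works for this small, well-conditioned problem, but NumPy also offers routines that solve the same least-squares problem without an explicit inverse. The sketch below uses hypothetical synthetic data built like data.py (same coefficients, features rounded to one decimal, but y left unrounded so all three answers should match the true coefficients) and compares `inv`, `np.linalg.solve` on the normal equation, and the SVD-based `np.linalg.lstsq`.

```python
import numpy as np
from numpy.linalg import inv

# Hypothetical data built like data.py: y = 1.95 + 0.65*x1 + 0.70*x2 - 0.55*x3,
# features in [0, 2] rounded to one decimal, y left unrounded
rng = np.random.default_rng(42)
m = 200
features = np.round(rng.uniform(0, 2, size=(m, 3)), 1)
X = np.column_stack([np.ones(m), features])      # prepend the intercept column X0 = 1
true_theta = np.array([[1.95], [0.65], [0.70], [-0.55]])
Y = X @ true_theta                               # shape (200, 1)

theta_inv = inv(X.T @ X) @ X.T @ Y                    # normal equation, explicit inverse
theta_solve = np.linalg.solve(X.T @ X, X.T @ Y)       # same equation, solved without inverting
theta_lstsq, *_ = np.linalg.lstsq(X, Y, rcond=None)   # SVD-based least squares on X directly

print(np.hstack([theta_inv, theta_solve, theta_lstsq]))
```

`lstsq` also copes with a rank-deficient X (for example two identical feature columns), where X^T X has no inverse.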

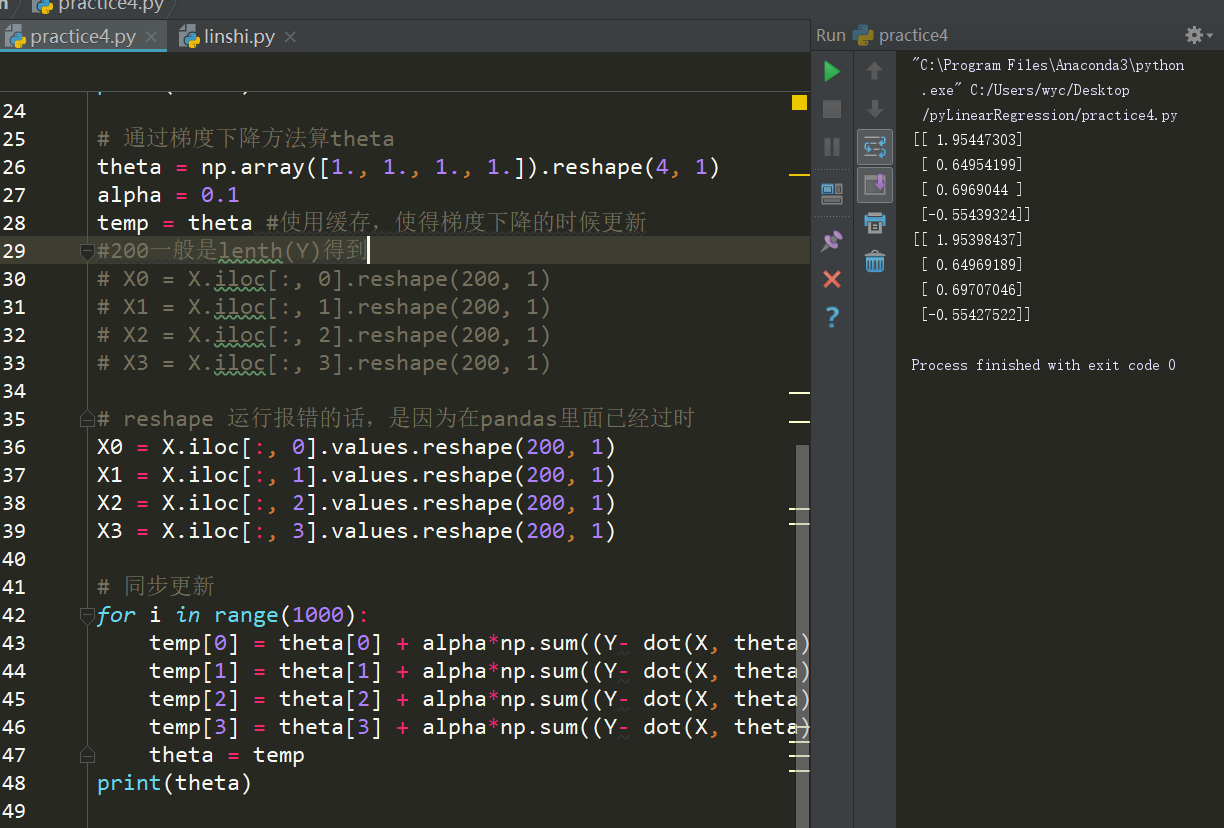

Computing theta with gradient descent

Full code of pratice4.py:

```python
# Author:WYC
import numpy as np
from numpy.linalg import inv
from numpy import dot
import pandas as pd

dataset = pd.read_csv('data.csv')
# print(dataset)

# Columns 2..4 are X1, X2, X3; .copy() avoids pandas' SettingWithCopyWarning
temp = dataset.iloc[:, 2:5].copy()
temp['X0'] = 1                              # intercept column
# Reorder to X0, X1, X2, X3 and convert to a NumPy array
X = temp.iloc[:, [3, 0, 1, 2]].values
# print(X)

# Y as a column vector of shape (200, 1)
Y = dataset.iloc[:, 1].values.reshape(-1, 1)
# print(Y)
m = len(Y)                                  # number of samples (200 here)

# Least squares (normal equation, vector form)
theta = dot(dot(inv(dot(X.T, X)), X.T), Y)
print(theta)

# Gradient descent
theta = np.array([1., 1., 1., 1.]).reshape(4, 1)
alpha = 0.1

# Calling .reshape directly on a pandas Series fails because Series.reshape
# was deprecated and removed; X was converted with .values above, so the
# columns below are NumPy arrays that support reshape.
X0 = X[:, 0].reshape(m, 1)
X1 = X[:, 1].reshape(m, 1)
X2 = X[:, 2].reshape(m, 1)
X3 = X[:, 3].reshape(m, 1)

# Simultaneous update: every component is computed from the previous theta
for i in range(1000):
    error = Y - dot(X, theta)               # residuals with the previous theta
    new_theta = theta.copy()                # a real copy; "temp = theta" would only alias theta
    new_theta[0] = theta[0] + alpha * np.sum(error * X0) / m
    new_theta[1] = theta[1] + alpha * np.sum(error * X1) / m
    new_theta[2] = theta[2] + alpha * np.sum(error * X2) / m
    new_theta[3] = theta[3] + alpha * np.sum(error * X3) / m
    theta = new_theta

print(theta)
```
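The four per-component lines in the loop compute, one row at a time, the matrix product X^T(Y - Xθ). Below is a vectorized sketch of the same simultaneous update; the function name `batch_gradient_descent` and the synthetic data are illustrative assumptions, not part of pratice4.py. It also records the cost J(θ) after each step so convergence can be checked against the normal-equation answer.

```python
import numpy as np

def batch_gradient_descent(X, Y, alpha=0.1, iters=1000):
    """Vectorized batch gradient descent for least squares.

    X: (m, n) design matrix (first column all ones); Y: (m, 1) targets.
    Returns theta of shape (n, 1) and the cost J(theta) after each iteration.
    """
    m, n = X.shape
    theta = np.ones((n, 1))
    costs = []
    for _ in range(iters):
        error = Y - X @ theta                      # residuals computed with the old theta
        theta = theta + alpha * (X.T @ error) / m  # row j of X.T @ error equals np.sum(error * Xj)
        costs.append(float(np.sum((X @ theta - Y) ** 2) / (2 * m)))
    return theta, costs

# Hypothetical data in the spirit of data.py (y not rounded, so the fit is exact)
rng = np.random.default_rng(1)
m = 200
X = np.column_stack([np.ones(m), np.round(rng.uniform(0, 2, size=(m, 3)), 1)])
Y = X @ np.array([[1.95], [0.65], [0.70], [-0.55]])

theta_gd, costs = batch_gradient_descent(X, Y, alpha=0.1, iters=5000)
theta_ne = np.linalg.solve(X.T @ X, X.T @ Y)       # normal-equation reference
print(theta_gd.ravel())
print(theta_ne.ravel())
```

With alpha = 0.1 the cost should decrease at every step for features of this scale; if it grows instead, the learning rate is too large for the data.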

(The End)