Source code used in this article: https://github.com/balancap/SSD-Tensorflow

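Our project needs to detect people in a scene. The original SSD network is trained for 20 object classes (21 outputs including background), but we only need the person class. Following the repository's instructions, I trained it for 8 hours on a server with two 1080 Ti GPUs. The loss dropped to around 10, but the results were worse than those of the original pretrained weights. I therefore decided to modify the network instead, keeping only the person classification in the final classification layers.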

The steps are as follows:

-

从github中下载上述例子,测试给定的note_books.ipynb,可以实现多目标检测。最好将其转换成note_books.py程序

-

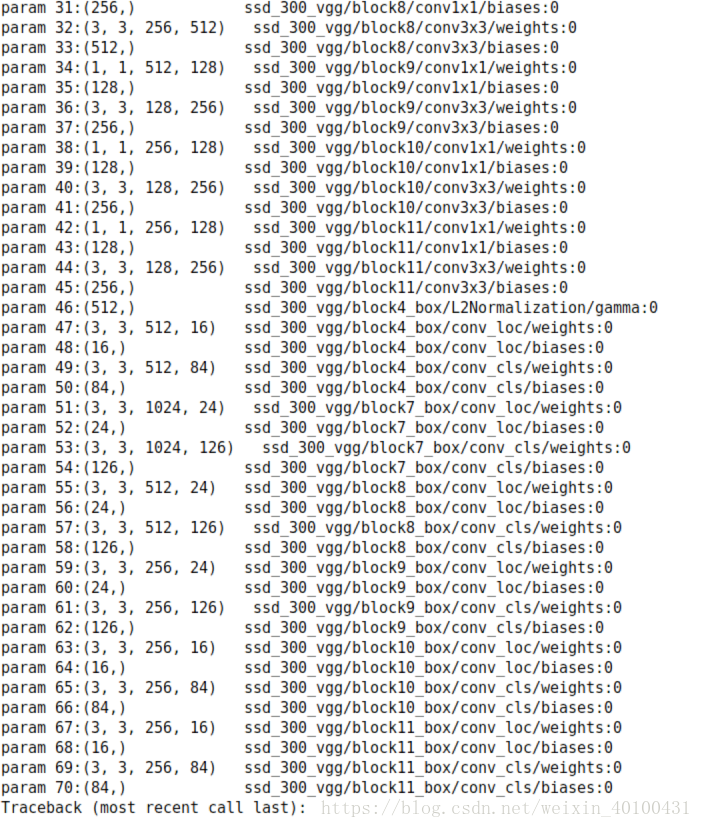

在note_books.py中打印可训练的变量名称(读论文和程序也是可以看出来的),部分如下:

注意,上图中名称为***.box/conv_cls/biases和***.box/conv_cls/weights是需要提取的最后的分类参数,也是网络需要修改的地方。 -

进行分类的网络在ssd_vgg_300.py中的def ssd_multibox_layer()函数中,原程序的weights和biases变量使用tensorflow的slim同时实现,无法手动获取,因此,先将slim方式转换成原始的tensorflow方式。

For saving and restoring TensorFlow models with `Saver`, see my earlier article: https://blog.csdn.net/weixin_40100431/article/details/82860478
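Because `Saver` restores variables by name, it is worth checking the names before rewriting anything. A minimal sketch, assuming the default scope `ssd_300_vgg` and the default feature layers (`block4`, `block7`, `block8`, `block9`, `block10`, `block11`): it builds the twelve expected classifier variable names and lists any that are missing from what `[v.name for v in tf.trainable_variables()]` prints in TF 1.x. Both helper functions are hypothetical, not part of the repository.

```python
# Hypothetical helpers: expected names of the SSD classifier variables, assuming the
# default scope 'ssd_300_vgg' and the six default feature layers of SSD-Tensorflow.
FEAT_LAYERS = ['block4', 'block7', 'block8', 'block9', 'block10', 'block11']


def expected_cls_var_names(scope='ssd_300_vgg', feat_layers=FEAT_LAYERS):
    """Return the weight/bias names (with TF's ':0' suffix) of every conv_cls layer."""
    names = []
    for layer in feat_layers:
        prefix = '%s/%s_box/conv_cls/' % (scope, layer)
        names.append(prefix + 'weights:0')
        names.append(prefix + 'biases:0')
    return names


def missing_cls_vars(printed_names, scope='ssd_300_vgg'):
    """Names the restore step would look for but the graph does not contain."""
    present = set(printed_names)
    return [n for n in expected_cls_var_names(scope) if n not in present]


if __name__ == '__main__':
    # In ssd_notebook.py the list would come from
    # [v.name for v in tf.trainable_variables()]; here it is built by hand.
    printed = expected_cls_var_names()[:-2]  # pretend the block11 variables are missing
    print(missing_cls_vars(printed))
```

An empty result means the hand-written `tf.Variable`s will line up with the checkpoint.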

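```python
# Input channels of the six feature maps that feed the multibox layers
# (block4, block7, block8, block9, block10, block11). An extra argument `i`
# must be added to the function so it knows which feature map is being processed.
channels = [512, 1024, 512, 256, 256, 256]

# Declare the variables explicitly. Their names must match the ones printed in the
# previous step; otherwise restoring the checkpoint in ssd_notebook fails with a
# name-mismatch error.
weights = tf.Variable(tf.truncated_normal([3, 3, channels[i], num_cls_pred], dtype=tf.float32, stddev=1e-1),
                      name='conv_cls/weights')
biases = tf.Variable(tf.constant(0.0, shape=[num_cls_pred], dtype=tf.float32), name='conv_cls/biases')

####
weights1 = weights
biases1 = biases
#### These two lines are the important part: in the next step they are replaced to
#### extract the parameters and turn the classifier into single-class detection.

tmp = tf.nn.conv2d(net, weights1, strides=[1, 1, 1, 1], padding='SAME')
# No ReLU here: the original slim.conv2d used activation_fn=None.
# cls_pred = tf.nn.relu(tf.nn.bias_add(tmp, biases))
cls_pred = tf.nn.bias_add(tmp, biases1)
```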
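The hand-written variables only restore cleanly if their shapes also match the checkpoint, i.e. `[3, 3, channels[i], num_anchors * num_classes]`. A quick sketch of that arithmetic, assuming the repository's default SSD300 anchor settings (two anchor sizes per layer and ratio lists `[2, .5]` or `[2, .5, 3, 1./3]`); the function name is hypothetical.

```python
# Hypothetical check of the conv_cls variable shapes that must match the checkpoint.
# Assumes SSD-Tensorflow's default SSD300 anchor settings; only the list lengths matter.
CHANNELS = [512, 1024, 512, 256, 256, 256]   # block4, block7, block8, block9, block10, block11
ANCHOR_SIZES = [(21., 45.), (45., 99.), (99., 153.),
                (153., 207.), (207., 261.), (261., 315.)]
ANCHOR_RATIOS = [[2, .5], [2, .5, 3, 1. / 3], [2, .5, 3, 1. / 3],
                 [2, .5, 3, 1. / 3], [2, .5], [2, .5]]


def conv_cls_shapes(num_classes=21):
    """Kernel shape of each conv_cls layer for the given number of classes."""
    shapes = []
    for c_in, sizes, ratios in zip(CHANNELS, ANCHOR_SIZES, ANCHOR_RATIOS):
        num_anchors = len(sizes) + len(ratios)   # same formula as ssd_multibox_layer
        shapes.append((3, 3, c_in, num_anchors * num_classes))
    return shapes


print(conv_cls_shapes())
# [(3, 3, 512, 84), (3, 3, 1024, 126), (3, 3, 512, 126),
#  (3, 3, 256, 126), (3, 3, 256, 84), (3, 3, 256, 84)]
```

This is why the variables keep the full 21-class shape and the 2-class selection happens afterwards, in the graph.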

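- Extract the parameters and modify the classification head. SSD places anchors at every feature-map location, 4 or 6 per location. In the code, the class scores of these anchors are not grouped by class: all 21 class scores of the first anchor are stored first, then those of the second anchor, and so on, so channel `k` belongs to anchor `k // 21` and class `k % 21`. In `pascalvoc_2007.py`, person is class 15, so for every anchor we keep two channels, background (class 0) and person (class 15). Replace the two lines mentioned above with: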

For how `tf.concat` works, see my earlier article: https://blog.csdn.net/weixin_40100431/article/details/82858085

```python
# For each anchor keep only the background score (class 0) and the person score (class 15).
# One iteration per anchor is enough; a larger bound such as num_cls_pred*2 only adds
# empty slices (and useless ops) once ii * num_classes runs past the last channel.
for ii in range(num_anchors):
    if ii == 0:
        weights1 = tf.concat([weights[0:3, 0:3, 0:channels[i], ii * num_classes:ii * num_classes + 1],
                              weights[0:3, 0:3, 0:channels[i], ii * num_classes + 15:ii * num_classes + 16]], 3)
        biases1 = tf.concat(
            [biases[ii * num_classes:ii * num_classes + 1], biases[ii * num_classes + 15:ii * num_classes + 16]], 0)
    else:
        weights1 = tf.concat([weights1, weights[0:3, 0:3, 0:channels[i], ii * num_classes:ii * num_classes + 1],
                              weights[0:3, 0:3, 0:channels[i], ii * num_classes + 15:ii * num_classes + 16]], 3)
        biases1 = tf.concat(
            [biases1, biases[ii * num_classes:ii * num_classes + 1], biases[ii * num_classes + 15:ii * num_classes + 16]], 0)

# cls_pred was [N, H, W, num_anchors*classes]; it becomes [N, H, W, num_anchors, classes].
### Here the 21 classes become 2 classes.
cls_pred = tf.reshape(cls_pred,
                      tensor_shape(cls_pred, 4)[:-1] + [num_anchors, 2])
```
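Since channel `k` is `anchor * num_classes + class`, the concat loop simply picks channels 0, 15, 21, 36, 42, 57, 63, 78 for a 4-anchor layer. The sketch below simulates this on plain Python lists, labelling each channel `(anchor, class)`, to check three things: the loop keeps background and person for every anchor, a single index list gives the same result, and a too-large loop bound only adds empty slices (Python slices are clamped at the end of the sequence, as TensorFlow slices are, as far as I know). In TensorFlow the index-list form would presumably be `tf.gather(weights, idx, axis=3)` and `tf.gather(biases, idx)`; that variant is my suggestion, not the article's code, and the helper names are hypothetical.

```python
# Simulate the channel layout of the conv_cls output on plain lists.
# Channel k of the 21-class head corresponds to (anchor, class) = divmod(k, num_classes).
BACKGROUND, PERSON = 0, 15   # VOC label ids used in the article


def channel_labels(num_anchors, num_classes=21):
    return [divmod(k, num_classes) for k in range(num_anchors * num_classes)]


def select_by_concat(channels, num_classes, num_iters):
    """Mirror of the article's concat loop, using 1-D list slices instead of tensor slices."""
    kept = []
    for ii in range(num_iters):
        kept += channels[ii * num_classes + BACKGROUND:ii * num_classes + BACKGROUND + 1]
        kept += channels[ii * num_classes + PERSON:ii * num_classes + PERSON + 1]
    return kept


def person_indices(num_anchors, num_classes=21):
    """Index list for a single gather: [0, 15, 21, 36, ...]."""
    return [a * num_classes + c for a in range(num_anchors) for c in (BACKGROUND, PERSON)]


num_anchors, num_classes = 4, 21
chans = channel_labels(num_anchors, num_classes)
fixed = select_by_concat(chans, num_classes, num_anchors)                     # range(num_anchors)
oversized = select_by_concat(chans, num_classes, num_anchors * num_classes * 2)  # range(num_cls_pred*2)
gathered = [chans[k] for k in person_indices(num_anchors, num_classes)]

assert fixed == oversized == gathered   # extra iterations only add empty slices
# Reshaping to [num_anchors, 2] gives one (background, person) pair per anchor.
pairs = [fixed[2 * a:2 * a + 2] for a in range(num_anchors)]
print(pairs[1])   # [(1, 0), (1, 15)]
```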

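Note: `ssd_notebook.py` contains a parameter set to 21 classes. It does not need to be changed, because the functions that receive it do not actually use it.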
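Why the 21 can stay: as far as I can tell, the box selection in the repository's `np_methods.ssd_bboxes_select_layer` takes the class count from the last axis of the prediction array, drops column 0 (background) and reports the class as column index + 1. A simplified pure-Python sketch of that rule (the function name and the input layout are hypothetical) also shows one consequence of the 2-class head: a person is now reported as class 1, not 15.

```python
# Simplified, hypothetical version of the thresholding step: `scores` holds, for each
# anchor, the softmax output over the classes the network actually produces.
def select_boxes(scores, threshold=0.5):
    """Return (anchor_index, class_id, score) for every non-background score above threshold."""
    selected = []
    for anchor, probs in enumerate(scores):
        # The number of classes comes from the data (len(probs)), not from a parameter.
        for col, p in enumerate(probs[1:]):   # skip column 0 = background
            if p > threshold:
                selected.append((anchor, col + 1, p))
    return selected


# Three anchors of the modified 2-class head: [background, person].
scores = [[0.9, 0.1], [0.2, 0.8], [0.45, 0.55]]
print(select_boxes(scores))   # [(1, 1, 0.8), (2, 1, 0.55)]
```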

The experimental results are shown below:

Note: it is best to read the source code together with the paper; that makes it much easier to understand and modify the code.

The modified code: replace the following functions in `ssd_vgg_300.py`. If you run into problems, feel free to leave a comment.

```python
def ssd_multibox_layer(inputs,
                       num_classes,
                       sizes,
                       i,
                       ratios=[1],
                       normalization=-1,
                       bn_normalization=False):
    """Construct a multibox layer, return a class and localization predictions.

    `i` is the index of the feature layer (0-5), used to look up its input channels.
    """
    # Input channels of block4, block7, block8, block9, block10, block11.
    channels = [512, 1024, 512, 256, 256, 256]
    net = inputs
    if normalization > 0:
        net = custom_layers.l2_normalization(net, scaling=True)
    # Number of anchors: [4, 6, 6, 6, 4, 4] for the six layers.
    num_anchors = len(sizes) + len(ratios)

    # Location.
    num_loc_pred = num_anchors * 4
    loc_pred = slim.conv2d(net, num_loc_pred, [3, 3], activation_fn=None,
                           scope='conv_loc')
    # data_format NHWC = [batch, height, width, channels]; NCHW = [batch, channels, height, width]
    loc_pred = custom_layers.channel_to_last(loc_pred)
    # [N, H, W, num_anchors*4] -> [N, H, W, num_anchors, 4]
    loc_pred = tf.reshape(loc_pred,
                          tensor_shape(loc_pred, 4)[:-1] + [num_anchors, 4])

    # Class prediction (original Slim version):
    # num_cls_pred = num_anchors * num_classes
    # cls_pred = slim.conv2d(net, num_cls_pred, [3, 3], activation_fn=None,
    #                        scope='conv_cls')
    # cls_pred = custom_layers.channel_to_last(cls_pred)

    ## Added code.
    num_cls_pred = num_anchors * num_classes
    # Same names and shapes as the Slim variables, so the 21-class checkpoint restores.
    weights = tf.Variable(tf.truncated_normal([3, 3, channels[i], num_cls_pred], dtype=tf.float32, stddev=1e-1),
                          name='conv_cls/weights')
    biases = tf.Variable(tf.constant(0.0, shape=[num_cls_pred], dtype=tf.float32), name='conv_cls/biases')

    # As usual (all 21 classes):
    # weights1 = weights
    # biases1 = biases

    ### Only detect person: keep background (class 0) and person (class 15) for every anchor.
    for ii in range(num_anchors):
        if ii == 0:
            weights1 = tf.concat([weights[0:3, 0:3, 0:channels[i], ii * num_classes:ii * num_classes + 1],
                                  weights[0:3, 0:3, 0:channels[i], ii * num_classes + 15:ii * num_classes + 16]], 3)
            biases1 = tf.concat(
                [biases[ii * num_classes:ii * num_classes + 1], biases[ii * num_classes + 15:ii * num_classes + 16]], 0)
        else:
            weights1 = tf.concat([weights1, weights[0:3, 0:3, 0:channels[i], ii * num_classes:ii * num_classes + 1],
                                  weights[0:3, 0:3, 0:channels[i], ii * num_classes + 15:ii * num_classes + 16]], 3)
            biases1 = tf.concat(
                [biases1, biases[ii * num_classes:ii * num_classes + 1], biases[ii * num_classes + 15:ii * num_classes + 16]], 0)
    ###

    # print(weights.name, weights.get_shape())
    # print(biases.name, biases.get_shape())

    # tf.nn.conv2d defaults to NHWC input.
    tmp = tf.nn.conv2d(net, weights1, strides=[1, 1, 1, 1], padding='SAME')
    # cls_pred = tf.nn.relu(tf.nn.bias_add(tmp, biases))
    cls_pred = tf.nn.bias_add(tmp, biases1)
    # print(cls_pred.get_shape())
    # With all 21 classes: (1,38,38,84) (1,19,19,126) (1,10,10,126) (1,5,5,126) (1,3,3,84) (1,1,1,84)

    # cls_pred = tf.reshape(cls_pred,
    #                       tensor_shape(cls_pred, 4)[:-1] + [num_anchors, num_classes])
    # [N, H, W, num_anchors*2] -> [N, H, W, num_anchors, 2]
    cls_pred = tf.reshape(cls_pred,
                          tensor_shape(cls_pred, 4)[:-1] + [num_anchors, 2])
    # print(cls_pred)
    # With all 21 classes: (1,38,38,4,21) (1,19,19,6,21) (1,10,10,6,21) (1,5,5,6,21) (1,3,3,4,21) (1,1,1,4,21)
    return cls_pred, loc_pred
```
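With the modified layer, each `cls_pred` has shape `[N, H, W, num_anchors, 2]`. A sketch of the resulting shapes for a single 300×300 image, assuming the feature-map sizes noted in the `ssd_net` comments (38, 19, 10, 5, 3, 1) and the anchor counts [4, 6, 6, 6, 4, 4] noted above. The total number of anchors is unchanged; only the last axis shrinks from 21 to 2. The helper names are hypothetical.

```python
# Output shapes of cls_pred per feature layer, before and after the modification.
FEATURE_SIZES = [38, 19, 10, 5, 3, 1]   # block4, block7, block8, block9, block10, block11
NUM_ANCHORS = [4, 6, 6, 6, 4, 4]


def cls_pred_shapes(num_classes, batch=1):
    return [(batch, s, s, a, num_classes) for s, a in zip(FEATURE_SIZES, NUM_ANCHORS)]


def total_anchors():
    return sum(s * s * a for s, a in zip(FEATURE_SIZES, NUM_ANCHORS))


print(cls_pred_shapes(21)[0])   # (1, 38, 38, 4, 21), as in the original comment
print(cls_pred_shapes(2)[0])    # (1, 38, 38, 4, 2) after keeping background + person
print(total_anchors())          # 8732
```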

```python
def ssd_net(inputs,
            num_classes=SSDNet.default_params.num_classes,
            feat_layers=SSDNet.default_params.feat_layers,
            anchor_sizes=SSDNet.default_params.anchor_sizes,
            anchor_ratios=SSDNet.default_params.anchor_ratios,
            normalizations=SSDNet.default_params.normalizations,
            is_training=True,
            dropout_keep_prob=0.5,
            prediction_fn=slim.softmax,
            reuse=None,
            scope='ssd_300_vgg'):
    """SSD net definition.
    """
    # if data_format == 'NCHW':
    #     inputs = tf.transpose(inputs, perm=(0, 3, 1, 2))

    # End_points collect relevant activations for external use.
    end_points = {}
    with tf.variable_scope(scope, 'ssd_300_vgg', [inputs], reuse=reuse):
        # Original VGG-16 blocks.
        # slim.repeat creates model variables that can be trained or fine-tuned. It is equivalent to:
        #   net = slim.conv2d(inputs, 64, [3, 3], scope='conv1/conv1_1')
        #   net = slim.conv2d(net, 64, [3, 3], scope='conv1/conv1_2')
        net = slim.repeat(inputs, 2, slim.conv2d, 64, [3, 3], scope='conv1')
        end_points['block1'] = net
        net = slim.max_pool2d(net, [2, 2], scope='pool1')  # 150*150*64
        # Block 2.
        net = slim.repeat(net, 2, slim.conv2d, 128, [3, 3], scope='conv2')
        end_points['block2'] = net
        net = slim.max_pool2d(net, [2, 2], scope='pool2')  # 75*75*128
        # Block 3.
        net = slim.repeat(net, 3, slim.conv2d, 256, [3, 3], scope='conv3')
        end_points['block3'] = net
        net = slim.max_pool2d(net, [2, 2], scope='pool3')  # 38*38*256
        # Block 4.
        net = slim.repeat(net, 3, slim.conv2d, 512, [3, 3], scope='conv4')
        end_points['block4'] = net
        net = slim.max_pool2d(net, [2, 2], scope='pool4')  # 19*19*512
        # Block 5.
        net = slim.repeat(net, 3, slim.conv2d, 512, [3, 3], scope='conv5')
        end_points['block5'] = net
        net = slim.max_pool2d(net, [3, 3], stride=1, scope='pool5')  # 19*19*512

        # Additional SSD blocks.
        # Block 6: let's dilate the hell out of it!
        net = slim.conv2d(net, 1024, [3, 3], rate=6, scope='conv6')  # 19*19*1024
        end_points['block6'] = net
        # `rate` is the drop probability; passing keep_prob works only because both are 0.5.
        net = tf.layers.dropout(net, rate=dropout_keep_prob, training=is_training)
        # Block 7: 1x1 conv. Because the fuck.
        net = slim.conv2d(net, 1024, [1, 1], scope='conv7')  # 19*19*1024
        end_points['block7'] = net
        net = tf.layers.dropout(net, rate=dropout_keep_prob, training=is_training)

        # Block 8/9/10/11: 1x1 and 3x3 convolutions stride 2 (except lasts).
        end_point = 'block8'
        with tf.variable_scope(end_point):
            net = slim.conv2d(net, 256, [1, 1], scope='conv1x1')
            net = custom_layers.pad2d(net, pad=(1, 1))
            net = slim.conv2d(net, 512, [3, 3], stride=2, scope='conv3x3', padding='VALID')  # 10*10*512
        end_points[end_point] = net
        end_point = 'block9'
        with tf.variable_scope(end_point):
            net = slim.conv2d(net, 128, [1, 1], scope='conv1x1')
            net = custom_layers.pad2d(net, pad=(1, 1))
            net = slim.conv2d(net, 256, [3, 3], stride=2, scope='conv3x3', padding='VALID')  # 5*5*256
        end_points[end_point] = net
        end_point = 'block10'
        with tf.variable_scope(end_point):
            net = slim.conv2d(net, 128, [1, 1], scope='conv1x1')
            net = slim.conv2d(net, 256, [3, 3], scope='conv3x3', padding='VALID')  # 3*3*256
        end_points[end_point] = net
        end_point = 'block11'
        with tf.variable_scope(end_point):
            net = slim.conv2d(net, 128, [1, 1], scope='conv1x1')
            net = slim.conv2d(net, 256, [3, 3], scope='conv3x3', padding='VALID')  # 1*1*256
        end_points[end_point] = net

        # Prediction and localisations layers.
        predictions = []
        logits = []
        localisations = []
        for i, layer in enumerate(feat_layers):
            with tf.variable_scope(layer + '_box'):  # creates the scope, e.g. 'block4_box'
                p, l = ssd_multibox_layer(end_points[layer],
                                          num_classes,
                                          anchor_sizes[i],
                                          i,
                                          anchor_ratios[i],
                                          normalizations[i])
            predictions.append(prediction_fn(p))  # softmax: class probabilities
            logits.append(p)
            localisations.append(l)

        return predictions, localisations, logits, end_points
ssd_net.default_image_size = 300
```
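One side effect worth knowing: `ssd_net` still applies `prediction_fn` (softmax) to the logits, but now only over two of them, background and person. The person probability is therefore renormalized over 2 classes instead of 21 and can only go up, so a `select_threshold` tuned for the 21-class model may let more boxes through. A minimal pure-Python check of that claim, using made-up logits:

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up logits for one anchor of the 21-class head (index 0 = background, 15 = person).
logits21 = [0.0] * 21
logits21[0], logits21[15], logits21[7] = 1.0, 1.5, 1.2

p_person_21 = softmax(logits21)[15]                     # original 21-class score
p_person_2 = softmax([logits21[0], logits21[15]])[1]   # what the modified head outputs

print(round(p_person_21, 3), round(p_person_2, 3))     # 0.157 0.622
assert p_person_2 > p_person_21
```

Dropping 19 terms from the softmax denominator is what raises the score, so it is worth re-checking the detection threshold after this change.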