Writing a TensorFlow program essentially comes down to two steps:

(1) assemble a graph;

(2) use a session to execute the operations in the graph.
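To make the two-step split concrete, here is a toy, framework-free sketch in plain Python. The classes `Node`, `Placeholder`, `Constant` and `Session` are hypothetical stand-ins written only for this illustration; they are not TensorFlow's API. Building `y` merely records which operations to apply. Nothing is computed until `Session.run` is called with a `feed_dict` that supplies the placeholder's value.

```python
# Toy illustration of "assemble the graph first, execute it later".
# These classes are hypothetical stand-ins, NOT TensorFlow's API.

class Node:
    """A graph node: stores an operation and its input nodes, computes nothing yet."""
    def __init__(self, op, *inputs):
        self.op = op          # function applied to the input values at run time
        self.inputs = inputs  # upstream nodes

    def __add__(self, other):
        return Node(lambda a, b: a + b, self, other)

    def __mul__(self, other):
        return Node(lambda a, b: a * b, self, other)


class Placeholder(Node):
    """A node whose value is supplied only at run time through feed_dict."""
    def __init__(self, name):
        super().__init__(None)
        self.name = name


class Constant(Node):
    """A node holding a fixed value."""
    def __init__(self, value):
        super().__init__(None)
        self.value = value


class Session:
    """Evaluates a node by recursively evaluating its inputs."""
    def run(self, node, feed_dict=None):
        feed_dict = feed_dict or {}
        if isinstance(node, Placeholder):
            return feed_dict[node]  # KeyError if the placeholder was not fed
        if isinstance(node, Constant):
            return node.value
        args = [self.run(n, feed_dict) for n in node.inputs]
        return node.op(*args)


# Step 1: assemble the graph (no arithmetic happens here).
x = Placeholder("x")
w = Constant(3.0)
b = Constant(1.0)
y = x * w + b

# Step 2: execute the graph with a session, feeding a value for x.
sess = Session()
print(sess.run(y, feed_dict={x: 2.0}))  # 7.0
```

The same graph can be run again with different inputs without being rebuilt. This is the property the real `tf.Session` exploits when it runs the MNIST graph once per batch.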

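When building the graph in TensorFlow, the work falls roughly into five parts:

1. Define placeholders for the input X and the output y;

2. Define the weights W (and the bias b);

3. Define the model structure;

4. Define the loss function;

5. Define the optimization algorithm.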
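The five parts can be seen in miniature in the following framework-free sketch. It trains a softmax regression on four hypothetical 2-D points using plain Python, with no TensorFlow. One difference matters: in TensorFlow, `minimize` derives the gradients automatically. Here the gradient is written out by hand, using the standard result that for softmax followed by cross-entropy (with one-hot labels), the derivative of the loss with respect to logit k is `p_k - y_k`.

```python
import math
import random

# Part 1 (stand-in for placeholders): hypothetical toy data,
# 2-D inputs and one-hot labels for 2 classes.
X = [[0.0, 1.0], [0.2, 0.9], [1.0, 0.1], [0.9, 0.0]]
Y = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]

# Part 2: weights W (2 inputs x 2 classes) and bias b.
random.seed(0)
W = [[random.gauss(0.0, 0.1) for _ in range(2)] for _ in range(2)]
b = [0.0, 0.0]

def softmax(z):
    m = max(z)  # subtract the max before exp() for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

# Part 3: model structure, y_pred = softmax(x W + b).
def predict(x):
    logits = [sum(x[i] * W[i][k] for i in range(len(x))) + b[k]
              for k in range(len(b))]
    return softmax(logits)

# Part 4: mean cross-entropy loss over the dataset.
def loss():
    total = 0.0
    for x, y in zip(X, Y):
        p = predict(x)
        total -= sum(yk * math.log(pk) for yk, pk in zip(y, p))
    return total / len(X)

# Part 5: one step of plain gradient descent, gradients derived by hand.
def step(lr):
    n = len(X)
    gW = [[0.0] * 2 for _ in range(2)]
    gb = [0.0] * 2
    for x, y in zip(X, Y):
        p = predict(x)
        for k in range(2):
            d = (p[k] - y[k]) / n  # d(loss)/d(logit_k), averaged over the batch
            gb[k] += d
            for i in range(2):
                gW[i][k] += x[i] * d
    for k in range(2):
        b[k] -= lr * gb[k]
        for i in range(2):
            W[i][k] -= lr * gW[i][k]

before = loss()
for _ in range(200):
    step(0.5)
after = loss()
print(before, after)  # the loss should drop well below its starting value
```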

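Below is a handwritten digit recognition program for MNIST (a softmax regression), written against the TensorFlow 1.x API: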


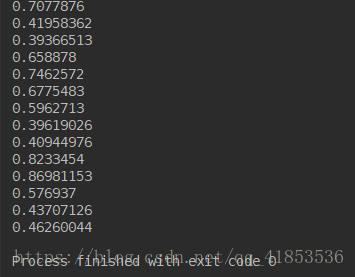

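    # TensorFlow 1.x API: in TF 2.x, tf.placeholder and tf.Session are only
    # available under tf.compat.v1.
    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    # Load the dataset (downloaded into MNIST_data/ on first use), one-hot labels
    mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

    # 1. Placeholders for the input images (28x28 = 784 pixels) and 10-class labels
    x = tf.placeholder(shape=[None, 784], dtype=tf.float32)
    y = tf.placeholder(shape=[None, 10], dtype=tf.float32)

    # 2. Weights and bias
    w = tf.Variable(tf.truncated_normal(shape=[784, 10], mean=0, stddev=0.5))
    b = tf.Variable(tf.zeros([10]))

    # 3. Model structure: softmax regression
    y_pred = tf.nn.softmax(tf.matmul(x, w) + b)

    # 4. Loss: mean cross-entropy; clipping keeps tf.log away from log(0) = -inf (NaN loss)
    loss = tf.reduce_mean(
        -tf.reduce_sum(y * tf.log(tf.clip_by_value(y_pred, 1e-10, 1.0)), axis=1))

    # 5. Optimizer: gradient descent with learning rate 0.05
    opt = tf.train.GradientDescentOptimizer(0.05).minimize(loss)

    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        for step in range(1000):
            batch_xs, batch_ys = mnist.train.next_batch(100)
            # One training step and the current loss, fetched in a single sess.run call
            _, loss1 = sess.run([opt, loss], feed_dict={x: batch_xs, y: batch_ys})
            if step % 100 == 0:
                print(step, loss1)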
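For reference, the program's computations can be written out as follows. $W$ is `w` in the code, $\hat{y}$ is `y_pred`, and $y$ holds the one-hot labels. $N = 100$ is the batch size, there are 10 classes, and $\eta = 0.05$ is the learning rate.

TensorFlow's `GradientDescentOptimizer(...).minimize(loss)` obtains the gradients automatically. They are written out here only to make the update explicit; the gradient formulas rely on each row of $y$ summing to 1.

$$z = xW + b, \qquad \hat{y}_{nk} = \frac{e^{z_{nk}}}{\sum_{j=1}^{10} e^{z_{nj}}}$$

$$L = -\frac{1}{N}\sum_{n=1}^{N}\sum_{k=1}^{10} y_{nk}\,\log \hat{y}_{nk}$$

$$\frac{\partial L}{\partial W} = \frac{1}{N}\,x^{\top}(\hat{y} - y), \qquad \frac{\partial L}{\partial b} = \frac{1}{N}\sum_{n=1}^{N}(\hat{y}_n - y_n)$$

$$W \leftarrow W - \eta\,\frac{\partial L}{\partial W}, \qquad b \leftarrow b - \eta\,\frac{\partial L}{\partial b}$$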