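This article shows how to use Prometheus to monitor a Kubernetes cluster and the business metrics of a Spring Boot application deployed in it. By the end you should be able to stand up your own monitoring system quickly, which saves a lot of effort for companies that have no unified monitoring platform. If you host it yourself, there is more to think about later on, such as where the collected data is stored: disk and memory are finite, so you may want to write it to Elasticsearch, a database, and so on. That in turn means setting up Elasticsearch, so these peripheral components are out of scope here.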

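Prometheus supports automatic service discovery. It discovers Nodes, Pods, Services and other objects through the Kubernetes API. It can also discover static targets by periodically re-reading the files named in file_sd_config; you can keep those files up to date with a mechanism of your own, such as a shell script, or rely on existing middleware such as Consul or DNS instead. Once Prometheus has collected the data, it is displayed graphically. The built-in graphing is mediocre, so we use Grafana for display. Beyond the basic information about Kubernetes itself, Prometheus can also monitor the metrics of business applications running in the cluster's containers and raise alerts based on them.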
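As a rough illustration of the file-based discovery mentioned above, here is a minimal sketch of a program that writes a target file for file_sd_config. The class name `FileSdWriter`, the file name `targets.json`, the addresses and the `env` label are all assumptions made for the example; only the JSON shape (a list of groups with `targets` and `labels`) comes from the Prometheus file format.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical helper: renders and writes one file_sd target group.
final class FileSdWriter {

    // Renders [{"targets": ["host:port", ...], "labels": {"key": "value", ...}}].
    // Assumes the values contain no characters that need JSON escaping.
    static String render(List<String> targets, Map<String, String> labels) {
        String t = targets.stream()
                .map(s -> "\"" + s + "\"")
                .collect(Collectors.joining(", "));
        String l = labels.entrySet().stream()
                .map(e -> "\"" + e.getKey() + "\": \"" + e.getValue() + "\"")
                .collect(Collectors.joining(", "));
        return "[{\"targets\": [" + t + "], \"labels\": {" + l + "}}]";
    }

    // Writes to a temporary file and renames it, so a reader never sees a half-written file.
    static void write(Path file, String json) throws IOException {
        Path tmp = file.resolveSibling(file.getFileName() + ".tmp");
        Files.write(tmp, json.getBytes(StandardCharsets.UTF_8));
        Files.move(tmp, file, StandardCopyOption.REPLACE_EXISTING);
    }

    public static void main(String[] args) throws IOException {
        String json = render(
                Arrays.asList("10.0.0.5:9100", "10.0.0.6:9100"), // example addresses
                Collections.singletonMap("env", "demo"));        // example label
        write(Paths.get("targets.json"), json);
        System.out.println(json);
    }
}
```

A cron job or a small daemon could call `write` whenever the list of hosts changes; Prometheus picks up the new contents on its next re-read.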

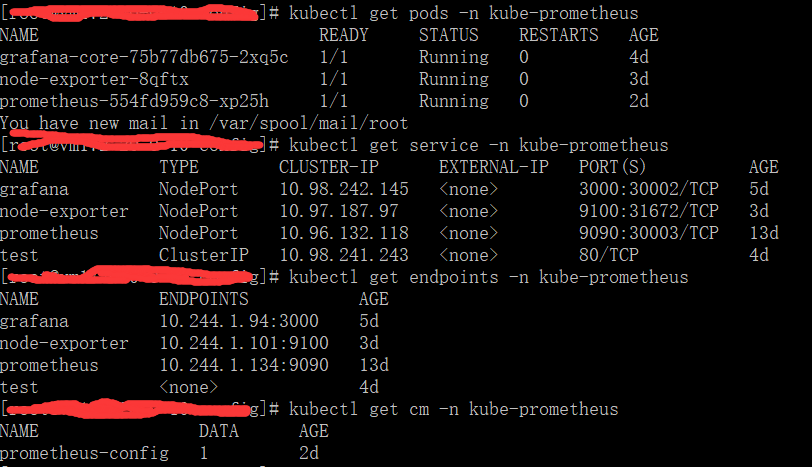

Below we create a dedicated namespace (kube-prometheus) in Kubernetes and deploy the Prometheus monitoring services into it.

1. Create the namespace

Create prometheus-ns.yaml

    apiVersion: v1
    kind: Namespace
    metadata:
      name: kube-prometheus

Run kubectl create -f prometheus-ns.yaml

2. Create the Prometheus RBAC resources

Create prometheus-rbac.yaml, which defines the ClusterRole, ServiceAccount and ClusterRoleBinding that Prometheus needs to access the Kubernetes cluster.

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: prometheus
    rules:
    - apiGroups: [""]
      resources:
      - nodes
      - nodes/proxy
      - services
      - endpoints
      - pods
      verbs: ["get", "list", "watch"]
    - apiGroups:
      - extensions
      resources:
      - ingresses
      verbs: ["get", "list", "watch"]
    - nonResourceURLs: ["/metrics"]
      verbs: ["get"]
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: prometheus
      namespace: kube-prometheus
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: prometheus
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus
    subjects:
    - kind: ServiceAccount
      name: prometheus
      namespace: kube-prometheus

Run kubectl create -f prometheus-rbac.yaml

3. Write the Prometheus startup configuration

When Prometheus is deployed in Kubernetes, its configuration file is mounted from a ConfigMap. Create prometheus-cm.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: prometheus-config
      namespace: kube-prometheus
    data:
      prometheus.yml: |
        global:
          scrape_interval: 15s
          evaluation_interval: 15s
        scrape_configs:
        - job_name: 'kubernetes-apiservers'
          kubernetes_sd_configs:
          - role: endpoints
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
            action: keep
            regex: default;kubernetes;https
        - job_name: 'kubernetes-nodes'
          kubernetes_sd_configs:
          - role: node
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics
        - job_name: 'kubernetes-cadvisor'
          kubernetes_sd_configs:
          - role: node
          scheme: https
          tls_config:
            ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
          relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
        - job_name: 'kubernetes-service-business-endpoints'
          kubernetes_sd_configs:
          - role: endpoints
          metrics_path: /prometheus
          relabel_configs:
          - source_labels: [__meta_kubernetes_endpoints_name]
            action: keep
            regex: (container-console-service)
        - job_name: 'kubernetes-service-endpoints'
          kubernetes_sd_configs:
          - role: endpoints
          relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
            action: replace
            target_label: __scheme__
            regex: (https?)
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
            action: replace
            target_label: __address__
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            action: replace
            target_label: kubernetes_name
        - job_name: 'kubernetes-services'
          kubernetes_sd_configs:
          - role: service
          metrics_path: /probe
          params:
            module: [http_2xx]
          relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
            action: keep
            regex: true
          - source_labels: [__address__]
            target_label: __param_target
          - target_label: __address__
            replacement: blackbox-exporter.example.com:9115
          - source_labels: [__param_target]
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            target_label: kubernetes_name
        - job_name: 'kubernetes-ingresses'
          kubernetes_sd_configs:
          - role: ingress
          relabel_configs:
          - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
            regex: (.+);(.+);(.+)
            replacement: ${1}://${2}${3}
            target_label: __param_target
          - target_label: __address__
            replacement: blackbox-exporter.example.com:9115
          - source_labels: [__param_target]
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_ingress_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_ingress_name]
            target_label: kubernetes_name
        - job_name: 'kubernetes-pods'
          kubernetes_sd_configs:
          - role: pod
          relabel_configs:
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
            action: replace
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
            target_label: __address__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_pod_name]
            action: replace
            target_label: kubernetes_pod_name

Run kubectl create -f prometheus-cm.yaml
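One of the denser rules in this configuration is the address rewrite in the kubernetes-service-endpoints and kubernetes-pods jobs, which swaps the discovered port for the one given in the prometheus.io/port annotation. The sketch below imitates that single replace rule in plain Java to show what the regex does. The class `AddressRelabel` and the sample addresses are made up for illustration, and Java's regex engine stands in for the one Prometheus uses.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical stand-in for one relabel rule with action "replace".
final class AddressRelabel {

    // Same pattern as in the config. matches() requires the whole input to match,
    // which mirrors how Prometheus anchors relabel regexes at both ends.
    private static final Pattern RULE = Pattern.compile("([^:]+)(?::\\d+)?;(\\d+)");

    // address: the discovered __address__;
    // portAnnotation: the value of prometheus.io/port ("" when the annotation is absent).
    static String rewrite(String address, String portAnnotation) {
        String joined = address + ";" + portAnnotation; // source label values joined with ';'
        Matcher m = RULE.matcher(joined);
        if (!m.matches()) {
            return address; // no match: the target label is left unchanged
        }
        return m.group(1) + ":" + m.group(2); // replacement "$1:$2"
    }

    public static void main(String[] args) {
        System.out.println(rewrite("10.244.1.7:3000", "8080")); // 10.244.1.7:8080
        System.out.println(rewrite("10.244.1.7", "8080"));      // 10.244.1.7:8080
        System.out.println(rewrite("10.244.1.7:3000", ""));     // 10.244.1.7:3000
    }
}
```

The third call shows why the rule is safe for objects without the annotation: the empty port fails the `(\d+)` group, so the original address is kept.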

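4. Deploy the Prometheus service

Create prometheus-deploy.yaml. Note that the apps/v1beta2 and extensions/v1beta1 API versions used by the manifests in this article were removed in Kubernetes 1.16; on newer clusters use apps/v1, which also requires spec.selector to be set.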

    apiVersion: apps/v1beta2
    kind: Deployment
    metadata:
      labels:
        name: prometheus-deployment
      name: prometheus
      namespace: kube-prometheus
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: prometheus
      template:
        metadata:
          labels:
            app: prometheus
        spec:
          containers:
          - image: prom/prometheus:v2.3.2
            name: prometheus
            command:
            - "/bin/prometheus"
            args:
            - "--config.file=/etc/prometheus/prometheus.yml"
            - "--storage.tsdb.path=/prometheus"
            - "--storage.tsdb.retention=24h"
            ports:
            - containerPort: 9090
              protocol: TCP
            volumeMounts:
            - mountPath: "/prometheus"
              name: data
            - mountPath: "/etc/prometheus"
              name: config-volume
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
              limits:
                cpu: 500m
                memory: 2500Mi
          serviceAccountName: prometheus
          volumes:
          - name: data
            emptyDir: {}
          - name: config-volume
            configMap:
              name: prometheus-config

The Prometheus startup configuration is mounted into the container from the ConfigMap. Run kubectl create -f prometheus-deploy.yaml to create the Deployment.

Create prometheus-svc.yaml

    kind: Service
    apiVersion: v1
    metadata:
      labels:
        app: prometheus
      name: prometheus
      namespace: kube-prometheus
    spec:
      type: NodePort
      ports:
      - port: 9090
        targetPort: 9090
        nodePort: 30003
      selector:
        app: prometheus

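Run kubectl create -f prometheus-svc.yaml to create the Service. It uses NodePort to expose the container's port 9090 on port 30003 of the nodes, so opening http://ip:30003/ in a browser should show the Prometheus web UI. If the configuration file is malformed, kubectl logs podId -n kube-prometheus shows the exact parse error.

5. Deploy the Grafana service

Create grafana-deploy.yaml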

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: grafana-core
      namespace: kube-prometheus
      labels:
        app: grafana
        component: core
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            app: grafana
            component: core
        spec:
          containers:
          - image: grafana/grafana:5.0.0
            name: grafana-core
            imagePullPolicy: IfNotPresent
            resources:
              limits:
                cpu: 100m
                memory: 100Mi
              requests:
                cpu: 100m
                memory: 100Mi
            env:
            - name: GF_AUTH_BASIC_ENABLED
              value: "true"
            - name: GF_AUTH_ANONYMOUS_ENABLED
              value: "false"
            readinessProbe:
              httpGet:
                path: /login
                port: 3000
            volumeMounts:
            - name: grafana-persistent-storage
              mountPath: /var
          volumes:
          - name: grafana-persistent-storage
            emptyDir: {}

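A note here: it is best to create a storage volume and persist the data files and plugins to a local directory. Grafana changed somewhat in 5.1 and later: the container runs as user ID 472, so if you mount a data volume at /var/lib/grafana, manually hand ownership of that directory to 472 with chown -R 472:472, otherwise the container will most likely fail to start.

Run kubectl create -f grafana-deploy.yaml to create the Grafana Deployment; some of its settings can be injected through environment variables. Next, create grafana-svc.yaml to define the matching Service, which exposes the container's port 3000 on node port 30002.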

    apiVersion: v1
    kind: Service
    metadata:
      name: grafana
      namespace: kube-prometheus
      labels:
        app: grafana
        component: core
      annotations:
        prometheus.io/scrape: 'true'
    spec:
      type: NodePort
      ports:
      - port: 3000
        nodePort: 30002
      selector:
        app: grafana
        component: core

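Once kubectl create -f grafana-svc.yaml succeeds, Grafana's web UI is reachable at http://ip:30002/. The annotations in the manifest above control scraping: Prometheus sees the annotation as the label __meta_kubernetes_service_annotation_prometheus_io_scrape=true, and the keep rule on that label decides which targets are scraped. It acts as a filter and avoids scraping targets needlessly.

6. Deploy node-exporter

This component is optional. node-exporter is an official collector, and many other components have third-party collectors of their own. node-exporter simply runs an HTTP server and continuously gathers CPU, memory, disk, network I/O and other data from the Linux host, far more metrics than you might expect. It serves them from an endpoint in the Prometheus format, and Prometheus periodically requests that endpoint to fetch the machine's metrics. Because it monitors the host, in Kubernetes it is best run as a DaemonSet on every node. Create node-exporter-ds.yaml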

    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: node-exporter
      namespace: kube-prometheus
      labels:
        k8s-app: node-exporter
    spec:
      template:
        metadata:
          labels:
            k8s-app: node-exporter
        spec:
          containers:
          - image: prom/node-exporter:latest
            name: node-exporter
            ports:
            - containerPort: 9100
              protocol: TCP
              name: http

Run kubectl create -f node-exporter-ds.yaml to create the DaemonSet, then create node-exporter-svc.yaml to define its Service.

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        k8s-app: node-exporter
      name: node-exporter
      namespace: kube-prometheus
    spec:
      ports:
      - name: http
        port: 9100
        nodePort: 31672
        protocol: TCP
      type: NodePort
      selector:
        k8s-app: node-exporter

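The container port is now exposed on node port 31672. Once the service is up, running curl http://ip:31672/metrics on a host shows the data collected by node-exporter. At this point all the monitoring services are deployed.

Now a brief explanation of the Prometheus startup configuration. Taking prometheus-cm.yaml above as the example, the file contains this section: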

    - job_name: 'kubernetes-service-business-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      metrics_path: /prometheus
      relabel_configs:
      - source_labels: [__meta_kubernetes_endpoints_name]
        action: keep
        regex: (container-console-service)

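Apart from this small section, the file can serve as a general-purpose startup configuration for monitoring k8s. I added this section to monitor the Spring Boot application described below. The application is deployed in k8s, so it has a Deployment and a Service, and a Service naturally produces endpoints, that is, podIp:containerPort. With kubernetes_sd_configs, Prometheus discovers the pod instances behind the application automatically. The number of pods often changes while the system is running, and automatic discovery ensures that every pod instance is monitored. metrics_path defaults to /metrics when omitted, but the metrics data that Spring Boot serves natively does not follow the Prometheus format, so here we set it to /prometheus.

relabel_configs is very useful: it rewrites labels based on the values of source_labels. __meta_kubernetes_endpoints_name is a label provided by Prometheus, and the Spring Boot application's service is named container-console-service. The regex is matched against that label and the action is applied to the result; keep means that targets matching the regex are scraped and all others are dropped.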
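To make the keep action concrete, the following sketch filters a list of endpoint names the way the rule above would. It is an illustration only: `KeepRelabel` and the two extra endpoint names are invented, and Java's `matches()` is used because, like Prometheus relabeling, it requires the regex to match the entire value.

```java
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

// Hypothetical stand-in for a relabel rule with action "keep".
final class KeepRelabel {

    // Returns only the endpoint names whose whole value matches the regex.
    static List<String> keep(List<String> endpointNames, String regex) {
        Pattern p = Pattern.compile(regex);
        return endpointNames.stream()
                .filter(name -> p.matcher(name).matches())
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> discovered = Arrays.asList(
                "container-console-service",
                "container-console-service-v2", // invented name, dropped: not a full match
                "grafana");                     // dropped: does not match at all
        System.out.println(keep(discovered, "(container-console-service)"));
    }
}
```

Because the match is anchored, a service whose name merely starts with container-console-service is not kept; to monitor several services you would widen the regex, for example with an alternation.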

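The Prometheus service above can already monitor basic information about the Kubernetes cluster and its nodes. Sometimes we also need to monitor business metrics, such as how many times an API was called, how many calls failed or threw exceptions, and the average request time. For critical operations in particular, such as failed order processing, we need to detect the problem and alert the person responsible. The following uses a basic Spring Boot 1.5.10 project as an example.

A. Add the Prometheus dependency

io.prometheus:simpleclient_spring_boot:0.1.0

B. Write a utility class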

    import io.prometheus.client.Counter;
    import io.prometheus.client.Summary;
    import org.apache.commons.lang.StringUtils;

    /**
     * some sample data :
     * container_console_request_total{request_name=GetCluster,status=success}
     * container_console_request_total{request_name=GetCluster,status=exception}
     * container_console_request_total{request_name=GetCluster,status=fail}
     * author:SUNJINFU
     * date:2018/8/14
     */
    public class Monitor {

        private static final String STATUS_SUCCESS = "success";
        private static final String STATUS_FAIL = "fail";
        private static final String STATUS_EXCEPTION = "exception";

        private static final Summary RequestTimeSummary = Summary.build("container_console_request_milliseconds",
                "request cost time").labelNames("request_name", "status").register();

        private static final Counter RequestTotalCounter = Counter.build("container_console_request_total",
                "request total").labelNames("request_name", "status").register();

        public static void recordSuccess(String labelName, long milliseconds) {
            recordTimeCost(labelName, STATUS_SUCCESS, milliseconds);
        }

        public static void recordSuccess(String labelName) {
            recordTotal(labelName, STATUS_SUCCESS);
        }

        public static void recordException(String labelName, long milliseconds) {
            recordTimeCost(labelName, STATUS_EXCEPTION, milliseconds);
        }

        public static void recordException(String labelName) {
            recordTotal(labelName, STATUS_EXCEPTION);
        }

        public static void recordFail(String labelName, long milliseconds) {
            recordTimeCost(labelName, STATUS_FAIL, milliseconds);
        }

        public static void recordFail(String labelName) {
            recordTotal(labelName, STATUS_FAIL);
        }

        private static void recordTimeCost(String labelName, String status, long milliseconds) {
            if (StringUtils.isNotEmpty(labelName)) {
                RequestTimeSummary.labels(labelName.trim(), status).observe(milliseconds);
            }
        }

        private static void recordTotal(String labelName, String status) {
            if (StringUtils.isNotEmpty(labelName)) {
                RequestTotalCounter.labels(labelName.trim(), status).inc();
            }
        }
    }
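Monitor relies on two metric types, Counter and Summary. As a rough mental model, and not the real io.prometheus.client implementation, the sketch below shows what each one keeps track of: a counter holds a single growing number, while a summary holds both a count and a running sum of the observed values (exposed by the client as the `_count` and `_sum` series). All class names here are made up for the illustration.

```java
import java.util.concurrent.atomic.DoubleAdder;
import java.util.concurrent.atomic.LongAdder;

// Hypothetical, simplified stand-ins; not the io.prometheus.client classes.
final class MetricSketch {

    // A counter only ever goes up.
    static final class SimpleCounter {
        private final LongAdder value = new LongAdder();
        void inc() { value.increment(); }
        long get() { return value.sum(); }
    }

    // A summary tracks how many observations were made and their total.
    static final class SimpleSummary {
        private final LongAdder count = new LongAdder();
        private final DoubleAdder sum = new DoubleAdder();
        void observe(double v) { count.increment(); sum.add(v); }
        long count() { return count.sum(); }
        double sum() { return sum.sum(); }
    }

    public static void main(String[] args) {
        SimpleCounter requests = new SimpleCounter();
        SimpleSummary latencyMs = new SimpleSummary();
        for (double ms : new double[] {120, 80}) {
            requests.inc();
            latencyMs.observe(ms);
        }
        // Average latency is derived from the two summary values.
        System.out.println(requests.get() + " requests, avg "
                + latencyMs.sum() / latencyMs.count() + " ms");
    }
}
```

This is also why a Summary alone is enough to answer "how many calls" and "how long on average", which the article relies on later when it queries the `_count` series.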

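For the detailed differences between Summary and Counter, see the Prometheus documentation. A Counter simply counts occurrences, which makes it the best fit for counting API calls; a Summary records the number of calls and also how long they took. Call the static methods of Monitor wherever your business code needs monitoring.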

    long t = System.currentTimeMillis(); // start time of the monitored call
    try {
        // business logic
        Monitor.recordSuccess("serviceName_" + action, System.currentTimeMillis() - t);
    } catch (Exception e) {
        Monitor.recordException("serviceName_" + action, System.currentTimeMillis() - t);
    }
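If the same try/catch timing pattern shows up in many places, it can be wrapped once. The following is a minimal sketch of such a wrapper, not part of the original project: `Timed` and its `Recorder` interface are hypothetical names, and in a real application the recorder would delegate to something like the Monitor methods above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

// Hypothetical helper that times a block and reports its outcome.
final class Timed {

    // Receives the metric name, the outcome and the elapsed time.
    interface Recorder {
        void record(String name, String status, long millis);
    }

    // Runs body, then records "success" or "exception" with the duration.
    // The exception is rethrown so the caller's error handling is unchanged.
    static <T> T call(String name, Recorder recorder, Supplier<T> body) {
        long start = System.nanoTime();
        String status = "success";
        try {
            return body.get();
        } catch (RuntimeException e) {
            status = "exception";
            throw e;
        } finally {
            recorder.record(name, status, (System.nanoTime() - start) / 1_000_000);
        }
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        Recorder recorder = (name, status, millis) -> log.add(name + ":" + status);
        int clusters = call("serviceName_GetCluster", recorder, () -> 3);
        System.out.println(clusters + " " + log);
    }
}
```

The `finally` block guarantees exactly one record per call, whichever path the body takes, which is easy to get wrong when the pattern is copied by hand.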

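Static methods like these can be dropped into any code block you want to measure. If you also need to monitor the system's HTTP requests and responses, you can write an interceptor.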

    import io.prometheus.client.Summary;
    import java.lang.reflect.Method;
    import javax.servlet.http.HttpServletRequest;
    import javax.servlet.http.HttpServletResponse;
    import org.springframework.stereotype.Component;
    import org.springframework.web.method.HandlerMethod;
    import org.springframework.web.servlet.handler.HandlerInterceptorAdapter;

    @Component
    public class RequestTimingInterceptor extends HandlerInterceptorAdapter {

        private static final String REQ_PARAM_TIMING = "http_req_start_time";

        private static final Summary responseTimeInMs = Summary.build()
                .name("container_console_http_response_time_milliseconds")
                .labelNames("method", "handler", "status")
                .help("http request completed time in milliseconds")
                .register();

        @Override
        public boolean preHandle(HttpServletRequest request, HttpServletResponse response,
                                 Object handler) {
            // Remember when the request started.
            request.setAttribute(REQ_PARAM_TIMING, System.currentTimeMillis());
            return true;
        }

        @Override
        public void afterCompletion(HttpServletRequest request, HttpServletResponse response,
                                    Object handler, Exception ex) {
            Long timingAttr = (Long) request.getAttribute(REQ_PARAM_TIMING);
            if (timingAttr == null) {
                return; // preHandle did not run for this request, nothing to measure
            }
            long completedTime = System.currentTimeMillis() - timingAttr;
            String handlerLabel = handler.toString();
            if (handler instanceof HandlerMethod) {
                // Use ControllerClass_methodName as a compact handler label.
                Method method = ((HandlerMethod) handler).getMethod();
                handlerLabel = method.getDeclaringClass().getSimpleName() + "_" + method.getName();
            }
            responseTimeInMs.labels(request.getMethod(), handlerLabel,
                    Integer.toString(response.getStatus())).observe(completedTime);
        }
    }

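Then register this interceptor with Spring Boot.

C. Enable Prometheus

Add the @EnablePrometheusEndpoint annotation to the Spring Boot application class so that the application exposes the /prometheus endpoint. The source code shows that this endpoint is implemented differently from Spring's default endpoints: the defaults all return JSON, which Prometheus cannot scrape. Also add management.security.enabled=false to the Spring Boot configuration; without it, requests to /prometheus may return 401 Unauthorized or similar errors. Start the application, trigger the instrumented code paths, and open /prometheus on the application to see the collected metrics.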
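For readers who have not seen it, the text format that Prometheus scrapes is line based rather than JSON. The sketch below renders one counter sample in that format to show its shape. It is a hand-written illustration, not how the client library produces the endpoint; `TextFormatSketch` is a made-up name, and the metric and labels are borrowed from the Monitor class above.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical renderer for illustration; the real endpoint is produced by the client library.
final class TextFormatSketch {

    // Escapes backslash, double quote and newline inside a label value.
    static String escape(String v) {
        return v.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    // Renders the HELP line, the TYPE line and one sample line for a counter.
    static String renderCounter(String name, String help, Map<String, String> labels, double value) {
        String labelPart = labels.entrySet().stream()
                .map(e -> e.getKey() + "=\"" + escape(e.getValue()) + "\"")
                .collect(Collectors.joining(","));
        return "# HELP " + name + " " + help + "\n"
                + "# TYPE " + name + " counter\n"
                + name + "{" + labelPart + "} " + value + "\n";
    }

    public static void main(String[] args) {
        Map<String, String> labels = new LinkedHashMap<>();
        labels.put("request_name", "GetCluster");
        labels.put("status", "success");
        System.out.print(renderCounter("container_console_request_total", "request total", labels, 3));
    }
}
```

Each distinct combination of label values becomes its own sample line, which is why label values such as request_name should come from a small, bounded set.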

D. View the collected data

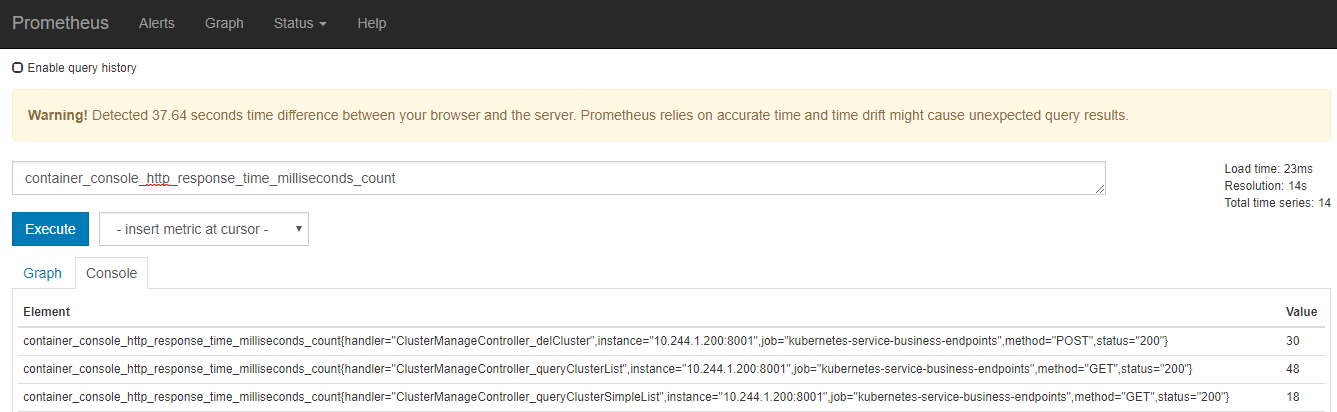

First use Prometheus's built-in graphs to look at the collected data, starting with the data from the Spring Boot instrumentation.

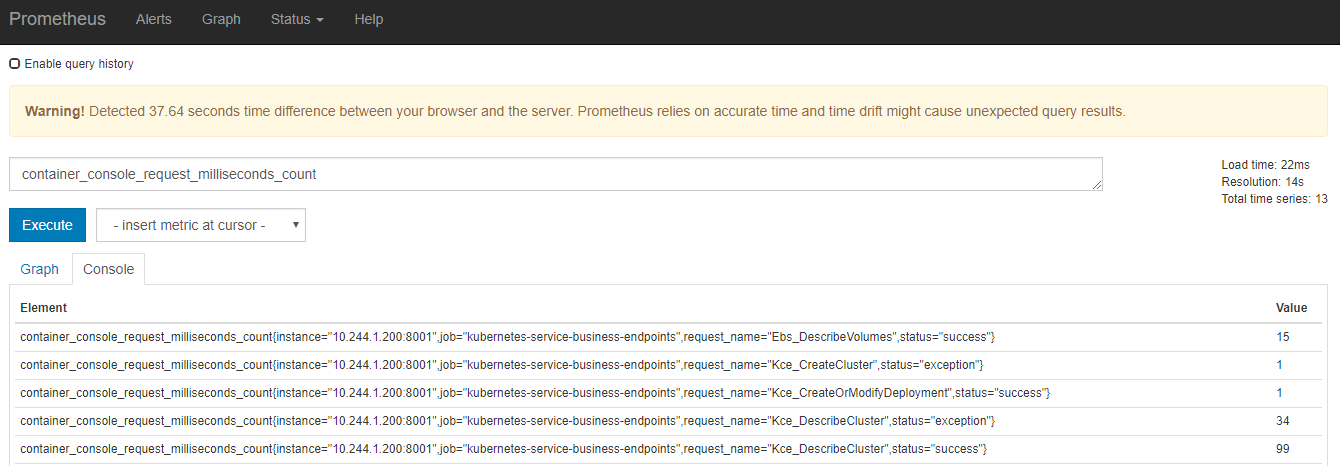

Next, look at the data recorded by the Monitor static methods.

E. Configure alerting

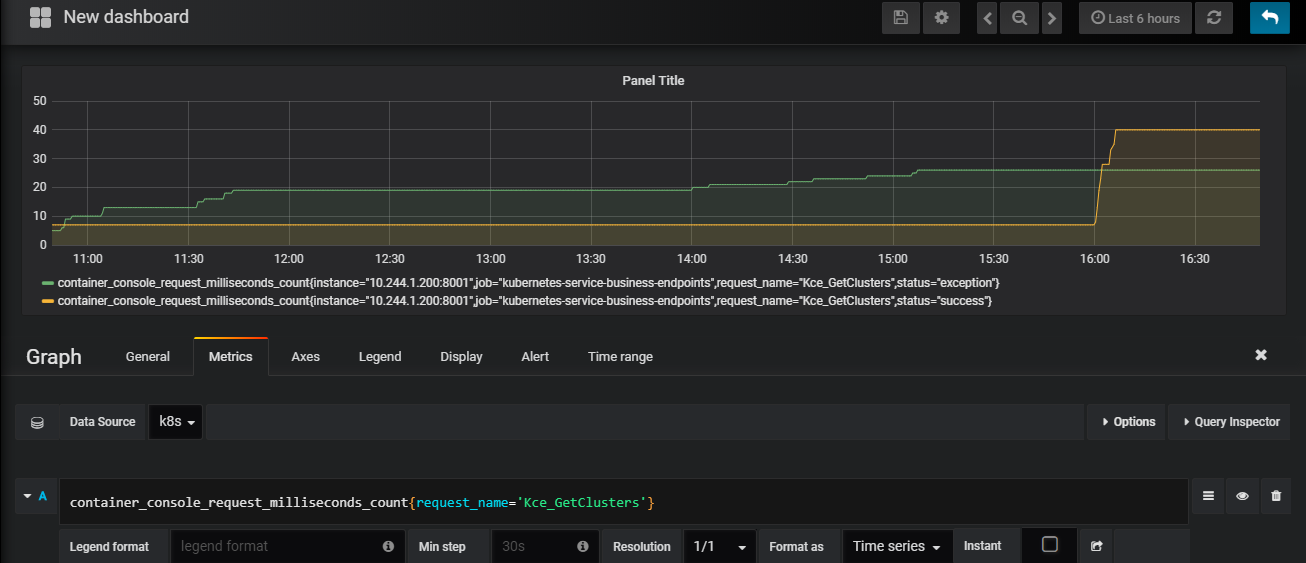

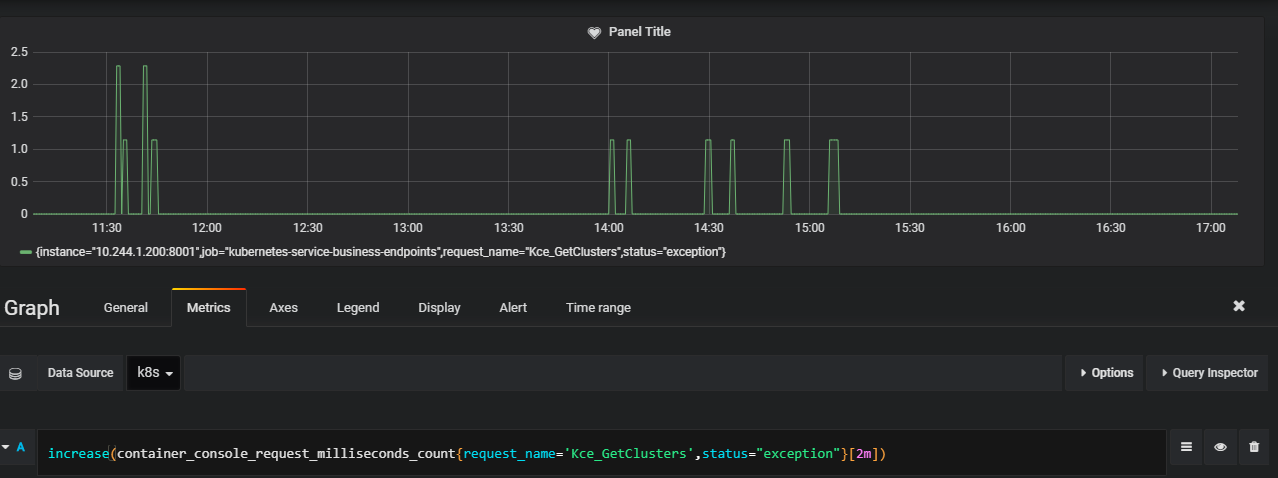

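Prometheus's graphing is not as good as Grafana's, so we now use Grafana to view the monitoring data and to set alert rules. In production, alerts should be based on indicators such as how stable the business is; this is purely a demonstration. Taking the Kce_GetClusters request as the example, look at the metric container_console_request_milliseconds_count{request_name="Kce_GetClusters"}

The graph above shows the number of requests that ended in an exception and the number that succeeded, so we can alert on the exceptional calls. Switch to the Alert panel to configure this. The alert cannot be based directly on the metric's value, because the value behaves as a Counter: it only ever increases and is always a cumulative number. The rule should instead be based on its rate of growth or on its increase over a period.

With a rule like the one above, an alert fires if a single exception occurs within 2 minutes; in other words, it fires whenever the expression's value is greater than 0. The time window can be set freely, depending on how important the business scenario is.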
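The reasoning behind alerting on growth rather than on the raw value can be sketched in a few lines. The code below computes how much a counter grew across the samples in a window and alerts when that growth is above zero. It is a simplified illustration under stated assumptions: `CounterIncrease` is a made-up class, the sample values are invented, and unlike Prometheus's own increase() it does not extrapolate to the window boundaries.

```java
// Hypothetical helper that mimics alerting on a counter's growth within a window.
final class CounterIncrease {

    // samples: counter readings in time order within the window.
    static double increase(double[] samples) {
        double total = 0;
        for (int i = 1; i < samples.length; i++) {
            double delta = samples[i] - samples[i - 1];
            // A drop means the process restarted and the counter began again from zero,
            // so the new reading itself is the growth since the restart.
            total += delta >= 0 ? delta : samples[i];
        }
        return total;
    }

    // Fires when at least one new event was counted inside the window.
    static boolean shouldAlert(double[] windowSamples) {
        return increase(windowSamples) > 0;
    }

    public static void main(String[] args) {
        double[] quiet = {5, 5, 5};  // cumulative value is 5, but nothing new happened
        double[] failing = {5, 5, 6}; // one new exception inside the window
        System.out.println(shouldAlert(quiet) + " " + shouldAlert(failing));
    }
}
```

The `quiet` window shows the point made above: a threshold on the raw value would keep firing forever after the fifth exception, while a threshold on the increase stays silent until something new goes wrong.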