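1. Background

Stock Kubernetes ships with an integrated InfluxDB + Heapster + Grafana monitoring stack, which has certain limitations.

This article describes a general way to deploy the Prometheus monitoring system in a Kubernetes cluster (core components only).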

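2. Basic Steps

2.1 Prepare the images

prom/prometheus:v1.7.0 [main monitoring server]

prom/alertmanager:v0.14.0 [alert manager]

prom/node-exporter:v0.14.0 [node metrics collector]

quay.io/coreos/kube-state-metrics:v1.3.0 [Kubernetes state collector]

k8s.gcr.io/addon-resizer:1.7 [sidecar for the Kubernetes state collector]

2.2 Create a namespace (dedicated to the monitoring Pods)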

# kubectl create namespace monitoring

[Alternatively, create it from a YAML file]

# vim monitoring_namespace.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: monitoring

# kubectl create -f monitoring_namespace.yaml

2.3 Create the Prometheus core ConfigMap

# vim prometheus_core_config_map.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  creationTimestamp: null
  name: prometheus-core
  namespace: monitoring
data:
  prometheus.yaml: |
    global:
      scrape_interval: 10s
      scrape_timeout: 10s
      evaluation_interval: 10s
    rule_files:
      - "/etc/prometheus-rules/*.rules"
    scrape_configs:
      # Data From Kubelet Daemon
      # https://github.com/prometheus/prometheus/blob/master/documentation/examples/prometheus-kubernetes.yml#L37
      - job_name: 'kubernetes-node-kubelet'
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        kubernetes_sd_configs:
          - role: node
        relabel_configs:
          - source_labels: [__address__]
            regex: '(.*):10250'
            replacement: '${1}:10255'
            target_label: __address__
      # Data From EndPoints (Service EndPoints)
      # https://github.com/prometheus/prometheus/blob/master/documentation/examples/prometheus-kubernetes.yml#L79
      - job_name: 'kubernetes-endpoints'
        kubernetes_sd_configs:
          - role: endpoints
        relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
            action: replace
            target_label: __scheme__
            regex: (https?)
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
            action: replace
            target_label: __address__
            regex: (.+)(?::\d+);(\d+)
            replacement: $1:$2
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            action: replace
            target_label: kubernetes_name
      # No Data Now, For ClusterIP ?
      # https://github.com/prometheus/prometheus/blob/master/documentation/examples/prometheus-kubernetes.yml#L119
      - job_name: 'kubernetes-services'
        metrics_path: /probe
        params:
          module: [http_2xx]
        kubernetes_sd_configs:
          - role: service
        relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
            action: keep
            regex: true
          - source_labels: [__address__]
            target_label: __param_target
          - target_label: __address__
            replacement: blackbox
          - source_labels: [__param_target]
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            target_label: kubernetes_name
      # Data From Pods
      # https://github.com/prometheus/prometheus/blob/master/documentation/examples/prometheus-kubernetes.yml#L156
      - job_name: 'kubernetes-pods'
        kubernetes_sd_configs:
          - role: pod
        relabel_configs:
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
            action: replace
            regex: (.+):(?:\d+);(\d+)
            replacement: ${1}:${2}
            target_label: __address__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_pod_name]
            action: replace
            target_label: kubernetes_pod_name
          - source_labels: [__meta_kubernetes_pod_container_port_number]
            action: keep
            regex: \d{4}
      # Data From cAdvisor
      - job_name: 'kubernetes-cadvisor'
        kubernetes_sd_configs:
          - role: node
        relabel_configs:
          - source_labels: [__meta_kubernetes_node_address_InternalIP]
            target_label: __address__
            regex: (.*)
            replacement: $1:4194

# kubectl create -f prometheus_core_config_map.yaml
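The `replace` relabel rules in this ConfigMap rewrite `__address__` before each scrape. As a rough illustration only, the sketch below models that behaviour in Python under a few assumptions: source label values are joined with `;`, the regex must match the whole joined string, and `$1` / `${1}` refer to capture groups. The function name `relabel_replace` and the sample IP addresses are hypothetical, and this is not Prometheus's own implementation.

```python
import re


def relabel_replace(labels, source_labels, regex, replacement, target_label, separator=";"):
    """Simplified model of a Prometheus `replace` relabel rule (illustration only)."""
    # Values of the source labels are concatenated with the separator.
    joined = separator.join(labels.get(name, "") for name in source_labels)
    # The regex is anchored at both ends, so the whole string must match.
    match = re.fullmatch(regex, joined)
    if match is None:
        return dict(labels)  # no match: the target keeps its labels unchanged
    # Translate $1 / ${1} group references into Python's \g<1> syntax.
    template = re.sub(r"\$\{?(\d+)\}?", r"\\g<\1>", replacement)
    updated = dict(labels)
    updated[target_label] = match.expand(template)
    return updated


# Kubelet job: move the target from port 10250 to the read-only port 10255.
kubelet = relabel_replace(
    {"__address__": "10.0.0.5:10250"},
    ["__address__"], r"(.*):10250", "${1}:10255", "__address__",
)

# Endpoints job: swap in the port given by the prometheus.io/port annotation.
PORT_LABEL = "__meta_kubernetes_service_annotation_prometheus_io_port"
endpoint = relabel_replace(
    {"__address__": "10.0.0.7:8080", PORT_LABEL: "9100"},
    ["__address__", PORT_LABEL], r"(.+)(?::\d+);(\d+)", "$1:$2", "__address__",
)

print(kubelet["__address__"], endpoint["__address__"])
```

When the annotation is absent, the joined string ends in `;` with no digits after it, the regex does not match, and the discovered address is kept as-is.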

2.4 Create the Prometheus rules ConfigMap

# vim prometheus_rules_config_map.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  creationTimestamp: null
  name: prometheus-rules
  namespace: monitoring
data:
  cpu-usage.rules: |
    ALERT NodeCPUUsage
      IF (100 - (avg by (instance) (irate(node_cpu{name="node-exporter",mode="idle"}[5m])) * 100)) > 75
      FOR 2m
      LABELS {
        severity="page"
      }
      ANNOTATIONS {
        SUMMARY = "{{$labels.instance}}: High CPU usage detected",
        DESCRIPTION = "{{$labels.instance}}: CPU usage is above 75% (current value is: {{ $value }})"
      }
  instance-availability.rules: |
    ALERT InstanceDown
      IF up == 0
      FOR 1m
      LABELS { severity = "page" }
      ANNOTATIONS {
        summary = "Instance {{ $labels.instance }} down",
        description = "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 1 minute.",
      }

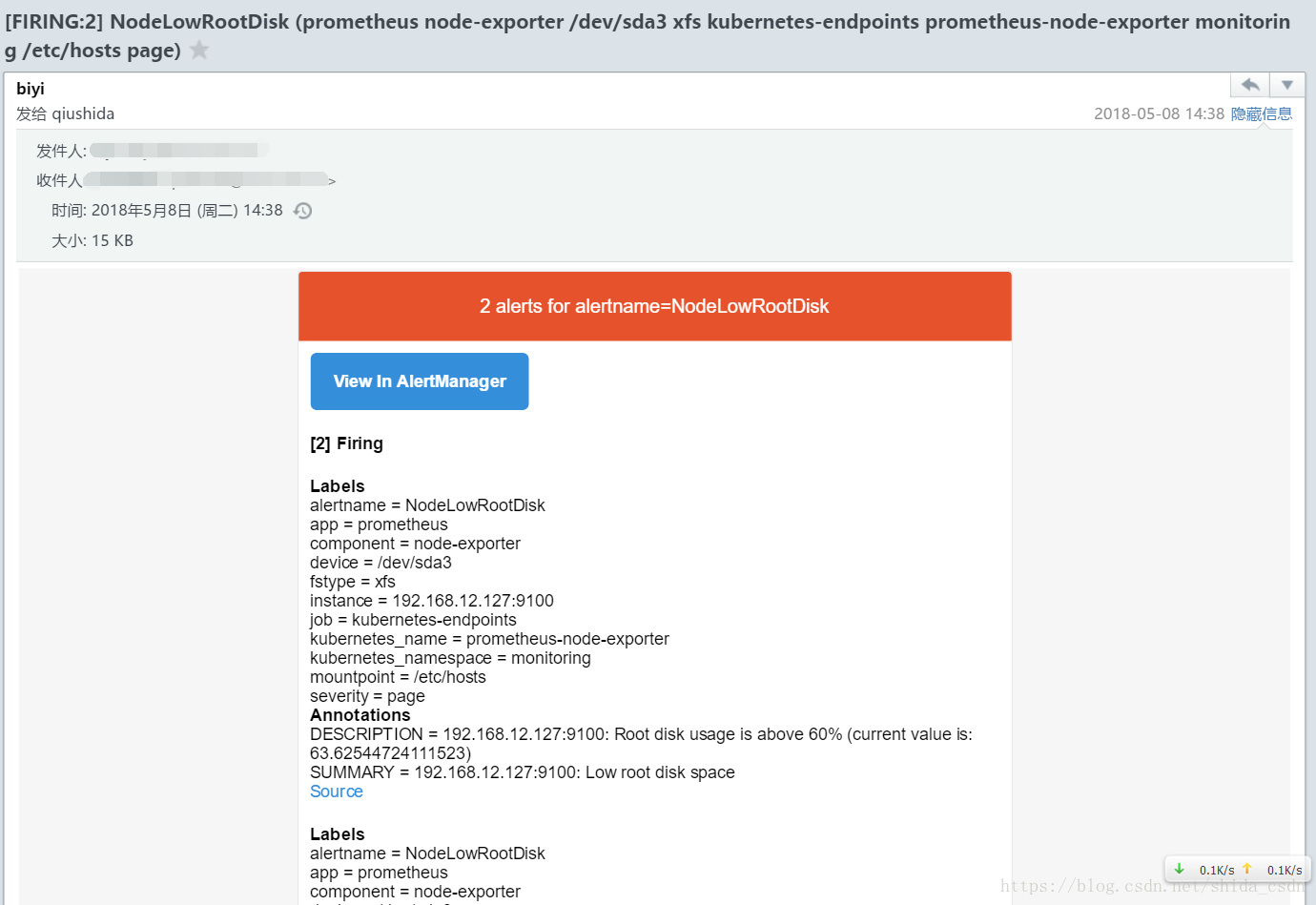

  low-disk-space.rules: |
    ALERT NodeLowRootDisk
      IF ((node_filesystem_size{mountpoint="/etc/hosts"} - node_filesystem_free{mountpoint="/etc/hosts"} ) / node_filesystem_size{mountpoint="/etc/hosts"} * 100) > 75
      FOR 2m
      LABELS {
        severity="page"
      }
      ANNOTATIONS {
        SUMMARY = "{{$labels.instance}}: Low root disk space",
        DESCRIPTION = "{{$labels.instance}}: Root disk usage is above 75% (current value is: {{ $value }})"
      }
    ALERT NodeLowDataDisk
      IF ((node_filesystem_size{mountpoint="/data-disk"} - node_filesystem_free{mountpoint="/data-disk"} ) / node_filesystem_size{mountpoint="/data-disk"} * 100) > 75
      FOR 2m
      LABELS {
        severity="page"
      }
      ANNOTATIONS {
        SUMMARY = "{{$labels.instance}}: Low data disk space",
        DESCRIPTION = "{{$labels.instance}}: Data disk usage is above 75% (current value is: {{ $value }})"
      }
  mem-usage.rules: |
    ALERT NodeSwapUsage
      IF (((node_memory_SwapTotal-node_memory_SwapFree)/node_memory_SwapTotal)*100) > 75
      FOR 2m
      LABELS {
        severity="page"
      }
      ANNOTATIONS {
        SUMMARY = "{{$labels.instance}}: Swap usage detected",
        DESCRIPTION = "{{$labels.instance}}: Swap usage is above 75% (current value is: {{ $value }})"
      }
    ALERT NodeMemoryUsage
      IF (((node_memory_MemTotal-node_memory_MemFree-node_memory_Cached)/(node_memory_MemTotal)*100)) > 75
      FOR 2m
      LABELS {
        severity="page"
      }
      ANNOTATIONS {
        SUMMARY = "{{$labels.instance}}: High memory usage detected",
        DESCRIPTION = "{{$labels.instance}}: Memory usage is above 75% (current value is: {{ $value }})"
      }

# kubectl create -f prometheus_rules_config_map.yaml
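The rules above all follow the same pattern: compute a usage percentage, compare it with 75, and require the condition to hold for the `FOR` duration before the alert fires. The Python sketch below restates that arithmetic and the pending/firing transition as a simplified model. The function names and sample numbers are made up for illustration, and the state logic assumes one evaluation per fixed interval.

```python
def usage_percent(total, free):
    """Used share of a resource in percent (same shape as the disk and swap rules)."""
    return (total - free) / total * 100


def memory_usage_percent(total, free, cached):
    """Mirrors the NodeMemoryUsage expression: cached memory is not counted as used."""
    return (total - free - cached) / total * 100


def cpu_usage_percent(idle_rates):
    """100 minus the average idle rate (idle seconds per second) across CPUs."""
    return 100 - sum(idle_rates) / len(idle_rates) * 100


def alert_states(values, threshold, for_seconds, interval_seconds):
    """Return 'inactive', 'pending' or 'firing' for each successive evaluation."""
    states = []
    held_for = None  # how long the condition has been continuously true
    for value in values:
        if value > threshold:
            held_for = 0 if held_for is None else held_for + interval_seconds
            states.append("firing" if held_for >= for_seconds else "pending")
        else:
            held_for = None  # condition cleared: the timer starts over
            states.append("inactive")
    return states


# Hypothetical root-disk readings in percent, one evaluation every 60 seconds.
readings = [70, 80, 82, 85, 60, 90]
print(alert_states(readings, threshold=75, for_seconds=120, interval_seconds=60))
```

A short spike above the threshold therefore only produces a pending alert; nothing is sent to Alertmanager until the condition has held for the full `FOR` window.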

2.5 Create the Prometheus alert ConfigMap

# vim prometheus_alert_config_map.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: prometheus-alertmanager
  namespace: monitoring
data:
  config.yml: |-
    global:
      resolve_timeout: 5m
      smtp_smarthost: '<SMTP_IP>:<PORT>'
      smtp_from: '[email protected]'
      smtp_require_tls: false
    route:
      receiver: live-monitoring
      group_wait: 30s
      group_interval: 5m
      repeat_interval: 4h
      group_by: [alertname]
    receivers:
      - name: live-monitoring
        email_configs:
          - to: '[email protected]'
          - to: '[email protected]'

# kubectl create -f prometheus_alert_config_map.yaml
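With `group_by: [alertname]`, alerts that share an alert name are bundled into one notification, and `group_wait: 30s` delays the first email for a new group so that related alerts can arrive together. The Python sketch below is a simplified model of that idea, not Alertmanager's implementation. The `received_at` field, the function names, and the sample alerts are hypothetical.

```python
from collections import defaultdict


def group_alerts(alerts, group_by):
    """Bucket alerts by the values of the labels listed in group_by."""
    groups = defaultdict(list)
    for alert in alerts:
        key = tuple(alert["labels"].get(name, "") for name in group_by)
        groups[key].append(alert)
    return dict(groups)


def first_notification_time(group, group_wait_seconds):
    """Earliest time the first notification for a new group would be sent."""
    return min(alert["received_at"] for alert in group) + group_wait_seconds


# Hypothetical alerts; received_at is seconds since an arbitrary start.
alerts = [
    {"labels": {"alertname": "NodeCPUUsage", "instance": "10.0.0.1:9100"}, "received_at": 0},
    {"labels": {"alertname": "NodeCPUUsage", "instance": "10.0.0.2:9100"}, "received_at": 12},
    {"labels": {"alertname": "InstanceDown", "instance": "10.0.0.3:9100"}, "received_at": 20},
]

groups = group_alerts(alerts, ["alertname"])
for key, members in groups.items():
    print(key, len(members), first_notification_time(members, group_wait_seconds=30))
```

In this model the two `NodeCPUUsage` alerts end up in a single email, while `InstanceDown` forms its own group with its own timer.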

2.6 Create the Prometheus instance

# vim prometheus_deployment.yaml

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: prometheus
rules:
  - apiGroups: [""]
    resources:
      - nodes
      - services
      - endpoints
      - pods
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources:
      - configmaps
    verbs: ["get"]
  - nonResourceURLs: ["/metrics"]
    verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus-k8s
  namespace: monitoring
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
  - kind: ServiceAccount
    name: prometheus-k8s
    namespace: monitoring
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: prometheus-core
  namespace: monitoring
  labels:
    app: prometheus
    component: core
spec:
  replicas: 1
  template:
    metadata:
      name: prometheus-main
      labels:
        app: prometheus
        component: core
    spec:
      serviceAccountName: prometheus-k8s
      containers:
        - name: prometheus
          image: prom/prometheus:v1.7.0
          args:
            - '-storage.local.retention=12h'
            - '-storage.local.memory-chunks=500000'
            - '-config.file=/etc/prometheus/prometheus.yaml'
            - '-alertmanager.url=http://127.0.0.1:9093/'
          ports:
            - name: web-ui
              containerPort: 9090
          resources:
            requests:
              cpu: 500m
              memory: 500M
            limits:
              cpu: 500m
              memory: 500M
          volumeMounts:
            - name: config-volume
              mountPath: /etc/prometheus
            - name: rules-volume
              mountPath: /etc/prometheus-rules
        - image: prom/alertmanager:v0.14.0
          name: alertmanager
          args:
            - "--config.file=/etc/alertmanager/config.yml"
            - "--storage.path=/alertmanager"
          ports:
            - containerPort: 9093
              protocol: TCP
              name: http
          volumeMounts:
            - name: alertmanager-config-volume
              mountPath: /etc/alertmanager
          resources:
            requests:
              cpu: 50m
              memory: 50Mi
            limits:
              cpu: 200m
              memory: 200Mi
      volumes:
        - name: config-volume
          configMap:
            name: prometheus-core
        - name: rules-volume
          configMap:
            name: prometheus-rules
        - name: alertmanager-config-volume
          configMap:
            name: prometheus-alertmanager
---
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: monitoring
  labels:
    app: prometheus
    component: core
  annotations:
    prometheus.io/scrape: 'true'
spec:
  type: NodePort
  ports:
    - port: 9090
      protocol: TCP
      name: core-web-ui
      nodePort: 30122
    - port: 9093
      protocol: TCP
      name: alert-web-ui
      nodePort: 30123
  selector:
    app: prometheus
    component: core

# kubectl create -f prometheus_deployment.yaml
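The ClusterRole in this manifest decides which API objects the `prometheus-k8s` service account may read for service discovery. As a rough aid for reasoning about it, the Python sketch below checks a request against a list of rules. It is a deliberately simplified model: it ignores `nonResourceURLs`, `resourceNames`, and subresources, and the function name `is_allowed` is hypothetical.

```python
def is_allowed(rules, api_group, resource, verb):
    """Return True if any rule grants the verb on the resource in the API group."""
    for rule in rules:
        groups = rule.get("apiGroups", [])
        resources = rule.get("resources", [])
        verbs = rule.get("verbs", [])
        if (
            (api_group in groups or "*" in groups)
            and (resource in resources or "*" in resources)
            and (verb in verbs or "*" in verbs)
        ):
            return True
    return False


# Resource rules copied from the "prometheus" ClusterRole above.
PROMETHEUS_RULES = [
    {"apiGroups": [""], "resources": ["nodes", "services", "endpoints", "pods"],
     "verbs": ["get", "list", "watch"]},
    {"apiGroups": [""], "resources": ["configmaps"], "verbs": ["get"]},
]

print(is_allowed(PROMETHEUS_RULES, "", "pods", "watch"))       # discovery of pods
print(is_allowed(PROMETHEUS_RULES, "", "configmaps", "list"))  # only "get" is granted
```

Rules are purely additive, so a request is permitted as soon as one rule matches it and is rejected when none does.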

2.7 Enable Node Exporter (scraped via Endpoints)

# vim prometheus_node_exporter.yaml

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: prometheus-node-exporter
  namespace: monitoring
  labels:
    app: prometheus
    component: node-exporter
spec:
  template:
    metadata:
      name: prometheus-node-exporter
      labels:
        app: prometheus
        component: node-exporter
    spec:
      containers:
        - image: prom/node-exporter:v0.14.0
          name: prometheus-node-exporter
          ports:
            - name: prom-node-exp
              containerPort: 9100
              hostPort: 9100
      hostNetwork: true
      hostPID: true
---
apiVersion: v1
kind: Service
metadata:
  annotations:
    prometheus.io/scrape: 'true'
  name: prometheus-node-exporter
  namespace: monitoring
  labels:
    app: prometheus
    component: node-exporter
spec:
  clusterIP: None
  ports:
    - name: prometheus-node-exporter
      port: 9100
      protocol: TCP
  selector:
    app: prometheus
    component: node-exporter
  type: ClusterIP

# kubectl create -f prometheus_node_exporter.yaml
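Once the DaemonSet is running, each node serves its metrics as plain text on port 9100, and Prometheus scrapes that text through the Service endpoints. To make the format concrete, the Python sketch below parses a few sample lines into (name, labels, value) tuples. It is a minimal parser written for illustration: it ignores timestamps and escaped quotes, and the sample text is made up rather than captured from a real node.

```python
import re

# metric_name{label="value",...} value
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def parse_samples(text):
    """Parse Prometheus text-format lines into (name, labels, value) tuples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        match = SAMPLE_RE.match(line)
        if match is None:
            continue  # not a sample line this simple parser understands
        name, raw_labels, value = match.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))
    return samples


# Illustrative excerpt in the style of a node-exporter /metrics page.
SAMPLE_TEXT = """\
# TYPE node_cpu counter
node_cpu{cpu="cpu0",mode="idle"} 1000.5
node_cpu{cpu="cpu0",mode="user"} 20.25
node_memory_MemTotal 8.0e+09
"""

for sample in parse_samples(SAMPLE_TEXT):
    print(sample)
```

The `mode="idle"` series of `node_cpu` is the one the CPU alert rule in section 2.4 is built on.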

2.8 Enable the Kubernetes state exporter (scraped via Endpoints)

# vim prometheus_kube_stat_exporter.yaml

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: kube-state-metrics
rules:
  - apiGroups: [""]
    resources:
      - configmaps
      - secrets
      - nodes
      - pods
      - services
      - resourcequotas
      - replicationcontrollers
      - limitranges
      - persistentvolumeclaims
      - persistentvolumes
      - namespaces
      - endpoints
    verbs: ["list", "watch"]
  - apiGroups: ["extensions"]
    resources:
      - daemonsets
      - deployments
      - replicasets
    verbs: ["list", "watch"]
  - apiGroups: ["apps"]
    resources:
      - statefulsets
    verbs: ["list", "watch"]
  - apiGroups: ["batch"]
    resources:
      - cronjobs
      - jobs
    verbs: ["list", "watch"]
  - apiGroups: ["autoscaling"]
    resources:
      - horizontalpodautoscalers
    verbs: ["list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  namespace: monitoring
  name: kube-state-metrics-resizer
rules:
  - apiGroups: [""]
    resources:
      - pods
    verbs: ["get"]
  - apiGroups: ["extensions"]
    resources:
      - deployments
    resourceNames: ["kube-state-metrics"]
    verbs: ["get", "update"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-state-metrics
  namespace: monitoring
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
  - kind: ServiceAccount
    name: kube-state-metrics
    namespace: monitoring
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: kube-state-metrics
  namespace: monitoring
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kube-state-metrics-resizer
subjects:
  - kind: ServiceAccount
    name: kube-state-metrics
    namespace: monitoring
---
apiVersion: apps/v1beta1
kind: Deployment
metadata:
  name: kube-state-metrics
  namespace: monitoring
spec:
  selector:
    matchLabels:
      k8s-app: kube-state-metrics
  replicas: 1
  template:
    metadata:
      labels:
        k8s-app: kube-state-metrics
    spec:
      serviceAccountName: kube-state-metrics
      containers:
        - name: kube-state-metrics
          image: quay.io/coreos/kube-state-metrics:v1.3.0
          ports:
            - name: http-metrics
              containerPort: 8080
            - name: telemetry
              containerPort: 8081
          readinessProbe:
            httpGet:
              path: /healthz
              port: 8080
            initialDelaySeconds: 5
            timeoutSeconds: 5
        - name: addon-resizer
          image: k8s.gcr.io/addon-resizer:1.7
          resources:
            limits:
              cpu: 100m
              memory: 30Mi
            requests:
              cpu: 100m
              memory: 30Mi
          env:
            - name: MY_POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: MY_POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          command:
            - /pod_nanny
            - --container=kube-state-metrics
            - --cpu=100m
            - --extra-cpu=1m
            - --memory=100Mi
            - --extra-memory=2Mi
            - --threshold=5
            - --deployment=kube-state-metrics
---
apiVersion: v1
kind: Service
metadata:
  name: kube-state-metrics
  namespace: monitoring
  labels:
    k8s-app: kube-state-metrics
  annotations:
    prometheus.io/scrape: 'true'
spec:
  ports:
    - name: http-metrics
      port: 8080
      targetPort: http-metrics
      protocol: TCP
    - name: telemetry
      port: 8081
      targetPort: telemetry
      protocol: TCP
  selector:
    k8s-app: kube-state-metrics

# kubectl create -f prometheus_kube_stat_exporter.yaml
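The `addon-resizer` sidecar scales the resources of the `kube-state-metrics` container with cluster size. Assuming the usual reading of the `pod_nanny` flags (a base amount plus a per-node extra, and an update only when the current value deviates by more than `--threshold` percent), the Python sketch below works through the numbers from this manifest. The function names are hypothetical and the model is an approximation, not the tool's actual code.

```python
def expected_resources(node_count, base_cpu_m=100, extra_cpu_m=1,
                       base_mem_mi=100, extra_mem_mi=2):
    """Base amount plus a per-node increment, using the flag values from the manifest."""
    return {
        "cpu_m": base_cpu_m + extra_cpu_m * node_count,        # millicores
        "memory_mi": base_mem_mi + extra_mem_mi * node_count,  # MiB
    }


def needs_update(current, expected, threshold_percent=5):
    """True when the current value deviates from the expected one by more than the threshold."""
    return abs(current - expected) / expected * 100 > threshold_percent


# Hypothetical cluster sizes.
for nodes in (3, 10, 50):
    print(nodes, expected_resources(nodes))
```

Under these assumptions a 10-node cluster would target 110m CPU and 120Mi memory for `kube-state-metrics`, and small changes in node count would not trigger a rewrite of the Deployment.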

2.9 Open the Prometheus web UI

Open in a browser: http://<any k8s Node IP>:30122/targets

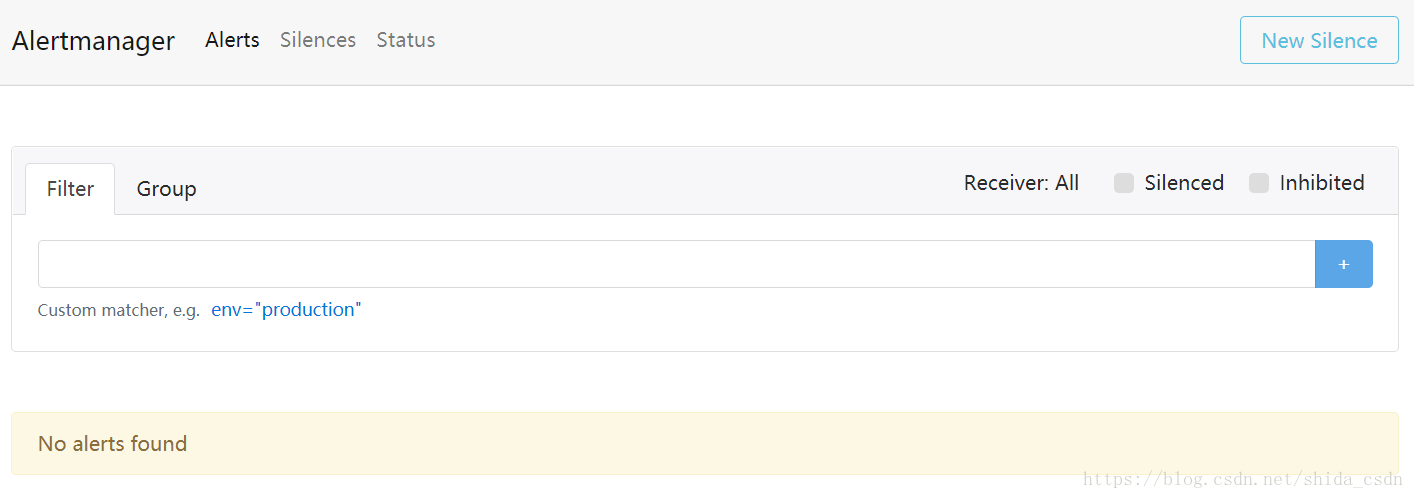
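Target health can also be checked from a script through the Prometheus HTTP API on the same NodePort. The Python sketch below builds a `/api/v1/query` URL and extracts the instances whose `up` value is 0 from a response body. The node IP and the sample response are placeholders for illustration, and no network request is made here.

```python
import json
from urllib.parse import urlencode


def query_url(node_ip, promql, node_port=30122):
    """Build an instant-query URL against the NodePort exposed in section 2.6."""
    return f"http://{node_ip}:{node_port}/api/v1/query?{urlencode({'query': promql})}"


def down_instances(response_text):
    """Return the instance labels of all series in an `up` query whose value is 0."""
    body = json.loads(response_text)
    if body.get("status") != "success":
        raise ValueError("query failed: %s" % body.get("error", "unknown error"))
    return [
        series["metric"].get("instance", "")
        for series in body["data"]["result"]
        if float(series["value"][1]) == 0  # sample values arrive as strings
    ]


# Made-up response in the shape returned for the query `up`.
SAMPLE_RESPONSE = json.dumps({
    "status": "success",
    "data": {"resultType": "vector", "result": [
        {"metric": {"__name__": "up", "instance": "10.0.0.1:9100"}, "value": [1500000000.0, "1"]},
        {"metric": {"__name__": "up", "instance": "10.0.0.2:9100"}, "value": [1500000000.0, "0"]},
    ]},
})

print(query_url("192.0.2.10", "up"))
print(down_instances(SAMPLE_RESPONSE))
```

In a real check, the URL would be fetched with any HTTP client and the body passed to `down_instances`; an empty list corresponds to every target showing UP on the targets page.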

2.10 Open the Alertmanager web UI

Open in a browser: http://<any k8s Node IP>:30123

2.11 What the alert email looks like