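data(churn) loads the bundled training set churnTrain and test set churnTest.

Build decision tree models with id3, cart, C4.5, and C5.0, evaluate each with a confusion matrix, and see which model suits the churn data best.

Background: a comparison of the ID3, C4.5, and CART decision tree algorithms (link)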

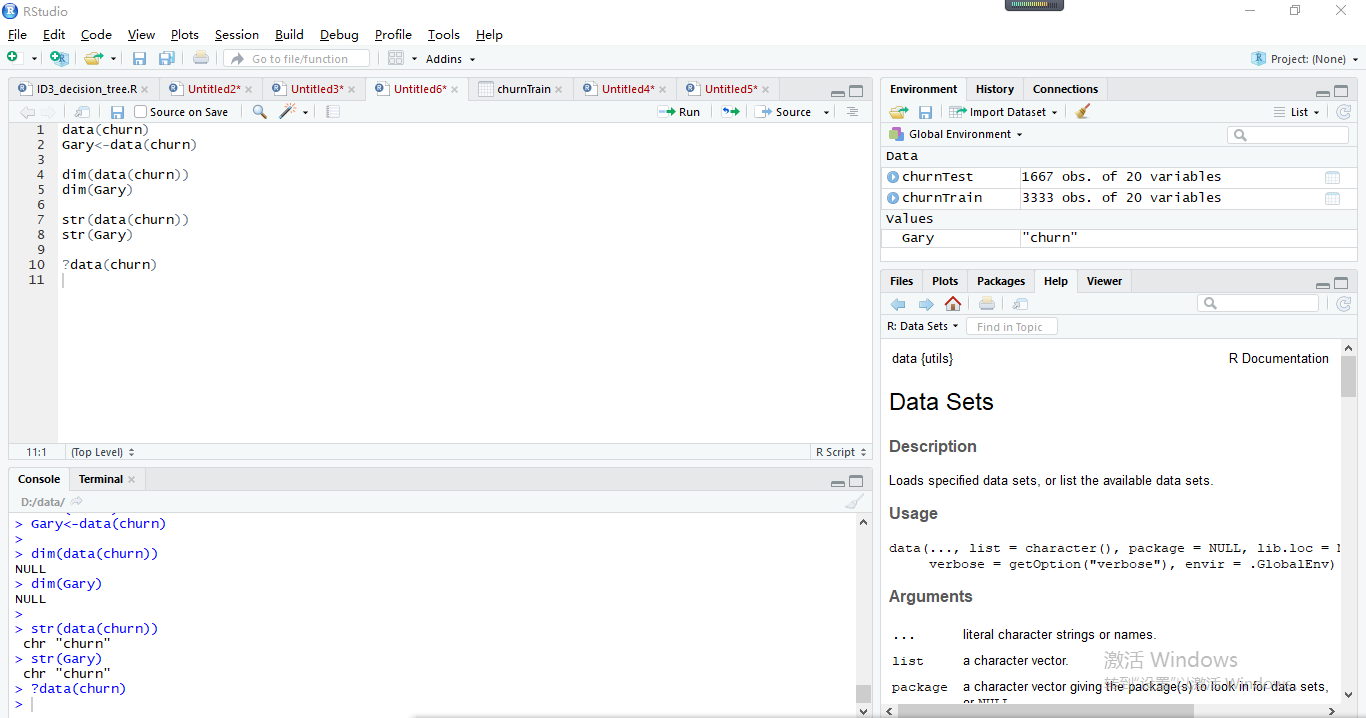

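data(churn) loads a training set that comes bundled with R (through the C50 package, in the version used here), and the data(churn) call itself is quite unusual.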

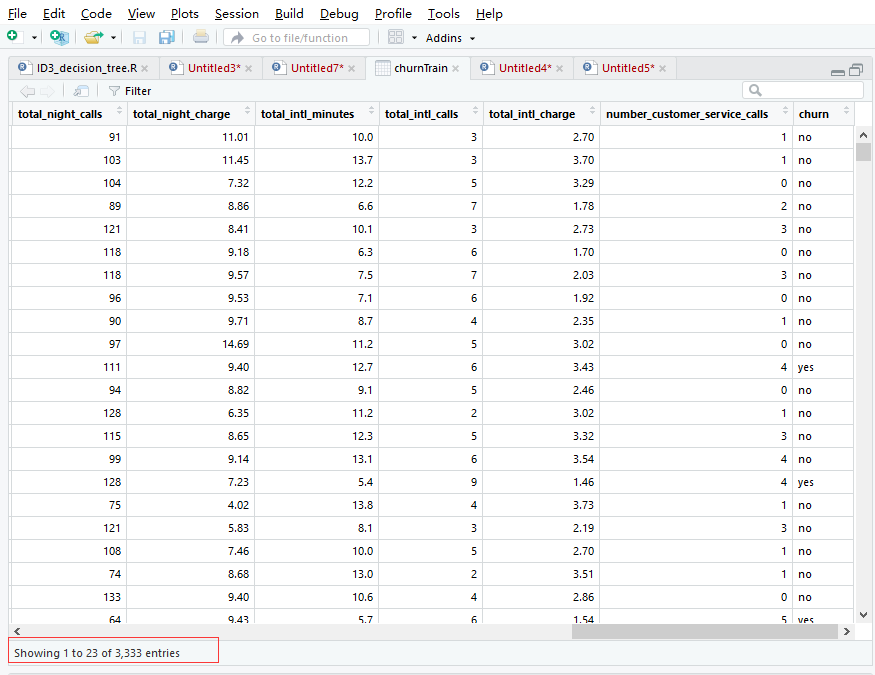

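Start by inspecting the training and test sets that data(churn) loads.

The churnTest data

The odd part: the value returned by data(churn) cannot be used to store the data, check its dimensions, or view the type of each variable in the data frame!! That is because data() returns only the data set name as a character string; the data frames themselves are loaded into the workspace as churnTrain and churnTest, so dim() and str() should be called on those objects instead.

    > data(churn)
    > Gary<-data(churn)
    >
    > dim(data(churn))
    NULL
    > dim(Gary)
    NULL
    >
    > str(data(churn))
     chr "churn"
    > str(Gary)
     chr "churn"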

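From the official documentation, all I could make out is that data() loads the specified data sets or lists the available data sets (English documentation really is my weak spot ∑=w=)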
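The NULL results above follow from what data() returns: a character vector with the data set name, while the data itself is created in the workspace as separate objects. Below is a minimal sketch of that behavior. It uses the built-in iris data as a stand-in (my assumption, so the snippet runs without C50); churnTrain and churnTest would be inspected the same way after data(churn).

```r
# data() returns only the data set name (invisibly); the data frame
# itself is created in the workspace under that name.
nm <- data(iris)      # iris is a stand-in for churn here

str(nm)               # a character vector holding the name, not the data
dim(nm)               # NULL, because a character vector has no dimensions

# The loaded object is what should be inspected:
dim(iris)             # rows and columns of the data frame
str(iris)             # type of each variable
```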

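Use different decision tree models to predict on the churn data set and compare which one suits the churn data best.

Compare the models by their prediction accuracy.

    # accuracy
    sum(diag(tab))/sum(tab)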
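As a worked example of the accuracy formula, the sketch below rebuilds the id3 confusion matrix reported in this post by hand and computes the same ratio. The dimnames labels (predicted, actual) are mine, added for readability. The last line computes the share of actual churners that were caught, which the overall accuracy does not show.

```r
# Confusion matrix copied from the id3 result in this post:
# rows are predictions, columns are the actual churn labels.
tab <- matrix(c(360, 123, 27, 2823), nrow = 2,
              dimnames = list(predicted = c("yes", "no"),
                              actual    = c("yes", "no")))

correct  <- sum(diag(tab))   # predictions that match the actual label
total    <- sum(tab)         # all observations
accuracy <- correct / total

correct    # 3183
total      # 3333
accuracy   # 0.9549955

# Share of actual churners ("yes") that were predicted as "yes"
recall_yes <- tab["yes", "yes"] / sum(tab[, "yes"])
recall_yes
```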

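Building a decision tree model with id3

Here id3 is approximated with rpart using the information-gain split criterion (split="information").

    # load the data
    library(C50)   # assumption: a C50 release that still includes the churn data
    data(churn)
    # set the seed so the random parts (rpart's cross-validation) are reproducible
    set.seed(1)
    library(rpart)
    Gary1<-rpart(churn~.,data=churnTrain,method="class", control=rpart.control(minsplit=1),parms=list(split="information"))
    printcp(Gary1)
    # evaluate the model with a confusion matrix (rows: predicted, columns: actual)
    pre1<-predict(Gary1,newdata=churnTrain,type='class')
    tab<-table(pre1,churnTrain$churn)
    tab
    # prediction accuracy of the model
    sum(diag(tab))/sum(tab)

Result:

    pre1   yes   no
      yes  360   27
      no   123 2823
    > sum(diag(tab))/sum(tab)
    [1] 0.9549955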

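Building a decision tree model with cart

    library(C50)   # assumption: a C50 release that still includes the churn data
    data(churn)
    set.seed(1)
    library(rpart)
    Gary1<-rpart(churn~.,data=churnTrain,method="class", control=rpart.control(minsplit=1),parms=list(split="gini"))
    printcp(Gary1)
    # evaluate the model with a confusion matrix (rows: predicted, columns: actual)
    pre1<-predict(Gary1,newdata=churnTrain,type='class')
    tab<-table(pre1,churnTrain$churn)
    tab
    # prediction accuracy of the model
    sum(diag(tab))/sum(tab)

Result:

    pre1   yes   no
      yes  354   35
      no   129 2815
    > sum(diag(tab))/sum(tab)
    [1] 0.9507951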

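Building a decision tree model with C4.5

    library(C50)   # assumption: a C50 release that still includes the churn data
    data(churn)
    library(RWeka)
    #oldpar=par(mar=c(3,3,1.5,1),mgp=c(1.5,0.5,0),cex=0.3)
    Gary<-J48(churn~.,data=churnTrain)
    # confusion matrix (rows: actual, columns: predicted on the training data)
    tab<-table(churnTrain$churn,predict(Gary))
    tab
    # prediction accuracy of the model
    sum(diag(tab))/sum(tab)

Result:

          yes   no
     yes  359  124
     no    24 2826
    > sum(diag(tab))/sum(tab)
    [1] 0.9555956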

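Building a decision tree model with C5.0

    library(C50)   # provides C5.0(); assumption: this release still includes the churn data
    data(churn)
    # tree-based model (column 20 is the churn label); not used in the evaluation below
    treeModel <- C5.0(x = churnTrain[, -20], y = churnTrain$churn)
    # rule-based model
    ruleModel <- C5.0(churn ~ ., data = churnTrain, rules = TRUE)
    # confusion matrix (rows: actual, columns: predicted)
    # note: scored on churnTest, unlike the models above, which are scored on churnTrain
    tab<-table(churnTest$churn,predict(ruleModel,churnTest))
    tab
    # prediction accuracy of the model
    sum(diag(tab))/sum(tab)

Result:

          yes   no
     yes  149   75
     no    15 1428
    > sum(diag(tab))/sum(tab)
    [1] 0.9460108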
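One caveat when reading the four accuracies side by side: the id3, cart, and C4.5 results are computed on churnTrain, while the C5.0 result is computed on churnTest, so they are not measured on the same data. A like-for-like comparison would score every model on the same held-out rows. The sketch below shows that pattern for the two rpart variants only. It uses the built-in iris data and a 30% hold-out split as stand-ins (both my assumptions, so the snippet runs without C50 or RWeka), and the accuracy() helper is a hypothetical name introduced here.

```r
library(rpart)   # recommended package bundled with standard R installations

set.seed(1)
# Hold out 30% of the rows; iris is only a stand-in for churn here
test_idx <- sample(nrow(iris), size = round(0.3 * nrow(iris)))
train <- iris[-test_idx, ]
test  <- iris[test_idx, ]

# Accuracy of a fitted tree on a given data frame (hypothetical helper)
accuracy <- function(fit, data) {
  pred <- predict(fit, newdata = data, type = "class")
  tab  <- table(pred, data$Species)
  sum(diag(tab)) / sum(tab)
}

fit_info <- rpart(Species ~ ., data = train, method = "class",
                  parms = list(split = "information"))
fit_gini <- rpart(Species ~ ., data = train, method = "class",
                  parms = list(split = "gini"))

# Score both trees on the same unseen rows
results <- c(information = accuracy(fit_info, test),
             gini        = accuracy(fit_gini, test))
results
```

With the churn data, the same idea would mean passing churnTest as newdata for every model before comparing the numbers.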

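Implementation walkthrough

Building the decision tree model with id3:

Load the data and set the random seed; the seed makes the results reproducible.

    data(churn)
    set.seed(1)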
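To illustrate what the seed does, here is a small sketch in base R: resetting the seed before a random draw reproduces the draw exactly. In the models in this post, the random part is most likely rpart's cross-validation, which produces the xerror and xstd columns of printcp().

```r
# Drawing the same random permutation twice after resetting the seed
set.seed(1)
first <- sample(1:10)

set.seed(1)
second <- sample(1:10)

identical(first, second)   # TRUE: same seed, same draw
```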

Build the decision tree model with the rpart package

    > Gary1<-rpart(churn~.,data=churnTrain,method="class", control=rpart.control(minsplit=1),parms=list(split="information"))
    > printcp(Gary1)
    Classification tree:
    rpart(formula = churn ~ ., data = churnTrain, method = "class",
        parms = list(split = "information"), control = rpart.control(minsplit = 1))
    Variables actually used in tree construction:
    [1] international_plan            number_customer_service_calls state
    [4] total_day_minutes             total_eve_minutes             total_intl_calls
    [7] total_intl_minutes            voice_mail_plan
    Root node error: 483/3333 = 0.14491
    n= 3333
            CP nsplit rel error  xerror     xstd     # xstd: standard error of xerror
    1 0.089027      0   1.00000 1.00000 0.042076
    2 0.084886      1   0.91097 0.95445 0.041265
    3 0.078675      2   0.82609 0.90269 0.040304
    4 0.052795      4   0.66874 0.72878 0.036736
    5 0.022774      7   0.47412 0.51139 0.031310
    6 0.017253      9   0.42857 0.49068 0.030719
    7 0.012422     12   0.37681 0.46170 0.029865
    8 0.010000     17   0.31056 0.43892 0.029171

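Evaluate the model with a confusion matrix

    > pre1<-predict(Gary1,newdata=churnTrain,type='class')
    > tab<-table(pre1,churnTrain$churn)
    > tab
    pre1   yes   no
      yes  360   27
      no   123 2823

Values on the diagonal are cases where the predicted value matches the actual value; off-diagonal values are misclassifications.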
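The off-diagonal counts (123 + 27 = 150) can be cross-checked against the printcp() output: rel error is the training error relative to the root node error, so multiplying the last rel error by the 483 root node errors should give back the number of misclassified training rows. A small arithmetic sketch using the numbers printed in this post (variable names are mine):

```r
root_errors <- 483       # errors at the root node (483 of 3333 rows)
n           <- 3333      # training observations
rel_error   <- 0.31056   # last row of the id3 CP table

train_errors   <- rel_error * root_errors   # misclassified training rows
train_accuracy <- 1 - train_errors / n      # training accuracy

round(train_errors)        # 150, matching 123 + 27 in the confusion matrix
round(train_accuracy, 3)   # 0.955
```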

Prediction accuracy of the model

    > sum(diag(tab))/sum(tab)
    [1] 0.9549955

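    diag(x = 1, nrow, ncol)
    diag(x) <- value

Explanation:

x: a matrix, a vector, or a one-dimensional array, or missing.

nrow, ncol: optional dimensions (number of rows and columns) of the result.

value: the values for the diagonal, either a single value or a vector.

Called on a matrix such as tab, diag() returns its main diagonal, so sum(diag(tab)) is the number of correct predictions.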
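A short sketch of the three uses of diag() described above, on a small hand-made matrix (the matrix m is purely illustrative):

```r
# diag() on a matrix extracts its main diagonal
m <- matrix(1:4, nrow = 2)
d <- diag(m)
d                  # 1 4

# diag() with a single number builds an identity matrix of that size
id2 <- diag(2)
id2

# diag(x) <- value overwrites the diagonal in place
diag(m) <- 0
m
```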

Building the decision tree model with cart:

The only difference from id3 is parms=list(split="gini"):

    Gary1<-rpart(churn~.,data=churnTrain,method="class", control=rpart.control(minsplit=1),parms=list(split="gini"))

Explanation omitted (the steps are the same as for id3).

    > data(churn)
    >
    > set.seed(1)
    >
    > library(rpart)
    >
    > Gary1<-rpart(churn~.,data=churnTrain,method="class", control=rpart.control(minsplit=1),parms=list(split="gini"))
    > printcp(Gary1)
    Classification tree:
    rpart(formula = churn ~ ., data = churnTrain, method = "class",
        parms = list(split = "gini"), control = rpart.control(minsplit = 1))
    Variables actually used in tree construction:
    [1] international_plan            number_customer_service_calls state
    [4] total_day_minutes             total_eve_minutes             total_intl_calls
    [7] total_intl_minutes            voice_mail_plan
    Root node error: 483/3333 = 0.14491
    n= 3333
            CP nsplit rel error  xerror     xstd
    1 0.089027      0   1.00000 1.00000 0.042076
    2 0.084886      1   0.91097 0.96273 0.041414
    3 0.078675      2   0.82609 0.90062 0.040265
    4 0.052795      4   0.66874 0.72050 0.036551
    5 0.023810      7   0.47412 0.49896 0.030957
    6 0.017598      9   0.42650 0.53416 0.031942
    7 0.014493     12   0.36853 0.51553 0.031426
    8 0.010000     14   0.33954 0.48654 0.030599
    >
    > # evaluate the model with a confusion matrix
    > pre1<-predict(Gary1,newdata=churnTrain,type='class')
    > tab<-table(pre1,churnTrain$churn)
    > tab
    pre1   yes   no
      yes  354   35
      no   129 2815
    >
    > # prediction accuracy of the model
    > sum(diag(tab))/sum(tab)
    [1] 0.9507951
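Since the two rpart calls differ only in the split criterion, it may help to see the two impurity measures themselves. The sketch below computes the Gini impurity and the entropy of the root node (483 churners out of 3333 customers, as reported by printcp). The helper names gini and entropy are mine, and using log base 2 for the entropy is my choice; this illustrates the textbook formulas and is not rpart's internal code.

```r
# Impurity of a node given its vector of class proportions
gini    <- function(p) 1 - sum(p^2)
entropy <- function(p) {
  p <- p[p > 0]            # treat 0 * log(0) as 0
  -sum(p * log2(p))
}

# Root node of churnTrain: 483 of 3333 customers churned
p_root <- c(yes = 483, no = 2850) / 3333

gini(p_root)      # Gini impurity, the measure behind split = "gini"
entropy(p_root)   # entropy in bits, the measure behind split = "information"
```

Both measures are 0 for a pure node and largest when the classes are evenly mixed, which is why the two trees above end up choosing the same variables and similar splits.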

Building the decision tree model with C4.5:

Load the data and the RWeka package

    data(churn)
    library(RWeka)

Build the decision tree model with J48() from the RWeka package

    > Gary<-J48(churn~.,data=churnTrain)
    > tab<-table(churnTrain$churn,predict(Gary))
    > tab
          yes   no
     yes  359  124
     no    24 2826
    > # prediction accuracy of the model
    > sum(diag(tab))/sum(tab)
    [1] 0.9555956

Building the decision tree model with C5.0:

C5.0 is the commercial successor to C4.5. Compared with C4.5 it runs more efficiently, and it adds boosting, which can improve prediction accuracy.

Explanation omitted

    > data(churn)
    > treeModel <- C5.0(x = churnTrain[, -20], y = churnTrain$churn)
    >
    > ruleModel <- C5.0(churn ~ ., data = churnTrain, rules = TRUE)
    >
    > tab<-table(churnTest$churn,predict(ruleModel,churnTest))
    > tab
          yes   no
     yes  149   75
     no    15 1428
    > # prediction accuracy of the model
    > sum(diag(tab))/sum(tab)
    [1] 0.9460108
