Trust-region (TR) methods often converge faster than line-search (LS) methods.

A TR method requires several choices:

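- the model function $m_k$

- the trust region (its radius $\Delta_k$)

- the step $p_k$, obtained by solving the subproblem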

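When $f$ is twice continuously differentiable, the Taylor-series expansion of $f$ around $x_k$ is

$$f(x_k + p) = f_k + g_k^T p + \tfrac{1}{2} p^T \nabla^2 f(x_k + t p)\, p,$$

where $f_k = f(x_k)$, $g_k = \nabla f(x_k)$, and $t$ is some scalar in the interval $(0,1)$.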

By using a symmetric approximation $B_k$ to the Hessian in the second-order term, we obtain the model

$$m_k(p) = f_k + g_k^T p + \tfrac{1}{2} p^T B_k p.$$

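We then seek a solution of the subproblem

$$\min_{p \in \mathbb{R}^n} m_k(p) = f_k + g_k^T p + \tfrac{1}{2} p^T B_k p \quad \text{s.t. } \|p\| \le \Delta_k,$$

where $\Delta_k > 0$ is the trust-region radius.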

The difference between $m_k(p)$ and $f(x_k + p)$ is $O(\|p\|^2)$, which is small when $p$ is small.
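The $O(\|p\|^2)$ claim can be checked numerically. The sketch below assumes a hypothetical test function $f(x) = e^{x_1} + x_2^2$ at $x_k = 0$ (so $f_k = 1$, $g_k = (1, 0)$, true Hessian $\mathrm{diag}(1, 2)$) and deliberately uses the inexact approximation $B_k = I$. The ratio $|f(x_k + p) - m_k(p)| / \|p\|^2$ should settle near a constant (0.25 for this choice) as $\|p\|$ shrinks; with the exact Hessian the leading $\|p\|^2$ term would cancel.

```python
import math

# Hypothetical test function chosen for illustration: f(x) = exp(x1) + x2^2.
def f(x):
    return math.exp(x[0]) + x[1] ** 2

# At x_k = (0, 0): f_k = 1, g_k = (1, 0); the true Hessian is diag(1, 2).
f_k = 1.0
g_k = (1.0, 0.0)
# Deliberately inexact Hessian approximation B_k = I (an assumption for the demo).
B_k = ((1.0, 0.0), (0.0, 1.0))

def model(p):
    """Quadratic model m_k(p) = f_k + g_k^T p + 0.5 p^T B_k p."""
    gp = g_k[0] * p[0] + g_k[1] * p[1]
    Bp = (B_k[0][0] * p[0] + B_k[0][1] * p[1],
          B_k[1][0] * p[0] + B_k[1][1] * p[1])
    return f_k + gp + 0.5 * (p[0] * Bp[0] + p[1] * Bp[1])

def scaled_error(s):
    """|f(x_k + p) - m_k(p)| / ||p||^2 for p = s * (1, 1) / sqrt(2), so ||p|| = s."""
    a = s / math.sqrt(2.0)
    p = (a, a)  # x_k = 0, so x_k + p = p
    return abs(f(p) - model(p)) / s ** 2

for s in (1e-1, 1e-2, 1e-3):
    print(f"||p|| = {s:g}: error / ||p||^2 = {scaled_error(s):.6f}")
```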

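Let

$$\rho_k = \frac{f(x_k) - f(x_k + p_k)}{m_k(0) - m_k(p_k)},$$

the ratio of the actual reduction to the predicted reduction.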

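1. If $\rho_k$ is negative, the new objective value $f(x_k + p_k)$ is greater than the current value $f(x_k)$, so the step must be rejected. This follows because the step $p_k$ is obtained by minimizing the model $m_k$ over a region that includes $p = 0$, so the predicted reduction $m_k(0) - m_k(p_k)$ is always nonnegative.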

2. If $\rho_k$ is close to 1, the model agrees well with $f$ over this step, so it is safe to expand the trust region.

3. If $\rho_k$ is positive but significantly smaller than 1, we do not alter the trust region.

4. If $\rho_k$ is close to 0 (or negative), we shrink the trust region.
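The four rules above can be written as one radius-update function. This is a sketch under assumed parameters: the thresholds 1/4 and 3/4, the shrink factor 1/4, the expansion factor 2, the cap `delta_max` and the acceptance threshold `eta = 0.1` are conventional but hypothetical choices, and expanding only when the step reached the boundary ($\|p_k\| = \Delta_k$) is a common refinement, not something stated in these notes.

```python
import math

def trust_region_update(f_old, f_new, pred_reduction, step_norm, delta,
                        delta_max, eta=0.1):
    """Return (accept_step, new_delta) from rho = actual / predicted reduction.

    pred_reduction = m_k(0) - m_k(p_k), assumed >= 0 because p_k minimizes the
    model over a region containing p = 0. All numeric thresholds are
    hypothetical, conventional choices.
    """
    if pred_reduction <= 0.0:
        # No predicted decrease (e.g. p_k = 0): rho is undefined, so shrink
        return False, 0.25 * delta
    rho = (f_old - f_new) / pred_reduction
    if rho < 0.25:
        # rho close to 0 or negative: poor agreement, shrink the region
        new_delta = 0.25 * delta
    elif rho > 0.75 and math.isclose(step_norm, delta, rel_tol=1e-9):
        # rho close to 1 and the step hit the boundary: expand (capped)
        new_delta = min(2.0 * delta, delta_max)
    else:
        # rho positive but clearly below 1: leave the radius unchanged
        new_delta = delta
    # Reject the step when the actual reduction is too small (including rho < 0)
    accept = rho > eta
    return accept, new_delta
```

For example, a boundary step with $\rho_k = 1$ doubles the radius, while a step that increases $f$ ($\rho_k < 0$) is rejected and the radius is cut to a quarter.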

- Focus on solving the subproblem:

We sometimes drop the iteration subscript $k$ and restate the problem as follows:

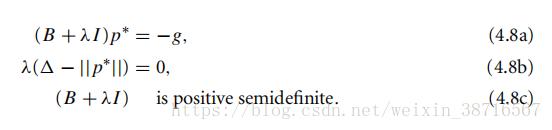

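The vector $p^*$ is a global solution of this problem if and only if $p^*$ is feasible and there is a scalar $\lambda \ge 0$ such that

$$(B + \lambda I)\, p^* = -g, \tag{4.8a}$$

$$\lambda\,(\Delta - \|p^*\|) = 0, \tag{4.8b}$$

$$(B + \lambda I) \text{ is positive semidefinite.} \tag{4.8c}$$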

(4.8b) is a complementarity condition stating that at least one of $\lambda$ and $(\Delta - \|p^*\|)$ must be 0.

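When $\|p^*\| < \Delta$, $p^*$ lies strictly inside the trust region, so we must have $\lambda = 0$; then (4.8a) and (4.8c) give $Bp^* = -g$ with $B$ positive semidefinite.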

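When $\|p^*\| = \Delta$, $\lambda$ can take a positive value, and (4.8a) gives

$$\lambda p^* = -Bp^* - g = -\nabla m(p^*),$$

so $p^*$ is parallel to the negative gradient of $m$ and normal to the trust-region boundary. Then we get

$$p(\lambda) = -(B + \lambda I)^{-1} g.$$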

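Finally, choosing $\lambda$ so that $\|p(\lambda)\| = \Delta$ (a one-dimensional root-finding problem in $\lambda$) gives $p$.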
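A minimal sketch of this characterization for a symmetric $2 \times 2$ matrix $B$ (a simplifying assumption that allows closed-form eigenvalues and Cramer's rule). If $B$ is positive definite and $\|B^{-1} g\| \le \Delta$, it returns the interior solution with $\lambda = 0$; otherwise it finds $\lambda > \max(0, -\lambda_{\min}(B))$ with $\|p(\lambda)\| = \Delta$, where $p(\lambda)$ solves $(B + \lambda I)p = -g$. Bisection is used here as a simple root finder in place of the Newton-type iteration usually preferred in practice, and the so-called hard case ($g$ orthogonal to the eigenvector of the smallest eigenvalue) is not handled. The helper names are hypothetical.

```python
import math

def smallest_eigenvalue(B):
    """Smallest eigenvalue of a symmetric 2x2 matrix ((a, b), (b, c))."""
    (a, b), (_, c) = B
    return 0.5 * (a + c) - math.hypot(0.5 * (a - c), b)

def solve_shifted(B, lam, g):
    """Solve (B + lam I) p = -g for a symmetric 2x2 B by Cramer's rule."""
    (a, b), (_, c) = B
    a, c = a + lam, c + lam
    det = a * c - b * b
    return ((-c * g[0] + b * g[1]) / det, (b * g[0] - a * g[1]) / det)

def exact_tr_step(B, g, delta, iters=200):
    """Return (p, lam) satisfying (4.8a)-(4.8c) up to floating-point tolerance.

    Assumes the hard case does not occur.
    """
    lam_min = smallest_eigenvalue(B)
    if lam_min > 0.0:
        p = solve_shifted(B, 0.0, g)
        if math.hypot(*p) <= delta:
            return p, 0.0  # interior solution: lam = 0 and B p = -g
    # Boundary solution: ||p(lam)|| decreases in lam on (-lam_min, inf).
    lo = max(0.0, -lam_min)  # B + lam I is only semidefinite at lo; never evaluated there
    hi = lo + 1.0
    while math.hypot(*solve_shifted(B, hi, g)) > delta:
        hi *= 2.0  # grow the bracket until ||p(hi)|| <= delta
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mid <= lo or mid >= hi:
            break  # bracket cannot be split further in floating point
        if math.hypot(*solve_shifted(B, mid, g)) > delta:
            lo = mid
        else:
            hi = mid
    return solve_shifted(B, hi, g), hi

print(exact_tr_step(((2.0, 0.0), (0.0, 1.0)), (2.0, 1.0), 1.0))
```

With an indefinite $B$ such as `((1.0, 0.0), (0.0, -1.0))`, the search starts above $-\lambda_{\min} = 1$, which is what keeps $B + \lambda I$ positive semidefinite as (4.8c) requires.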

4.1 Algorithms based on the Cauchy Point

Find an approximate solution $p_k$ of the subproblem that still gives a sufficient reduction in the model.

4.1.1 The Cauchy Point

1. Find the vector $p_k^s$ by solving a linear version of the subproblem, that is,

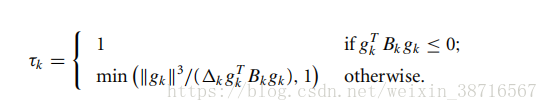

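2. Calculate the scalar $\tau_k > 0$ that minimizes $m_k(\tau p_k^s)$ subject to the trust-region bound, that is,

$$\tau_k = \arg\min_{\tau \ge 0} m_k(\tau p_k^s) \quad \text{s.t. } \|\tau p_k^s\| \le \Delta_k.$$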

3. Set $p_k^C = \tau_k p_k^s$.

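The solution of the linear subproblem is $p_k^s = -\Delta_k g_k / \|g_k\|$, so for $\tau \in [0, 1]$

$$m_k(\tau p_k^s) = f_k - \tau \Delta_k \|g_k\| + \tfrac{1}{2}\tau^2 \frac{\Delta_k^2}{\|g_k\|^2}\, g_k^T B_k g_k.$$

When $g_k^T B_k g_k \le 0$, $m_k(\tau p_k^s)$ decreases monotonically with $\tau$ (for $g_k \ne 0$), so $\tau_k$ is the largest value allowed by the trust-region bound:

$$\tau_k = 1.$$

When $g_k^T B_k g_k > 0$, $m_k(\tau p_k^s)$ is a convex quadratic in $\tau$, so $\tau_k$ is the smaller of its unconstrained minimizer and the boundary value 1:

$$\tau_k = \min\left(\frac{\|g_k\|^3}{\Delta_k\, g_k^T B_k g_k},\ 1\right).$$

In both cases

$$p_k^C = -\tau_k \frac{\Delta_k}{\|g_k\|}\, g_k.$$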

Taking the Cauchy point as our step, we are simply implementing the steepest descent method with a particular choice of step length.
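The closed form $p_k^C = -\tau_k \Delta_k g_k / \|g_k\|$, with $\tau_k = 1$ when $g_k^T B_k g_k \le 0$ and $\tau_k = \min(\|g_k\|^3 / (\Delta_k\, g_k^T B_k g_k), 1)$ otherwise, fits in a few lines. This sketch uses plain Python lists (vectors as lists of floats, $B_k$ as a list of rows) to stay dependency-free and assumes $g_k \ne 0$.

```python
import math

def cauchy_point(g, B, delta):
    """Cauchy point p^C = -tau * delta / ||g|| * g.

    g: gradient as a list of floats (assumed nonzero);
    B: symmetric matrix as a list of rows; delta: trust-region radius.
    """
    g_norm = math.sqrt(sum(gi * gi for gi in g))
    Bg = [sum(bij * gj for bij, gj in zip(row, g)) for row in B]
    gBg = sum(gi * bgi for gi, bgi in zip(g, Bg))
    if gBg <= 0.0:
        tau = 1.0  # model decreases along -g all the way to the boundary
    else:
        # unconstrained minimizer along -g, clipped to the boundary tau = 1
        tau = min(g_norm ** 3 / (delta * gBg), 1.0)
    return [-tau * delta / g_norm * gi for gi in g]

print(cauchy_point([1.0, 0.0], [[2.0, 0.0], [0.0, 1.0]], 10.0))
```

With a large radius the step stops at the one-dimensional minimizer along $-g_k$; with a small radius, or with negative curvature along $g_k$, it runs to the boundary.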

4.1.2 The Dogleg Method

The dogleg method can be used when $B$ is positive definite.

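We denote the solution of the subproblem, viewed as a function of the radius, by $p^*(\Delta)$, and the unconstrained minimizer of $m$ by $p^B = -B^{-1} g$.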

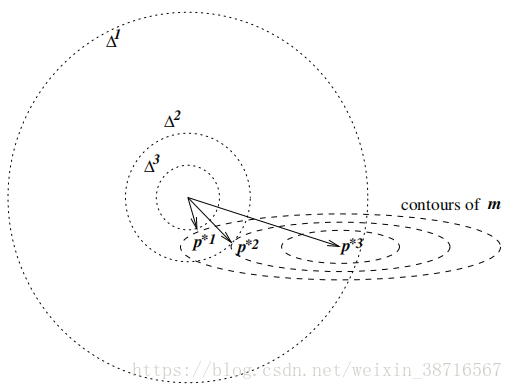

- When $\Delta \ge \|p^B\|$, $p^*(\Delta) = p^B$.

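- When $\Delta$ is small relative to $\|p^B\|$, the restriction $\|p\| \le \Delta$ ensures that the quadratic term in $m$ has little effect on the solution, so $m(p) \approx f + g^T p$, and therefore $p^*(\Delta) \approx -\Delta \dfrac{g}{\|g\|}$.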

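The dogleg method replaces the curved trajectory $p^*(\Delta)$ with a path of two line segments, parametrized by $\tau \in [0, 2]$. The first segment runs from the origin to the minimizer of $m$ along the steepest-descent direction,

$$p^U = -\frac{g^T g}{g^T B g}\, g,$$

and the second runs from $p^U$ to $p^B$:

$$\tilde p(\tau) = \begin{cases} \tau p^U, & 0 \le \tau \le 1, \\ p^U + (\tau - 1)(p^B - p^U), & 1 \le \tau \le 2. \end{cases}$$

Then we have: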

1. When $\|p^B\| \le \Delta$, we choose $p = p^B$.

2. When $\|p^U\| \ge \Delta$, let $\tau = \Delta / \|p^U\|$ and choose $p = \tau p^U$.

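3. When $\|p^U\| < \Delta < \|p^B\|$, we want $\tilde p(\tau)$ to get as close to $p^B$ as the trust region allows, that is, to hit the boundary, so we choose $\tau$ by solving the scalar quadratic equation

$$\|p^U + (\tau - 1)(p^B - p^U)\|^2 = \Delta^2,$$

where $\tau \in (1, 2)$.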
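The three dogleg cases can be sketched as follows, assuming $B$ is symmetric positive definite and $g \ne 0$. To stay self-contained the sketch uses plain lists and a small Gaussian-elimination helper `solve` (a hypothetical helper, not a library call) to compute $p^B = -B^{-1} g$. In case 3 it solves the quadratic for $s = \tau - 1$ and takes the positive root; because $\|p^U\| < \Delta$, the constant term is negative, so exactly one root is positive.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def solve(B, b):
    """Solve B x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(B, b)]  # augmented matrix
    for k in range(n):
        piv = max(range(k, n), key=lambda i: abs(M[i][k]))
        M[k], M[piv] = M[piv], M[k]
        for i in range(k + 1, n):
            factor = M[i][k] / M[k][k]
            for j in range(k, n + 1):
                M[i][j] -= factor * M[k][j]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x

def dogleg(g, B, delta):
    """Dogleg step for min g^T p + 0.5 p^T B p s.t. ||p|| <= delta (B PD)."""
    pB = [-x for x in solve(B, g)]  # full step p^B = -B^{-1} g
    if math.sqrt(dot(pB, pB)) <= delta:
        return pB  # case 1: full step lies inside the region
    Bg = [dot(row, g) for row in B]
    pU = [-dot(g, g) / dot(g, Bg) * gi for gi in g]  # minimizer along -g
    norm_pU = math.sqrt(dot(pU, pU))
    if norm_pU >= delta:
        return [delta / norm_pU * x for x in pU]  # case 2: truncated p^U
    # case 3: ||pU + s (pB - pU)||^2 = delta^2 with s = tau - 1 in (0, 1)
    d = [b - u for b, u in zip(pB, pU)]
    a = dot(d, d)
    b = 2.0 * dot(pU, d)
    c = dot(pU, pU) - delta ** 2  # negative because ||pU|| < delta
    s = (-b + math.sqrt(b * b - 4.0 * a * c)) / (2.0 * a)
    return [u + s * di for u, di in zip(pU, d)]

print(dogleg([1.0, 1.0], [[1.0, 0.0], [0.0, 4.0]], 0.8))
```

For this example $\|p^U\| \approx 0.566$ and $\|p^B\| \approx 1.031$, so a radius of 0.8 falls into case 3 and the returned step lies on the second segment with norm 0.8.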

4.1.3 Two-Dimensional Subspace Minimization

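The dogleg search for $p$ can be widened to the entire two-dimensional subspace spanned by $p^U$ and $p^B$ (equivalently, $g$ and $B^{-1} g$). The subproblem is replaced by

$$\min_{p} m(p) = f + g^T p + \tfrac{1}{2} p^T B p \quad \text{s.t. } \|p\| \le \Delta,\ p \in \operatorname{span}[g, B^{-1} g].$$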