Scikit-Learn Exercises

Code

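from sklearn import datasets, metrics
# sklearn.cross_validation was removed in scikit-learn 0.20; KFold now lives in sklearn.model_selection.
from sklearn.model_selection import KFold
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC
from sklearn.ensemble import RandomForestClassifier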

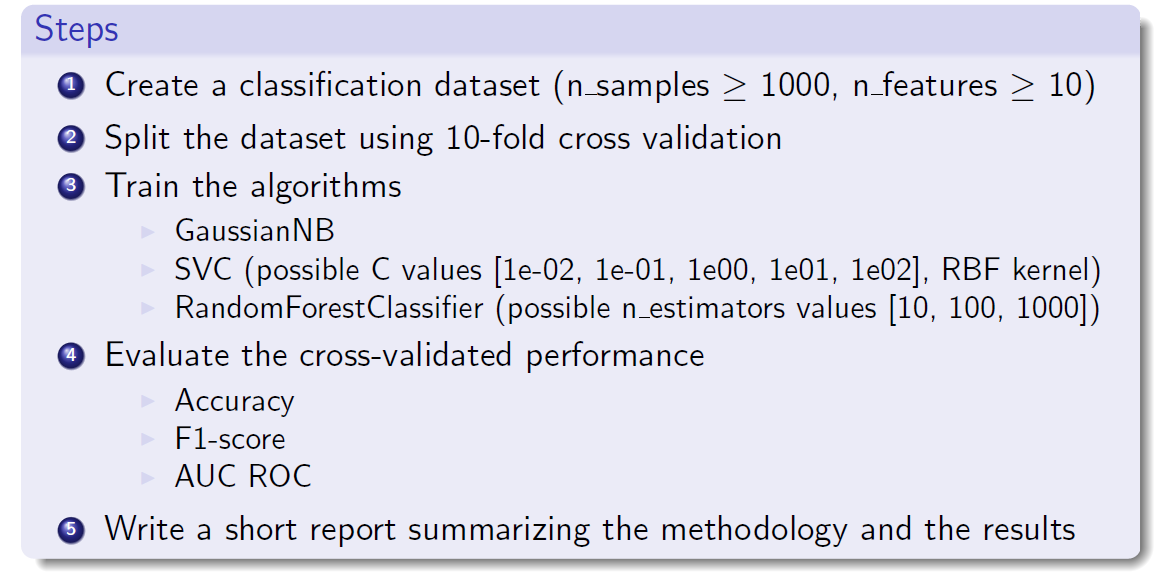

# Create a binary classification dataset (1000 samples, 10 features).
X, y = datasets.make_classification(n_samples=1000, n_features=10)

acc, f1, auc = 0, 0, 0

# Split the dataset using 10-fold cross validation.
# random_state fixes the shuffle so every model below is evaluated on the same folds;
# with random_state=None, KFold reshuffles on every split() call.
ten_fold = KFold(n_splits=10, shuffle=True, random_state=0)

for train_index, test_index in ten_fold.split(X):
    X_train, y_train = X[train_index], y[train_index]
    X_test, y_test = X[test_index], y[test_index]

    # Train the algorithms using GaussianNB.
    clf = GaussianNB()
    clf.fit(X_train, y_train)
    pred = clf.predict(X_test)

    # Evaluate the cross-validated performance.
    # roc_auc_score receives hard labels here, so "AUC ROC" equals balanced accuracy.
    acc += metrics.accuracy_score(y_test, pred)
    f1 += metrics.f1_score(y_test, pred)
    auc += metrics.roc_auc_score(y_test, pred)

print("GaussianNB:")
print("- Accuracy: %f" % (acc / 10))
print("- F1-score: %f" % (f1 / 10))
print("- AUC ROC: %f" % (auc / 10))
print()

for value in [1e-02, 1e-01, 1e00, 1e01, 1e02]:
    acc, f1, auc = 0, 0, 0
    for train_index, test_index in ten_fold.split(X):
        X_train, y_train = X[train_index], y[train_index]
        X_test, y_test = X[test_index], y[test_index]

        # Train the algorithms using SVC.
        clf = SVC(C=value, kernel='rbf', gamma=0.1)
        clf.fit(X_train, y_train)
        pred = clf.predict(X_test)

        # Evaluate the cross-validated performance.
        acc += metrics.accuracy_score(y_test, pred)
        f1 += metrics.f1_score(y_test, pred)
        auc += metrics.roc_auc_score(y_test, pred)

    print("\nSVC (C = %.0e):" % value)
    print("- Accuracy: %f" % (acc / 10))
    print("- F1-score: %f" % (f1 / 10))
    print("- AUC ROC: %f" % (auc / 10))

print()

for value in [10, 100, 1000]:
    acc, f1, auc = 0, 0, 0
    for train_index, test_index in ten_fold.split(X):
        X_train, y_train = X[train_index], y[train_index]
        X_test, y_test = X[test_index], y[test_index]

        # Train the algorithms using RandomForestClassifier.
        clf = RandomForestClassifier(n_estimators=value)
        clf.fit(X_train, y_train)
        pred = clf.predict(X_test)

        # Evaluate the cross-validated performance.
        acc += metrics.accuracy_score(y_test, pred)
        f1 += metrics.f1_score(y_test, pred)
        auc += metrics.roc_auc_score(y_test, pred)

    print("\nRandomForestClassifier (n_estimators = %d):" % value)
    print("- Accuracy: %f" % (acc / 10))
    print("- F1-score: %f" % (f1 / 10))
    print("- AUC ROC: %f" % (auc / 10))

Output

GaussianNB:

- Accuracy: 0.914000

- F1-score: 0.915247

- AUC ROC: 0.914527

SVC (C = 1e-02):

- Accuracy: 0.865000

- F1-score: 0.868321

- AUC ROC: 0.873646

SVC (C = 1e-01):

- Accuracy: 0.935000

- F1-score: 0.936352

- AUC ROC: 0.935286

SVC (C = 1e+00):

- Accuracy: 0.942000

- F1-score: 0.944010

- AUC ROC: 0.942180

SVC (C = 1e+01):

- Accuracy: 0.932000

- F1-score: 0.933763

- AUC ROC: 0.932221

SVC (C = 1e+02):

- Accuracy: 0.904000

- F1-score: 0.905260

- AUC ROC: 0.904708

RandomForestClassifier (n_estimators = 10):

- Accuracy: 0.961000

- F1-score: 0.961153

- AUC ROC: 0.960277

RandomForestClassifier (n_estimators = 100):

- Accuracy: 0.965000

- F1-score: 0.965509

- AUC ROC: 0.964309

RandomForestClassifier (n_estimators = 1000):

- Accuracy: 0.963000

- F1-score: 0.963489

- AUC ROC: 0.962328

Report

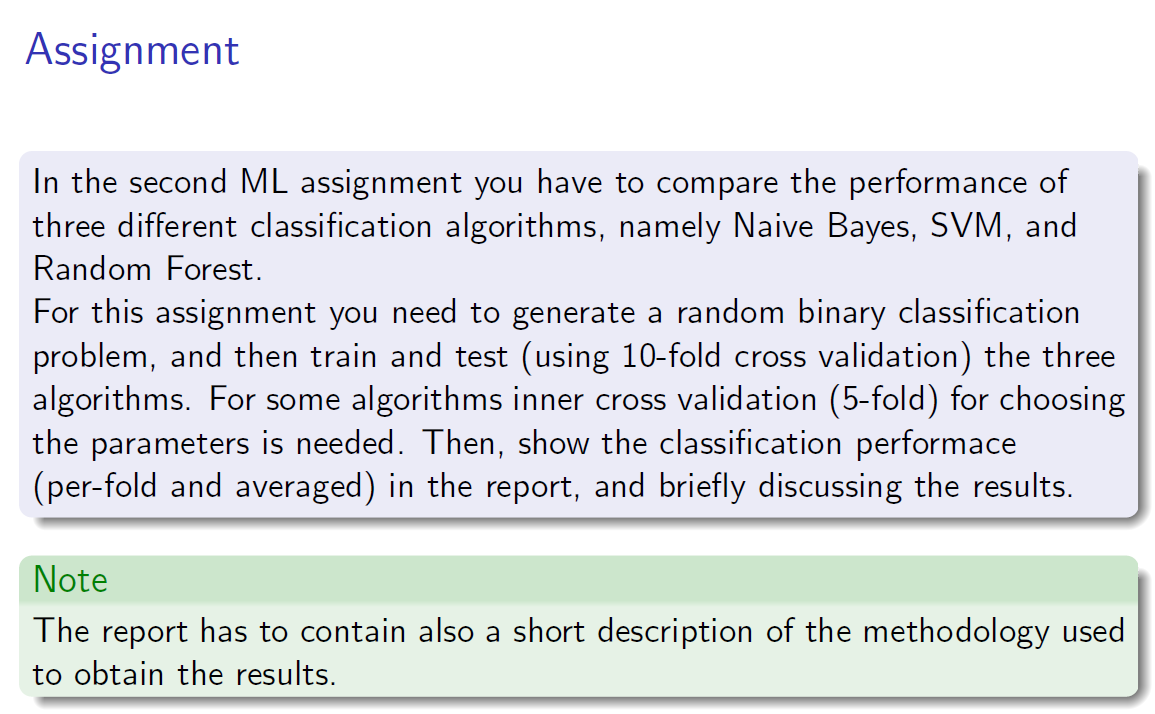
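In the output above, the AUC ROC values track accuracy almost exactly. This is expected, because the code passes hard class predictions from `predict` to `roc_auc_score`. With 0/1 predictions the ROC curve has only one interior point, (FPR, TPR). The area under it therefore reduces to (TPR + TNR) / 2, which is balanced accuracy. On a roughly balanced dataset like this one, balanced accuracy is close to plain accuracy.

The check below uses small, made-up label arrays (`y_true` and `y_pred` are illustrative and do not come from the experiment). It is a sketch of that equivalence:

```python
import numpy as np
from sklearn.metrics import balanced_accuracy_score, roc_auc_score

# Illustrative labels and hard 0/1 predictions (made up, not from the experiment).
y_true = np.array([0, 0, 0, 0, 1, 1, 1, 1, 1, 1])
y_pred = np.array([0, 0, 1, 0, 1, 1, 0, 1, 1, 1])

# "AUC" computed from hard labels, as in the exercise code.
auc_from_labels = roc_auc_score(y_true, y_pred)

# Balanced accuracy by hand: mean of true positive rate and true negative rate.
tpr = np.mean(y_pred[y_true == 1] == 1)
tnr = np.mean(y_pred[y_true == 0] == 0)

print(auc_from_labels, (tpr + tnr) / 2, balanced_accuracy_score(y_true, y_pred))
```

All three printed values should agree. A score-based ROC AUC needs `predict_proba` or `decision_function` output instead of `predict`.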

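- All three SVC scores first rise and then fall as C increases. Accuracy goes from 0.865 at C = 1e-02 to a peak of 0.942 at C = 1e+00, then drops to 0.904 at C = 1e+02. The choice of C therefore has a clear effect on SVC's performance (a spread of about 0.077 in accuracy across the tested values).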

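- Changing n_estimators has almost no effect on RandomForestClassifier's three scores: accuracy stays between 0.961 and 0.965 for 10, 100 and 1000 trees. In practice, however, training time grows as n_estimators increases. Once time cost is taken into account, a smaller n_estimators may be the better choice.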

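- Across the three models, RandomForestClassifier performs best but also takes the longest to run. GaussianNB runs the fastest, but it scores lower than both the random forests and the best SVC setting (C = 1e+00). It still outperforms SVC at C = 1e-02 and C = 1e+02.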
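The trade-off between scores and running time described in the last two points can be measured in a single pass with `sklearn.model_selection.cross_validate`. That function reports a per-fold `fit_time` alongside the requested scores.

The sketch below assumes a recent scikit-learn. The `random_state` values, the subset of models and the omission of the 1000-tree forest (left out to keep runtime short) are arbitrary choices for illustration. Unlike the manual fold loop in the exercise, the built-in `'roc_auc'` scorer uses `decision_function` or `predict_proba` scores rather than hard labels. Its AUC values are therefore not directly comparable with the ones printed in the output.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import KFold, cross_validate
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC

# Same kind of dataset as the exercise; random_state fixed (arbitrarily) for repeatability.
X, y = make_classification(n_samples=1000, n_features=10, random_state=0)
folds = KFold(n_splits=10, shuffle=True, random_state=0)

models = {
    "GaussianNB": GaussianNB(),
    "SVC (C=1)": SVC(C=1.0, kernel="rbf", gamma=0.1),
    "RandomForest (10 trees)": RandomForestClassifier(n_estimators=10, random_state=0),
    "RandomForest (100 trees)": RandomForestClassifier(n_estimators=100, random_state=0),
}

results = {}
for name, model in models.items():
    # 'roc_auc' uses decision_function / predict_proba scores, not hard labels.
    cv = cross_validate(model, X, y, cv=folds, scoring=["accuracy", "f1", "roc_auc"])
    results[name] = cv
    print("%s: acc=%.3f f1=%.3f auc=%.3f mean fit_time=%.4fs" % (
        name,
        cv["test_accuracy"].mean(),
        cv["test_f1"].mean(),
        cv["test_roc_auc"].mean(),
        cv["fit_time"].mean(),
    ))
```

Because the same `KFold` object with a fixed `random_state` is passed to every call, all models are evaluated on identical folds.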
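SVC's scores rise and then fall as C grows, which is the usual reason to choose C by cross-validated search rather than by hand. The sketch below uses `GridSearchCV` and assumes the same C grid as the exercise, with `gamma=0.1` held fixed as in the original code. Tuning `gamma` jointly would be a natural extension. Picking C on the same folds that are used to report the score gives an optimistically biased estimate; nested cross-validation avoids this.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, KFold
from sklearn.svm import SVC

X, y = make_classification(n_samples=1000, n_features=10, random_state=0)

# Same C grid as the exercise; gamma kept at 0.1 as in the original code.
param_grid = {"C": [1e-02, 1e-01, 1e00, 1e01, 1e02]}
search = GridSearchCV(
    SVC(kernel="rbf", gamma=0.1),
    param_grid,
    scoring="accuracy",
    cv=KFold(n_splits=10, shuffle=True, random_state=0),
)
search.fit(X, y)

# Mean cross-validated accuracy for each C, then the best setting found.
for c, score in zip(search.cv_results_["param_C"], search.cv_results_["mean_test_score"]):
    print("C = %.0e: accuracy = %.3f" % (c, score))
print("best C:", search.best_params_["C"])
```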