I. Introduction

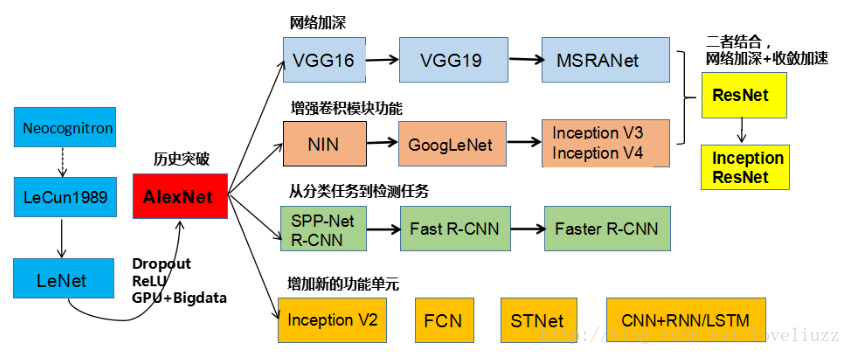

1. Development

2. Characteristics

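(1) The network uses only 3×3 convolution kernels with stride 1 and 2×2 pooling kernels with stride 2; performance is improved by steadily deepening the network.

(2) Stacked small kernels beat one large kernel: two stacked 3×3 convolutional layers cover the same 5×5 receptive field as a single 5×5 layer, and three cover 7×7, with fewer parameters and more nonlinear activations in between. This strengthens the CNN's ability to learn features.

(3) No LRN layers are used; across the configurations VGG compared (11 to 19 weight layers), the deeper networks performed better.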
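The claim in point (2) can be checked with a little arithmetic. The sketch below is only an illustration: the channel count `C = 64` is an assumed value, and biases are ignored.

```python
def stacked_receptive_field(num_layers, kernel=3):
    """Receptive field of `num_layers` stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel - 1)


def conv_weights(kernel, channels, num_layers=1):
    """Weight count (biases ignored) with `channels` input and output channels."""
    return num_layers * kernel * kernel * channels * channels


C = 64  # hypothetical channel count, chosen only for illustration
for layers, big_kernel in [(2, 5), (3, 7)]:
    # The stack sees the same input window as the single large kernel
    assert stacked_receptive_field(layers) == big_kernel
    stacked = conv_weights(3, C, layers)
    single = conv_weights(big_kernel, C)
    print('%d x (3x3): %d weights; one %dx%d: %d weights'
          % (layers, stacked, big_kernel, big_kernel, single))
```

For equal channel counts, the stacked version needs 18C² weights instead of 25C² (5×5) and 27C² instead of 49C² (7×7).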

II. TensorFlow implementation

1. Timing the forward pass and the forward-backward pass

(1) Imports

# Note: this listing uses the TensorFlow 1.x API (tf.contrib, tf.Session, tf.placeholder)
from datetime import datetime
import math
import time

import tensorflow as tf

(2) Parameters

# Benchmark settings
batch_size = 32   # number of images per batch
num_batches = 50  # number of batches to time

(3) Helper functions for VGG

def conv_op(input, name, wh, ww, n_out, dh, dw, p):
    # input: input tensor; name: scope name; wh, ww: kernel height and width;
    # n_out: output channels; dh, dw: vertical and horizontal stride;
    # p: list that collects the trainable parameters
    n_in = input.get_shape()[-1].value  # number of input channels
    with tf.name_scope(name) as scope:
        weight = tf.get_variable(scope + "w", shape=[wh, ww, n_in, n_out], dtype=tf.float32,
                                 initializer=tf.contrib.layers.xavier_initializer_conv2d())  # kernel
        # SAME padding keeps the spatial size unchanged at stride 1
        conv = tf.nn.conv2d(input, weight, (1, dh, dw, 1), padding='SAME')
        bias_init_val = tf.constant(0.0, shape=[n_out], dtype=tf.float32)
        biases = tf.Variable(bias_init_val, trainable=True, name='b')  # bias
        result = tf.nn.bias_add(conv, biases)  # forward computation
        activation = tf.nn.relu(result, name=scope)  # nonlinear activation
        p += [weight, biases]  # record the parameters
        return activation

def pool_op(input, name, wh, ww, dh, dw):
    # Max pooling with a wh x ww window, strides (dh, dw), and no padding (VALID)
    pool = tf.nn.max_pool(input, ksize=[1, wh, ww, 1], strides=[1, dh, dw, 1], padding='VALID', name=name)
    return pool
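To see what the SAME and VALID choices above do to the feature-map size, the following sketch applies TensorFlow's documented output-size rules in plain Python. The helper names `out_size` and `trace` are made up for this illustration, and each block is reduced to one size-preserving 3×3 convolution followed by a 2×2 pool.

```python
import math


def out_size(size, kernel, stride, padding):
    """Spatial output size under TensorFlow's 'SAME' / 'VALID' rules."""
    if padding == 'SAME':
        return math.ceil(size / stride)
    if padding == 'VALID':
        return math.ceil((size - kernel + 1) / stride)
    raise ValueError('unknown padding: %r' % padding)


def trace(size, num_blocks=5, pool_padding='VALID'):
    """Sizes after each conv block (3x3 SAME conv, then 2x2 stride-2 pool)."""
    sizes = [size]
    for _ in range(num_blocks):
        size = out_size(size, 3, 1, 'SAME')        # convolution keeps the size
        size = out_size(size, 2, 2, pool_padding)  # pooling halves it
        sizes.append(size)
    return sizes


print(trace(224))                       # [224, 112, 56, 28, 14, 7]
print(trace(225, pool_padding='VALID'))
print(trace(225, pool_padding='SAME'))
```

For a 224×224 input every intermediate size is even, so VALID and SAME pooling give the same result; the two only differ for odd sizes such as 225.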

def fc_op(input, name, n_out, p):  # fully connected layer
    n_in = input.get_shape()[-1].value  # number of input features
    with tf.name_scope(name) as scope:
        weight = tf.get_variable(scope + "w", shape=[n_in, n_out], dtype=tf.float32,
                                 initializer=tf.contrib.layers.xavier_initializer())
        bias_init_val = tf.constant(0.1, shape=[n_out], dtype=tf.float32)
        biases = tf.Variable(bias_init_val, name='b')
        # relu_layer computes relu(input x weight + biases) in one call
        activation = tf.nn.relu_layer(input, weight, biases, name=scope)
        p += [weight, biases]
        return activation
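Each fc_op call creates an n_in × n_out weight matrix plus n_out biases, so the fully connected layers are where most of the parameters sit. As a rough sketch, assuming the layer sizes used by the inference function in this post (a 7×7×512 feature map flattened, then 4096, 4096 and 1000 units), the counts can be worked out in plain Python; `fc_params` is a helper named only for this example.

```python
def fc_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


flattened = 7 * 7 * 512  # pool5 output for a 224x224 input
layer_sizes = [flattened, 4096, 4096, 1000]
counts = [fc_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
total = sum(counts)

for name, count in zip(['fc1', 'fc2', 'fc3'], counts):
    print('%s: %d parameters' % (name, count))
# float32 uses 4 bytes per parameter
print('total: %d parameters, about %.1f MB as float32' % (total, total * 4 / 1e6))
```

fc1 alone holds more than 100 million parameters, which is worth keeping in mind when reading the timings further down.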

def inference(images, keep_prob):
    parameters = []  # collects all trainable parameters
    # Conv block 1
    conv1_1 = conv_op(images, name='conv1_1', wh=3, ww=3, n_out=64, dh=1, dw=1, p=parameters)
    conv1_2 = conv_op(conv1_1, name='conv1_2', wh=3, ww=3, n_out=64, dh=1, dw=1, p=parameters)
    pool1 = pool_op(conv1_2, name='pool1', wh=2, ww=2, dh=2, dw=2)
    # Conv block 2
    conv2_1 = conv_op(pool1, name='conv2_1', wh=3, ww=3, n_out=128, dh=1, dw=1, p=parameters)
    conv2_2 = conv_op(conv2_1, name='conv2_2', wh=3, ww=3, n_out=128, dh=1, dw=1, p=parameters)
    pool2 = pool_op(conv2_2, name='pool2', wh=2, ww=2, dh=2, dw=2)
    # Conv block 3
    conv3_1 = conv_op(pool2, name='conv3_1', wh=3, ww=3, n_out=256, dh=1, dw=1, p=parameters)
    conv3_2 = conv_op(conv3_1, name='conv3_2', wh=3, ww=3, n_out=256, dh=1, dw=1, p=parameters)
    conv3_3 = conv_op(conv3_2, name='conv3_3', wh=3, ww=3, n_out=256, dh=1, dw=1, p=parameters)
    pool3 = pool_op(conv3_3, name='pool3', wh=2, ww=2, dh=2, dw=2)
    # Conv block 4
    conv4_1 = conv_op(pool3, name='conv4_1', wh=3, ww=3, n_out=512, dh=1, dw=1, p=parameters)
    conv4_2 = conv_op(conv4_1, name='conv4_2', wh=3, ww=3, n_out=512, dh=1, dw=1, p=parameters)
    conv4_3 = conv_op(conv4_2, name='conv4_3', wh=3, ww=3, n_out=512, dh=1, dw=1, p=parameters)
    pool4 = pool_op(conv4_3, name='pool4', wh=2, ww=2, dh=2, dw=2)
    # Conv block 5
    conv5_1 = conv_op(pool4, name='conv5_1', wh=3, ww=3, n_out=512, dh=1, dw=1, p=parameters)
    conv5_2 = conv_op(conv5_1, name='conv5_2', wh=3, ww=3, n_out=512, dh=1, dw=1, p=parameters)
    conv5_3 = conv_op(conv5_2, name='conv5_3', wh=3, ww=3, n_out=512, dh=1, dw=1, p=parameters)
    pool5 = pool_op(conv5_3, name='pool5', wh=2, ww=2, dh=2, dw=2)
    # Fully connected part: flatten each feature map into a 1-D vector first
    shp = pool5.get_shape()
    flattened_shape = shp[1].value * shp[2].value * shp[3].value
    resh1 = tf.reshape(pool5, [-1, flattened_shape], name='resh1')  # one row per image
    fc1 = fc_op(resh1, name='fc1', n_out=4096, p=parameters)
    fc1_drop = tf.nn.dropout(fc1, keep_prob, name='fc1_drop')
    fc2 = fc_op(fc1_drop, name='fc2', n_out=4096, p=parameters)
    fc2_drop = tf.nn.dropout(fc2, keep_prob, name='fc2_drop')
    fc3 = fc_op(fc2_drop, name='fc3', n_out=1000, p=parameters)
    softmax = tf.nn.softmax(fc3)  # class probabilities
    predictions = tf.argmax(softmax, 1)  # index of the most probable class
    return predictions, parameters, fc3

(4) Timing helper (same as in the AlexNet example)

def time_tensorflow_run(session, target, feed, info_string):
    num_steps_burn_in = 10  # warm-up steps: the first runs pay for memory loading, cache misses, etc.
    total_duration = 0.0  # sum of step durations
    total_duration_squared = 0.0  # sum of squared durations, used for the variance
    for i in range(num_batches + num_steps_burn_in):
        start_time = time.time()
        _ = session.run(target, feed_dict=feed)
        duration = time.time() - start_time
        if i >= num_steps_burn_in:  # skip the warm-up steps
            if not i % 10:
                print('%s: step %d, duration = %.3f' %
                      (datetime.now(), i - num_steps_burn_in, duration))
            total_duration += duration
            total_duration_squared += duration * duration
    mn = total_duration / num_batches  # mean time per batch
    vr = total_duration_squared / num_batches - mn * mn  # variance
    sd = math.sqrt(vr)  # standard deviation
    print('%s: %s across %d steps, %.3f +/- %.3f sec / batch' %
          (datetime.now(), info_string, num_batches, mn, sd))
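The timing helper above gets the mean and standard deviation from two running sums, using the identity Var(x) = E[x²] − (E[x])². A small sketch, independent of TensorFlow, can confirm that this matches the standard library. The function `mean_and_std` is named only for this example, and the sample values are the five forward-pass durations printed in the results of this post, used purely as illustrative data.

```python
import math
import statistics


def mean_and_std(durations):
    """Mean and population standard deviation from running sums."""
    n = len(durations)
    total = sum(durations)
    total_squared = sum(d * d for d in durations)
    mean = total / n
    variance = total_squared / n - mean * mean
    # Rounding can push a near-zero variance slightly below zero, so clamp it
    return mean, math.sqrt(max(variance, 0.0))


sample = [19.633, 21.066, 21.203, 19.620, 23.137]  # illustrative durations in seconds
mean, std = mean_and_std(sample)
assert abs(mean - statistics.mean(sample)) < 1e-9
assert abs(std - statistics.pstdev(sample)) < 1e-9
print('%.3f +/- %.3f sec / batch' % (mean, std))
```

The clamp with `max(variance, 0.0)` is an addition in this sketch: when all durations are nearly identical, rounding can make the computed variance slightly negative, and `math.sqrt` would then raise an error.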

(5) Run

def run_benchmark():
    with tf.Graph().as_default():
        image_size = 224
        # Random images stand in for real data, since only speed is measured
        images = tf.Variable(tf.random_normal([batch_size, image_size, image_size, 3],
                                              dtype=tf.float32, stddev=1e-1))
        keep_prob = tf.placeholder(tf.float32)  # dropout keep probability, fed at run time
        predictions, parameters, fc3 = inference(images, keep_prob)
        init = tf.global_variables_initializer()
        sess = tf.Session()
        sess.run(init)
        time_tensorflow_run(sess, predictions, {keep_prob: 1.0}, "Forward")  # inference uses keep_prob=1.0
        # The L2 loss is taken on the fc3 output, not on predictions: predictions is an
        # integer argmax result, which l2_loss does not accept and which has no gradient
        objective = tf.nn.l2_loss(fc3)
        grad = tf.gradients(objective, parameters)  # gradients of the objective w.r.t. all parameters
        time_tensorflow_run(sess, grad, {keep_prob: 0.5}, "Forward-backward")  # training-style pass uses keep_prob=0.5

if __name__ == '__main__':
    run_benchmark()

(6) Results

2018-09-20 10:47:33.207136: step 0, duration = 19.633

2018-09-20 10:50:55.973392: step 10, duration = 21.066

2018-09-20 10:54:25.718082: step 20, duration = 21.203

2018-09-20 10:57:47.927425: step 30, duration = 19.620

2018-09-20 11:01:21.385679: step 40, duration = 23.137

2018-09-20 11:04:22.032885: Forward across 50 steps, 20.569 +/- 0.932 sec / batch

2018-09-20 11:15:46.500329: step 0, duration = 59.778

2018-09-20 11:25:44.948142: step 10, duration = 59.143

2018-09-20 11:35:45.526153: step 20, duration = 59.795

2018-09-20 11:45:45.806747: step 30, duration = 60.079

2018-09-20 11:55:47.121064: step 40, duration = 60.367

2018-09-20 12:04:45.947511: Forward-backward across 50 steps, 59.984 +/- 0.530 sec / batch
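To put the log above in more familiar terms, the per-batch means can be converted into throughput. The sketch below simply copies the two mean values from the log and the batch size from the parameters section; the helper name `images_per_second` is made up for this example.

```python
batch_size = 32       # from the parameters section
forward_sec = 20.569  # mean of "Forward across 50 steps" in the log
fwd_bwd_sec = 59.984  # mean of "Forward-backward across 50 steps" in the log


def images_per_second(batch, seconds_per_batch):
    """Throughput for a given batch size and time per batch."""
    return batch / seconds_per_batch


forward_ips = images_per_second(batch_size, forward_sec)
fwd_bwd_ips = images_per_second(batch_size, fwd_bwd_sec)
ratio = fwd_bwd_sec / forward_sec

print('forward:          %.2f images/sec' % forward_ips)
print('forward-backward: %.2f images/sec' % fwd_bwd_ips)
print('forward-backward takes %.2fx as long as forward' % ratio)
```

On this run, a forward-backward step costs a little under three times as much as a forward pass alone.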