逻辑回归处理二元分类

普通的线性回归假设响应变量呈正态分布,也称为高斯分布(Gaussian distribution )或钟形曲线(bell curve)。正态分布数据是对称的,且均值,中位数和众数(mode)是一样的。

掷一个硬币获取正反两面的概率分布是伯努力分布(Bernoulli distribution),又称两点分布或者0-1分布。表示一个事件发生的概率是p,不发生的概率是1-p,概率在{0,1}之间

在逻辑回归里,响应变量描述了类似于掷一个硬币结果为正面的概率。如果响应变量等于或超过了指

定的临界值,预测结果就是正面,否则预测结果就是反面。响应变量是一个像线性回归中的解释变量

构成的函数表示,称为逻辑函数(logistic function)。

二元分类效果评估方法

二元分类的效果评估方法有很多,常见的包括第一章里介绍的肿瘤预测使用的准确率(accuracy),

精确率(precision)和召回率(recall)三项指标,以及综合评价指标(F1 measure), ROC AUC

值(Receiver Operating Characteristic ROC,Area Under Curve,AUC)

在我们的垃圾短信分类里,真阳性是指分类器将一个垃圾短信分辨为spam类。真阴性是指分类器将

一个正常短信分辨为ham类。假阳性是指分类器将一个正常短信分辨为spam类。假阴性是指分类器

将一个垃圾短信分辨为ham类。混淆矩阵(Confusion matrix),也称列联表分析(Contingency

table)可以用来描述真假与阴阳的关系。矩阵的行表示实际类型,列表示预测类型。

LogisticRegression.score()用来计算模型预测的准确率

import numpy as np

import pandas as pd

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.linear_model.logistic import LogisticRegression

from sklearn.cross_validation import train_test_split, cross_val_score

df = pd.read_csv('mlslpic/sms.csv')

X_train_raw, X_test_raw, y_train, y_test = train_test_split(df['message']

, df['label'])

vectorizer = TfidfVectorizer()

X_train = vectorizer.fit_transform(X_train_raw)

X_test = vectorizer.transform(X_test_raw)

classifier = LogisticRegression()

classifier.fit(X_train, y_train)

scores = cross_val_score(classifier, X_train, y_train, cv=5)

print('准确率:',np.mean(scores), scores)

输出结果如下:

准确率: 0.958373205742 [ 0.96291866 0.95334928 0.95813397 0.96172249 0.95574163]

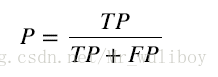

精确率:

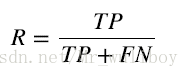

召回率:

scikit-learn结合真实类型数据,提供了一个函数来计算一组预测值的精确率和召回率。

代码如下:

import numpy as np

import pandas as pd

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.linear_model.logistic import LogisticRegression

from sklearn.cross_validation import train_test_split, cross_val_score

df = pd.read_csv('mlslpic/sms.csv')

X_train_raw, X_test_raw, y_train, y_test = train_test_split(df['message']

, df['label'])

vectorizer = TfidfVectorizer()

X_train = vectorizer.fit_transform(X_train_raw)

X_test = vectorizer.transform(X_test_raw)

classifier = LogisticRegression()

classifier.fit(X_train, y_train)

precisions = cross_val_score(classifier, X_train, y_train, cv=5, scoring=

'precision')

print('精确率:', np.mean(precisions), precisions)

recalls = cross_val_score(classifier, X_train, y_train, cv=5, scoring='re

call')

print('召回率:', np.mean(recalls), recalls)

输出结果:

精确率: 0.99217372134 [ 0.9875 0.98571429 1. 1. 0.98765432]

召回率: 0.672121212121 [ 0.71171171 0.62162162 0.66363636 0.63636364 0.72727273]

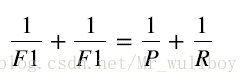

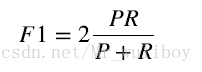

综合评价指标(F1 measure)是精确率和召回率的调和均值(harmonic mean),或加权平均值,也称为F-measure或fF-score。

即:

scikit-learn也提供了计算综合评价指标的函数。

代码如下:

f1s = cross_val_score(classifier, X_train, y_train, cv=5, scoring='f1')

print('综合评价指标:', np.mean(f1s), f1s)

输出结果如下:

综合评价指标: 0.8020666384483939 [0.76923077 0.81481481 0.86010363 0.76404494 0.80213904]

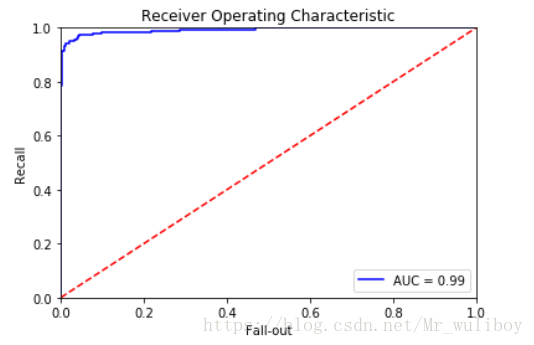

ROC AUC

ROC曲线(Receiver Operating Characteristic,ROC curve)可以用来可视化分类器的效果。和准确

率不同,ROC曲线对分类比例不平衡的数据集不敏感,ROC曲线显示的是对超过限定阈值的所有预

测结果的分类器效果。ROC曲线画的是分类器的召回率与误警率(fall-out)的曲线。误警率也称假

阳性率,是所有阴性样本中分类器识别为阳性的样本所占比例:

AUC是ROC曲线下方的面积,它把ROC曲线变成一个值,表示分类器随机预测的效果。scikit-learn

提供了计算ROC和AUC指标的函数

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.linear_model.logistic import LogisticRegression

from sklearn.cross_validation import train_test_split, cross_val_score

from sklearn.metrics import roc_curve, auc

df = pd.read_csv('D:\dateset\SMSSpamCollection', delimiter='\t', header=None)

X_train_raw, X_test_raw, y_train, y_test = train_test_split(df[1], df[0])

lb = LabelBinarizer()#标签二值化

y_test = np.array([number[0] for number in lb.fit_transform(y_test)])

vectorizer = TfidfVectorizer()

X_train = vectorizer.fit_transform(X_train_raw)

X_test = vectorizer.transform(X_test_raw)

classifier = LogisticRegression()

classifier.fit(X_train, y_train)

predictions = classifier.predict_proba(X_test)

false_positive_rate, recall, thresholds = roc_curve(y_test, predictions[:, 1])

roc_auc = auc(false_positive_rate, recall)

plt.title('Receiver Operating Characteristic')

plt.plot(false_positive_rate, recall, 'b', label='AUC = %0.2f' % roc_auc)

plt.legend(loc='lower right')

plt.plot([0, 1], [0, 1], 'r--')

plt.xlim([0.0, 1.0])

plt.ylim([0.0, 1.0])

plt.ylabel('Recall')

plt.xlabel('Fall-out')

plt.show()

输出结果如下: