1 MapReduce Overview

MapReduce originates from Google's MapReduce paper.

Hadoop MapReduce is a software framework for easily writing applications which process vast amounts of data (multi-terabyte data-sets) in-parallel on large clusters (thousands of nodes) of commodity hardware in a reliable, fault-tolerant manner.

MapReduce:

- Pros: offline processing of massive data sets; easy to develop; easy to run

- Cons: cannot do real-time stream processing

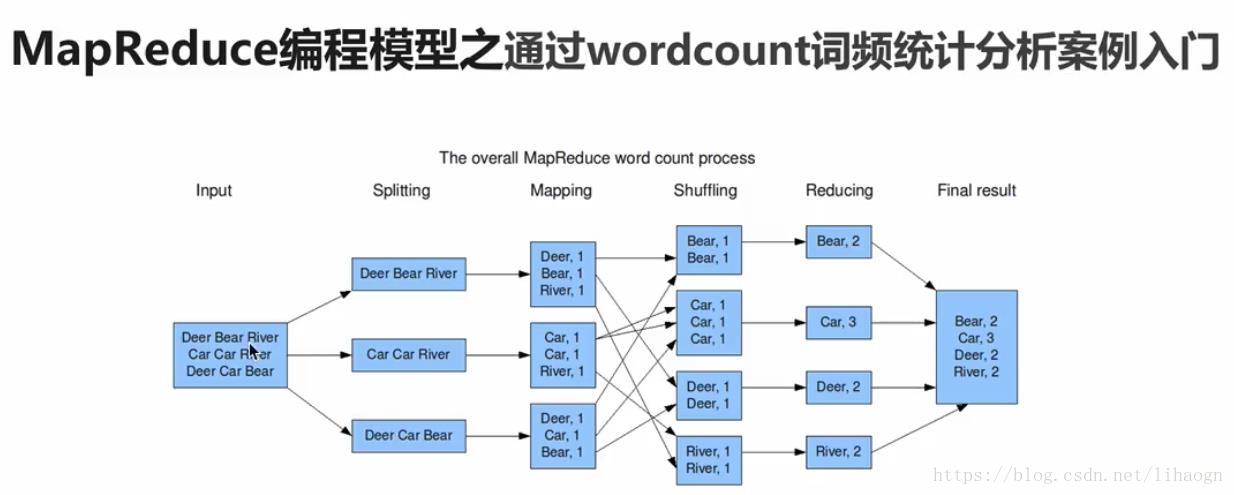

2 MapReduce Programming Model

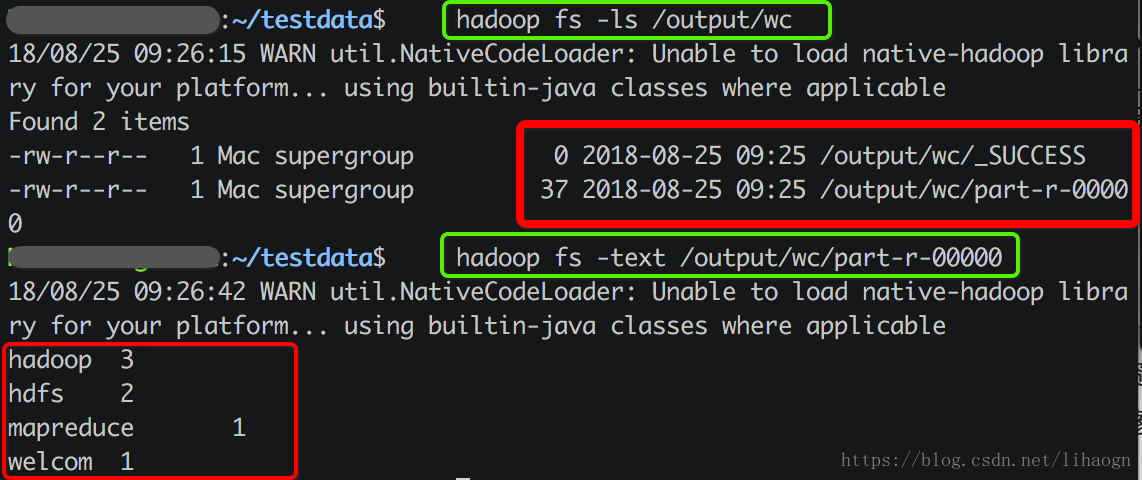

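wordcount: count how many times each word occurs in a file

Requirement: compute wc (word count)

1) Small file: a shell command is enough

2) Very large file (GBs or TBs): how do we run statistics over that much data?

==> URL TopN <== an extension of wc

Many development tasks in real work are adaptations built on top of wc.

The solution is a distributed computing framework: MapReduce.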
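URL TopN is mentioned above only as an extension of wc. A minimal single-machine sketch of that idea, under my own assumptions (the class name `UrlTopN` and the sample URLs are hypothetical), first counts each URL the wc way and then keeps the N most frequent ones with a size-N min-heap. In a distributed setting the counting part would be the MapReduce job; this only illustrates the logic.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.PriorityQueue;

// Hypothetical single-machine sketch: wc-style counting followed by top-N selection.
class UrlTopN {

    // Step 1 (the "wc" part): count occurrences of each URL
    static Map<String, Long> count(List<String> urls) {
        Map<String, Long> counts = new HashMap<>();
        for (String url : urls) {
            Long c = counts.get(url);
            counts.put(url, c == null ? 1L : c + 1L);
        }
        return counts;
    }

    // Step 2: keep the n largest counts in a min-heap that never grows beyond n
    static List<String> topN(Map<String, Long> counts, int n) {
        PriorityQueue<Map.Entry<String, Long>> heap =
                new PriorityQueue<>((a, b) -> Long.compare(a.getValue(), b.getValue()));
        for (Map.Entry<String, Long> e : counts.entrySet()) {
            heap.offer(e);
            if (heap.size() > n) {
                heap.poll(); // evict the current smallest count
            }
        }
        List<String> result = new ArrayList<>();
        while (!heap.isEmpty()) {
            result.add(heap.poll().getKey());
        }
        Collections.reverse(result); // most frequent first
        return result;
    }

    public static void main(String[] args) {
        List<String> log = Arrays.asList("/a", "/b", "/a", "/c", "/a", "/c");
        System.out.println(topN(count(log), 2)); // [/a, /c]
    }
}
```

The heap keeps memory proportional to N rather than to the number of distinct URLs; ties between equal counts come out in no particular order.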

2.1 MapReduce Execution Flow

Divide and conquer

(input) <k1, v1> -> map -> <k2, v2> -> combine -> <k2, v2> -> reduce -> <k3, v3> (output)

1) Execution steps:

- Prepare the input data for map

- Mapper processing

- Shuffle

- Reducer processing

- Output the results
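The flow and steps above can be traced on one machine. The sketch below (hypothetical class `LocalWordCount`, no Hadoop dependency) runs word count as three plain Java functions: map turns each line into `(word, 1)` pairs, shuffle groups the values by key in sorted order, and reduce sums each group. It deliberately leaves out everything that makes MapReduce distributed (splits, parallel tasks, spilling to disk, network transfer).

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Single-JVM simulation of the word-count data flow; no Hadoop classes involved.
class LocalWordCount {

    // Map: <k1, v1> = one input line -> list of <k2, v2> = (word, 1)
    static List<Map.Entry<String, Long>> map(String line) {
        List<Map.Entry<String, Long>> out = new ArrayList<>();
        for (String word : line.split(" ")) {
            if (!word.isEmpty()) {
                out.add(new AbstractMap.SimpleEntry<String, Long>(word, 1L));
            }
        }
        return out;
    }

    // Shuffle: group values by key; TreeMap keeps keys sorted, mirroring the sort before reduce
    static TreeMap<String, List<Long>> shuffle(List<Map.Entry<String, Long>> pairs) {
        TreeMap<String, List<Long>> groups = new TreeMap<>();
        for (Map.Entry<String, Long> p : pairs) {
            List<Long> values = groups.get(p.getKey());
            if (values == null) {
                values = new ArrayList<>();
                groups.put(p.getKey(), values);
            }
            values.add(p.getValue());
        }
        return groups;
    }

    // Reduce: <k2, [v2, ...]> -> <k3, v3> = (word, total count)
    static TreeMap<String, Long> reduce(TreeMap<String, List<Long>> groups) {
        TreeMap<String, Long> result = new TreeMap<>();
        for (Map.Entry<String, List<Long>> g : groups.entrySet()) {
            long sum = 0;
            for (long v : g.getValue()) {
                sum += v;
            }
            result.put(g.getKey(), sum);
        }
        return result;
    }

    static TreeMap<String, Long> run(List<String> lines) {
        List<Map.Entry<String, Long>> mapped = new ArrayList<>();
        for (String line : lines) {
            mapped.addAll(map(line));
        }
        return reduce(shuffle(mapped));
    }

    public static void main(String[] args) {
        System.out.println(run(Arrays.asList("hello world", "hello hadoop"))); // {hadoop=1, hello=2, world=1}
    }
}
```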

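2) Core concepts

Split:

- The chunk of data handed to a MapReduce job for processing; the smallest unit of computation in MapReduce.

- Each Split is processed by one Mapper Task.

- HDFS: the block (blocksize) is the smallest unit of storage in HDFS, 128 MB by default.

- By default the two correspond one-to-one; the relationship can be changed manually, but that is not recommended.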
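To make the one-to-one default concrete, here is a sketch of how, to my understanding, Hadoop 2's new-API `FileInputFormat` sizes the splits of one splittable file: `splitSize = max(minSize, min(maxSize, blockSize))`, and the last chunk may run up to 10% over `splitSize` (a slop factor of 1.1) instead of becoming a tiny extra split. This is a pure-JDK re-implementation for illustration, not the Hadoop class; `minSize` and `maxSize` play the role of the `mapreduce.input.fileinputformat.split.minsize` / `maxsize` settings.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative re-implementation of the split-sizing arithmetic (not Hadoop code).
class SplitCalculator {
    // The final split may be up to 10% larger than splitSize
    static final double SPLIT_SLOP = 1.1;

    static long computeSplitSize(long blockSize, long minSize, long maxSize) {
        return Math.max(minSize, Math.min(maxSize, blockSize));
    }

    // Returns the length of each split for a file of fileLength bytes
    static List<Long> splitLengths(long fileLength, long blockSize, long minSize, long maxSize) {
        long splitSize = computeSplitSize(blockSize, minSize, maxSize);
        List<Long> splits = new ArrayList<>();
        long remaining = fileLength;
        while ((double) remaining / splitSize > SPLIT_SLOP) {
            splits.add(splitSize);
            remaining -= splitSize;
        }
        if (remaining != 0) {
            splits.add(remaining); // tail split, at most 1.1 * splitSize
        }
        return splits;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        // Defaults (minSize = 1, maxSize = Long.MAX_VALUE): splitSize == blockSize
        System.out.println(splitLengths(300 * mb, 128 * mb, 1, Long.MAX_VALUE).size()); // 3
        System.out.println(splitLengths(130 * mb, 128 * mb, 1, Long.MAX_VALUE).size()); // 1
        // Raising minSize to 256 MB makes one split span two blocks
        System.out.println(splitLengths(300 * mb, 128 * mb, 256 * mb, Long.MAX_VALUE).size()); // 2
    }
}
```

The 130 MB case is why "one split per block" is only approximately true: a file barely over one block still becomes a single split.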

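InputFormat:

- Splits the input data: `InputSplit[] getSplits(JobConf job, int numSplits) throws IOException;` (this is the old `org.apache.hadoop.mapred` API; the new `org.apache.hadoop.mapreduce` API used in the code below declares `List<InputSplit> getSplits(JobContext context)`)

- TextInputFormat: handles plain-text input data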
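TextInputFormat's record reader turns each split into `<byte offset, line>` pairs, which is why `MyMapper` in the WordCount code declares its input as `<LongWritable, Text>`. The pure-JDK sketch below (hypothetical class `TextRecordSketch`) reproduces that key/value shape for a small in-memory file. It only treats `\n` as a line end, whereas Hadoop's reader also handles `\r\n` and lines that cross split boundaries.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Sketch of the (key, value) records TextInputFormat hands to map():
// key = byte offset where the line starts, value = the line without its '\n'.
class TextRecordSketch {

    static final class Record {
        final long offset;
        final String line;

        Record(long offset, String line) {
            this.offset = offset;
            this.line = line;
        }

        @Override
        public String toString() {
            return "<" + offset + ", " + line + ">";
        }
    }

    static List<Record> read(byte[] data) {
        List<Record> records = new ArrayList<>();
        int start = 0;
        for (int i = 0; i < data.length; i++) {
            if (data[i] == '\n') {
                records.add(new Record(start, new String(data, start, i - start, StandardCharsets.UTF_8)));
                start = i + 1;
            }
        }
        if (start < data.length) { // last line has no trailing newline
            records.add(new Record(start, new String(data, start, data.length - start, StandardCharsets.UTF_8)));
        }
        return records;
    }

    public static void main(String[] args) {
        byte[] file = "hello world\nhello hadoop\n".getBytes(StandardCharsets.UTF_8);
        System.out.println(read(file)); // [<0, hello world>, <12, hello hadoop>]
    }
}
```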

OutputFormat: writes the job output

Combiner

Partitioner

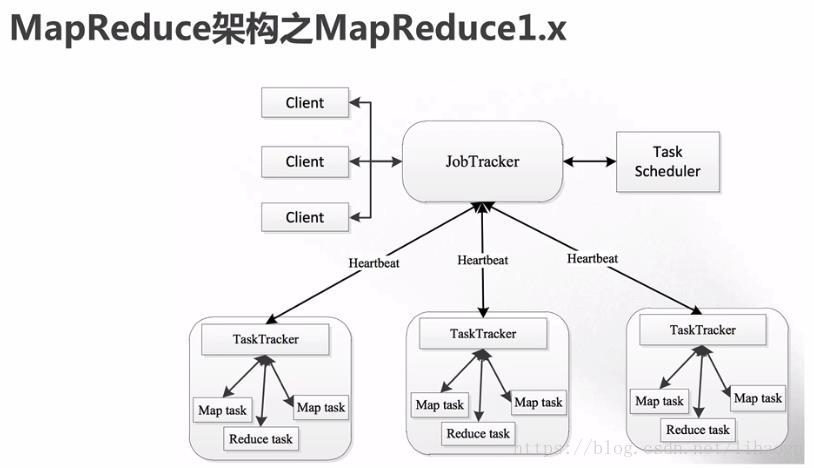

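3 MapReduce Architecture

3.1 MapReduce 1.x Architecture

1) JobTracker (JT)

- The job manager (the one in charge)

- Splits a job into a set of tasks (MapTask and ReduceTask)

- Assigns the tasks to TaskTrackers for execution

- Monitors jobs and handles fault tolerance (if a task dies, it is restarted)

- If the JT receives no heartbeat from a TT within a certain interval, the TT is assumed to be dead and the tasks running on it are reassigned to other TTs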
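The heartbeat rule above can be modeled in a few lines. This is a hypothetical, simplified model of the JobTracker's bookkeeping (the class `HeartbeatMonitor`, the timeout value, and the task IDs are made up for illustration), not Hadoop 1 code: a TaskTracker whose last heartbeat is older than the timeout is treated as dead, and its tasks are handed back for rescheduling.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified model of JobTracker-side liveness tracking (not Hadoop 1 code).
class HeartbeatMonitor {
    private final long timeoutMs;
    private final Map<String, Long> lastHeartbeat = new HashMap<>();        // tracker -> last heartbeat time
    private final Map<String, List<String>> runningTasks = new HashMap<>(); // tracker -> task IDs

    HeartbeatMonitor(long timeoutMs) {
        this.timeoutMs = timeoutMs;
    }

    // Called whenever a TaskTracker reports in
    void heartbeat(String tracker, long nowMs) {
        lastHeartbeat.put(tracker, nowMs);
    }

    // Record that a task was assigned to a tracker
    void assign(String tracker, String taskId) {
        List<String> tasks = runningTasks.get(tracker);
        if (tasks == null) {
            tasks = new ArrayList<>();
            runningTasks.put(tracker, tasks);
        }
        tasks.add(taskId);
    }

    // Drop trackers silent for longer than the timeout and return their tasks for reassignment
    List<String> expire(long nowMs) {
        List<String> toReschedule = new ArrayList<>();
        Iterator<Map.Entry<String, Long>> it = lastHeartbeat.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Long> entry = it.next();
            String tracker = entry.getKey();
            if (nowMs - entry.getValue() > timeoutMs) {
                it.remove();
                List<String> lost = runningTasks.remove(tracker);
                if (lost != null) {
                    toReschedule.addAll(lost);
                }
            }
        }
        return toReschedule;
    }

    public static void main(String[] args) {
        HeartbeatMonitor jt = new HeartbeatMonitor(1000);
        jt.heartbeat("tt1", 0);
        jt.heartbeat("tt2", 0);
        jt.assign("tt1", "map_0");
        jt.assign("tt2", "reduce_0");
        jt.heartbeat("tt2", 1500);           // tt1 stays silent
        System.out.println(jt.expire(2000)); // [map_0]
    }
}
```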

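2) TaskTracker (TT)

- Executes tasks (the one doing the work)

- Runs our tasks (MapTask and ReduceTask) on the TT node

- Interacts with the JT: executes, starts and stops tasks, and sends heartbeats to the JT

3) MapTask

- Runs the map logic we write

- Parses each input record and passes it to our map method

- Writes the map output to local disk (for map-only jobs with no reduce phase, the output goes directly to HDFS)

4) ReduceTask

- Reads the data output by the MapTasks

- Groups the data by key and passes each group to our reduce method

- Writes the output to HDFS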

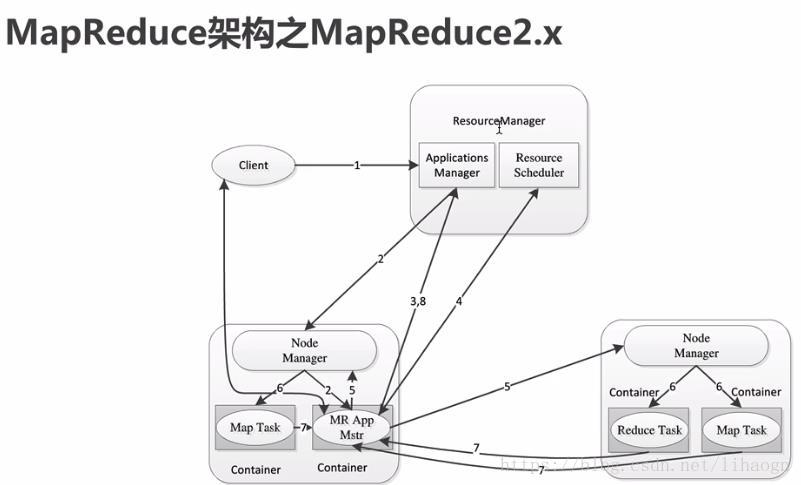

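3.2 MapReduce 2.x Architecture

MapReduce 2.x runs on YARN:

- The client submits the MapReduce job, requests resources, and queries the job's progress

- The ResourceManager (RM) picks a node and launches a container there to run the MR App Master

- The App Master registers with the RM and requests resources

- The App Master receives the allocated resources

- It asks the corresponding NodeManagers to launch the tasks

- The NodeManagers launch the tasks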

4 MapReduce Programming

4.1 Developing wc with IDEA + Maven

1) Develop

pom.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.lihaogn.hadoop</groupId>
    <artifactId>hadoop-train</artifactId>
    <!-- produces target/hadoop-train-1.0.jar -->
    <version>1.0</version>
    <name>hadoop-train</name>
    <!-- FIXME change it to the project's website -->
    <url>http://www.example.com</url>

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <maven.compiler.source>1.7</maven.compiler.source>
        <maven.compiler.target>1.7</maven.compiler.target>
        <!-- added: Hadoop version (CDH 5.7.0) -->
        <hadoop.version>2.6.0-cdh5.7.0</hadoop.version>
    </properties>

    <!-- added: Cloudera repository, needed for the CDH artifacts -->
    <repositories>
        <repository>
            <id>cloudera</id>
            <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
        </repository>
    </repositories>

    <dependencies>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.11</version>
            <scope>test</scope>
        </dependency>
        <!-- added: Hadoop client dependency -->
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>${hadoop.version}</version>
        </dependency>
    </dependencies>

    <build>
        <pluginManagement><!-- lock down plugins versions to avoid using Maven defaults (may be moved to parent pom) -->
            <plugins>
                <plugin>
                    <artifactId>maven-clean-plugin</artifactId>
                    <version>3.0.0</version>
                </plugin>
                <!-- see http://maven.apache.org/ref/current/maven-core/default-bindings.html#Plugin_bindings_for_jar_packaging -->
                <plugin>
                    <artifactId>maven-resources-plugin</artifactId>
                    <version>3.0.2</version>
                </plugin>
                <plugin>
                    <artifactId>maven-compiler-plugin</artifactId>
                    <version>3.7.0</version>
                </plugin>
                <plugin>
                    <artifactId>maven-surefire-plugin</artifactId>
                    <version>2.20.1</version>
                </plugin>
                <plugin>
                    <artifactId>maven-jar-plugin</artifactId>
                    <version>3.0.2</version>
                </plugin>
                <plugin>
                    <artifactId>maven-install-plugin</artifactId>
                    <version>2.5.2</version>
                </plugin>
                <plugin>
                    <artifactId>maven-deploy-plugin</artifactId>
                    <version>2.8.2</version>
                </plugin>
                <!-- mvn assembly:assembly packs the dependencies into the jar -->
                <plugin>
                    <artifactId>maven-assembly-plugin</artifactId>
                    <configuration>
                        <archive>
                            <manifest>
                                <mainClass></mainClass>
                            </manifest>
                        </archive>
                        <descriptorRefs>
                            <descriptorRef>jar-with-dependencies</descriptorRef>
                        </descriptorRefs>
                    </configuration>
                </plugin>
            </plugins>
        </pluginManagement>
    </build>
</project>
```

WordCountApp.java

```java
package com.imooc.hadoop.mapreduce;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

/**
 * WordCount application developed with MapReduce
 */
public class WordCountApp {

    /**
     * Map: reads the input file
     */
    public static class MyMapper extends Mapper<LongWritable, Text, Text, LongWritable> {

        private final LongWritable one = new LongWritable(1);

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // Each line of input received
            String line = value.toString();
            // Split on the given delimiter
            String[] words = line.split(" ");
            for (String word : words) {
                // Consecutive spaces yield empty tokens; do not count them as words
                if (word.isEmpty()) {
                    continue;
                }
                // Emit the map result through the context
                context.write(new Text(word), one);
            }
        }
    }

    /**
     * Reduce: aggregation step
     */
    public static class MyReducer extends Reducer<Text, LongWritable, Text, LongWritable> {

        @Override
        protected void reduce(Text key, Iterable<LongWritable> values, Context context) throws IOException, InterruptedException {
            long sum = 0;
            for (LongWritable value : values) {
                // Add up the occurrences of this key
                sum += value.get();
            }
            // Emit the final count
            context.write(key, new LongWritable(sum));
        }
    }

    /**
     * Driver: holds all the configuration of the MapReduce job
     */
    public static void main(String[] args) throws Exception {
        // Create the Configuration
        Configuration configuration = new Configuration();
        // Create the Job
        Job job = Job.getInstance(configuration, "wordcount");
        // Set the class used to locate the job jar
        job.setJarByClass(WordCountApp.class);
        // Set the job's input path
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        // Map-side settings
        job.setMapperClass(MyMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(LongWritable.class);
        // Reduce-side settings
        job.setReducerClass(MyReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(LongWritable.class);
        // Set the job's output path
        FileOutputFormat.setOutputPath(job, new Path(args[1]));
        // Submit, wait for completion, exit with 0 on success
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```

2) Compile:

```
mvn clean package -DskipTests
```

3) Upload to the server:

```
scp target/hadoop-train-1.0.jar hadoop@hadoop000:~/lib
```

4) Run

Prerequisites:

- Hadoop is running

- The input file has been uploaded to HDFS

```
hadoop jar /home/hadoop/lib/hadoop-train-1.0.jar \
  com.imooc.hadoop.mapreduce.WordCountApp \
  hdfs://hadoop000:8020/hello.txt \
  hdfs://hadoop000:8020/output/wc
```

5) Result

Note:

1) Running the same code and script a second time fails with:

```
security.UserGroupInformation:
PriviledgedActionException as:hadoop (auth:SIMPLE) cause:
org.apache.hadoop.mapred.FileAlreadyExistsException:
Output directory hdfs://hadoop000:8020/output/wc already exists
Exception in thread "main" org.apache.hadoop.mapred.FileAlreadyExistsException:
Output directory hdfs://hadoop000:8020/output/wc already exists
```

Cause: in MapReduce, the output directory must not exist before the job runs.

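Solutions:

1) Delete the output directory manually from the shell first:

```
hadoop fs -rm -r /output/wc
```

2) Delete it automatically in code (the recommended approach). Put this in `main` after the `Configuration` is created and before the `Job` is created, and add `import org.apache.hadoop.fs.FileSystem;`:

```java
// Delete the output directory if it already exists
Path outputPath = new Path(args[1]);
FileSystem fileSystem = FileSystem.get(configuration);
if (fileSystem.exists(outputPath)) {
    fileSystem.delete(outputPath, true); // true = delete recursively
    System.out.println("output path exists and has been deleted");
}
```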

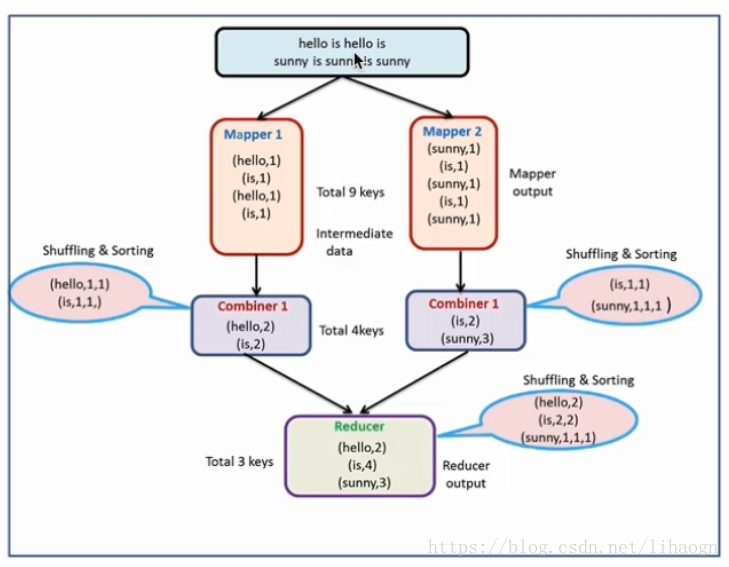

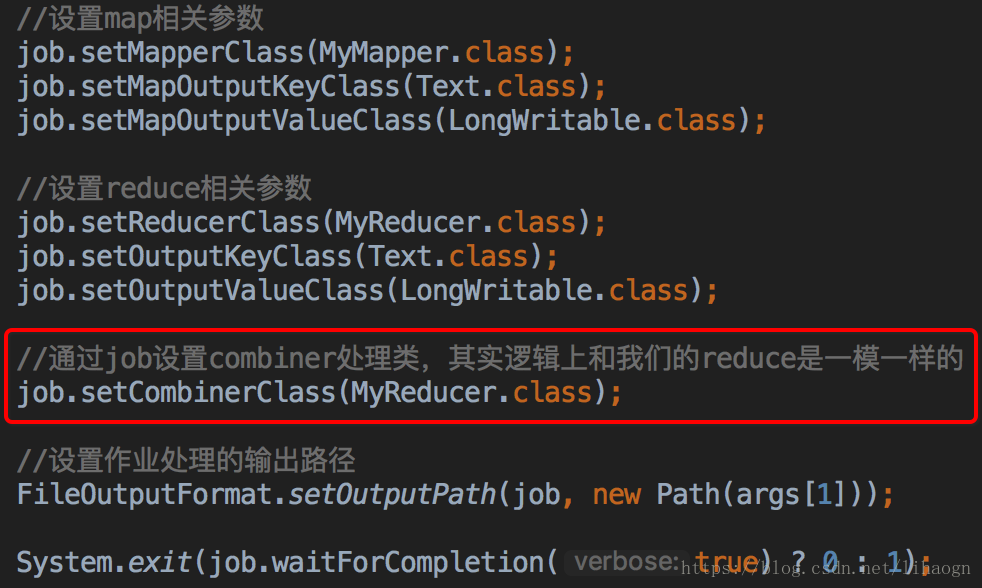

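4.2 MapReduce Programming: Combiner

1) A local reducer

2) Reduces the amount of data output by map tasks and the amount transferred over the network

Combiner: performs a local reduce operation on the Mapper side.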
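To see how much a combiner can save, the sketch below (hypothetical class `CombinerSketch`, pure JDK, sample lines made up) takes the word-count map output of a single MapTask and applies the reducer's summing logic locally. The two sample lines produce 6 `(word, 1)` records without a combiner but only 2 after combining, and that smaller set is what would be shuffled to the reducers.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Pure-JDK simulation: records leaving one MapTask with and without a combiner.
class CombinerSketch {

    // Map output of one MapTask: one (word, 1) record per occurrence
    static List<Map.Entry<String, Long>> mapOutput(List<String> lines) {
        List<Map.Entry<String, Long>> out = new ArrayList<>();
        for (String line : lines) {
            for (String word : line.split(" ")) {
                if (!word.isEmpty()) {
                    out.add(new AbstractMap.SimpleEntry<String, Long>(word, 1L));
                }
            }
        }
        return out;
    }

    // Combiner: the reducer's summing logic, applied only to this MapTask's output
    static TreeMap<String, Long> combine(List<Map.Entry<String, Long>> records) {
        TreeMap<String, Long> combined = new TreeMap<>();
        for (Map.Entry<String, Long> r : records) {
            Long old = combined.get(r.getKey());
            combined.put(r.getKey(), old == null ? r.getValue() : old + r.getValue());
        }
        return combined;
    }

    public static void main(String[] args) {
        List<String> split = Arrays.asList("hello hello hello world", "hello world");
        List<Map.Entry<String, Long>> raw = mapOutput(split);
        TreeMap<String, Long> combined = combine(raw);
        System.out.println("without combiner: " + raw.size() + " records");  // 6 records
        System.out.println("with combiner: " + combined.size() + " records " + combined); // 2 records {hello=4, world=2}
    }
}
```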

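Change: add one line to `main` that registers the combiner; for word count the reducer itself can serve as the combiner: `job.setCombinerClass(MyReducer.class);`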

```
hadoop jar /home/hadoop/lib/hadoop-train-1.0.jar \
  com.imooc.hadoop.mapreduce.CombinerApp \
  hdfs://hadoop000:8020/hello.txt \
  hdfs://hadoop000:8020/output/wc
```

Use cases:

- Sums and counts: suitable

- Averages: not suitable
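Why averages break: Hadoop may run the combiner zero, one, or several times, so the reducer must produce the same answer whether or not partial results were combined. An average of averages fails that test whenever MapTasks see different numbers of values. The sketch below (hypothetical class and sample values) shows the problem, plus one common workaround: have the combiner emit `(sum, count)` pairs and divide only in the reducer.

```java
// Why an "average" reducer cannot be reused as a combiner, and a (sum, count) fix.
class AverageCombinerPitfall {

    static double average(double... xs) {
        double sum = 0;
        for (double x : xs) {
            sum += x;
        }
        return sum / xs.length;
    }

    // Combiner-safe partial result: {sum, count}
    static double[] partial(double... xs) {
        double sum = 0;
        for (double x : xs) {
            sum += x;
        }
        return new double[] {sum, xs.length};
    }

    // Reducer side: merge partial sums and counts, then divide once
    static double mergePartials(double[]... partials) {
        double sum = 0;
        double count = 0;
        for (double[] p : partials) {
            sum += p[0];
            count += p[1];
        }
        return sum / count;
    }

    public static void main(String[] args) {
        double[] task1 = {1, 2, 3}; // values seen by MapTask 1
        double[] task2 = {10};      // values seen by MapTask 2
        System.out.println(average(1, 2, 3, 10));                          // 4.0 (correct)
        System.out.println(average(average(task1), average(task2)));       // 6.0 (wrong)
        System.out.println(mergePartials(partial(task1), partial(task2))); // 4.0 (correct)
    }
}
```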

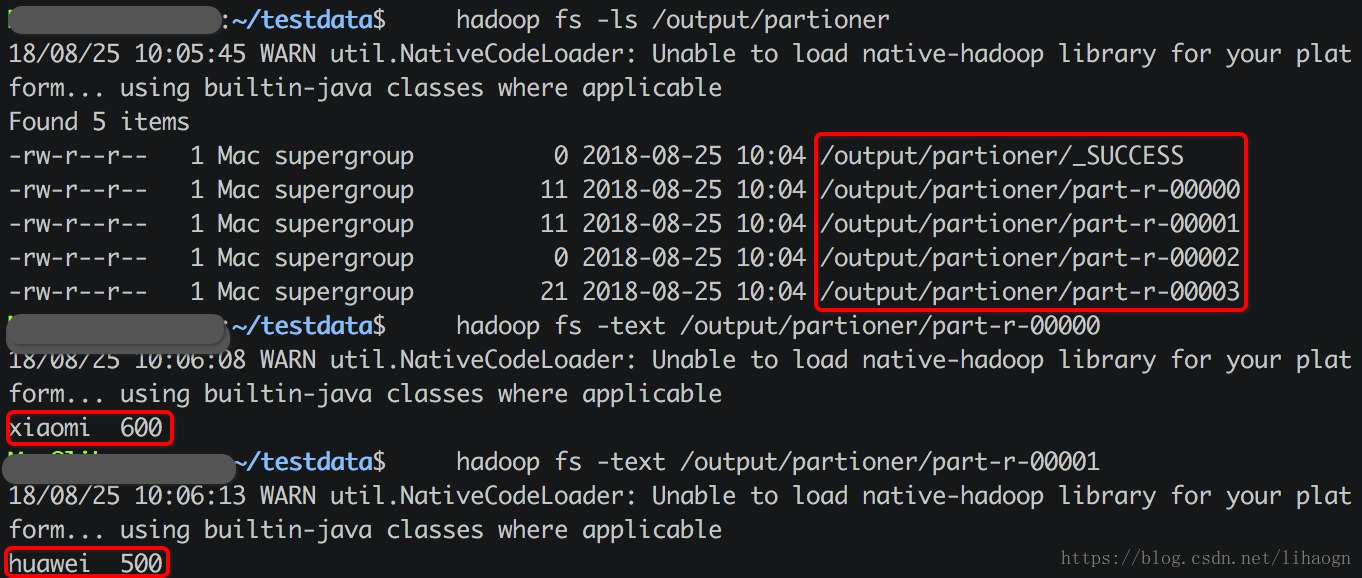

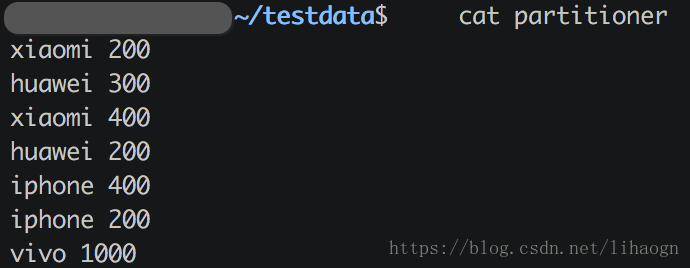

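4.3 MapReduce Programming: Partitioner

1) The Partitioner decides which ReduceTask processes each record output by a MapTask

2) Default implementation: the hash value of the key modulo the number of reduce tasks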
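The default `HashPartitioner` computes `(key.hashCode() & Integer.MAX_VALUE) % numReduceTasks`; masking with `Integer.MAX_VALUE` clears the sign bit so a negative hash code cannot produce a negative partition number. A pure-JDK sketch of that formula follows (class name hypothetical). It runs on `String` keys, and Hadoop's `Text.hashCode()` is computed differently from `String.hashCode()`, so the reducer numbers it prints will not match a real job.

```java
// Same formula as Hadoop's default HashPartitioner, applied to plain Java objects.
class HashPartitionSketch {

    // The mask clears the sign bit, so the result is always in [0, numReduceTasks)
    static int getPartition(Object key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        for (String k : new String[] {"xiaomi", "huawei", "iphone7", "other"}) {
            System.out.println(k + " -> reducer " + getPartition(k, 4));
        }
        // Integer.hashCode() is the value itself: -5 still lands in a valid partition
        System.out.println(getPartition(-5, 4)); // 3
    }
}
```

The key property is that equal keys always hash to the same reducer, which is what lets each reducer see every value for its keys.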

Partitioner

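1. Prepare the data: each line must be `<key> <number>` separated by a single space, because the mapper below uses `words[0]` as the key and parses `words[1]` as a long.

2. Modify the code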

```java
package com.imooc.hadoop.mapreduce;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Partitioner;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class ParititonerApp {

    /**
     * Map: reads the input file; each line is "<key> <number>"
     */
    public static class MyMapper extends Mapper<LongWritable, Text, Text, LongWritable> {

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // Each line of input received
            String line = value.toString();
            // Split on the given delimiter
            String[] words = line.split(" ");
            // Skip malformed lines instead of failing the task on words[1]
            if (words.length < 2) {
                return;
            }
            context.write(new Text(words[0]), new LongWritable(Long.parseLong(words[1])));
        }
    }

    /**
     * Reduce: aggregation step
     */
    public static class MyReducer extends Reducer<Text, LongWritable, Text, LongWritable> {

        @Override
        protected void reduce(Text key, Iterable<LongWritable> values, Context context) throws IOException, InterruptedException {
            long sum = 0;
            for (LongWritable value : values) {
                // Add up the values of this key
                sum += value.get();
            }
            // Emit the final total
            context.write(key, new LongWritable(sum));
        }
    }

    /**
     * Partitioner: fixed reducer per brand; every other key goes to partition 3
     */
    public static class MyPartitioner extends Partitioner<Text, LongWritable> {

        @Override
        public int getPartition(Text key, LongWritable value, int numPartitions) {
            if (key.toString().equals("xiaomi")) {
                return 0;
            }
            if (key.toString().equals("huawei")) {
                return 1;
            }
            if (key.toString().equals("iphone7")) {
                return 2;
            }
            return 3;
        }
    }

    /**
     * Driver: holds all the configuration of the MapReduce job
     */
    public static void main(String[] args) throws Exception {
        // Create the Configuration
        Configuration configuration = new Configuration();

        // Delete the output directory if it already exists
        Path outputPath = new Path(args[1]);
        FileSystem fileSystem = FileSystem.get(configuration);
        if (fileSystem.exists(outputPath)) {
            fileSystem.delete(outputPath, true);
            System.out.println("output path exists and has been deleted");
        }

        // Create the Job
        Job job = Job.getInstance(configuration, "wordcount");
        // Set the class used to locate the job jar
        job.setJarByClass(ParititonerApp.class);
        // Set the job's input path
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        // Map-side settings
        job.setMapperClass(MyMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(LongWritable.class);
        // Reduce-side settings
        job.setReducerClass(MyReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(LongWritable.class);
        // Set the job's partitioner
        job.setPartitionerClass(MyPartitioner.class);
        // 4 reducers, one per partition
        job.setNumReduceTasks(4);
        // Set the job's output path
        FileOutputFormat.setOutputPath(job, new Path(args[1]));
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```

3. Run

```
hadoop jar /home/hadoop/lib/hadoop-train-1.0.jar \
  com.imooc.hadoop.mapreduce.ParititonerApp \
  hdfs://hadoop000:8020/partitioner \
  hdfs://hadoop000:8020/output/partitioner
```

4. Result
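`MyPartitioner` hard-codes partition numbers 0 to 3, which is why the driver sets 4 reduce tasks. With more reduce tasks the extra ones simply receive no data; as far as I know, with 2 or 3 reduce tasks Hadoop rejects the out-of-range partition number at map time with an "Illegal partition" error. The pure-JDK sketch below (hypothetical class `BrandRouting`) expresses the same routing rule as a lookup table and checks the range explicitly, which fails with a clearer message and turns adding a brand into a one-line change.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical table-driven version of MyPartitioner's routing rule (pure JDK, not a Hadoop class).
class BrandRouting {
    private static final Map<String, Integer> FIXED = new HashMap<>();
    static {
        FIXED.put("xiaomi", 0);
        FIXED.put("huawei", 1);
        FIXED.put("iphone7", 2);
    }
    // Every key not listed above shares this partition
    private static final int OTHERS = 3;

    static int getPartition(String key, int numPartitions) {
        Integer p = FIXED.get(key);
        int partition = (p == null) ? OTHERS : p;
        // A partition number must lie in [0, numPartitions)
        if (partition >= numPartitions) {
            throw new IllegalArgumentException("partition " + partition + " needs at least "
                    + (partition + 1) + " reduce tasks, got " + numPartitions);
        }
        return partition;
    }

    public static void main(String[] args) {
        System.out.println(getPartition("huawei", 4)); // 1
        System.out.println(getPartition("other", 4));  // 3
    }
}
```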