3.1.5 CombineTextInputFormat Hands-On Example

Example: counting word occurrences

- Preparation

Create an input folder in the HDFS root directory, then place four small files in it as the input data, with sizes of 1.5 MB, 35 MB, 5.5 MB, and 6.5 MB.

- Code

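// WordCountMapper.java
import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

// Emits (word, 1) for every whitespace-separated token in an input line.
public class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    // Reused across map() calls to avoid allocating new objects per record.
    private Text mapOutputKey = new Text();
    private IntWritable mapOutputValue = new IntWritable();

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        String lineValue = value.toString();
        StringTokenizer st = new StringTokenizer(lineValue);
        while (st.hasMoreTokens()) {
            String word = st.nextToken();
            mapOutputKey.set(word);
            mapOutputValue.set(1);
            context.write(mapOutputKey, mapOutputValue);
        }
    }
}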

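// WordCountReducer.java
import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

// Sums the counts emitted by the mapper for each word.
public class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

    // Reused across reduce() calls.
    private IntWritable outputValue = new IntWritable();

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
        int sum = 0;
        for (IntWritable value : values) {
            sum += value.get();
        }
        outputValue.set(sum);
        context.write(key, outputValue);
    }
}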
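The mapper and reducer above can be checked without a cluster. The following is a minimal local sketch, not part of the original job: the class name LocalWordCountSketch and the method countWords are hypothetical, and a TreeMap stands in for the shuffle phase that groups and sorts keys before the reducer runs.

```java
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical helper: reproduces the map + reduce logic in plain Java.
final class LocalWordCountSketch {

    // Tokenizes each line like WordCountMapper and sums per word like WordCountReducer.
    static Map<String, Integer> countWords(String... lines) {
        // TreeMap keeps keys sorted, loosely mimicking the sorted reducer input.
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            StringTokenizer st = new StringTokenizer(line);
            while (st.hasMoreTokens()) {
                // Equivalent to emitting (word, 1) and adding it to the running sum.
                counts.merge(st.nextToken(), 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        // prints {hadoop=1, hello=2, world=1}
        System.out.println(countWords("hello hadoop", "hello world"));
    }
}
```

Running the same two lines through the real job should give the same word totals, since StringTokenizer splits on whitespace in both cases.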

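// WordCountDriver.java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.CombineTextInputFormat;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class WordCountDriver {

    public static void main(String[] args) throws Exception {
        // Hard-coded HDFS paths for this demo; this overrides any command-line arguments.
        args = new String[]{
            "/input/",
            "/output/"
        };

        Configuration cfg = new Configuration();
        Job job = Job.getInstance(cfg, WordCountDriver.class.getSimpleName());
        job.setJarByClass(WordCountDriver.class);

        // Combine small files into larger splits instead of one split per file.
        job.setInputFormatClass(CombineTextInputFormat.class);
        // Maximum split size: 20 MB.
        CombineTextInputFormat.setMaxInputSplitSize(job, 20 * 1024 * 1024);

        job.setMapperClass(WordCountMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);

        job.setReducerClass(WordCountReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        FileInputFormat.addInputPath(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Submit the job and wait; exit with 0 on success, 1 on failure.
        boolean isSuccess = job.waitForCompletion(true);
        int status = isSuccess ? 0 : 1;
        System.exit(status);
    }
}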
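To get a feel for how a 20 MB maximum split size groups the four input files, the split planning can be approximated in plain Java. This is a simplified sketch under stated assumptions, not Hadoop's actual implementation: the names CombineSplitSketch, virtualBlocks, and planSplits are hypothetical, every file is assumed to fit in a single HDFS block, all blocks are assumed to sit on one node, and files are assumed to be processed in the listed order. On a real cluster, block placement and ordering can change the grouping.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of CombineTextInputFormat split planning (sizes in bytes).
final class CombineSplitSketch {

    // Step 1: cut one file into chunks no larger than maxSize.
    static List<Long> virtualBlocks(long fileSize, long maxSize) {
        List<Long> blocks = new ArrayList<>();
        long left = fileSize;
        while (left > 0) {
            long len;
            if (left > maxSize && left < 2 * maxSize) {
                // Between max and 2*max: halve it to avoid a very small tail chunk.
                len = left / 2;
            } else {
                len = Math.min(maxSize, left);
            }
            blocks.add(len);
            left -= len;
        }
        return blocks;
    }

    // Step 2: accumulate chunks in order; close a split once it reaches maxSize.
    static List<Long> planSplits(long[] fileSizes, long maxSize) {
        List<Long> splits = new ArrayList<>();
        long current = 0;
        for (long size : fileSizes) {
            for (long block : virtualBlocks(size, maxSize)) {
                current += block;
                if (current >= maxSize) {
                    splits.add(current);
                    current = 0;
                }
            }
        }
        if (current > 0) {
            splits.add(current); // leftover chunks form the final split
        }
        return splits;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        // 1.5 MB, 35 MB, 5.5 MB, 6.5 MB, as in the preparation step.
        long[] files = {3 * mb / 2, 35 * mb, 11 * mb / 2, 13 * mb / 2};
        List<Long> splits = planSplits(files, 20 * mb);
        // prints: 2 splits: [38273024, 12582912]
        System.out.println(splits.size() + " splits: " + splits);
    }
}
```

Under this model the 35 MB file is halved into two 17.5 MB chunks, and the five chunks are grouped into two splits. The actual number for a given run appears in the job submission log as "number of splits".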

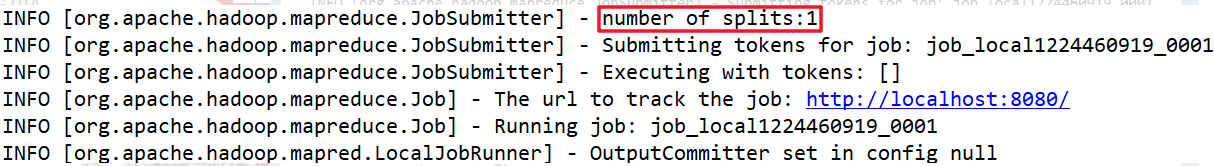

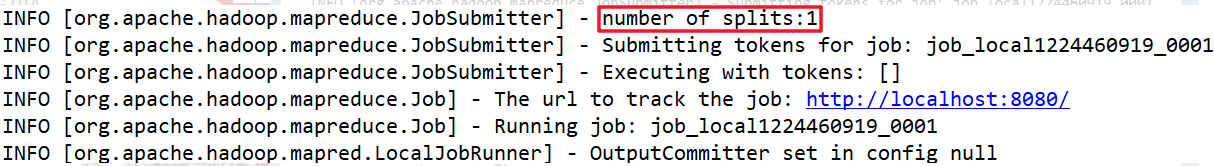

- Results