Source code:

```python
# Excerpt from TensorFlow 1.x's machine-generated gen_nn_ops.py. The module-level
# names used below (_context, _execute, _core, _op_def_lib, _pywrap_tensorflow,
# _six, conv2d_eager_fallback) are defined elsewhere in that file.
def conv2d(input, filter, strides, padding, use_cudnn_on_gpu=True, data_format="NHWC", dilations=[1, 1, 1, 1], name=None):
    r"""Computes a 2-D convolution given 4-D `input` and `filter` tensors.

    Given an input tensor of shape `[batch, in_height, in_width, in_channels]`
    and a filter / kernel tensor of shape
    `[filter_height, filter_width, in_channels, out_channels]`, this op
    performs the following:

    1. Flattens the filter to a 2-D matrix with shape
       `[filter_height * filter_width * in_channels, out_channels]`.
    2. Extracts image patches from the input tensor to form a *virtual*
       tensor of shape `[batch, out_height, out_width,
       filter_height * filter_width * in_channels]`.
    3. For each patch, right-multiplies the filter matrix and the image patch
       vector.

    In detail, with the default NHWC format,

        output[b, i, j, k] =
            sum_{di, dj, q} input[b, strides[1] * i + di, strides[2] * j + dj, q] *
                            filter[di, dj, q, k]

    Must have `strides[0] = strides[3] = 1`. For the most common case of the same
    horizontal and vertical strides, `strides = [1, stride, stride, 1]`.

    Args:
      input: A `Tensor`. Must be one of the following types: `half`, `bfloat16`, `float32`, `float64`.
        A 4-D tensor. The dimension order is interpreted according to the value
        of `data_format`, see below for details.
      filter: A `Tensor`. Must have the same type as `input`.
        A 4-D tensor of shape
        `[filter_height, filter_width, in_channels, out_channels]`
      strides: A list of `ints`.
        1-D tensor of length 4. The stride of the sliding window for each
        dimension of `input`. The dimension order is determined by the value of
        `data_format`, see below for details.
      padding: A `string` from: `"SAME", "VALID"`.
        The type of padding algorithm to use.
      use_cudnn_on_gpu: An optional `bool`. Defaults to `True`.
      data_format: An optional `string` from: `"NHWC", "NCHW"`. Defaults to `"NHWC"`.
        Specify the data format of the input and output data. With the
        default format "NHWC", the data is stored in the order of:
            [batch, height, width, channels].
        Alternatively, the format could be "NCHW", the data storage order of:
            [batch, channels, height, width].
      dilations: An optional list of `ints`. Defaults to `[1, 1, 1, 1]`.
        1-D tensor of length 4. The dilation factor for each dimension of
        `input`. If set to k > 1, there will be k-1 skipped cells between each
        filter element on that dimension. The dimension order is determined by the
        value of `data_format`, see above for details. Dilations in the batch and
        depth dimensions must be 1.
      name: A name for the operation (optional).

    Returns:
      A `Tensor`. Has the same type as `input`.
    """
    _ctx = _context._context
    if _ctx is None or not _ctx._eager_context.is_eager:
        # Graph mode: validate and normalize the attributes, then add a Conv2D node.
        if not isinstance(strides, (list, tuple)):
            raise TypeError(
                "Expected list for 'strides' argument to "
                "'conv2d' Op, not %r." % strides)
        strides = [_execute.make_int(_i, "strides") for _i in strides]
        padding = _execute.make_str(padding, "padding")
        if use_cudnn_on_gpu is None:
            use_cudnn_on_gpu = True
        use_cudnn_on_gpu = _execute.make_bool(use_cudnn_on_gpu, "use_cudnn_on_gpu")
        if data_format is None:
            data_format = "NHWC"
        data_format = _execute.make_str(data_format, "data_format")
        if dilations is None:
            dilations = [1, 1, 1, 1]
        if not isinstance(dilations, (list, tuple)):
            raise TypeError(
                "Expected list for 'dilations' argument to "
                "'conv2d' Op, not %r." % dilations)
        dilations = [_execute.make_int(_i, "dilations") for _i in dilations]
        _, _, _op = _op_def_lib._apply_op_helper(
            "Conv2D", input=input, filter=filter, strides=strides,
            padding=padding, use_cudnn_on_gpu=use_cudnn_on_gpu,
            data_format=data_format, dilations=dilations, name=name)
        _result = _op.outputs[:]
        _inputs_flat = _op.inputs
        _attrs = ("T", _op.get_attr("T"), "strides", _op.get_attr("strides"),
                  "use_cudnn_on_gpu", _op.get_attr("use_cudnn_on_gpu"), "padding",
                  _op.get_attr("padding"), "data_format",
                  _op.get_attr("data_format"), "dilations",
                  _op.get_attr("dilations"))
        # Notify any active gradient tape about this op.
        _execute.record_gradient(
            "Conv2D", _inputs_flat, _attrs, _result, name)
        _result, = _result  # Conv2D has exactly one output.
        return _result
    else:
        # Eager mode: try the C++ fast path first.
        try:
            _result = _pywrap_tensorflow.TFE_Py_FastPathExecute(
                _ctx._context_handle, _ctx._eager_context.device_name, "Conv2D", name,
                _ctx._post_execution_callbacks, input, filter, "strides", strides,
                "use_cudnn_on_gpu", use_cudnn_on_gpu, "padding", padding,
                "data_format", data_format, "dilations", dilations)
            return _result
        except _core._FallbackException:
            # Fast path not applicable: fall back to the slower Python eager path.
            return conv2d_eager_fallback(
                input, filter, strides=strides, use_cudnn_on_gpu=use_cudnn_on_gpu,
                padding=padding, data_format=data_format, dilations=dilations,
                name=name, ctx=_ctx)
        except _core._NotOkStatusException as e:
            # Convert the C-level status into a Python exception, tagging the op name.
            if name is not None:
                message = e.message + " name: " + name
            else:
                message = e.message
            _six.raise_from(_core._status_to_exception(e.code, message), None)
```
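The three steps in the docstring (flatten the filter, extract patches, right-multiply) can be sketched in NumPy as follows. This is a minimal sketch, assuming NHWC layout, `padding="VALID"`, equal vertical and horizontal strides, and no dilation; the function names `conv2d_valid_im2col` and `conv2d_direct` are hypothetical and this is not TensorFlow's own kernel. `conv2d_direct` evaluates the `output[b, i, j, k]` sum from the docstring term by term, so the two results should agree.

```python
import numpy as np


def conv2d_valid_im2col(x, w, stride=1):
    """Hypothetical sketch of the docstring's three steps (NHWC, VALID, no dilation).

    x: input of shape [batch, in_height, in_width, in_channels]
    w: filter of shape [filter_height, filter_width, in_channels, out_channels]
    """
    batch, in_h, in_w, in_c = x.shape
    f_h, f_w, f_c, out_c = w.shape
    assert f_c == in_c, "filter in_channels must match input in_channels"
    out_h = (in_h - f_h) // stride + 1  # equals ceil((W - F + 1) / S)
    out_w = (in_w - f_w) // stride + 1

    # Step 1: flatten the filter to [f_h * f_w * in_c, out_c], in (di, dj, q) order.
    w_mat = w.reshape(f_h * f_w * in_c, out_c)

    # Step 2: extract patches into a [batch, out_h, out_w, f_h * f_w * in_c] tensor,
    # flattening each patch in the same (di, dj, q) order as the filter.
    patches = np.empty((batch, out_h, out_w, f_h * f_w * in_c), dtype=x.dtype)
    for i in range(out_h):
        for j in range(out_w):
            patch = x[:, i * stride:i * stride + f_h, j * stride:j * stride + f_w, :]
            patches[:, i, j, :] = patch.reshape(batch, -1)

    # Step 3: right-multiply every patch vector by the filter matrix.
    return patches @ w_mat


def conv2d_direct(x, w, stride=1):
    """Direct evaluation of output[b, i, j, k] = sum_{di, dj, q} input[...] * filter[di, dj, q, k]."""
    batch, in_h, in_w, _ = x.shape
    f_h, f_w, _, out_c = w.shape
    out_h = (in_h - f_h) // stride + 1
    out_w = (in_w - f_w) // stride + 1
    out = np.zeros((batch, out_h, out_w, out_c), dtype=x.dtype)
    for b in range(batch):
        for i in range(out_h):
            for j in range(out_w):
                window = x[b, stride * i:stride * i + f_h, stride * j:stride * j + f_w, :]
                for k in range(out_c):
                    out[b, i, j, k] = np.sum(window * w[:, :, :, k])
    return out


rng = np.random.default_rng(0)
x = rng.standard_normal((2, 7, 7, 3))
w = rng.standard_normal((3, 3, 3, 4))
y = conv2d_valid_im2col(x, w, stride=2)
print(y.shape)                                      # (2, 3, 3, 4)
print(np.allclose(y, conv2d_direct(x, w, stride=2)))  # True
```

The im2col form turns the convolution into one matrix multiplication, at the cost of materializing a `patches` tensor roughly `filter_height * filter_width` times larger than the input; the docstring's word *virtual* suggests the real op need not build it explicitly. For NCHW data, `np.transpose(x, (0, 2, 3, 1))` converts it to NHWC before calling the sketch.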

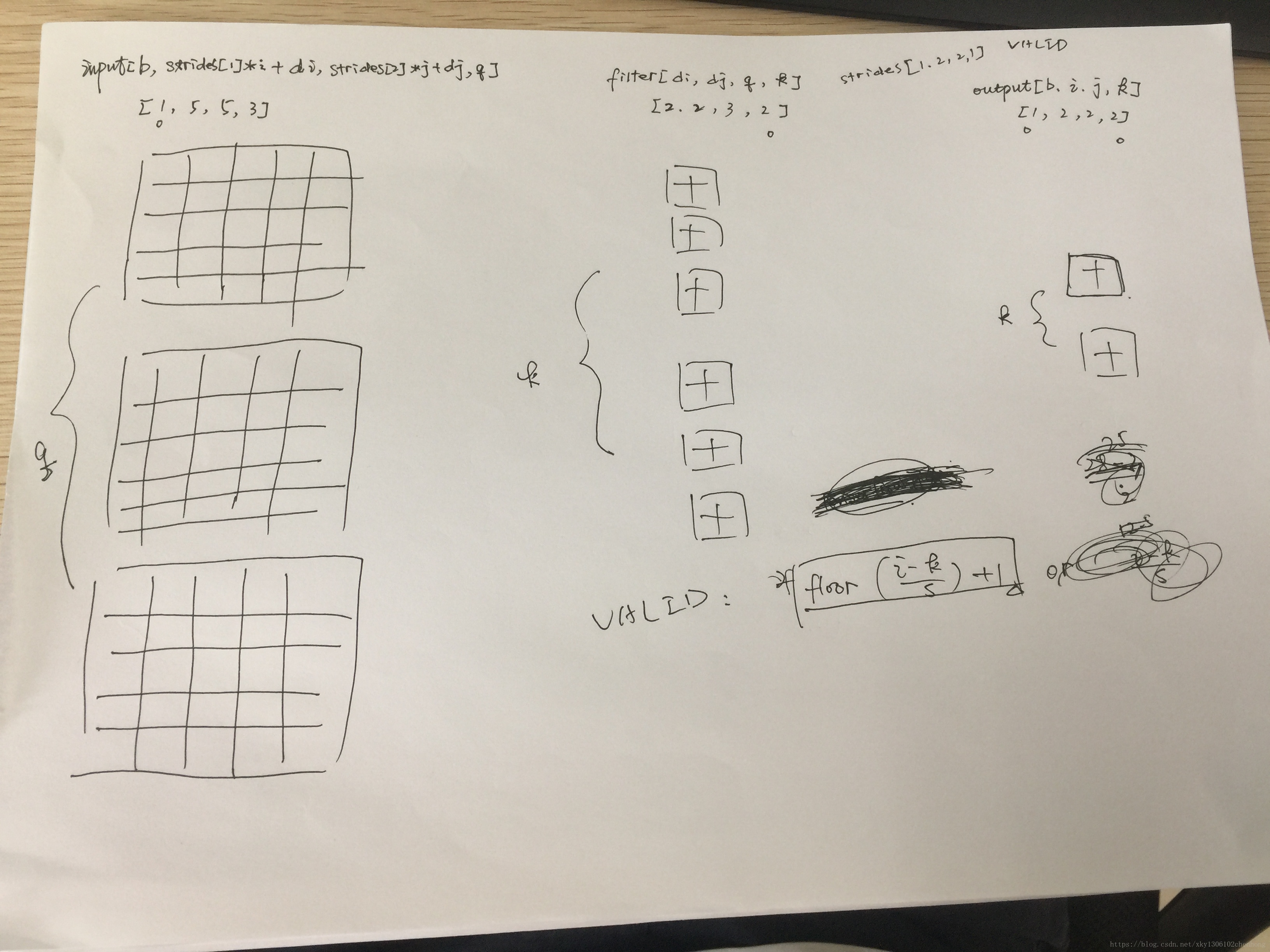

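To summarize, writing W for the input height/width, F for the filter size, and S for the stride: for "VALID", the output shape is computed as follows: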

$$\text{new\_height} = \text{new\_width} = \left\lceil \frac{W - F + 1}{S} \right\rceil$$
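As a quick check of the VALID formula, here is a small Python helper. It is a sketch: the name `valid_output_size` is hypothetical, and the `dilation` parameter is an assumed extension based on the docstring's description of `dilations`: with k−1 skipped cells between taps, a filter of size F spans (F−1)·k+1 input cells, which takes the place of F in the formula.

```python
def valid_output_size(w, f, s, dilation=1):
    """Hypothetical helper: output size along one spatial dimension for padding="VALID".

    w: input size W, f: filter size F, s: stride S, dilation: dilation rate.
    """
    f_eff = (f - 1) * dilation + 1  # span of a dilated filter (equals f when dilation == 1)
    if f_eff > w:
        raise ValueError("filter window is larger than the input")
    return -(-(w - f_eff + 1) // s)  # ceil((W - F + 1) / S) using integer arithmetic


print(valid_output_size(7, 3, 2))              # 3
print(valid_output_size(28, 5, 1))             # 24
print(valid_output_size(7, 3, 1, dilation=2))  # 3
```

The `-(-a // b)` idiom computes a ceiling with integers only, avoiding floating-point rounding for large sizes.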

For "SAME", the output shape is computed as follows:

$$\text{new\_height} = \text{new\_width} = \left\lceil \frac{W}{S} \right\rceil$$
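For "SAME" the output size does not depend on F; F only determines how much zero padding is added. The sketch below (hypothetical helper name `same_output_and_padding`) computes both, following the padding rule described in TensorFlow's convolution documentation: the total padding is max((out − 1)·S + F − W, 0), split evenly, with any odd extra cell going to the end (bottom/right).

```python
def same_output_and_padding(w, f, s):
    """Hypothetical helper: output size and (before, after) zero padding for "SAME".

    w: input size W, f: filter size F, s: stride S.
    """
    out = -(-w // s)  # ceil(W / S)
    pad_total = max((out - 1) * s + f - w, 0)
    pad_before = pad_total // 2         # top / left
    pad_after = pad_total - pad_before  # bottom / right receives the odd cell
    return out, (pad_before, pad_after)


print(same_output_and_padding(7, 3, 2))  # (4, (1, 1))
print(same_output_and_padding(6, 3, 2))  # (3, (0, 1))
print(same_output_and_padding(5, 3, 1))  # (5, (1, 1))
```

Plugging the padded size W + pad_total into the VALID formula gives back ⌈W/S⌉, which is one way to see that SAME is simply VALID applied to a zero-padded input.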