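NLTK is a well-known natural language processing library for Python. It ships with corpora and part-of-speech resources, provides classification, tokenization, and other features, has strong community support, and has many simplified wrappers built on top of it, such as TextBlob.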

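Installing on Windows

Installing Python 3.7

Download Python from http://www.python.org/downloads/. Install the x64 build so that it matches the bitness of the operating system, remember to check the pip option, and add the following paths to the Path environment variable: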

C:\Python\Python37\

C:\Python\Python37\Scripts

Note that the Scripts directory has to be added in addition to the main directory.

Installing NumPy

C:\Users\hgy413>python -m pip install numpy

Collecting numpy

Downloading https://files.pythonhosted.org/packages/8b/8a/5edea6c9759b9c569542ad4da07bba0c03ffe7cfb15d8bbe59b417e99a84/numpy-1.15.0-cp37-none-win_amd64.whl (13.5MB)

100% |████████████████████████████████| 13.5MB 2.5MB/s

Installing collected packages: numpy

The scripts conv-template.exe, f2py.exe and from-template.exe are installed in 'C:\Python\Python37\Scripts' which is not on PATH.

Consider adding this directory to PATH or, if you prefer to suppress this warning, use --no-warn-script-location.

Successfully installed numpy-1.15.0

You are using pip version 10.0.1, however version 18.0 is available.

You should consider upgrading via the 'python -m pip install --upgrade pip' command.

Verify the installation: if the import raises no exception, it succeeded.

C:\Users\hgy413>python

Python 3.7.0 (v3.7.0:1bf9cc5093, Jun 27 2018, 04:59:51) [MSC v.1914 64 bit (AMD64)] on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> import numpy

>>>

Installing NLTK

C:\Users\Administrator>python -m pip install nltk

Collecting nltk

Downloading https://files.pythonhosted.org/packages/50/09/3b1755d528ad9156ee7243d52aa5cd2b809ef053a0f31b53d92853dd653a/nltk-3.3.0.zip (1.4MB)

100% |████████████████████████████████| 1.4MB 240kB/s

Collecting six (from nltk)

Downloading https://files.pythonhosted.org/packages/67/4b/141a581104b1f6397bfa78ac9d43d8ad29a7ca43ea90a2d863fe3056e86a/six-1.11.0-py2.py3-none-any.whl

Installing collected packages: six, nltk

Running setup.py install for nltk ... done

Successfully installed nltk-3.3 six-1.11.0

You are using pip version 10.0.1, however version 18.0 is available.

You should consider upgrading via the 'python -m pip install --upgrade pip' command.

Verify the installation: if the import raises no exception, it succeeded. Then download the additional data and models.

C:\Users\Administrator>python

Python 3.7.0 (v3.7.0:1bf9cc5093, Jun 27 2018, 04:59:51) [MSC v.1914 64 bit (AMD64)] on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> import nltk

>>> nltk.download()

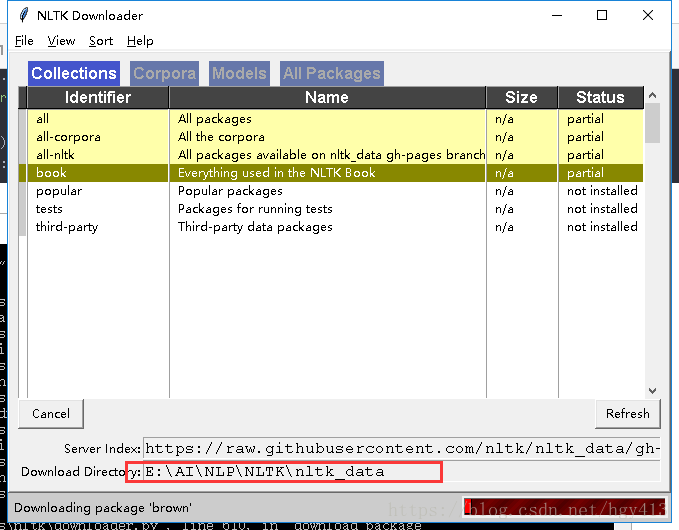

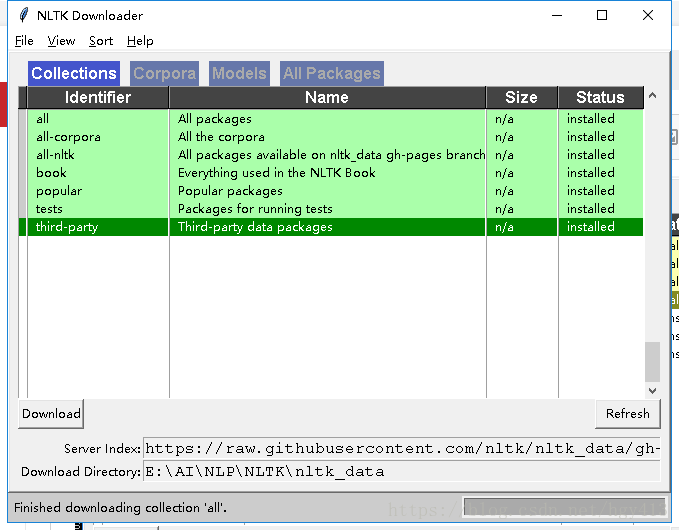

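showing info https://raw.githubusercontent.com/nltk/nltk_data/gh-pages/index.xml

A dialog pops up. Download everything, but first change the download directory to E:\AI\NLP\NLTK\nltk_data.

Select all and click Download. This takes a while, since the data is about 3.25 GB: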

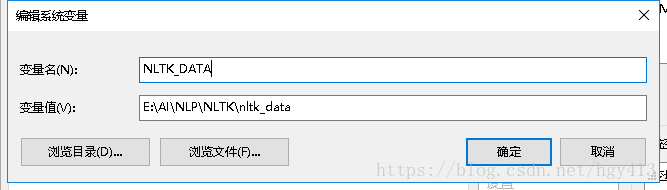

Because we changed the default download path, the NLTK_DATA environment variable has to be set:

If you did not install the data to one of the above central locations, you will need to set the NLTK_DATA environment variable to specify the location of the data.
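As an alternative to the Windows environment variable dialog, the variable can also be set from inside a script. The sketch below assumes the data lives in the directory chosen above and relies on NLTK reading NLTK_DATA when the module is first imported, so the assignment has to come before `import nltk`.

```python
import os

# Directory chosen in the download dialog above; adjust it if yours differs.
NLTK_DATA_DIR = r"E:\AI\NLP\NLTK\nltk_data"

# Applies to the current process only. It must run before `import nltk`,
# because NLTK builds its data search path while the module is imported.
os.environ["NLTK_DATA"] = NLTK_DATA_DIR

print(os.environ["NLTK_DATA"])
```

If `nltk` has already been imported, appending the directory to the `nltk.data.path` list should have the same effect for that session.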

Corpora bundled with NLTK

Corpus

C:\Users\Administrator>python

Python 3.7.0 (v3.7.0:1bf9cc5093, Jun 27 2018, 04:59:51) [MSC v.1914 64 bit (AMD64)] on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> import nltk

>>> from nltk.corpus import brown

>>> brown.categories()  # categories

['adventure', 'belles_lettres', 'editorial', 'fiction', 'government', 'hobbies', 'humor', 'learned', 'lore', 'mystery', 'news', 'religion', 'reviews', 'romance', 'science_fiction']

>>> len(brown.sents())

57340

>>> len(brown.words())

1161192

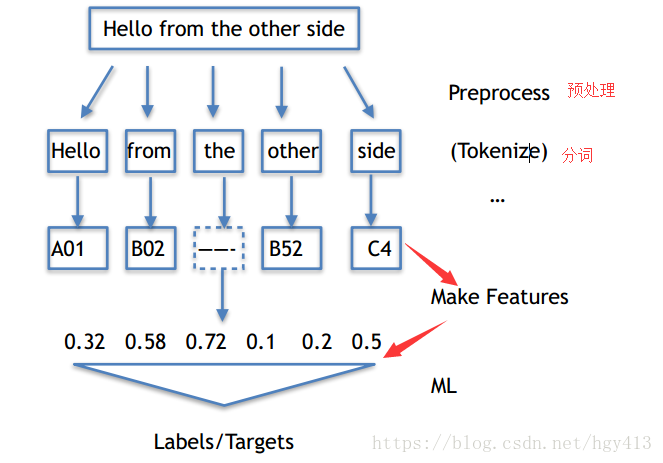

>>>

Text processing pipeline

Preprocess -----> Tokenize -----> Make Features -----> numbers that the computer can work with (ML).
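The stages of this pipeline can be sketched with the standard library alone. This is only an illustration under simple assumptions: the function names `normalize`, `simple_tokenize`, and `bag_of_words` are made up for this example, the tokenizer is a plain regular expression rather than NLTK's, and the features are simple word counts.

```python
import re
from collections import Counter

def normalize(text):
    """Preprocess: lowercase the text and collapse runs of whitespace."""
    return re.sub(r"\s+", " ", text.lower()).strip()

def simple_tokenize(text):
    """Tokenize: split into word tokens and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)

def bag_of_words(tokens, vocabulary):
    """Make features: count how often each vocabulary word occurs."""
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]

vocabulary = ["hello", "world", "nltk"]  # hypothetical vocabulary
tokens = simple_tokenize(normalize("Hello ,  world!  Hello NLTK"))
print(tokens)                            # ['hello', ',', 'world', '!', 'hello', 'nltk']
print(bag_of_words(tokens, vocabulary))  # [2, 1, 1]
```

The resulting list of counts is the kind of numeric representation that a machine learning model can take as input.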

Tokenize

Split a long sentence into small "meaningful" parts.

C:\Users\Administrator>python

Python 3.7.0 (v3.7.0:1bf9cc5093, Jun 27 2018, 04:59:51) [MSC v.1914 64 bit (AMD64)] on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> import nltk

>>> sentence = "hello ,world"

>>> tokens = nltk.word_tokenize(sentence)

>>> tokens

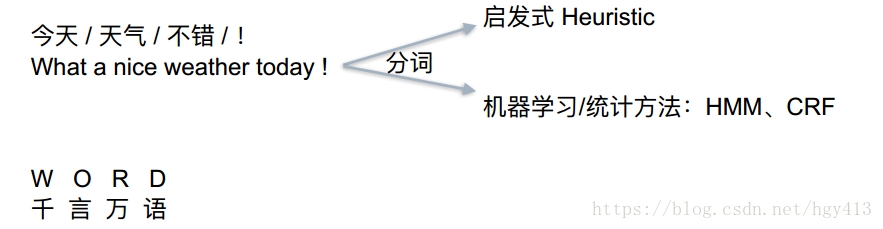

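['hello', ',', 'world']

Differences between Chinese and English NLP

Chinese text has no spaces between words, so it is harder to tokenize: the word boundaries have to be found and marked with some separator.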

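A heuristic approach scans from the start of the sentence and looks each candidate up in a dictionary to find a word:

Is 今 a word? No.

Is 今天 a word? Yes.

Is 今天天 a word? No.

...

今天 is confirmed as the longest word, so it is taken, and the search resumes from the characters after it.

Is 天 a word? No.

Is 天气 a word? Yes.

Is 天气不 a word? No.

天气 is confirmed as the longest word, so it is taken.

...

Before segmentation, a single Chinese character plays a role similar to a single letter in English.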
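The dictionary lookup described above is commonly called forward maximum matching. A minimal sketch follows, assuming a tiny hypothetical dictionary and a maximum word length of four characters. It tries the longest candidate first and shrinks it, which reaches the same longest match as growing the candidate one character at a time.

```python
def forward_max_match(sentence, dictionary, max_len=4):
    """Greedy longest-match segmentation, scanning left to right."""
    tokens = []
    i = 0
    while i < len(sentence):
        # Try the longest candidate first and shrink until a dictionary word is found.
        for size in range(min(max_len, len(sentence) - i), 0, -1):
            candidate = sentence[i:i + size]
            # A single character is accepted as a fallback so the loop always advances.
            if size == 1 or candidate in dictionary:
                tokens.append(candidate)
                i += size
                break
    return tokens

# Hypothetical toy dictionary; a real segmenter uses a large word list.
toy_dictionary = {"今天", "天气", "不错"}
print(forward_max_match("今天天气真不错", toy_dictionary))
# ['今天', '天气', '真', '不错']
```

Greedy matching is simple, but it can pick the wrong boundary when a longer dictionary word overlaps the intended split, which is one reason practical segmenters add statistics on top of the dictionary.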

Chinese word segmentation

In Python, jieba (结巴) can be used for segmentation. It is installed the same way as above:

C:\Users\Administrator>python -m pip install jieba

Collecting jieba

Downloading https://files.pythonhosted.org/packages/71/46/c6f9179f73b818d5827202ad1c4a94e371a29473b7f043b736b4dab6b8cd/jieba-0.39.zip (7.3MB)

100% |████████████████████████████████| 7.3MB 220kB/s

Installing collected packages: jieba

Running setup.py install for jieba ... done

Successfully installed jieba-0.39

You are using pip version 10.0.1, however version 18.0 is available.

You should consider upgrading via the 'python -m pip install --upgrade pip' command.

C:\Users\Administrator>python

Python 3.7.0 (v3.7.0:1bf9cc5093, Jun 27 2018, 04:59:51) [MSC v.1914 64 bit (AMD64)] on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> import jieba

>>> seg_list = jieba.cut("我来自西安交通大学", cut_all=True)  # full mode

>>> print ("Full Mode:", "/".join(seg_list))

Building prefix dict from the default dictionary ...

Loading model from cache C:\Users\ADMINI~1\AppData\Local\Temp\jieba.cache

Loading model cost 0.812 seconds.

Prefix dict has been built succesfully.

Full Mode: 我/来自/西安/西安交通/西安交通大学/交通/大学

>>> seg_list = jieba.cut("我来自西安交通大学", cut_all=False)  # accurate mode

>>> print ("Default Mode:", "/".join(seg_list))

Default Mode: 我/来自/西安交通大学

>>> seg_list = jieba.cut("他来到网易杭研大厦")  # accurate mode with new-word recognition: 杭研 is not in the dictionary but is still recognized

>>> print ("Default Mode:", "/".join(seg_list))

Default Mode: 他/来到/网易/杭研/大厦

>>> seg_list = jieba.cut_for_search("小明硕士毕业于中国科学院计算所,后在日本京都大学深造")  # search engine mode

>>> print ("Search Mode:", "/".join(seg_list))

Search Mode: 小明/硕士/毕业/于/中国/科学/学院/科学院/中国科学院/计算/计算所/,/后/在/日本/京都/大学/日本京都大学/深造

>>>

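Full mode: lists every possible word in the sentence.

Accurate mode: lists only the words that the sentence is actually divided into.

New-word recognition: also accurate mode, but it can recognize new words such as 杭研.

Search engine mode: lists every keyword that could be searched for.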
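To make the idea behind full mode concrete, the sketch below lists every substring of a sentence that appears in a dictionary. It is not jieba's implementation, only a simplified illustration; the function name and the toy dictionary are hypothetical and were chosen to mirror the full mode example above.

```python
def all_dictionary_words(sentence, dictionary):
    """List every substring of the sentence that is a dictionary word."""
    found = []
    for start in range(len(sentence)):
        for end in range(start + 1, len(sentence) + 1):
            if sentence[start:end] in dictionary:
                found.append(sentence[start:end])
    return found

# Hypothetical toy dictionary, chosen to mirror the example above.
toy_dictionary = {"我", "来自", "西安", "西安交通", "西安交通大学", "交通", "大学"}
print("/".join(all_dictionary_words("我来自西安交通大学", toy_dictionary)))
# 我/来自/西安/西安交通/西安交通大学/交通/大学
```

Accurate mode, by contrast, has to choose one non-overlapping path through these candidates, which is why it returns fewer tokens.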

Result after tokenization

['what', 'a', 'nice', 'weather', 'today']

['今天', '天气', '真', '不错']

After tokenization, Chinese and English look the same: both are lists of tokens.

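Sometimes tokenization is not that simple

On social networks, for example, there is a lot of messy text that follows neither grammar nor normal conventions: @mentions, emoticons, URLs, and #hashtags.

Tokenizing social network text

An example: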

>>> import nltk

>>> from nltk.tokenize import word_tokenize

>>> tweet='RT @angelababy: love you baby! :D http://ah.love #168.cm'

>>> print(word_tokenize(tweet))

['RT', '@', 'angelababy', ':', 'love', 'you', 'baby', '!', ':', 'D', 'http', ':', '//ah.love', '#', '168.cm']

>>>

As you can see, this split is rather messy.

A regular-expression-based Python script can handle it:

import re

# A raw string keeps backslashes intact; triple quotes let the pattern span several lines.
emoticons_str = r"""
    (?:
        [:=;]                  # eyes
        [oO\-]?                # nose (optional)
        [D\)\]\(\]/\\OpP]      # mouth
    )"""

regex_str = [
    emoticons_str,
    r'<[^>]+>',  # HTML tags
    r'(?:@[\w_]+)',  # @mentions
    r"(?:\#+[\w_]+[\w\'_\-]*[\w_]+)",  # hashtags
    r'http[s]?://(?:[a-z]|[0-9]|[$-_@.&+]|[!*\(\),]|(?:%[0-9a-f][0-9a-f]))+',  # URLs
    r'(?:(?:\d+,?)+(?:\.?\d+)?)',  # numbers
    r"(?:[a-z][a-z'\-_]+[a-z])",  # words containing - and '
    r'(?:[\w_]+)',  # other words
    r'(?:\S)'  # anything else
]

# re.VERBOSE ignores whitespace and comments inside the patterns.
tokens_re = re.compile(r'(' + '|'.join(regex_str) + ')', re.VERBOSE | re.IGNORECASE)
emoticon_re = re.compile(r'^' + emoticons_str + '$', re.VERBOSE | re.IGNORECASE)

def tokenize(s):
    return tokens_re.findall(s)

def preprocess(s, lowercase=False):
    tokens = tokenize(s)
    if lowercase:
        # Leave emoticons such as :D untouched; lowercase everything else.
        tokens = [token if emoticon_re.search(token) else token.lower() for token in tokens]
    return tokens

tweet = 'RT @angelababy: love you baby! :D http://ah.love #168cm'
print(preprocess(tweet))

The output is:

['RT', '@angelababy', ':', 'love', 'you', 'baby', '!', ':D', 'http://ah.love', '#168cm']

The many forms of words

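Inflection: walk => walking => walked

Does not change the part of speech.

Derivation: nation (noun) => national (adjective) => nationalize (verb)

Changes the part of speech.

Word form normalization

Stemming: generally, chop off the inflectional endings that do not change the part of speech.

walking, minus ing = walk

walked, minus ed = walk

Lemmatization: reduce the various inflected forms of a word to one canonical form.

went, lemmatized = go

are, lemmatized = be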
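The difference between the two approaches can be shown with a toy example. The sketch below is not the Porter algorithm or the WordNet lemmatizer; `toy_stem`, `toy_lemmatize`, and the `IRREGULAR_FORMS` table are hypothetical names used only to contrast suffix stripping with dictionary lookup.

```python
def toy_stem(word, suffixes=("ing", "ed", "s")):
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in suffixes:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[:-len(suffix)]
    return word

# Hypothetical lookup table for irregular forms.
IRREGULAR_FORMS = {"went": "go", "are": "be"}

def toy_lemmatize(word):
    """Look up irregular forms first, then fall back to suffix stripping."""
    return IRREGULAR_FORMS.get(word, toy_stem(word))

for word in ["walking", "walked", "went", "are"]:
    print(word, toy_stem(word), toy_lemmatize(word))
# walking walk walk
# walked walk walk
# went went go
# are are be
```

Suffix stripping cannot recover "go" from "went", because the two share no stem. That gap is what a lemmatizer's dictionary fills.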

Stemming with NLTK

NLTK provides several stemmers; SnowballStemmer is usually good enough.

>>> from nltk.stem.porter import PorterStemmer

>>> porter_stemmer = PorterStemmer()

>>> porter_stemmer.stem('maximum')

'maximum'

>>> porter_stemmer.stem('presumably')

'presum'

>>> porter_stemmer.stem('multiply')

'multipli'

>>> porter_stemmer.stem('provis')

'provi'

>>> from nltk.stem import SnowballStemmer

>>> snowball_stemmer = SnowballStemmer("english")

>>> snowball_stemmer.stem('maximum')

'maximum'

>>> snowball_stemmer.stem('presumably')

'presum'

>>> from nltk.stem.lancaster import LancasterStemmer

>>> lancaster_stemmer = LancasterStemmer()

>>> lancaster_stemmer.stem('maximum')

'maxim'

>>> lancaster_stemmer.stem('presumably')

'presum'

Lemmatization with NLTK

The usage is similar:

>>> from nltk.stem import WordNetLemmatizer

>>> wordnet_lemmatizer = WordNetLemmatizer()

>>> wordnet_lemmatizer.lemmatize("dogs")

'dog'

>>> wordnet_lemmatizer.lemmatize("churches")

'church'

>>> wordnet_lemmatizer.lemmatize("went")

'went'

>>> wordnet_lemmatizer.lemmatize("abaci")

'abacus'

>>>