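I finally have a bit of free time to sit down and read the source code properly.

My C++ is only average, and Wei Liu's code doesn't contain a single blank line, so reading it was a real headache.

I'll start with prior_box, then multibox_loss, then annotated_data_layer if I have time, and finally give an overview of the whole network. I'll paste the code directly, starting with the hpp.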

```cpp
#ifndef CAFFE_PRIORBOX_LAYER_HPP_
#define CAFFE_PRIORBOX_LAYER_HPP_

#include <vector>

#include "caffe/blob.hpp"
#include "caffe/layer.hpp"
#include "caffe/proto/caffe.pb.h"

namespace caffe {

/**
 * @brief Generate the prior boxes of designated sizes and aspect ratios across
 *        all dimensions @f$ (H \times W) @f$.
 *
 * Intended for use with MultiBox detection method to generate prior (template).
 *
 * NOTE: does not implement Backwards operation.
 */
template <typename Dtype>
class PriorBoxLayer : public Layer<Dtype> {
 public:
  /**
   * @param param provides PriorBoxParameter prior_box_param,
   *     with PriorBoxLayer options:
   *   - min_size (\b minimum box size in pixels. can be multiple. required!).
   *   - max_size (\b maximum box size in pixels. can be ignored or same as the
   *     # of min_size.).
   *   - aspect_ratio (\b optional aspect ratios of the boxes. can be multiple).
   *   - flip (\b optional bool, default true).
   *     if set, flip the aspect ratio.
   */
  explicit PriorBoxLayer(const LayerParameter& param)
      : Layer<Dtype>(param) {}
  virtual void LayerSetUp(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);
  virtual void Reshape(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);

  virtual inline const char* type() const { return "PriorBox"; }
  // bottom_size = 2. Note: Caffe's base Layer declares ExactNumBottomBlobs(),
  // so this differently named method overrides nothing and the count of 2 is
  // not actually enforced.
  virtual inline int ExactBottomBlobs() const { return 2; }
  virtual inline int ExactNumTopBlobs() const { return 1; }  // top_size = 1

 protected:
  /**
   * @brief Generates prior boxes for a layer with specified parameters.
   *
   * @param bottom input Blob vector (at least 2)
   *   -# @f$ (N \times C \times H_i \times W_i) @f$
   *      the input layer @f$ x_i @f$
   *   -# @f$ (N \times C \times H_0 \times W_0) @f$
   *      the data layer @f$ x_0 @f$
   * @param top output Blob vector (length 1)
   *   -# @f$ (N \times 2 \times K*4) @f$ where @f$ K @f$ is the prior numbers
   *   By default, a box of aspect ratio 1 and min_size and a box of aspect
   *   ratio 1 and sqrt(min_size * max_size) are created.
   */
  virtual void Forward_cpu(const vector<Blob<Dtype>*>& bottom,
      const vector<Blob<Dtype>*>& top);
  /// @brief Not implemented
  virtual void Backward_cpu(const vector<Blob<Dtype>*>& top,
      const vector<bool>& propagate_down, const vector<Blob<Dtype>*>& bottom) {
    return;
  }  // Backward_cpu simply returns

  vector<float> min_sizes_;
  vector<float> max_sizes_;
  vector<float> aspect_ratios_;
  bool flip_;
  int num_priors_;  // number of prior boxes generated at each feature-map location
  bool clip_;
  vector<float> variance_;
  int img_w_;
  int img_h_;
  float step_w_;
  float step_h_;
  float offset_;
};

}  // namespace caffe

#endif  // CAFFE_PRIORBOX_LAYER_HPP_
```

There isn't much in the hpp, and Backward simply returns. Here is the cpp file:

#include <algorithm>

#include <functional>

#include <utility>

#include <vector>

#include "caffe/layers/prior_box_layer.hpp"

//以fc7为例,参数设置如下

//prior_box_param {

// min_size: 60.0

// max_size: 111.0

// aspect_ratio: 2

// aspect_ratio: 3

// flip: true

// clip: false

// variance: 0.1

// variance: 0.1

// variance: 0.2

// variance: 0.2

// step: 16

// offset: 0.5

//}

namespace caffe {

template <typename Dtype>

void PriorBoxLayer<Dtype>::LayerSetUp(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

const PriorBoxParameter& prior_box_param =

this->layer_param_.prior_box_param();

CHECK_GT(prior_box_param.min_size_size(), 0) << "must provide min_size."; //必须要有min_size

for (int i = 0; i < prior_box_param.min_size_size(); ++i) {

min_sizes_.push_back(prior_box_param.min_size(i));

CHECK_GT(min_sizes_.back(), 0) << "min_size must be positive.";

}

aspect_ratios_.clear();

aspect_ratios_.push_back(1.); //先把1压进aspect_ratios,由min_size * min_size确定的正方形prior box

flip_ = prior_box_param.flip();

// 接下来这个for的作用是将prior_box_param.aspect_ratio不重复的压入aspect_ratios.若flip = true,把其倒数也压进去

for (int i = 0; i < prior_box_param.aspect_ratio_size(); ++i) {

float ar = prior_box_param.aspect_ratio(i);

bool already_exist = false;

for (int j = 0; j < aspect_ratios_.size(); ++j) {

if (fabs(ar - aspect_ratios_[j]) < 1e-6) {

already_exist = true;

break;

}

}

if (!already_exist) {

aspect_ratios_.push_back(ar);

if (flip_) { //

aspect_ratios_.push_back(1./ar);

}

}

}//aspect_ratios含有1,2,1/2,3,1/3.

num_priors_ = aspect_ratios_.size() * min_sizes_.size(); // num_priors_=5

if (prior_box_param.max_size_size() > 0) {

CHECK_EQ(prior_box_param.min_size_size(), prior_box_param.max_size_size());//max_size个数和min一样

for (int i = 0; i < prior_box_param.max_size_size(); ++i) {

max_sizes_.push_back(prior_box_param.max_size(i));

CHECK_GT(max_sizes_[i], min_sizes_[i])

<< "max_size must be greater than min_size.";

num_priors_ += 1;//只有一个min和max_size,故num_priors_=6,此处的prior box是边长为sqrt(min*max)的正方形

}

}

clip_ = prior_box_param.clip();

//variance应该是4个,如果是一个,就把这个值压到variance_里面,其他情况则直接把0.1压到variance_里面

if (prior_box_param.variance_size() > 1) {

// Must and only provide 4 variance.

CHECK_EQ(prior_box_param.variance_size(), 4);

for (int i = 0; i < prior_box_param.variance_size(); ++i) {

CHECK_GT(prior_box_param.variance(i), 0);

variance_.push_back(prior_box_param.variance(i));

}

} else if (prior_box_param.variance_size() == 1) {

CHECK_GT(prior_box_param.variance(0), 0);

variance_.push_back(prior_box_param.variance(0));

} else {

// Set default to 0.1.

variance_.push_back(0.1);

}

// prototxt中一般未给定img_h,img_w和img_size,所以img_h,img_w = 0

if (prior_box_param.has_img_h() || prior_box_param.has_img_w()) {

CHECK(!prior_box_param.has_img_size())

<< "Either img_size or img_h/img_w should be specified; not both.";

img_h_ = prior_box_param.img_h();

CHECK_GT(img_h_, 0) << "img_h should be larger than 0.";

img_w_ = prior_box_param.img_w();

CHECK_GT(img_w_, 0) << "img_w should be larger than 0.";

} else if (prior_box_param.has_img_size()) {

const int img_size = prior_box_param.img_size();

CHECK_GT(img_size, 0) << "img_size should be larger than 0.";

img_h_ = img_size;

img_w_ = img_size;

} else {

img_h_ = 0;

img_w_ = 0;

}

//step赋值给step_h_和step_w_

if (prior_box_param.has_step_h() || prior_box_param.has_step_w()) {

CHECK(!prior_box_param.has_step())

<< "Either step or step_h/step_w should be specified; not both.";

step_h_ = prior_box_param.step_h();

CHECK_GT(step_h_, 0.) << "step_h should be larger than 0.";

step_w_ = prior_box_param.step_w();

CHECK_GT(step_w_, 0.) << "step_w should be larger than 0.";

} else if (prior_box_param.has_step()) {

const float step = prior_box_param.step();

CHECK_GT(step, 0) << "step should be larger than 0.";

step_h_ = step;

step_w_ = step; //step赋值给h_和w_

} else {

step_h_ = 0;

step_w_ = 0;

}

offset_ = prior_box_param.offset();

}

template <typename Dtype> //Reshape里的英文注释很重要

void PriorBoxLayer<Dtype>::Reshape(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

const int layer_width = bottom[0]->width();

const int layer_height = bottom[0]->height();

vector<int> top_shape(3, 1);

// Since all images in a batch has same height and width, we only need to

// generate one set of priors which can be shared across all images.

top_shape[0] = 1; //同一层的priors,feature mp_size,img_size,aspect_ratios都一样,和batch无关,所以为1

// 2 channels. First channel stores the mean of each prior coordinate.

// Second channel stores the variance of each prior coordinate.

top_shape[1] = 2;

//对于1个prior,不管是prior coordinate还是variance都是4,fc7层有19*19*6个prioi

top_shape[2] = layer_width * layer_height * num_priors_ * 4;

CHECK_GT(top_shape[2], 0);

top[0]->Reshape(top_shape); // blob的reshape方法

}

template <typename Dtype>

void PriorBoxLayer<Dtype>::Forward_cpu(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

const int layer_width = bottom[0]->width();

const int layer_height = bottom[0]->height();

int img_width, img_height;

if (img_h_ == 0 || img_w_ == 0) {

img_width = bottom[1]->width();// 300

img_height = bottom[1]->height();// 300

} else {

img_width = img_w_;

img_height = img_h_;

}

float step_w, step_h;

if (step_w_ == 0 || step_h_ == 0) {

step_w = static_cast<float>(img_width) / layer_width;

step_h = static_cast<float>(img_height) / layer_height;

} else {

step_w = step_w_;

step_h = step_h_;

}

Dtype* top_data = top[0]->mutable_cpu_data();

int dim = layer_height * layer_width * num_priors_ * 4; //19*19*6*4

int idx = 0;

for (int h = 0; h < layer_height; ++h) {

for (int w = 0; w < layer_width; ++w) {

float center_x = (w + offset_) * step_w;

float center_y = (h + offset_) * step_h; // feature map上的点对应于原图上的位置,offset_=0.5做到了四舍五入

float box_width, box_height;

for (int s = 0; s < min_sizes_.size(); ++s) {

int min_size_ = min_sizes_[s];

//min_size*min_size的prioi box(60*60)总会有的,需要注意一下的是idx一直在自增

// first prior: aspect_ratio = 1, size = min_size

box_width = box_height = min_size_;

// xmin

top_data[idx++] = (center_x - box_width / 2.) / img_width;

// ymin

top_data[idx++] = (center_y - box_height / 2.) / img_height;

// xmax

top_data[idx++] = (center_x + box_width / 2.) / img_width;

// ymax

top_data[idx++] = (center_y + box_height / 2.) / img_height;//私认为除以300的作用是方便处理数据,数据太大不好处理

//设置了max_size的话,再生成一个sqrt(60*111)为边长的prio box

if (max_sizes_.size() > 0) {

CHECK_EQ(min_sizes_.size(), max_sizes_.size());

int max_size_ = max_sizes_[s];

// second prior: aspect_ratio = 1, size = sqrt(min_size * max_size)

box_width = box_height = sqrt(min_size_ * max_size_);

// xmin

top_data[idx++] = (center_x - box_width / 2.) / img_width;

// ymin

top_data[idx++] = (center_y - box_height / 2.) / img_height;

// xmax

top_data[idx++] = (center_x + box_width / 2.) / img_width;

// ymax

top_data[idx++] = (center_y + box_height / 2.) / img_height;

}

// 生成aspect_ratio=2,1/2,3,1/3的四种prior box,宽为60*sqrt(aspect_ratio),高为60/sqrt(aspect_ratio)

// rest of priors

for (int r = 0; r < aspect_ratios_.size(); ++r) {

float ar = aspect_ratios_[r];

if (fabs(ar - 1.) < 1e-6) {

continue;

}

box_width = min_size_ * sqrt(ar);

box_height = min_size_ / sqrt(ar);

// xmin

top_data[idx++] = (center_x - box_width / 2.) / img_width;

// ymin

top_data[idx++] = (center_y - box_height / 2.) / img_height;

// xmax

top_data[idx++] = (center_x + box_width / 2.) / img_width;

// ymax

top_data[idx++] = (center_y + box_height / 2.) / img_height;

}

}

}

}//top_data依次存储每个prior box坐标信息,因为/300了,所以都会小于1

//clip让prior box的坐标越左界的置0,越右界置1

// clip the prior's coordidate such that it is within [0, 1]

if (clip_) {

for (int d = 0; d < dim; ++d) {

top_data[d] = std::min<Dtype>(std::max<Dtype>(top_data[d], 0.), 1.);

}

}

//至此,top_data第一个channel的dim个坐标信息已写好,接下来写第二个channel的dim个variance_信息

// set the variance.

top_data += top[0]->offset(0, 1);//调用了blob的offset函数,计算出第二个channel初始位置的偏移量,使后面使用top_data[0]就可以设置variance_

if (variance_.size() == 1) {

caffe_set<Dtype>(dim, Dtype(variance_[0]), top_data);

} else {

int count = 0;

for (int h = 0; h < layer_height; ++h) {

for (int w = 0; w < layer_width; ++w) {

for (int i = 0; i < num_priors_; ++i) {

for (int j = 0; j < 4; ++j) {

top_data[count] = variance_[j];

++count;

}

}

}

}

}

}

INSTANTIATE_CLASS(PriorBoxLayer);

REGISTER_LAYER_CLASS(PriorBox);

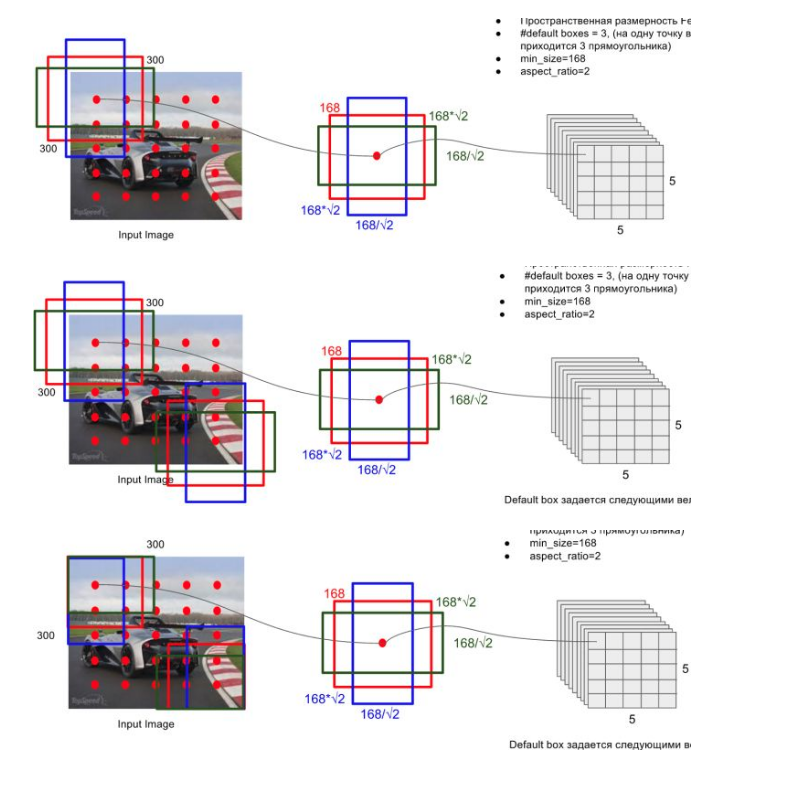

} // namespace caffe盗用知乎上的一张图来帮助理解:先将feature map上的每个点对应到300*300的img上,作为中心点.依此中心点做出num_prior个prior box,再把各个超出边界的box拉回来(前提是clip=true).
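To check my reading of Forward_cpu, here is a minimal Python sketch that reproduces the coordinate channel for one feature map. It is not the Caffe code itself. It covers the cell centers, the min_size square, the optional sqrt(min_size * max_size) square, the aspect-ratio boxes, and optional clipping. The names `prior_boxes` and `build_aspect_ratios` and their signatures are my own. Unlike the C++ code, it keeps min_size as a float instead of truncating it to an int.

```python
import math


def build_aspect_ratios(aspect_ratio, flip=True):
    # Same order as LayerSetUp: 1 first, then each new ratio (and 1/ratio if flip)
    ars = [1.0]
    for ar in aspect_ratio:
        if any(abs(ar - a) < 1e-6 for a in ars):
            continue  # skip duplicates
        ars.append(float(ar))
        if flip:
            ars.append(1.0 / ar)
    return ars


def prior_boxes(layer_w, layer_h, img_w, img_h, min_sizes, max_sizes,
                aspect_ratio, flip=True, clip=False, step=0.0, offset=0.5):
    """Return [xmin, ymin, xmax, ymax] boxes normalized by the image size."""
    ars = build_aspect_ratios(aspect_ratio, flip)
    # step == 0 means "not given": fall back to image size / feature-map size
    step_w = step if step else img_w / layer_w
    step_h = step if step else img_h / layer_h
    boxes = []
    for h in range(layer_h):
        for w in range(layer_w):
            cx = (w + offset) * step_w  # cell center in input-image pixels
            cy = (h + offset) * step_h
            for s, min_size in enumerate(min_sizes):
                sizes = [(min_size, min_size)]  # square with side min_size
                if max_sizes:
                    side = math.sqrt(min_size * max_sizes[s])
                    sizes.append((side, side))  # square with side sqrt(min*max)
                for ar in ars:
                    if abs(ar - 1.0) < 1e-6:
                        continue  # ratio 1 is already covered above
                    sizes.append((min_size * math.sqrt(ar),
                                  min_size / math.sqrt(ar)))
                for bw, bh in sizes:
                    box = [(cx - bw / 2) / img_w, (cy - bh / 2) / img_h,
                           (cx + bw / 2) / img_w, (cy + bh / 2) / img_h]
                    if clip:
                        box = [min(max(v, 0.0), 1.0) for v in box]
                    boxes.append(box)
    return boxes


# fc7 settings from the prototxt comment: 19x19 map, 300x300 input, step 16
fc7 = prior_boxes(19, 19, 300, 300, [60.0], [111.0], [2, 3], flip=True, step=16)
print(len(fc7))   # 19 * 19 * 6 = 2166 boxes
print(fc7[0][0])  # xmin of the first 60x60 box, negative because clip is off
```

The first box is centered at (8, 8), so with clip=false its left edge sits at (8 - 30) / 300, outside the image. This shows why the normalized coordinates are not guaranteed to lie in [0, 1].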

Most of the explanation is in the comments, so here is a summary:

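1. With a single min_size and max_size, num_prior = 2 × (number of distinct aspect_ratio values, with flip=true) + 1 square with side min_size + 1 square with side sqrt(min_size × max_size). For fc7 this is 2 × 2 + 1 + 1 = 6.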
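The count in item 1 can be checked with a few lines of Python that follow the LayerSetUp logic. The steps are: deduplicate the aspect ratios, optionally add their reciprocals, multiply by the number of min_size values, then add one square per max_size. This is only a sketch for checking the arithmetic. `count_priors` is a name I made up, not a Caffe function.

```python
def count_priors(min_sizes, max_sizes, aspect_ratio, flip=True):
    # Mirror LayerSetUp: aspect_ratios_ starts with 1 and duplicates are skipped
    ars = [1.0]
    for ar in aspect_ratio:
        if any(abs(ar - a) < 1e-6 for a in ars):
            continue
        ars.append(float(ar))
        if flip:
            ars.append(1.0 / ar)
    n = len(ars) * len(min_sizes)
    if max_sizes:
        # LayerSetUp CHECKs that there are as many max_size as min_size values
        if len(max_sizes) != len(min_sizes):
            raise ValueError("max_size count must equal min_size count")
        n += len(max_sizes)  # one sqrt(min_size * max_size) square each
    return n


print(count_priors([60.0], [111.0], [2, 3]))              # fc7: 2*2 + 1 + 1 = 6
print(count_priors([30.0], [60.0], [2]))                  # one ratio: 2*1 + 1 + 1 = 4
print(count_priors([60.0], [111.0], [2, 3], flip=False))  # no reciprocals: 4
```

Passing both 2 and 0.5 with flip=true does not add extra boxes, because 0.5 is already present as the reciprocal of 2 when it is checked.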

2. For img_h, img_w, step_w and the like, see caffe.proto:

```protobuf
// By default, we calculate img_height, img_width, step_x, step_y based on
// bottom[0] (feat) and bottom[1] (img). Unless these values are explicitly
// provided.
// Explicitly provide the img_size.
optional uint32 img_size = 7;
// Either img_size or img_h/img_w should be specified; not both.
optional uint32 img_h = 8;
optional uint32 img_w = 9;
// Explicitly provide the step size.
optional float step = 10;
// Either step or step_h/step_w should be specified; not both.
optional float step_h = 11;
optional float step_w = 12;
```

These parameters are optional. If they are omitted, the layer takes the image size from bottom[1] and sets step to image size / feature-map size. One more note on step: if the feature map has been downsampled n times by stride-2 layers, step = 2^n, which has the same meaning as the feature stride in Faster R-CNN.
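When img_size/img_h/img_w and step/step_h/step_w are left out, Forward_cpu falls back to the data blob's size and to image size divided by feature-map size. Below is a small Python sketch of that fallback. The function `resolve_img_and_step` is hypothetical. It only borrows the C++ members' convention that 0 means "not set". It shows how close the derived step is to 2^n for the 38×38 and 19×19 maps of SSD300.

```python
def resolve_img_and_step(layer_w, layer_h, data_w, data_h,
                         img_w=0, img_h=0, step_w=0.0, step_h=0.0):
    # 0 means "not given in the prototxt", as with img_w_/step_w_ in the C++ code
    if img_w == 0 or img_h == 0:
        img_w, img_h = data_w, data_h  # take the size of bottom[1]
    if step_w == 0 or step_h == 0:
        step_w = img_w / layer_w       # image size / feature-map size
        step_h = img_h / layer_h
    return img_w, img_h, step_w, step_h


# 38x38 feature map on a 300x300 input, nothing given explicitly
print(resolve_img_and_step(38, 38, 300, 300))  # step 300/38, about 7.89 rather than 2**3
# 19x19 feature map with step: 16 given, as in the fc7 prototxt
print(resolve_img_and_step(19, 19, 300, 300, step_w=16, step_h=16))  # (300, 300, 16, 16)
```

300 is divisible by neither 38 nor 19, so the derived step drifts slightly from 8 and 16. This is presumably why the fc7 prototxt sets step explicitly.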

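3. On variance, see the discussion on GitHub. As I understand it, dividing by the variance scales up the offset between the predicted box and the ground-truth box. This enlarges the loss and its gradient and speeds up convergence.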
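To make the role of variance concrete, here is a Python sketch of center-size encoding. As far as I understand, SSD's bbox utilities apply this encoding to a matched ground-truth box when the variance is not already encoded in the target. The offsets relative to the prior are divided by the four variances (0.1, 0.1, 0.2, 0.2 for fc7 above). The function name `encode_center_size` is my own.

```python
import math


def encode_center_size(prior, gt, variance=(0.1, 0.1, 0.2, 0.2)):
    """Encode gt against prior; both are [xmin, ymin, xmax, ymax], normalized."""
    pw, ph = prior[2] - prior[0], prior[3] - prior[1]
    pcx, pcy = (prior[0] + prior[2]) / 2, (prior[1] + prior[3]) / 2
    gw, gh = gt[2] - gt[0], gt[3] - gt[1]
    gcx, gcy = (gt[0] + gt[2]) / 2, (gt[1] + gt[3]) / 2
    return [
        (gcx - pcx) / pw / variance[0],   # center x offset, in prior widths
        (gcy - pcy) / ph / variance[1],   # center y offset, in prior heights
        math.log(gw / pw) / variance[2],  # log width ratio
        math.log(gh / ph) / variance[3],  # log height ratio
    ]


prior = [0.1, 0.1, 0.3, 0.3]
gt = [0.1, 0.1, 0.5, 0.3]  # same height, twice as wide, center shifted right
print(encode_center_size(prior, gt))                         # about [5.0, 0.0, 3.47, 0.0]
print(encode_center_size(prior, gt, variance=(1, 1, 1, 1)))  # about [0.5, 0.0, 0.69, 0.0]
```

With variance = 1, the same box gives targets of about 0.5 and 0.69. The variances scale these up by 10× and 5×, which is the amplification described above.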

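4. The PriorBox layer takes the feature map and the data layer as inputs and considers num_prior prior boxes at every feature-map location. The output shape is (1, 2, layer_height * layer_width * num_priors_ * 4), i.e. two channels. The first channel stores each prior box's position mapped back to the original image (normalized), and the second stores each prior box's variance. A prior box is essentially an anchor, except that priors are generated on feature maps at several scales and their number per location is not 9.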
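The index arithmetic behind this layout can be spelled out in Python. This is a sketch that assumes the row-major (h, w, prior, coordinate) order in which Forward_cpu writes top_data. The helper names are hypothetical.

```python
def prior_blob_shape(layer_h, layer_w, num_priors):
    # (1, 2, H * W * num_priors * 4): batch-independent, coordinates + variances
    return (1, 2, layer_h * layer_w * num_priors * 4)


def coord_index(h, w, k, c, layer_w, num_priors):
    """Flat index of coordinate c (0=xmin .. 3=ymax) of prior k at (h, w), channel 0."""
    return ((h * layer_w + w) * num_priors + k) * 4 + c


def variance_channel(dim, variance):
    # One variance value fills the whole channel (caffe_set); four repeat per box
    if len(variance) == 1:
        return [variance[0]] * dim
    return list(variance) * (dim // 4)


shape = prior_blob_shape(19, 19, 6)           # fc7
dim = shape[2]
print(shape)                                  # (1, 2, 8664)
print(coord_index(18, 18, 5, 3, 19, 6))       # last ymax: dim - 1 = 8663
print(dim + coord_index(0, 1, 0, 0, 19, 6))   # 8688, i.e. dim + 24
```

Channel 1 starts at Blob::offset(0, 1) = dim, so a prior's variance sits exactly dim entries after its coordinate.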

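5. On how min_size is chosen, the paper gives the formula

$$ s_k = s_{\min} + \frac{s_{\max} - s_{\min}}{m - 1}(k - 1), \quad k \in [1, m] $$

with $s_{\min} = 0.2$ and $s_{\max} = 0.9$ in the paper.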

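SSD uses m = 6 (six layers are used for detection). The first layer is usually set separately. The second feature map uses min_size = S1 × 300 and max_size = S2 × 300, the third uses min_size = S2 × 300 and max_size = S3 × 300, and so on.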
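Plugging the paper's defaults (s_min = 0.2, s_max = 0.9, m = 6) into the formula gives the per-layer scales below for a 300×300 input. This Python sketch only evaluates the paper's formula. `paper_scales` is a hypothetical helper, and its output is not what the config scripts compute.

```python
def paper_scales(m=6, s_min=0.2, s_max=0.9):
    # s_k = s_min + (s_max - s_min) / (m - 1) * (k - 1), for k = 1..m
    return [s_min + (s_max - s_min) / (m - 1) * (k - 1) for k in range(1, m + 1)]


scales = paper_scales()
print([round(s, 2) for s in scales])     # [0.2, 0.34, 0.48, 0.62, 0.76, 0.9]
print([round(300 * s) for s in scales])  # [60, 102, 144, 186, 228, 270]
```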

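The code implements this slightly differently. In ssd_pascal.py the denominator is m-2, and the first layer's scales are 0.1 (min) and 0.2 (max). In ssd_coco, smin = 0.15, smax = 0.9, and the denominator is also m-2. The m-2 follows from handling the first layer separately: the min scales of the remaining m-1 = 5 layers go from smin up to about smax in (m-1)-1 = m-2 equal steps. The COCO setting uses a smaller smin because COCO contains more small objects. This is worth considering when applying SSD to your own data domain.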

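```python
# ssd_pascal.py (excerpt, made runnable under Python 3)
import math

# Values assumed here for SSD300; in the script they are defined further up
min_dim = 300
mbox_source_layers = ['conv4_3', 'fc7', 'conv6_2', 'conv7_2', 'conv8_2', 'conv9_2']

min_ratio = 20
max_ratio = 90
step = int(math.floor((max_ratio - min_ratio) / (len(mbox_source_layers) - 2)))
min_sizes = []
max_sizes = []
for ratio in range(min_ratio, max_ratio + 1, step):  # xrange in the Python 2 original
    min_sizes.append(min_dim * ratio / 100.)
    max_sizes.append(min_dim * (ratio + step) / 100.)
# the first layer (conv4_3) is set separately: 0.1 and 0.2 of min_dim
min_sizes = [min_dim * 10 / 100.] + min_sizes
max_sizes = [min_dim * 20 / 100.] + max_sizes
# min_sizes: [30.0, 60.0, 111.0, 162.0, 213.0, 264.0]
# max_sizes: [60.0, 111.0, 162.0, 213.0, 264.0, 315.0]
```

Finally: the more I read SSD, the more I admire RBG (Ross Girshick). The RPN really is a milestone design.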