Preface

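As artificial intelligence keeps advancing, machine learning is becoming more and more important, and many people have started learning it. This article walks through the source code of the SSD object detector. The content comes from the Bilibili creator "霹雳吧啦Wz"; I recorded it as study notes, and discussion is welcome.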

1. Using the SSD source code

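To train on your own dataset, change three things: (1) the dataset path; (2) the number of classes, `num_classes`, which is the number of object classes + 1 (the extra 1 is the background); and (3) the class-label `.json` file. The example uses the Pascal VOC dataset, where 0 is the background and the object classes are numbered from 1.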
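A minimal sketch of how the class count and the label file relate, assuming the label file is a JSON object that maps each class name to a 1-based index. The helper `build_class_map` is hypothetical, and the project's real label file may use a different name or layout:

```python
import json

# Pascal VOC's 20 object classes; index 0 is reserved for the background
VOC_CLASSES = [
    "aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat",
    "chair", "cow", "diningtable", "dog", "horse", "motorbike", "person",
    "pottedplant", "sheep", "sofa", "train", "tvmonitor",
]


def build_class_map(class_names):
    """Hypothetical helper: map each class name to an index starting at 1."""
    return {name: idx for idx, name in enumerate(class_names, start=1)}


class_map = build_class_map(VOC_CLASSES)
num_classes = len(class_map) + 1  # +1 for the background class (index 0)

# JSON text you could save as the label file
label_json = json.dumps(class_map, indent=4)
print(num_classes)  # 21
```

For a custom dataset you would swap in your own class names; `num_classes` then follows automatically.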

2. Building the SSD network

2.1 The ResNet network

```python
import torch.nn as nn
import torch


class Bottleneck(nn.Module):
    expansion = 4

    def __init__(self, in_channel, out_channel, stride=1, downsample=None):
        super(Bottleneck, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=in_channel, out_channels=out_channel,
                               kernel_size=1, stride=1, bias=False)  # squeeze channels
        self.bn1 = nn.BatchNorm2d(out_channel)
        # -----------------------------------------
        self.conv2 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel,
                               kernel_size=3, stride=stride, bias=False, padding=1)
        self.bn2 = nn.BatchNorm2d(out_channel)
        # -----------------------------------------
        self.conv3 = nn.Conv2d(in_channels=out_channel, out_channels=out_channel * self.expansion,
                               kernel_size=1, stride=1, bias=False)  # unsqueeze channels
        self.bn3 = nn.BatchNorm2d(out_channel * self.expansion)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample

    def forward(self, x):
        identity = x
        if self.downsample is not None:
            identity = self.downsample(x)

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)

        out += identity
        out = self.relu(out)

        return out


class ResNet(nn.Module):

    def __init__(self, block, blocks_num, num_classes=1000, include_top=True):
        super(ResNet, self).__init__()
        self.include_top = include_top
        self.in_channel = 64

        self.conv1 = nn.Conv2d(3, self.in_channel, kernel_size=7, stride=2,
                               padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(self.in_channel)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, blocks_num[0])
        self.layer2 = self._make_layer(block, 128, blocks_num[1], stride=2)
        self.layer3 = self._make_layer(block, 256, blocks_num[2], stride=2)
        self.layer4 = self._make_layer(block, 512, blocks_num[3], stride=2)
        if self.include_top:
            self.avgpool = nn.AdaptiveAvgPool2d((1, 1))  # output size = (1, 1)
            self.fc = nn.Linear(512 * block.expansion, num_classes)

        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

    def _make_layer(self, block, channel, block_num, stride=1):
        downsample = None
        if stride != 1 or self.in_channel != channel * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.in_channel, channel * block.expansion, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(channel * block.expansion))

        layers = []
        layers.append(block(self.in_channel, channel, downsample=downsample, stride=stride))
        self.in_channel = channel * block.expansion

        for _ in range(1, block_num):
            layers.append(block(self.in_channel, channel))

        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        if self.include_top:
            x = self.avgpool(x)
            x = torch.flatten(x, 1)
            x = self.fc(x)

        return x


def resnet50(num_classes=1000, include_top=True):
    return ResNet(Bottleneck, [3, 4, 6, 3], num_classes=num_classes, include_top=include_top)
```

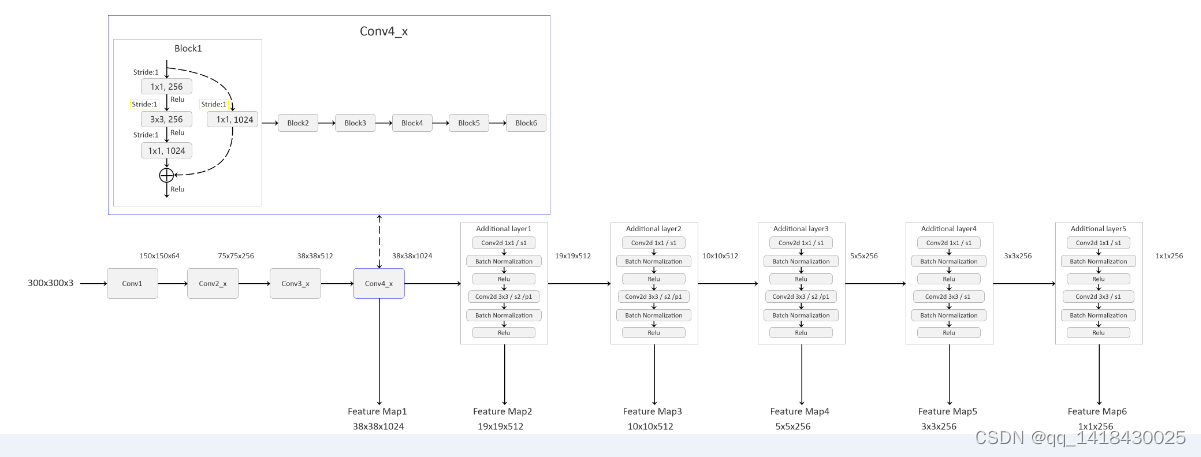

2.2 Backbone (as the network structure diagram shows, the backbone is ResNet-50)

```python
class Backbone(nn.Module):
    def __init__(self, pretrain_path=None):
        super(Backbone, self).__init__()
        net = resnet50()
        self.out_channels = [1024, 512, 512, 256, 256, 256]  # channels of each prediction feature map

        if pretrain_path is not None:  # load pretrained weights
            net.load_state_dict(torch.load(pretrain_path))

        # Feature extractor from conv1 up to conv4_x (layer3): net.children() indices 0-6.
        # The slice [:7] is half-open, so layer4, avgpool and fc are dropped.
        self.feature_extractor = nn.Sequential(*list(net.children())[:7])

        conv4_block1 = self.feature_extractor[-1][0]  # first Bottleneck block of conv4_x

        # Change the stride of conv4_block1 from 2 to 1 so that a 300x300 input
        # still yields a 38x38 feature map at this stage
        conv4_block1.conv1.stride = (1, 1)
        conv4_block1.conv2.stride = (1, 1)
        conv4_block1.downsample[0].stride = (1, 1)

    def forward(self, x):
        x = self.feature_extractor(x)
        return x
```
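To see why the stride change matters, the spatial size after each convolution or pooling layer can be traced with the usual formula floor((size + 2*padding - kernel) / stride) + 1. The plain-Python sketch below (the helper `conv_out` is hypothetical; it only mirrors the size arithmetic, not the PyTorch layers) follows a 300x300 input through the layers kept in `feature_extractor`:

```python
def conv_out(size, kernel, stride, padding):
    """Output size of a conv/pool layer: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


size = 300
size = conv_out(size, kernel=7, stride=2, padding=3)  # conv1 -> 150
size = conv_out(size, kernel=3, stride=2, padding=1)  # maxpool -> 75
# layer1 (conv2_x) uses stride 1, so the size stays 75
size = conv_out(size, kernel=3, stride=2, padding=1)  # layer2 (conv3_x), stride 2 in conv2 -> 38

size_modified = conv_out(size, kernel=3, stride=1, padding=1)  # layer3 with stride reset to 1 -> 38
size_original = conv_out(size, kernel=3, stride=2, padding=1)  # layer3 with its original stride 2 -> 19
print(size_modified, size_original)  # 38 19
```

With the original stride, conv4_x would output 19x19 instead of the 38x38 map that SSD uses as its first prediction feature map.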

2.3 Extra convolution layers + predictors + weight initialization (of the extra layers and the predictors)

```python
import torch
from torch import nn, Tensor
from typing import List

# dboxes300_coco, Loss and PostProcess are defined in sections 3-5 below;
# Encoder is part of the project but its code is not shown in this article.


class SSD300(nn.Module):
    def __init__(self, backbone=None, num_classes=21):
        super(SSD300, self).__init__()
        if backbone is None:
            raise Exception("backbone is None")
        if not hasattr(backbone, "out_channels"):
            raise Exception("the backbone has no attribute: out_channels")
        self.feature_extractor = backbone

        self.num_classes = num_classes
        # out_channels = [1024, 512, 512, 256, 256, 256] for resnet50
        # add the extra convolution layers that produce the remaining feature maps
        self._build_additional_features(self.feature_extractor.out_channels)
        # number of default boxes (anchors / priors) per cell on each prediction feature map
        self.num_defaults = [4, 6, 6, 6, 4, 4]
        location_extractors = []    # localization predictors
        confidence_extractors = []  # confidence (classification) predictors

        # out_channels = [1024, 512, 512, 256, 256, 256] for resnet50
        for nd, oc in zip(self.num_defaults, self.feature_extractor.out_channels):
            # nd is number_default_boxes, oc is output_channel
            location_extractors.append(nn.Conv2d(oc, nd * 4, kernel_size=3, padding=1))
            confidence_extractors.append(nn.Conv2d(oc, nd * self.num_classes, kernel_size=3, padding=1))

        self.loc = nn.ModuleList(location_extractors)
        self.conf = nn.ModuleList(confidence_extractors)
        self._init_weights()

        default_box = dboxes300_coco()
        self.compute_loss = Loss(default_box)        # loss
        self.encoder = Encoder(default_box)
        self.postprocess = PostProcess(default_box)  # post-processing

    def _init_weights(self):
        layers = [*self.additional_blocks, *self.loc, *self.conf]
        for layer in layers:
            for param in layer.parameters():
                if param.dim() > 1:
                    nn.init.xavier_uniform_(param)
```

2.4 Adding the extra convolution layers to obtain the remaining feature extractors

```python
    def _build_additional_features(self, input_size):
        """
        Add a series of extra convolution layers on top of the backbone (resnet50),
        producing the remaining prediction feature maps.
        :param input_size: output channels of each prediction feature map
        """
        additional_blocks = []
        # input_size = [1024, 512, 512, 256, 256, 256] for resnet50
        middle_channels = [256, 256, 128, 128, 128]  # channels of the first (1x1) conv in each extra block
        for i, (input_ch, output_ch, middle_ch) in enumerate(zip(input_size[:-1], input_size[1:], middle_channels)):
            # the first three extra blocks downsample (padding 1, stride 2); the last two use padding 0, stride 1
            padding, stride = (1, 2) if i < 3 else (0, 1)
            layer = nn.Sequential(
                nn.Conv2d(input_ch, middle_ch, kernel_size=1, bias=False),
                nn.BatchNorm2d(middle_ch),
                nn.ReLU(inplace=True),
                nn.Conv2d(middle_ch, output_ch, kernel_size=3, padding=padding, stride=stride, bias=False),
                nn.BatchNorm2d(output_ch),
                nn.ReLU(inplace=True),
            )
            additional_blocks.append(layer)
        self.additional_blocks = nn.ModuleList(additional_blocks)
```
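The spatial sizes of the extra feature maps follow from the same output-size formula. The sketch below replays the loop in `_build_additional_features` with plain integers (it defines a hypothetical `conv_out` helper so it runs on its own), starting from the 38x38 backbone output:

```python
def conv_out(size, kernel, stride, padding):
    """Output size of a conv layer: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


input_size = [1024, 512, 512, 256, 256, 256]
middle_channels = [256, 256, 128, 128, 128]

size = 38  # spatial size of the backbone output
sizes = [size]
for i, (input_ch, output_ch, middle_ch) in enumerate(zip(input_size[:-1], input_size[1:], middle_channels)):
    padding, stride = (1, 2) if i < 3 else (0, 1)
    size = conv_out(size, kernel=1, stride=1, padding=0)            # 1x1 conv keeps the size
    size = conv_out(size, kernel=3, stride=stride, padding=padding)  # 3x3 conv
    sizes.append(size)
    print(f"block {i}: {input_ch} -> {middle_ch} -> {output_ch} channels, {size}x{size}")

print(sizes)  # [38, 19, 10, 5, 3, 1]
```

The last two blocks use no padding, so a 3x3 kernel shrinks 5x5 to 3x3 and then 3x3 to 1x1 without any stride.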

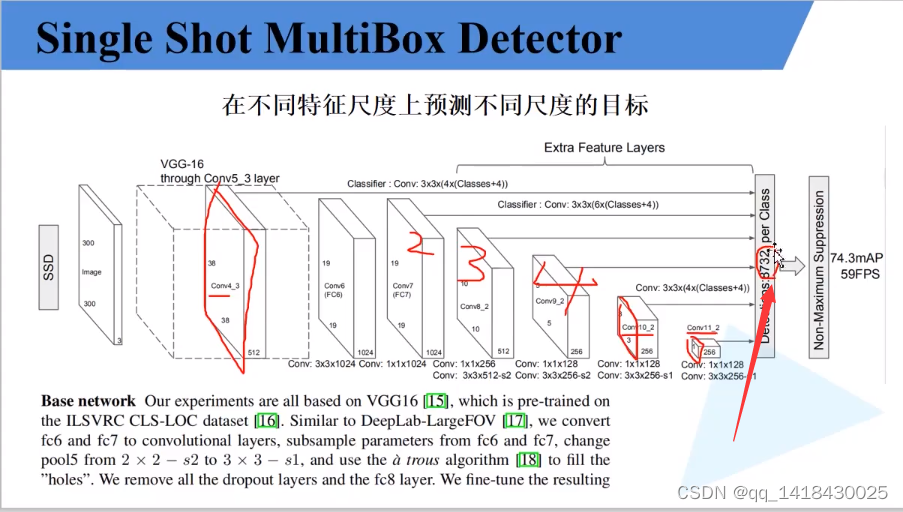

2.5 Forward pass (read the code alongside the network structure diagram)

```python
    def forward(self, image, targets=None):
        x = self.feature_extractor(image)  # conv4_x output: 38x38x1024

        # Feature Map 38x38x1024, 19x19x512, 10x10x512, 5x5x256, 3x3x256, 1x1x256
        detection_features = torch.jit.annotate(List[Tensor], [])  # list holding every prediction feature map
        detection_features.append(x)
        for layer in self.additional_blocks:  # iterate over the extra blocks
            x = layer(x)                      # feed the previous output into the current block
            detection_features.append(x)      # store the current block's output

        # Feature Map 38x38x4, 19x19x6, 10x10x6, 5x5x6, 3x3x4, 1x1x4
        # locs: box regression parameters; confs: class scores
        locs, confs = self.bbox_view(detection_features, self.loc, self.conf)

        # For SSD 300, shall return nbatch x 8732 x {nlabels, nlocs} results
        # 38x38x4 + 19x19x6 + 10x10x6 + 5x5x6 + 3x3x4 + 1x1x4 = 8732

        if self.training:  # training mode: compute the loss
            if targets is None:
                raise ValueError("In training mode, targets should be passed")
            # bboxes_out (Tensor 8732 x 4), labels_out (Tensor 8732)
            bboxes_out = targets['boxes']
            bboxes_out = bboxes_out.transpose(1, 2).contiguous()
            # print(bboxes_out.is_contiguous())
            labels_out = targets['labels']
            # print(labels_out.is_contiguous())

            # ploc, plabel, gloc, glabel
            loss = self.compute_loss(locs, confs, bboxes_out, labels_out)
            return {"total_losses": loss}

        # Apply the predicted regression parameters to the default boxes to get the final boxes,
        # then run non-maximum suppression to remove overlapping boxes
        # results = self.encoder.decode_batch(locs, confs)
        results = self.postprocess(locs, confs)  # inference mode: post-process to get the outputs
        return results
```
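The 8732 in the comments is the number of cells on each prediction feature map times the number of default boxes per cell, summed over the six maps. A quick plain-Python check using the `num_defaults` values from `__init__`:

```python
feat_sizes = [38, 19, 10, 5, 3, 1]  # spatial size of each prediction feature map
num_defaults = [4, 6, 6, 6, 4, 4]   # default boxes per cell on each map

per_layer = [s * s * n for s, n in zip(feat_sizes, num_defaults)]
total = sum(per_layer)
print(per_layer)  # [5776, 2166, 600, 150, 36, 4]
print(total)      # 8732
```

So `locs` ends up with shape [N, 4, 8732] and `confs` with shape [N, num_classes, 8732].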

2.6 Reshaping the two predictor outputs (location regression parameters and confidences)

```python
    # Shape the classifier to the view of bboxes
    def bbox_view(self, features, loc_extractor, conf_extractor):
        locs = []
        confs = []
        # f: one prediction feature map; l / c: its location / confidence predictor
        for f, l, c in zip(features, loc_extractor, conf_extractor):
            # [batch, n*4, feat_size(h), feat_size(w)] -> [batch, 4, -1]
            # -1 is inferred as n x feat_size x feat_size, i.e. the number of default boxes on this map
            locs.append(l(f).view(f.size(0), 4, -1))
            # [batch, n*classes, feat_size(h), feat_size(w)] -> [batch, classes, -1]
            confs.append(c(f).view(f.size(0), self.num_classes, -1))

        # concatenate along the last (-1) dimension
        locs, confs = torch.cat(locs, 2).contiguous(), torch.cat(confs, 2).contiguous()
        return locs, confs
```
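Why does `view(f.size(0), 4, -1)` give a sensible layout? On a contiguous tensor, `view` only reinterprets the row-major memory order. The plain-Python sketch below (the function `view_index` is hypothetical) reproduces that index arithmetic for one image: channel `c` of the `n*4`-channel location output becomes coordinate `c // n` of box type `c % n`, and its column is `box_type * H * W + h * W + w`.

```python
def view_index(c, h, w, n, H, W):
    """Map element (c, h, w) of a contiguous [rows * n, H, W] predictor output
    (batch dimension omitted) to its (row, column) position after .view(rows, -1)."""
    flat = c * H * W + h * W + w    # row-major offset in the contiguous layout
    return divmod(flat, n * H * W)  # each row of the view holds n * H * W elements


n, H, W = 4, 38, 38  # first prediction feature map: 4 boxes per cell, 38x38 cells
row, col = view_index(c=5, h=2, w=3, n=n, H=H, W=W)
# channel 5 -> coordinate 5 // 4 = 1, box type 5 % 4 = 1
# column = box_type * H * W + h * W + w = 1 * 1444 + 2 * 38 + 3
print(row, col)  # 1 1523
```

As far as I can tell from the code, this box-type-first, then row, then column order matches the order in which `DefaultBoxes` (section 3.1) appends boxes (outer loop over box sizes, inner loop over cells), so column k of `locs` lines up with default box k. The confidence output follows the same arithmetic with `num_classes` in place of 4.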

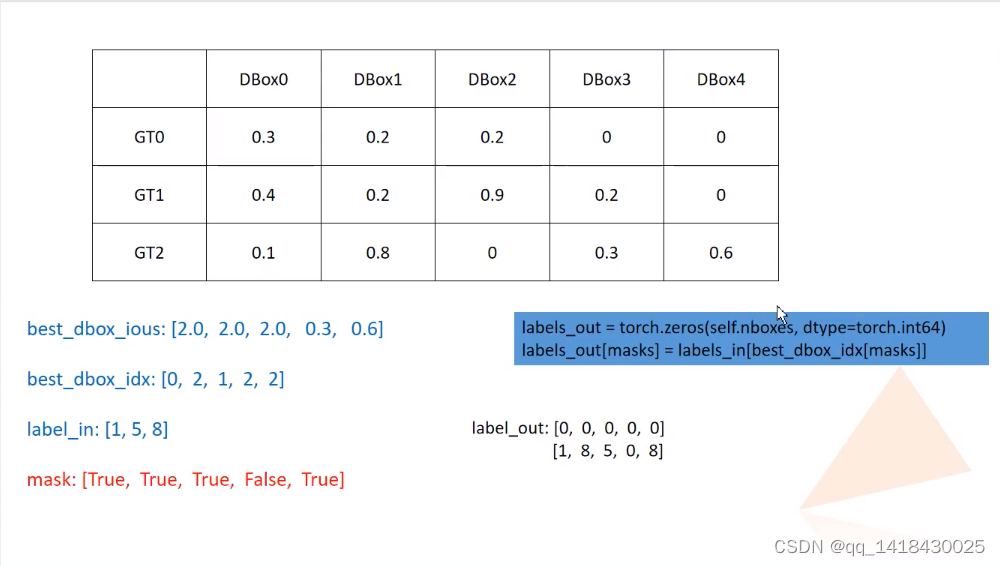

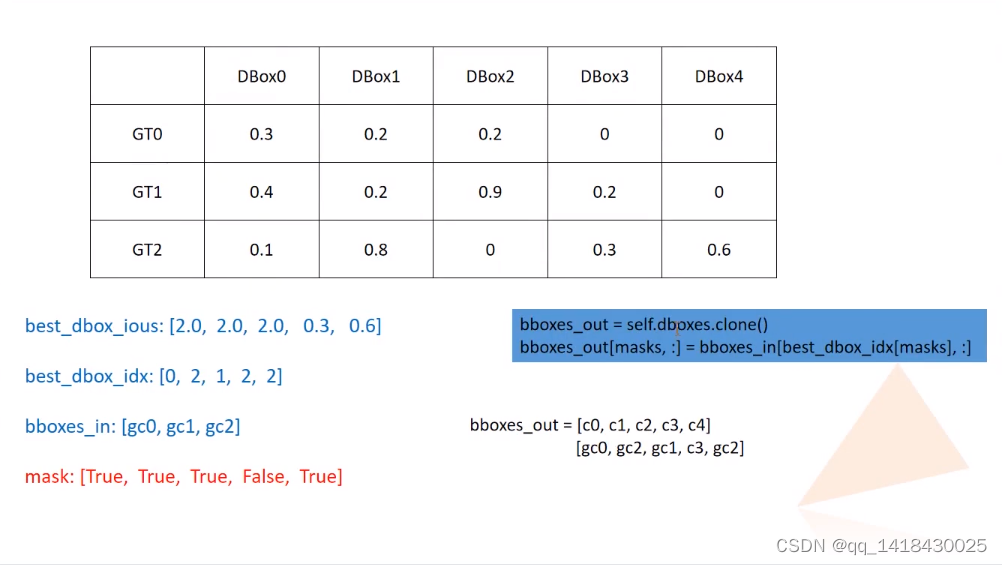

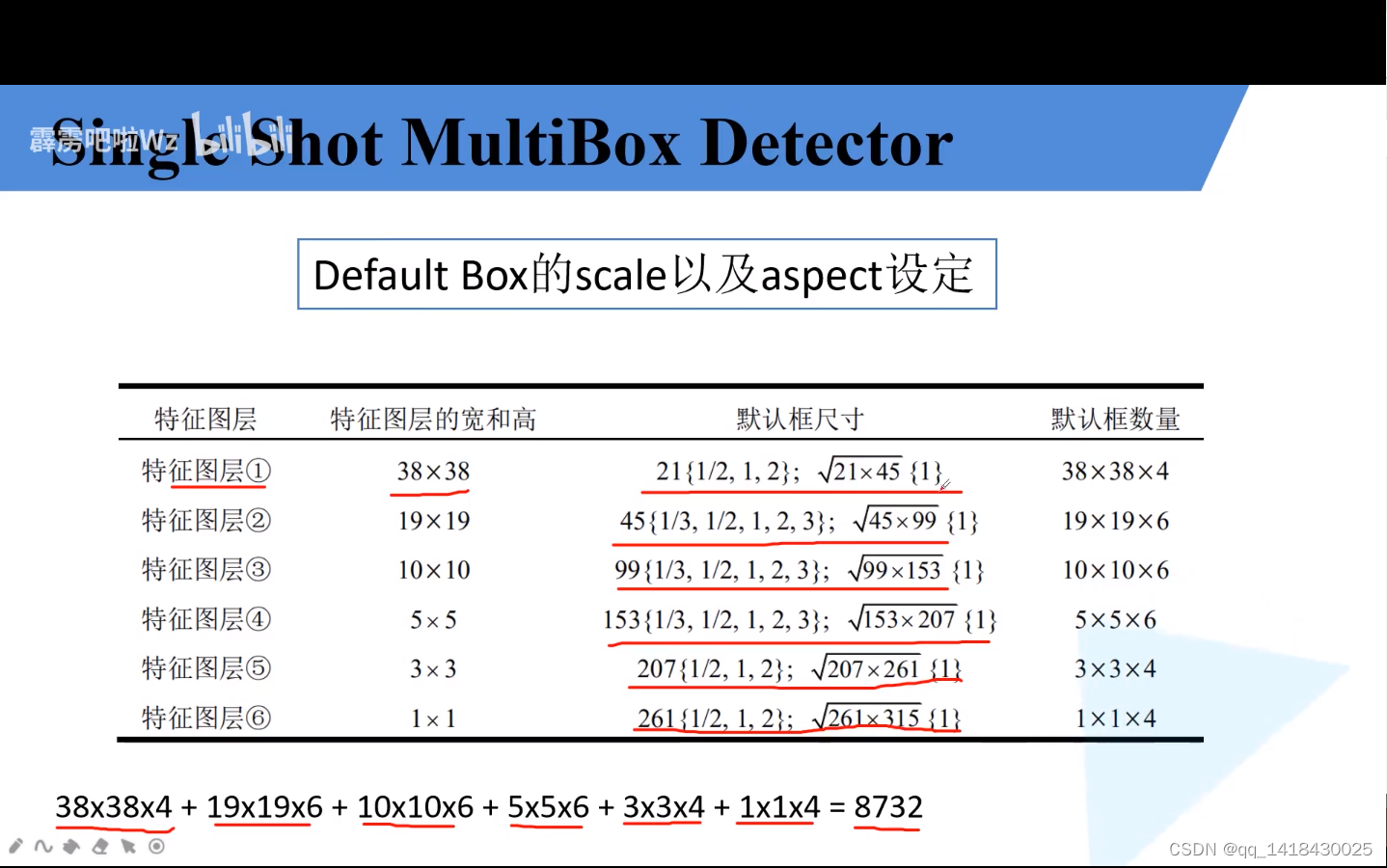

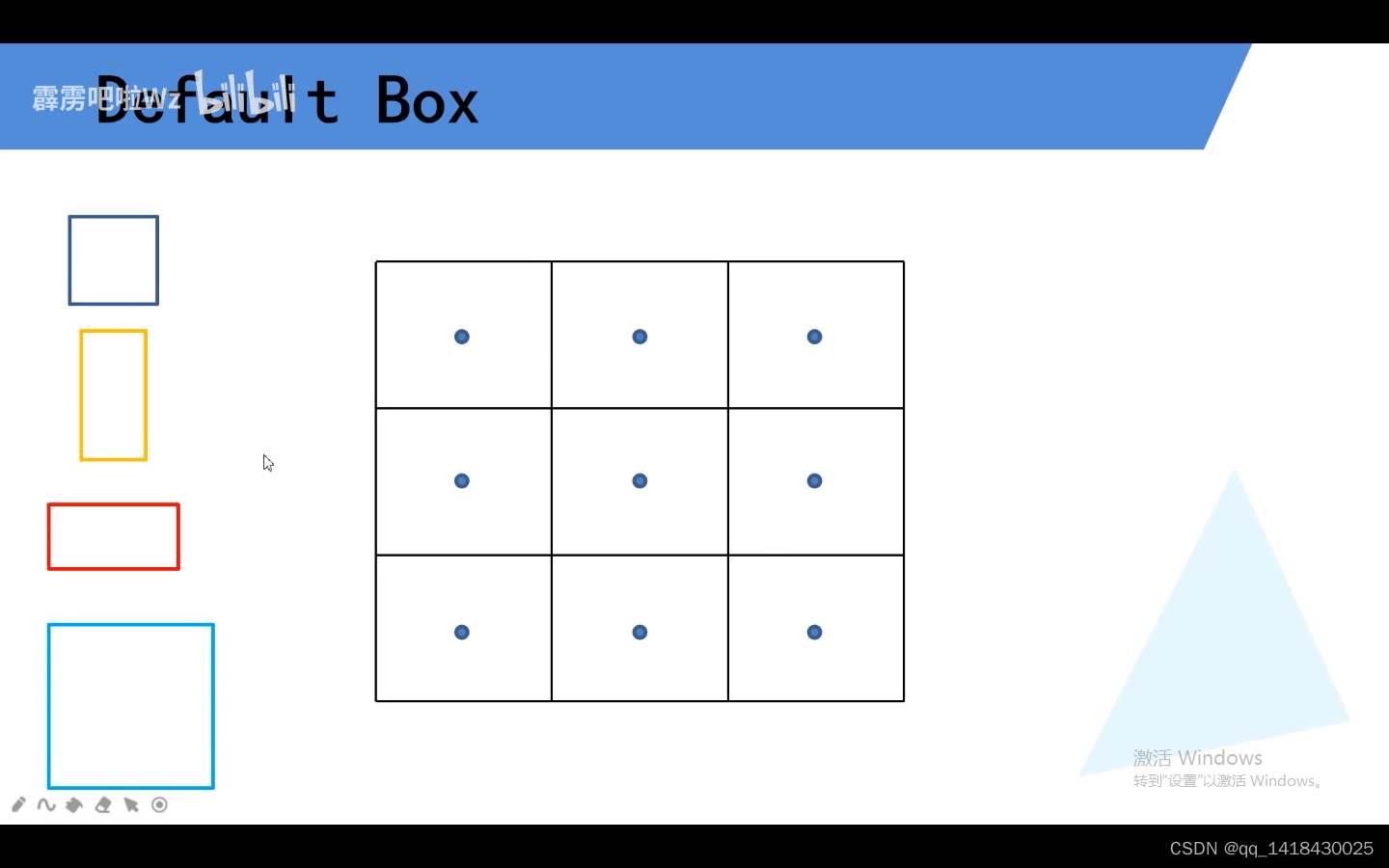

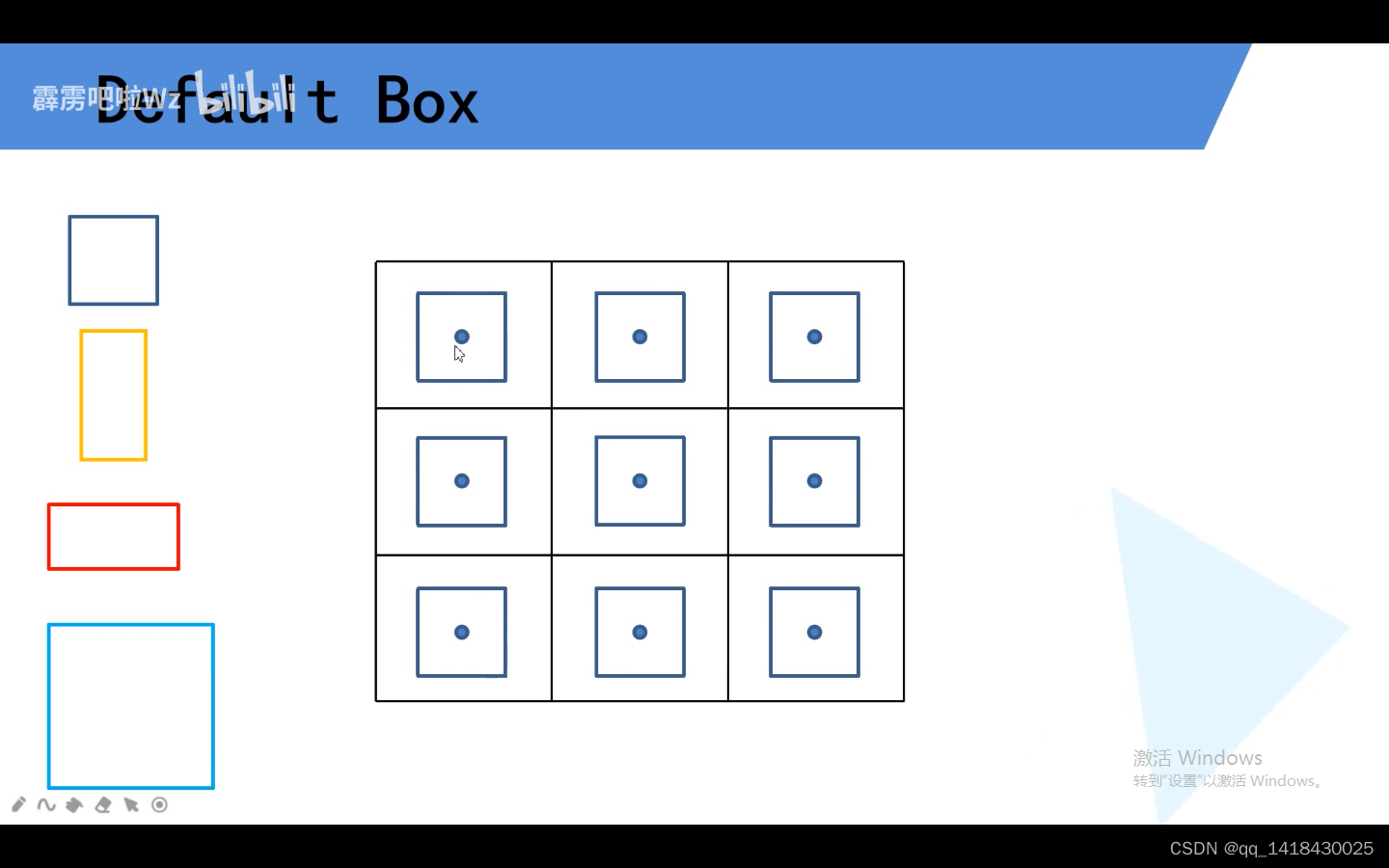

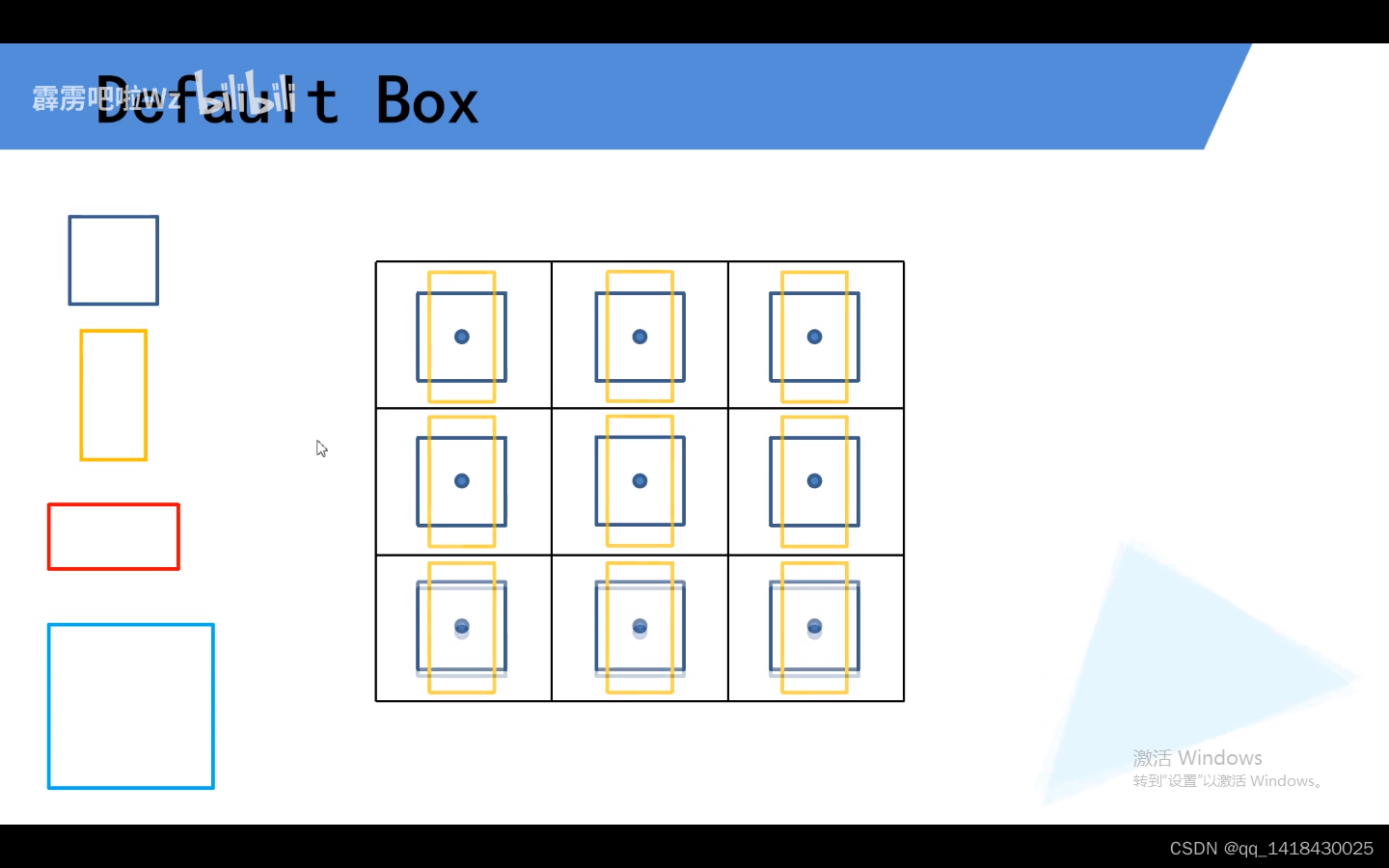

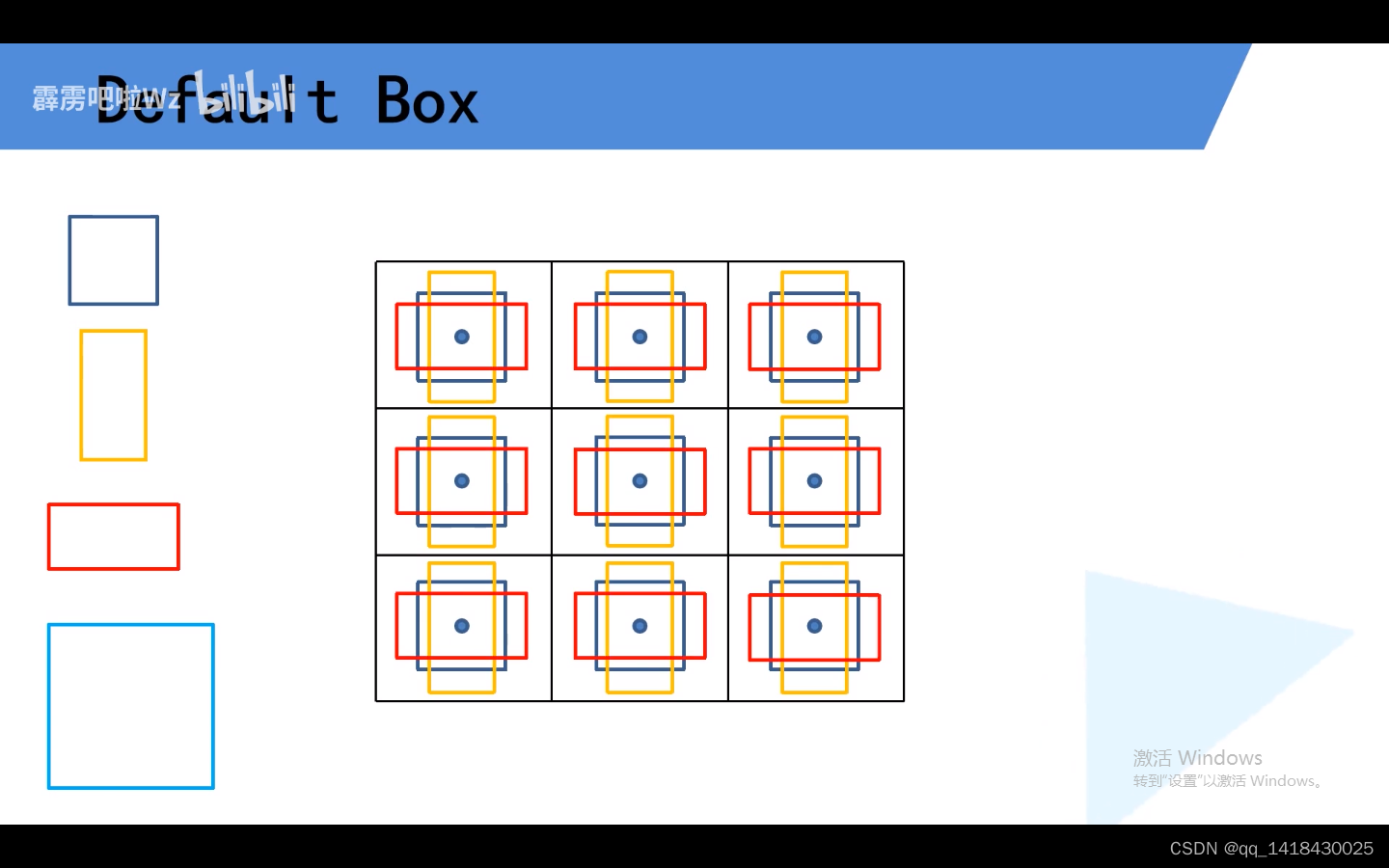

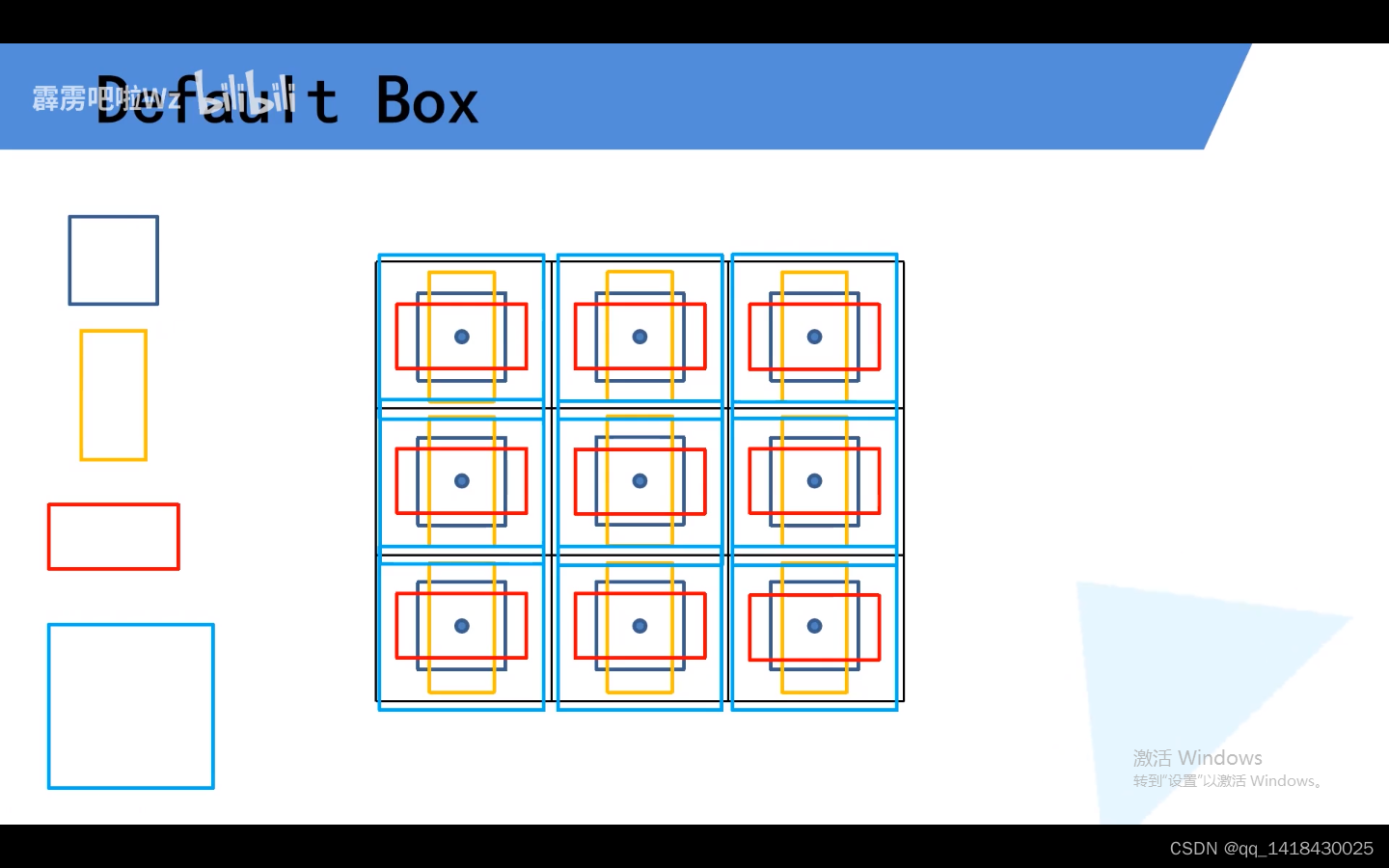

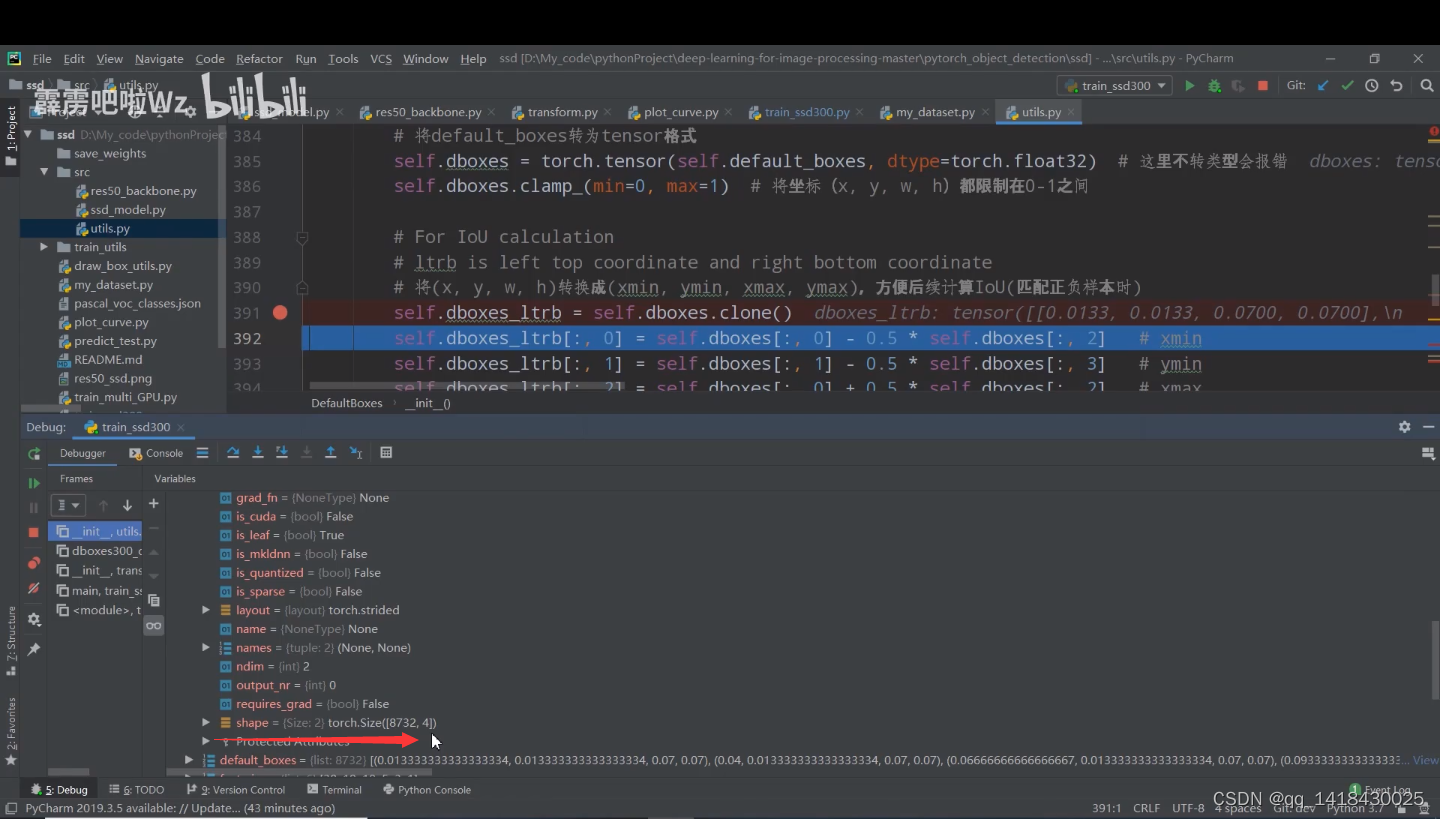

3. Default box generation

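3.1 The DefaultBoxes module in utils.py

SSD has 6 prediction feature maps, and each one uses two scales. Aspect ratios: the first feature map and the last two use 1:1, 2:1 and 1:2; the remaining three additionally use 3:1 and 1:3. On each feature map, the first scale s_k gets a box for every aspect ratio of that map (1:1, 2:1, 1:2, plus 3:1 and 1:3 where used), while the second scale, sqrt(s_k * s_(k+1)) (the geometric mean of this map's scale and the next map's scale), gets only a 1:1 box.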
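Following that description, each cell gets 2 + 2 * len(aspect_ratios[k]) boxes. The plain-Python sketch below re-derives the (w, h) pairs with the same formulas as `DefaultBoxes` (the helper `box_sizes` is hypothetical); the counts reproduce `num_defaults = [4, 6, 6, 6, 4, 4]` from section 2.3:

```python
from math import sqrt

fig_size = 300
scales = [21, 45, 99, 153, 207, 261, 315]
aspect_ratios = [[2], [2, 3], [2, 3], [2, 3], [2], [2]]


def box_sizes(idx):
    """(w, h) pairs of the default boxes on feature map idx, relative to the image size."""
    sk1 = scales[idx] / fig_size
    sk2 = scales[idx + 1] / fig_size
    sk3 = sqrt(sk1 * sk2)                # extra 1:1 box at scale sqrt(s_k * s_(k+1))
    sizes = [(sk1, sk1), (sk3, sk3)]
    for alpha in aspect_ratios[idx]:
        w, h = sk1 * sqrt(alpha), sk1 / sqrt(alpha)
        sizes += [(w, h), (h, w)]        # alpha:1 and 1:alpha
    return sizes


boxes_per_cell = [len(box_sizes(i)) for i in range(6)]
print(boxes_per_cell)  # [4, 6, 6, 6, 4, 4]
for w, h in box_sizes(0):
    print(f"w={w:.4f}, h={h:.4f}")
```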

class DefaultBoxes(object):

def __init__(self, fig_size, feat_size, steps, scales, aspect_ratios, scale_xy=0.1, scale_wh=0.2):

self.fig_size = fig_size # 输入网络的图像大小 300

# [38, 19, 10, 5, 3, 1]

self.feat_size = feat_size # 每个预测层的feature map尺寸(根据网络结构图看)

self.scale_xy_ = scale_xy#损失的一个参数

self.scale_wh_ = scale_wh

# According to https://github.com/weiliu89/caffe

# Calculation method slightly different from paper

# [8, 16, 32, 64, 100, 300]

self.steps = steps # 每个特征层上的一个cell在原图上的跨度(理论上是等于fig_size/feat_size)

# [21, 45, 99, 153, 207, 261, 315]

self.scales = scales # 每个特征层上预测的default box的scale

fk = fig_size / np.array(steps) # 计算每层特征层的fk(原论文说是feat_size,即每个预测层的尺寸)

# [[2], [2, 3], [2, 3], [2, 3], [2], [2]]

self.aspect_ratios = aspect_ratios # 每个预测特征层上预测的default box的ratios

self.default_boxes = []

# size of feature and number of feature

# 遍历每层特征层,计算default box

for idx, sfeat in enumerate(self.feat_size):

sk1 = scales[idx] / fig_size # scale转为相对值[0-1]

sk2 = scales[idx + 1] / fig_size # scale转为相对值[0-1]

sk3 = sqrt(sk1 * sk2)

# 先添加两个1:1比例的default box宽和高

all_sizes = [(sk1, sk1), (sk3, sk3)]

# 再将剩下不同比例的default box宽和高添加到all_sizes中

for alpha in aspect_ratios[idx]:

w, h = sk1 * sqrt(alpha), sk1 / sqrt(alpha)

all_sizes.append((w, h))#2:1

all_sizes.append((h, w))#1:2

# 计算当前特征层对应原图上的所有default box

for w, h in all_sizes:

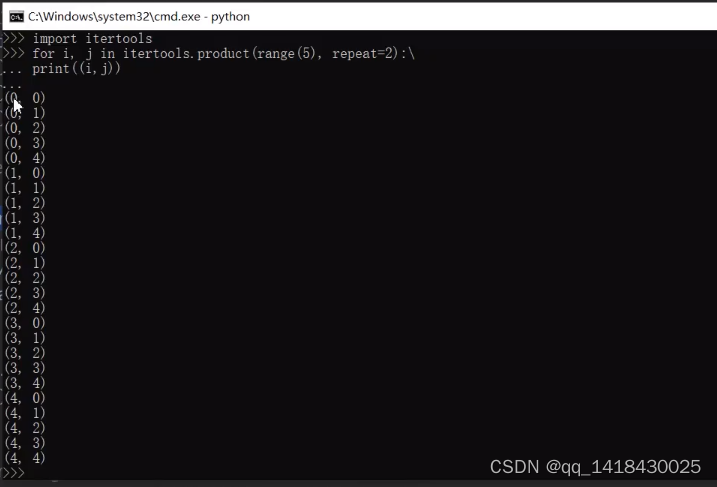

for i, j in itertools.product(range(sfeat), repeat=2): # i -> 行(y), j -> 列(x) 遍历所有cell

# 计算每个default box的中心坐标(范围是在0-1之间)

cx, cy = (j + 0.5) / fk[idx], (i + 0.5) / fk[idx]#转为相对值[0-1]

self.default_boxes.append((cx, cy, w, h))#坐标信息

# 将default_boxes转为tensor格式

self.dboxes = torch.as_tensor(self.default_boxes, dtype=torch.float32) # 这里不转类型会报错

self.dboxes.clamp_(min=0, max=1) # 裁剪,将坐标(x, y, w, h)都限制在0-1之间

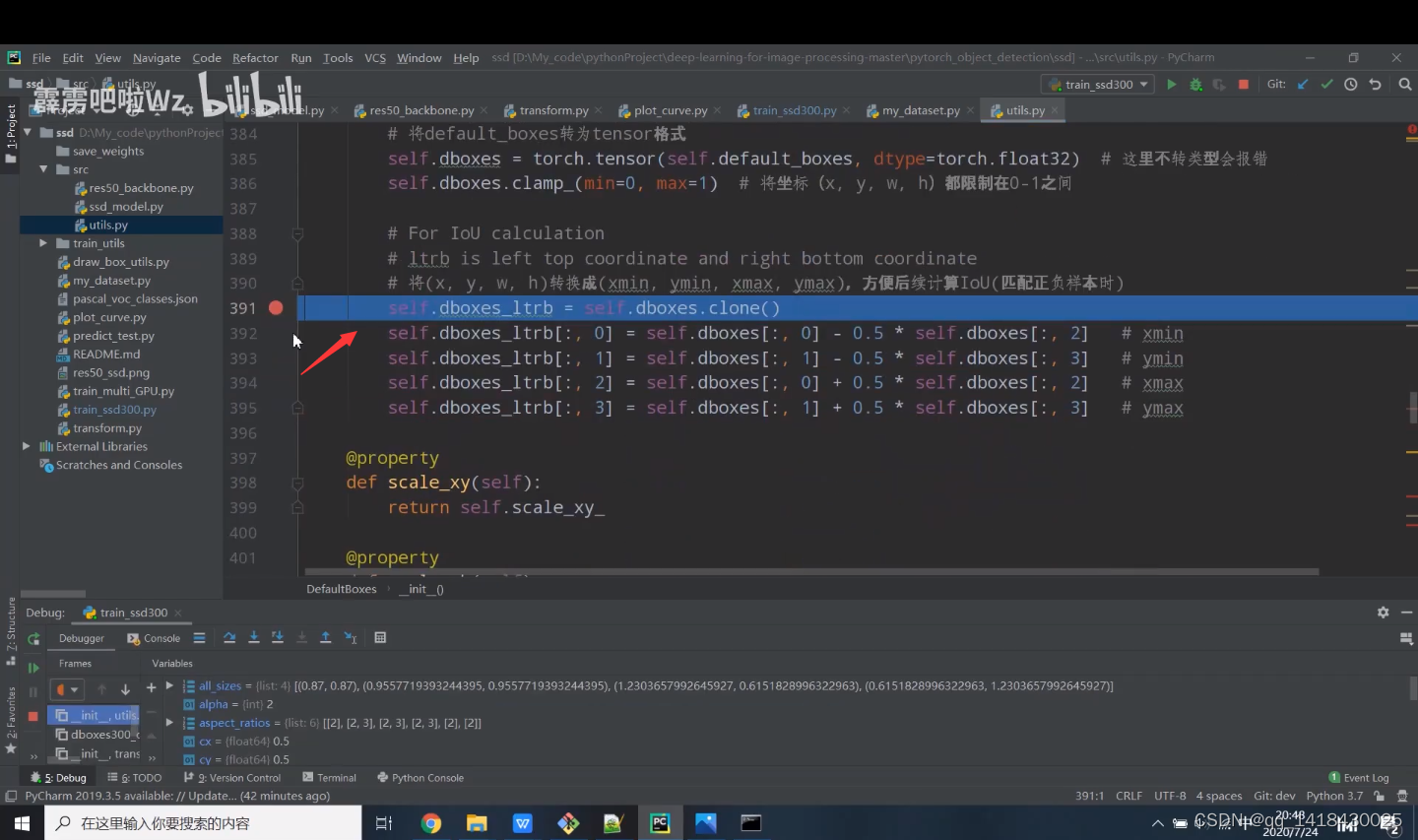

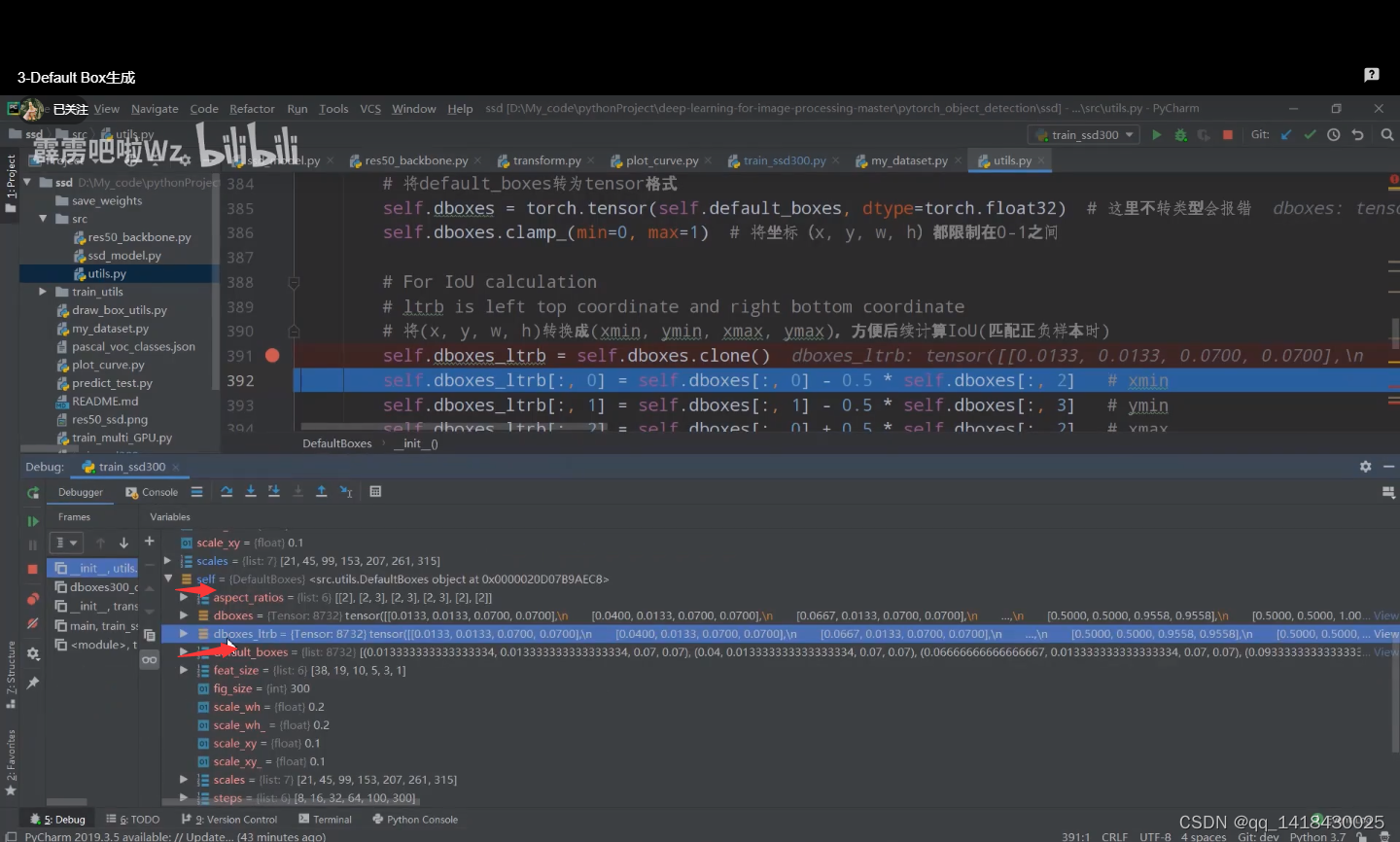

# For IoU calculation

# ltrb is left top coordinate and right bottom coordinate

# 将(x, y, w, h)转换成(xmin, ymin, xmax, ymax),方便后续计算IoU(匹配正负样本时)

self.dboxes_ltrb = self.dboxes.clone()

self.dboxes_ltrb[:, 0] = self.dboxes[:, 0] - 0.5 * self.dboxes[:, 2] # xmin

self.dboxes_ltrb[:, 1] = self.dboxes[:, 1] - 0.5 * self.dboxes[:, 3] # ymin

self.dboxes_ltrb[:, 2] = self.dboxes[:, 0] + 0.5 * self.dboxes[:, 2] # xmax

self.dboxes_ltrb[:, 3] = self.dboxes[:, 1] + 0.5 * self.dboxes[:, 3] # ymax

@property

def scale_xy(self):

return self.scale_xy_

@property

def scale_wh(self):

return self.scale_wh_

def __call__(self, order='ltrb'):

# 根据需求返回对应格式的default box

if order == 'ltrb':

return self.dboxes_ltrb

if order == 'xywh':

return self.dboxes

def dboxes300_coco():

figsize = 300 # 输入网络的图像大小

feat_size = [38, 19, 10, 5, 3, 1] # 每个预测层的feature map尺寸

steps = [8, 16, 32, 64, 100, 300] # 每个特征层上的一个cell在原图上的跨度

# use the scales here: https://github.com/amdegroot/ssd.pytorch/blob/master/data/config.py

scales = [21, 45, 99, 153, 207, 261, 315] # 每个特征层上预测的default box的scale

aspect_ratios = [[2], [2, 3], [2, 3], [2, 3], [2], [2]] # 每个预测特征层上预测的default box的ratios

dboxes = DefaultBoxes(figsize, feat_size, steps, scales, aspect_ratios)

return dboxes

3.2 Coordinates (two formats are produced: (x, y, w, h) and (xmin, ymin, xmax, ymax))

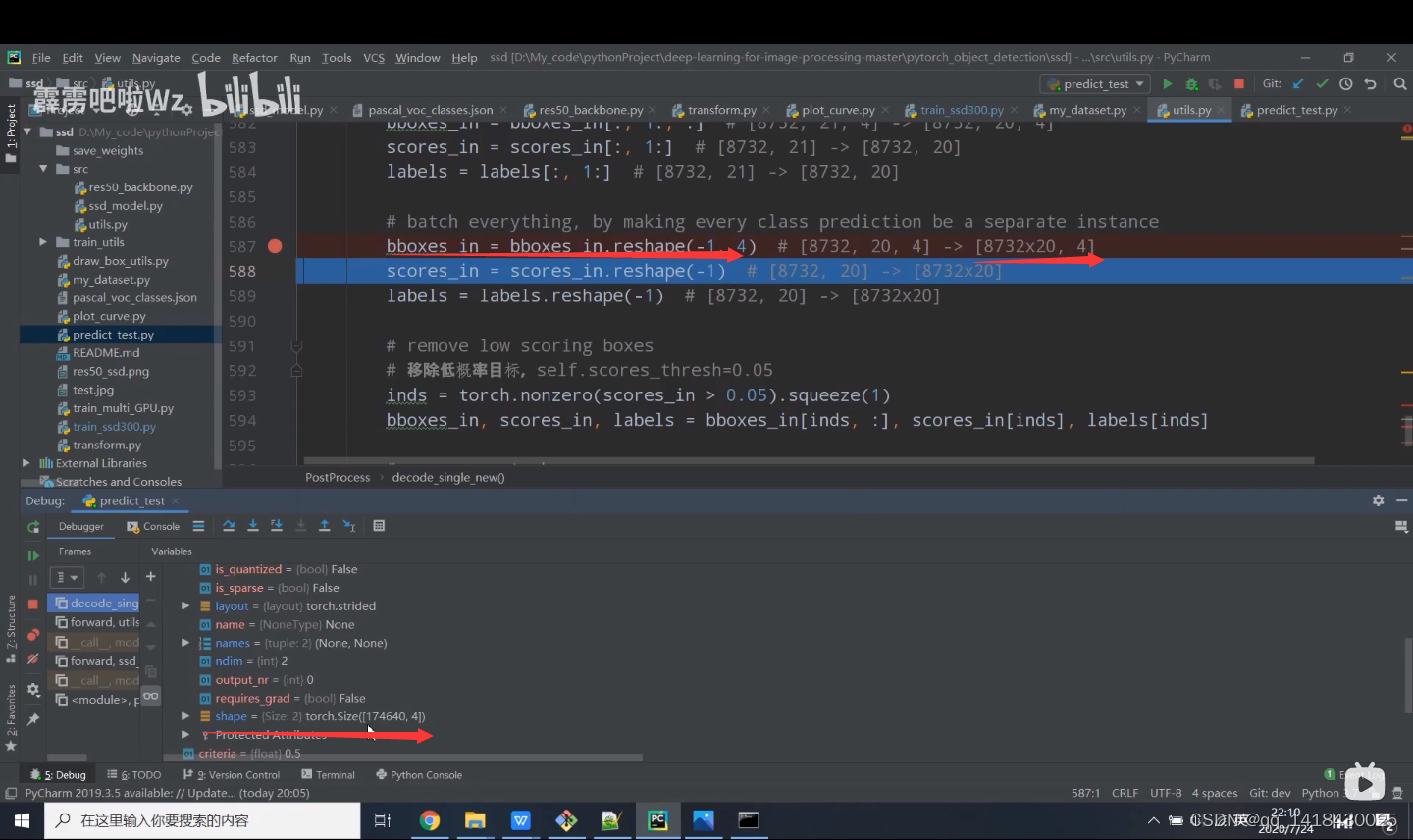
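The conversion between the two formats is the same arithmetic used for `dboxes_ltrb` above and in `scale_back_batch` later. A plain-Python sketch of both directions (the function names are hypothetical):

```python
def xywh_to_ltrb(box):
    """(cx, cy, w, h) -> (xmin, ymin, xmax, ymax)"""
    cx, cy, w, h = box
    return (cx - 0.5 * w, cy - 0.5 * h, cx + 0.5 * w, cy + 0.5 * h)


def ltrb_to_xywh(box):
    """(xmin, ymin, xmax, ymax) -> (cx, cy, w, h)"""
    xmin, ymin, xmax, ymax = box
    return ((xmin + xmax) / 2, (ymin + ymax) / 2, xmax - xmin, ymax - ymin)


box = (0.5, 0.5, 0.2, 0.4)
ltrb = xywh_to_ltrb(box)
print(ltrb)                # approximately (0.4, 0.3, 0.6, 0.7)
print(ltrb_to_xywh(ltrb))  # approximately (0.5, 0.5, 0.2, 0.4)
```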

3.3 For code that is hard to follow, step through it in a debugger and watch how the variables change

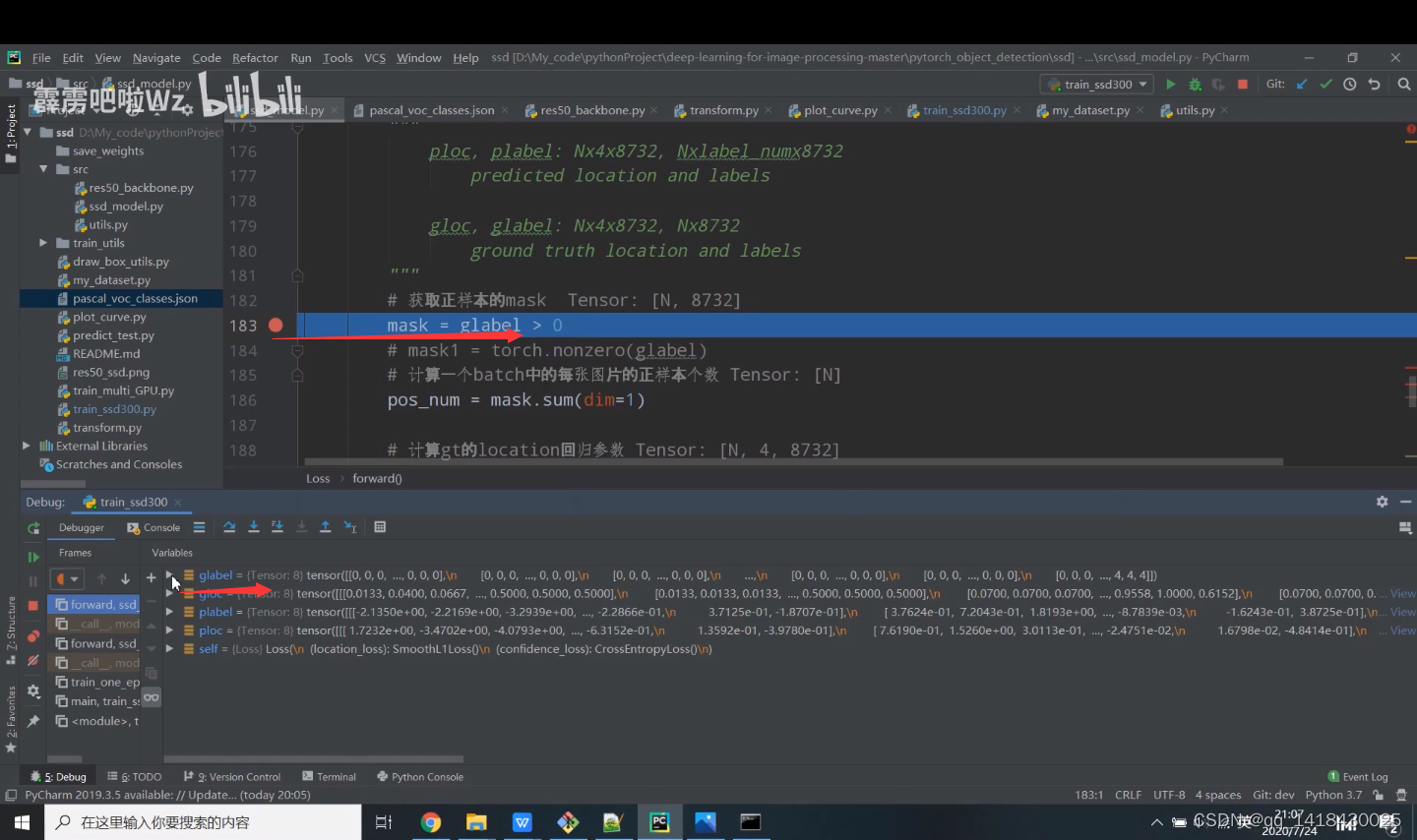

4. Computing the loss

```python
import torch
from torch import nn, Tensor


class Loss(nn.Module):
    """
    Implements the loss as the sum of the following:
        1. Confidence Loss: All labels, with hard negative mining
        2. Localization Loss: Only on positive labels
    Suppose input dboxes has the shape 8732x4
    """
    def __init__(self, dboxes):
        super(Loss, self).__init__()
        # The two factors come from the following link
        # http://jany.st/post/2017-11-05-single-shot-detector-ssd-from-scratch-in-tensorflow.html
        self.scale_xy = 1.0 / dboxes.scale_xy  # 10
        self.scale_wh = 1.0 / dboxes.scale_wh  # 5

        self.location_loss = nn.SmoothL1Loss(reduction='none')
        # [num_anchors, 4] -> [4, num_anchors] -> [1, 4, num_anchors]
        # swap dims 0 and 1, then add a new leading dimension of size 1
        self.dboxes = nn.Parameter(dboxes(order="xywh").transpose(0, 1).unsqueeze(dim=0),
                                   requires_grad=False)

        self.confidence_loss = nn.CrossEntropyLoss(reduction='none')

    def _location_vec(self, loc):
        # type: (Tensor) -> Tensor
        """
        Generate Location Vectors
        Compute the regression targets of the ground truth relative to the anchors
        :param loc: GT boxes matched to each anchor, Nx4x8732
        :return: regression targets, Nx4x8732
        """
        gxy = self.scale_xy * (loc[:, :2, :] - self.dboxes[:, :2, :]) / self.dboxes[:, 2:, :]  # Nx2x8732 (scale factor meant to speed up convergence)
        gwh = self.scale_wh * (loc[:, 2:, :] / self.dboxes[:, 2:, :]).log()  # Nx2x8732 (scale factor meant to speed up convergence)
        return torch.cat((gxy, gwh), dim=1).contiguous()

    def forward(self, ploc, plabel, gloc, glabel):
        # type: (Tensor, Tensor, Tensor, Tensor) -> Tensor
        """
            ploc, plabel: Nx4x8732, Nxlabel_numx8732
                predicted location and labels

            gloc, glabel: Nx4x8732, Nx8732
                ground truth location and labels
        """
        # Positive-sample mask, Tensor [N, 8732]. During preprocessing every anchor was matched
        # to a GT box: gloc holds the matched GT coordinates and glabel the matched labels
        mask = torch.gt(glabel, 0)  # (gt: >) labels > 0 are positives; class indices start at 1
        # mask1 = torch.nonzero(glabel)
        # number of positives in each image of the batch, Tensor [N]
        pos_num = mask.sum(dim=1)  # e.g. tensor([8, 17, 18, 15, 10, 11, 10, 13])

        # regression targets of the GT boxes, Tensor [N, 4, 8732]
        vec_gd = self._location_vec(gloc)

        # sum on four coordinates, and mask
        # localization loss (positives only)
        loc_loss = self.location_loss(ploc, vec_gd).sum(dim=1)  # Tensor: [N, 8732]
        loc_loss = (mask.float() * loc_loss).sum(dim=1)  # Tensor: [N]

        # hard negative mining Tensor: [N, 8732]
        con = self.confidence_loss(plabel, glabel)

        # positives will never be selected
        # collect the negatives
        con_neg = con.clone()
        con_neg[mask] = 0.0  # zero out the positives
        # sort confidence_loss in descending order, con_idx (Tensor: [N, 8732])
        _, con_idx = con_neg.sort(dim=1, descending=True)
        _, con_rank = con_idx.sort(dim=1)  # clever step: gives each anchor's rank in the descending order

        # number of negative three times positive
        # the number of negatives used in the loss is 3x the positives (Hard negative mining in the paper),
        # but it cannot exceed the total number of samples, 8732
        neg_num = torch.clamp(3 * pos_num, max=mask.size(1)).unsqueeze(-1)
        neg_mask = torch.lt(con_rank, neg_num)  # (lt: <) Tensor [N, 8732]

        # final confidence loss = loss of the selected positives + loss of the selected negatives
        con_loss = (con * (mask.float() + neg_mask.float())).sum(dim=1)  # Tensor [N]

        # avoid no object detected
        # handle images that contain no GT box
        total_loss = loc_loss + con_loss
        # eg. [15, 3, 5, 0] -> [1.0, 1.0, 1.0, 0.0]
        num_mask = torch.gt(pos_num, 0).float()  # whether each image in the batch has any positive
        pos_num = pos_num.float().clamp(min=1e-6)  # avoid division by zero
        ret = (total_loss * num_mask / pos_num).mean(dim=0)  # only images with positives contribute a loss
        return ret
```

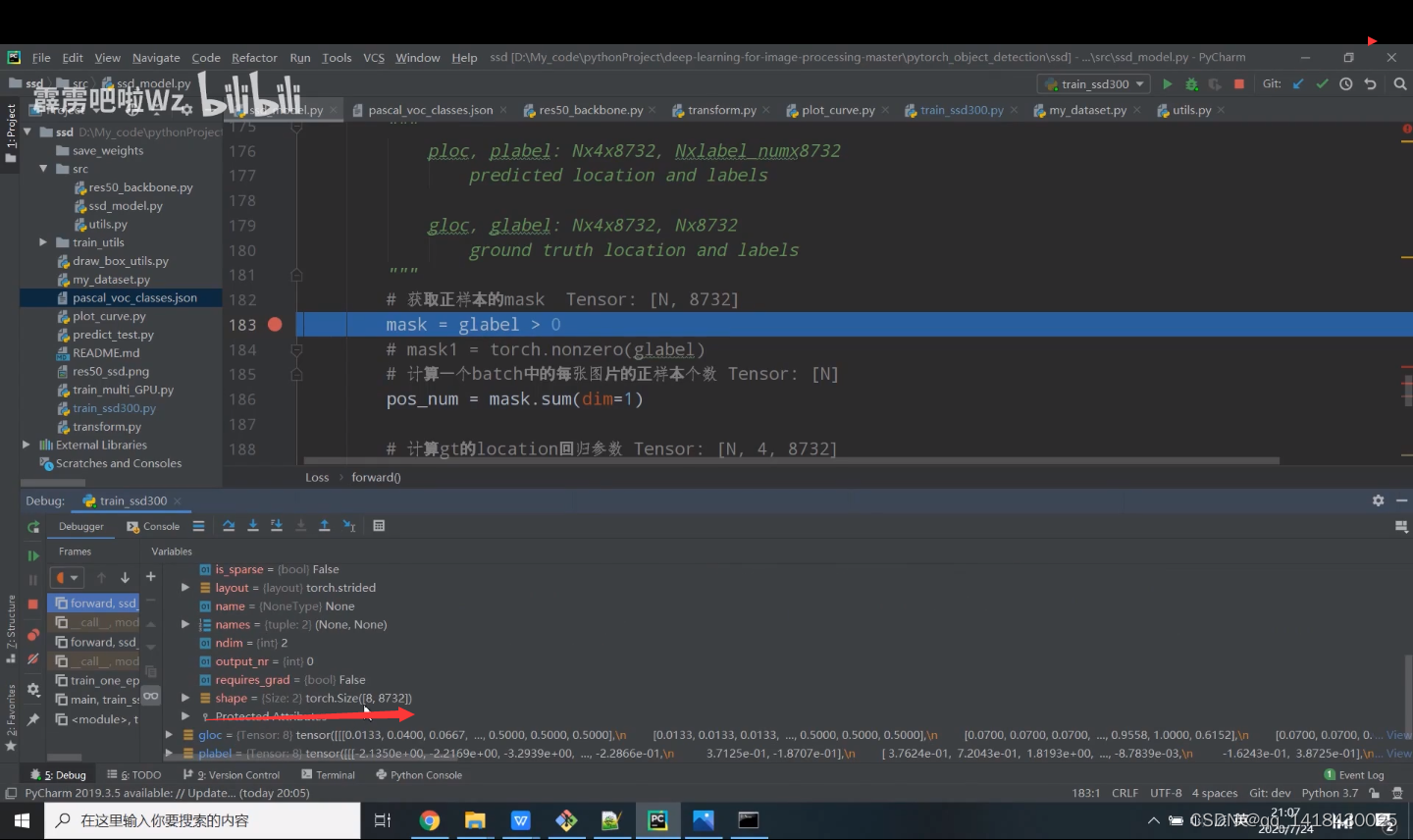

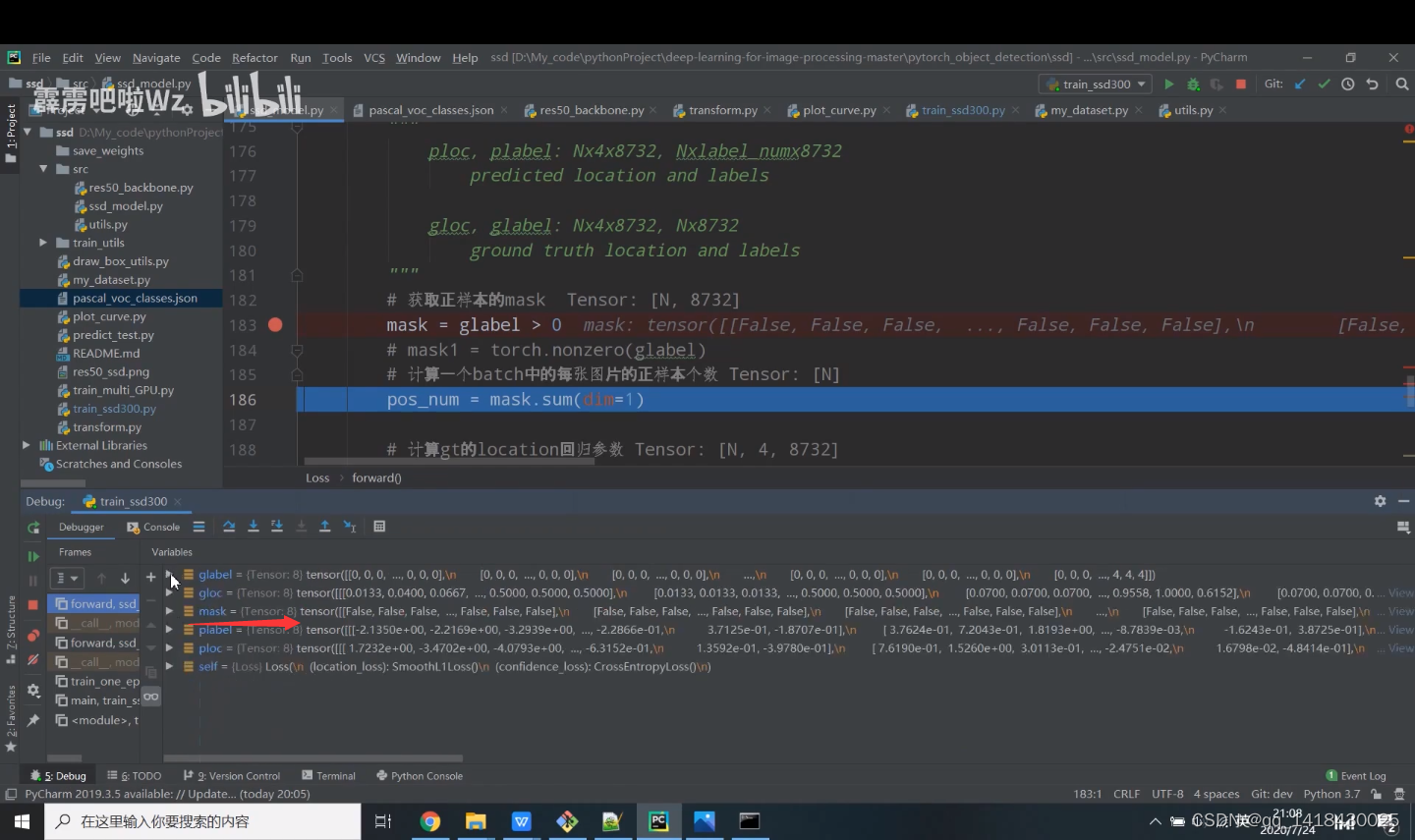

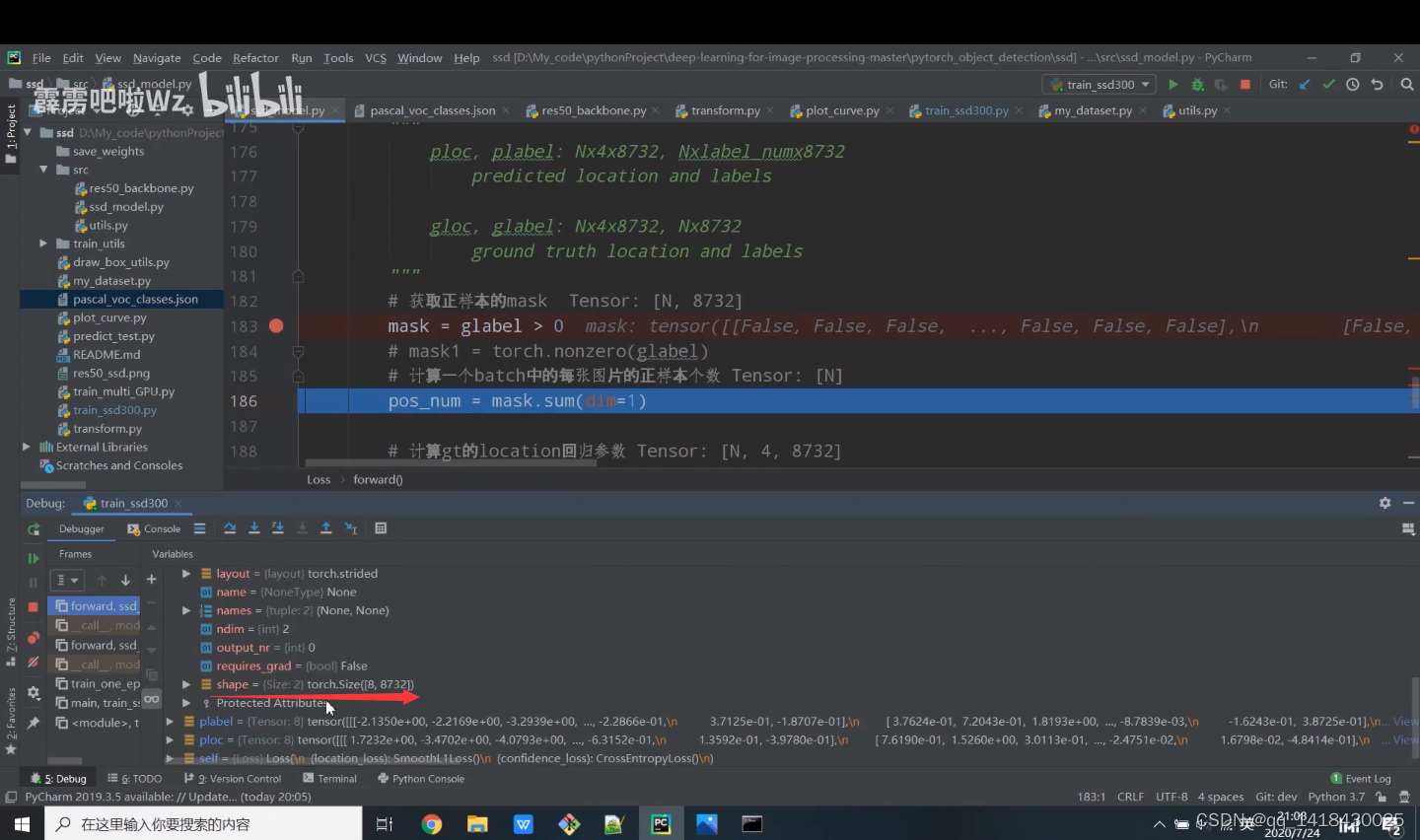

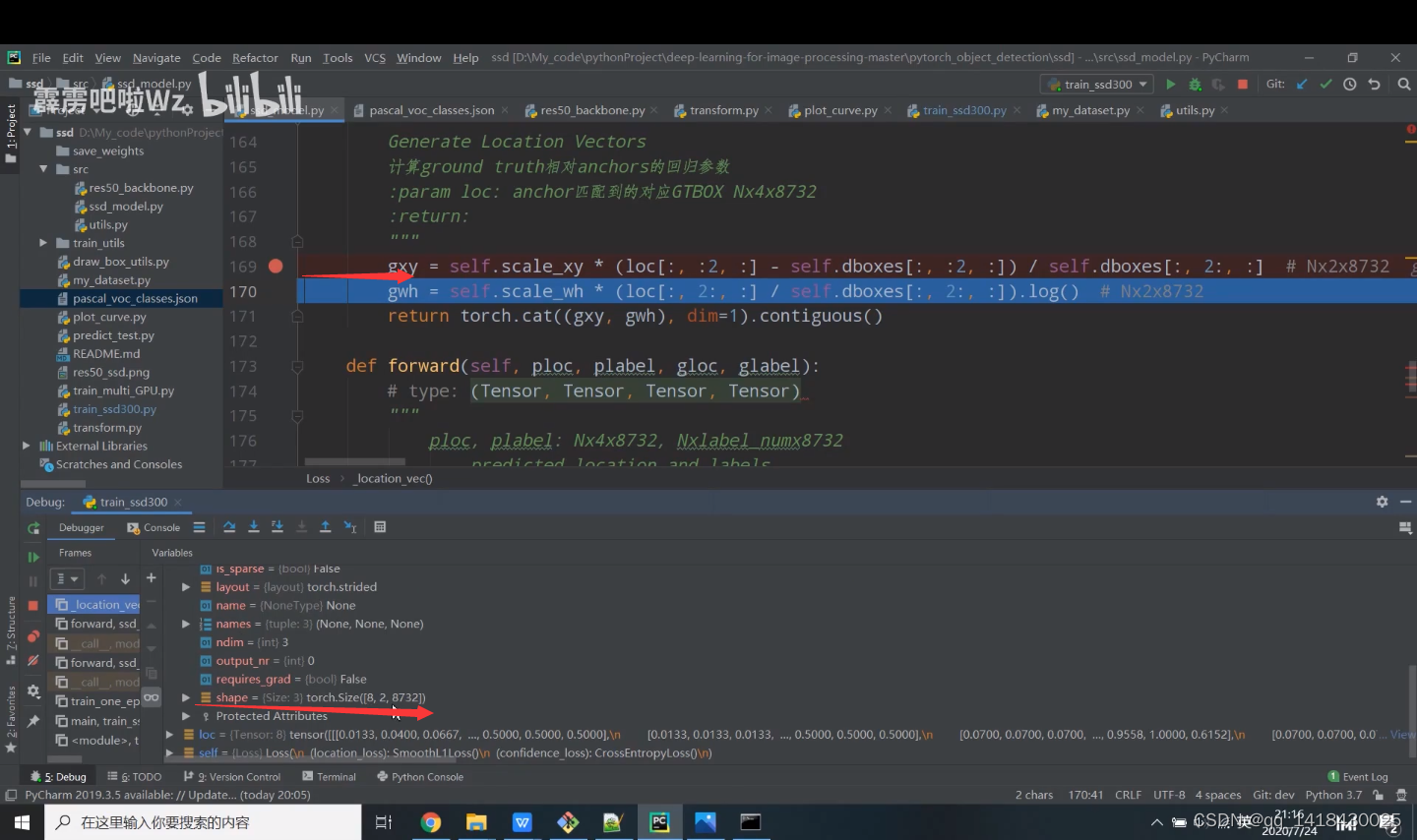

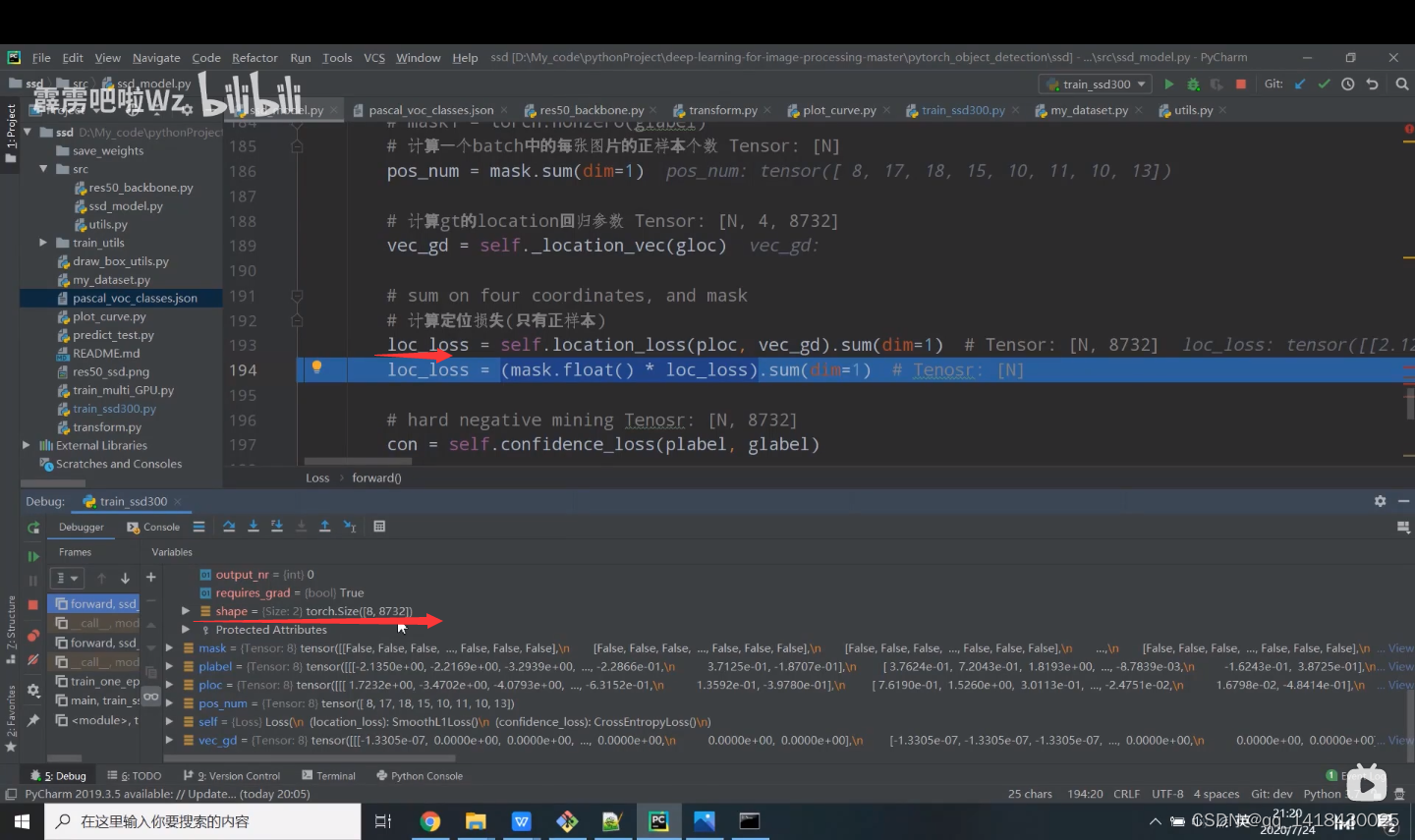

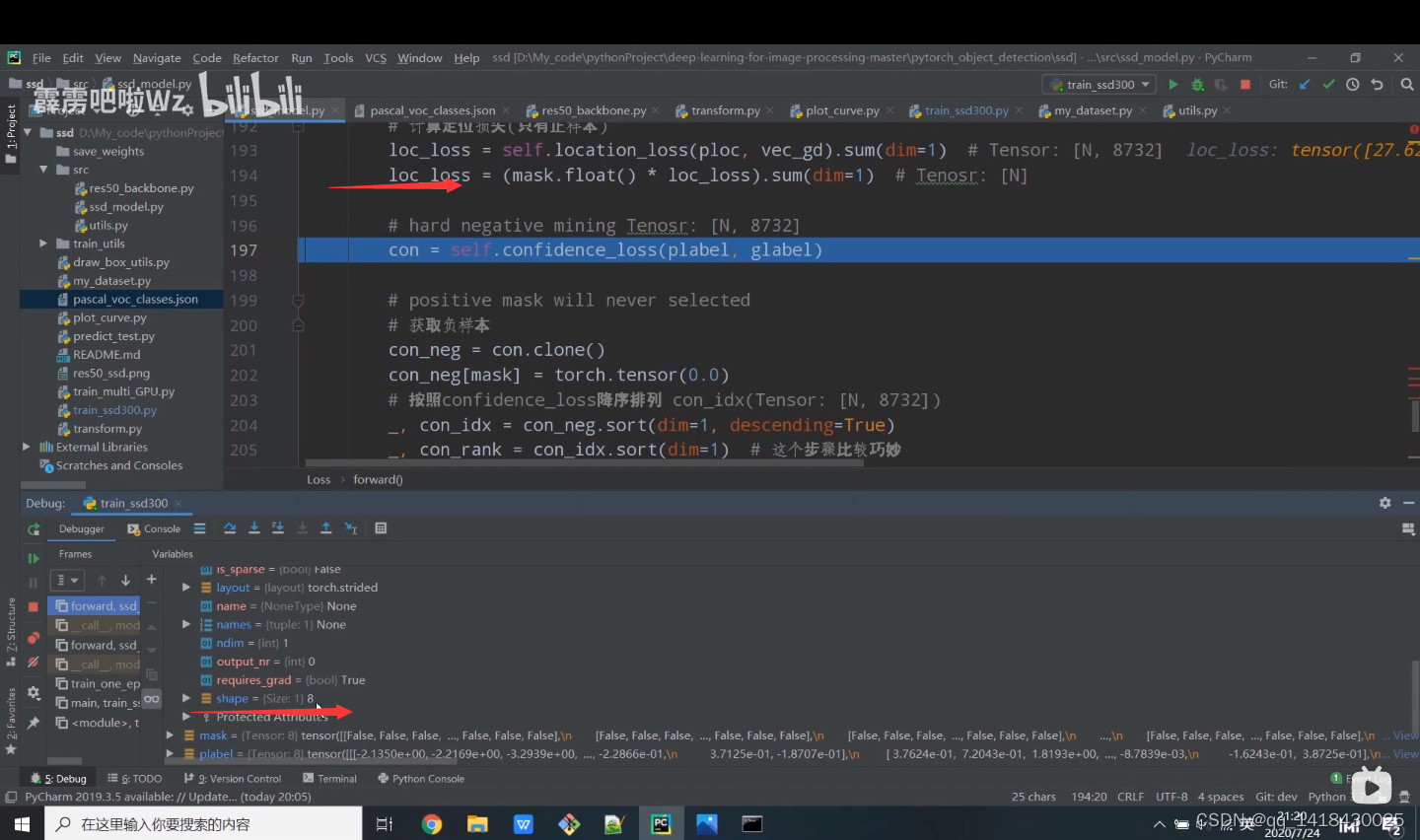
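The last lines of `Loss.forward` normalize each image's loss by its number of positives and zero out images without any positive. A plain-Python mirror with made-up numbers (the function name `batch_loss` is hypothetical):

```python
def batch_loss(total_loss, pos_num):
    """Mirror of the final lines of Loss.forward, using plain Python lists."""
    num_mask = [1.0 if p > 0 else 0.0 for p in pos_num]  # does the image have any positive?
    denom = [max(float(p), 1e-6) for p in pos_num]       # clamp(min=1e-6): avoid division by zero
    per_image = [t * m / d for t, m, d in zip(total_loss, num_mask, denom)]
    return sum(per_image) / len(per_image)               # .mean(dim=0)


print(batch_loss([12.0, 6.0, 0.0], [4, 2, 0]))  # 2.0
```

Note that `.mean(dim=0)` still divides by the full batch size, so an image without positives contributes 0 but is counted in the denominator.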

4.1 Debugging mask (glabel)

mask and glabel have the same shape, 8x8732 (batch size 8 in this debugging run).

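gloc has shape 8x4x8732 (batch size first). This is why the Loss constructor swaps the first and second dimensions of dboxes with transpose and then adds a leading dimension with unsqueeze: [8732, 4] becomes [1, 4, 8732], which matches gloc's layout.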
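The transpose and unsqueeze only rearrange indices. The plain-Python sketch below uses a toy `dboxes` with 3 anchors (nested lists standing in for tensors) to show that after both steps, element [0][k][a] is coordinate k of anchor a, the same [batch, coordinate, anchor] indexing that `gloc` uses; PyTorch then broadcasts the size-1 leading dimension across the batch.

```python
num_anchors = 3
# toy dboxes in [num_anchors, 4] layout: one (cx, cy, w, h) row per anchor
dboxes = [[0.1 * a, 0.2 * a, 0.3, 0.4] for a in range(num_anchors)]

# transpose(0, 1): [num_anchors, 4] -> [4, num_anchors]
dboxes_t = [[dboxes[a][k] for a in range(num_anchors)] for k in range(4)]
# unsqueeze(dim=0): [4, num_anchors] -> [1, 4, num_anchors]
dboxes_u = [dboxes_t]

# same indexing pattern as gloc[n][k][a] (batch, coordinate, anchor)
assert all(dboxes_u[0][k][a] == dboxes[a][k] for k in range(4) for a in range(num_anchors))
print(len(dboxes_u), len(dboxes_u[0]), len(dboxes_u[0][0]))  # 1 4 3
```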

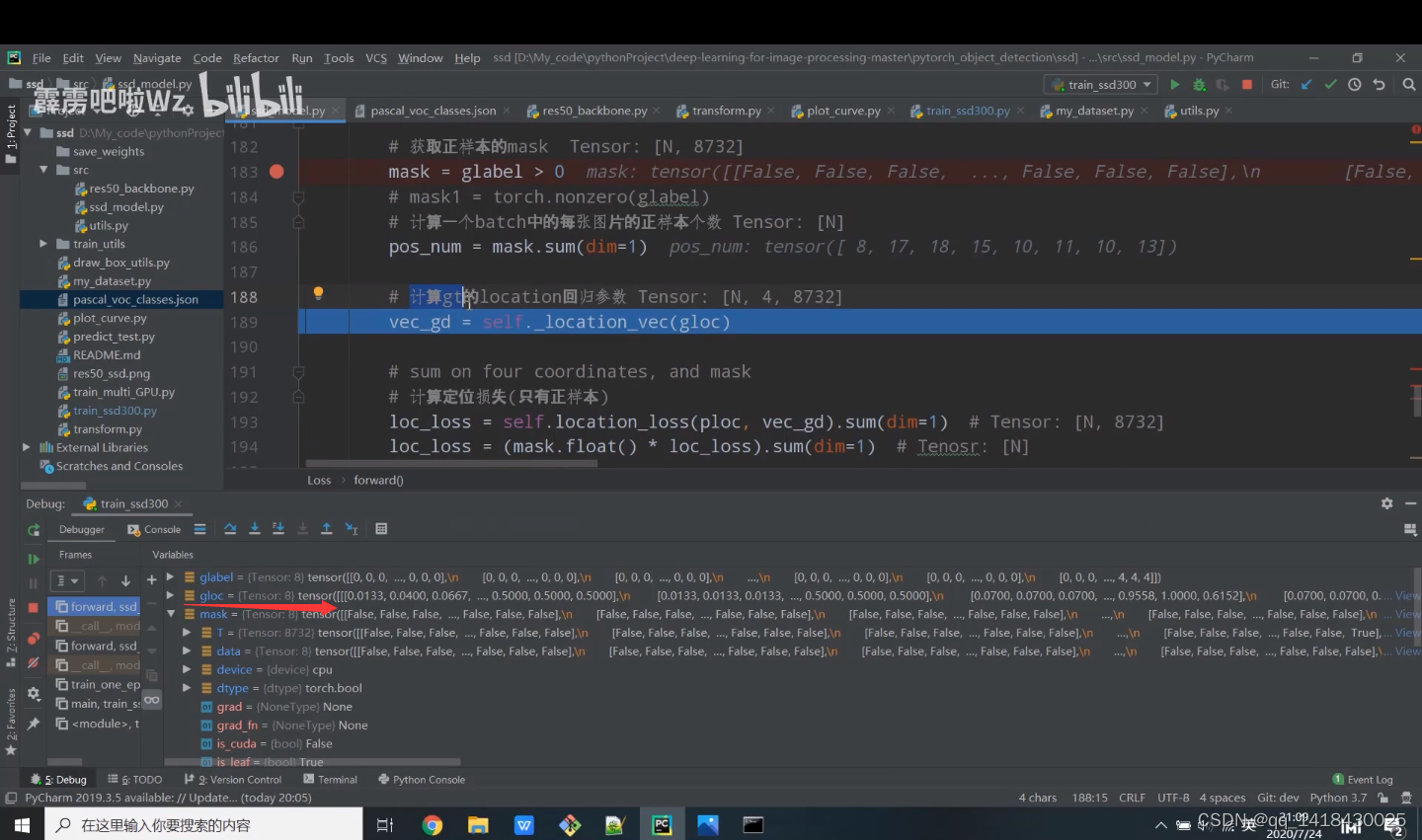

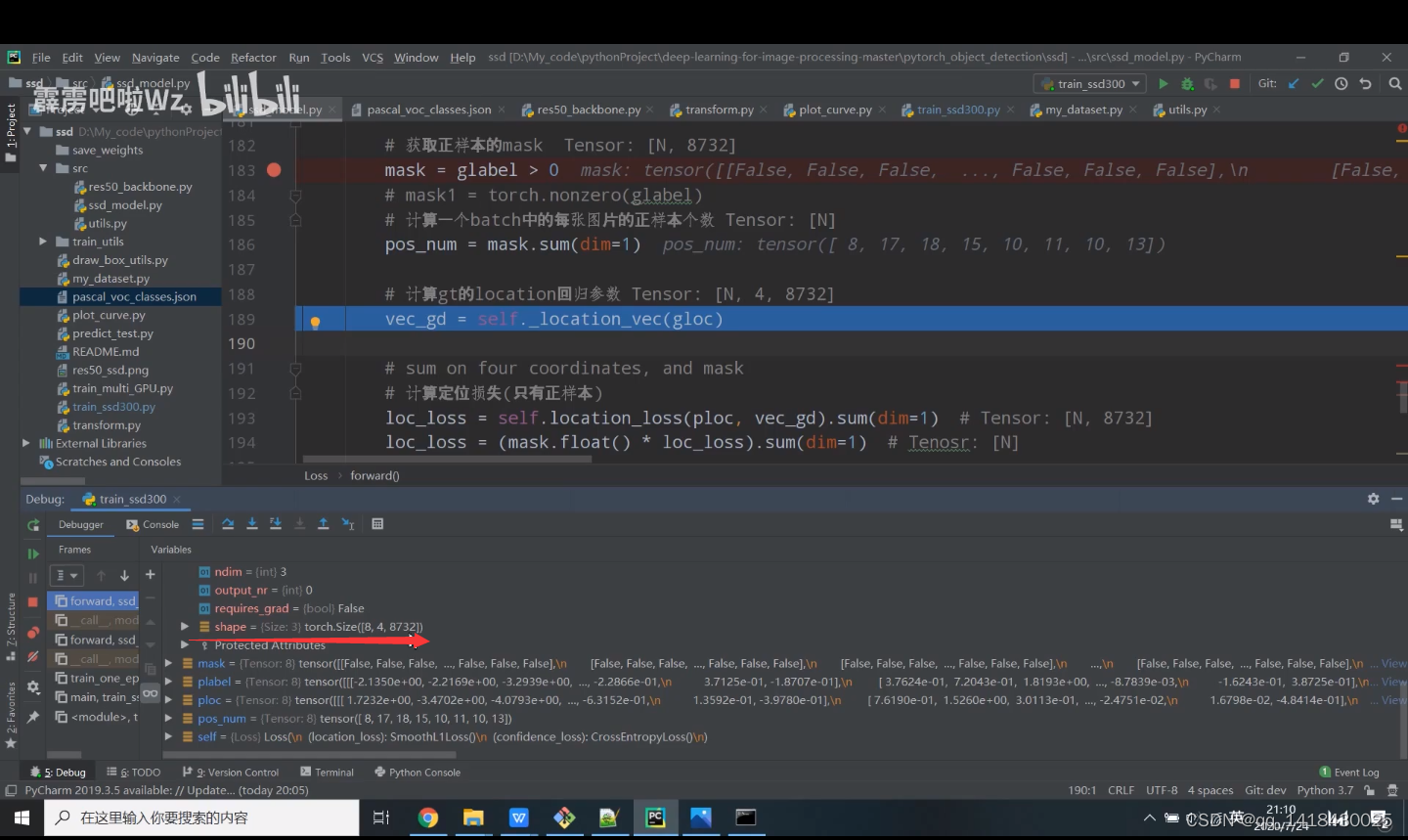

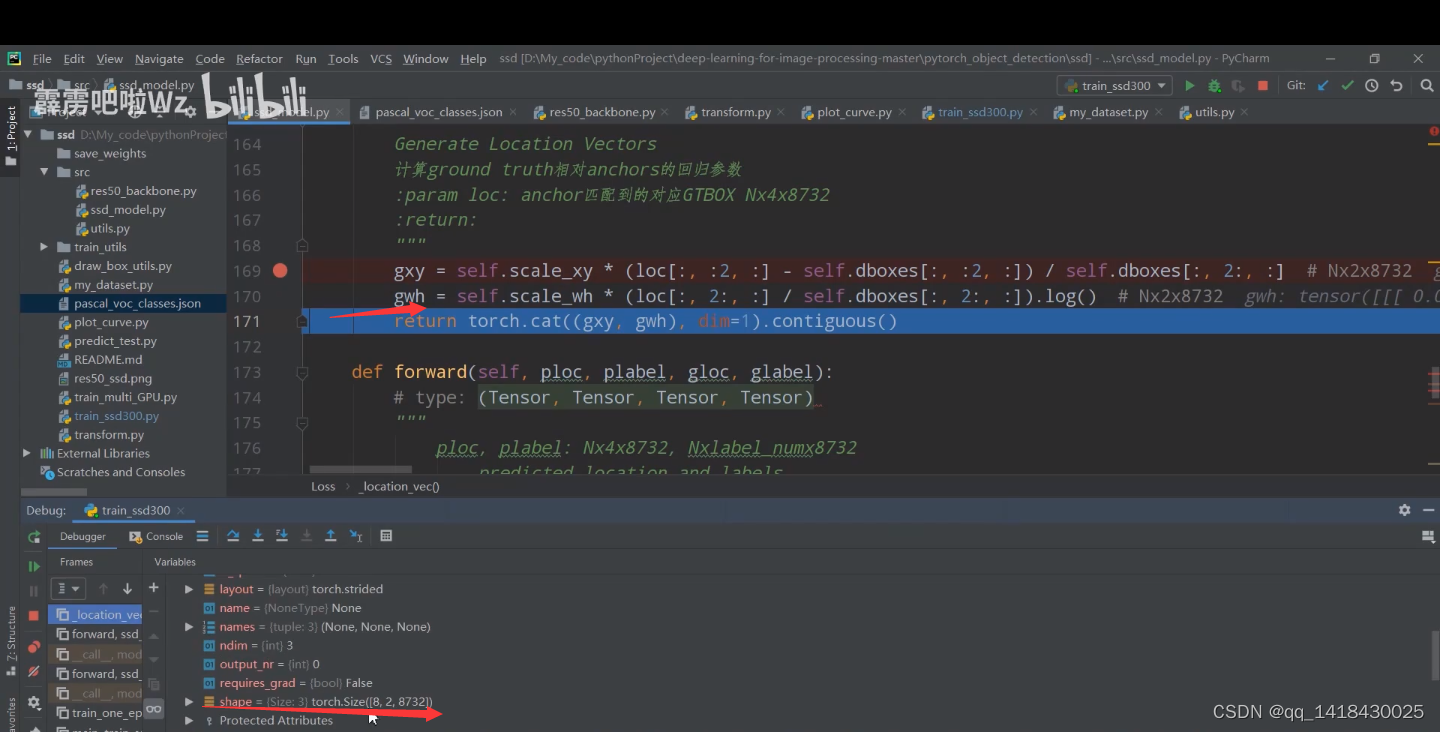

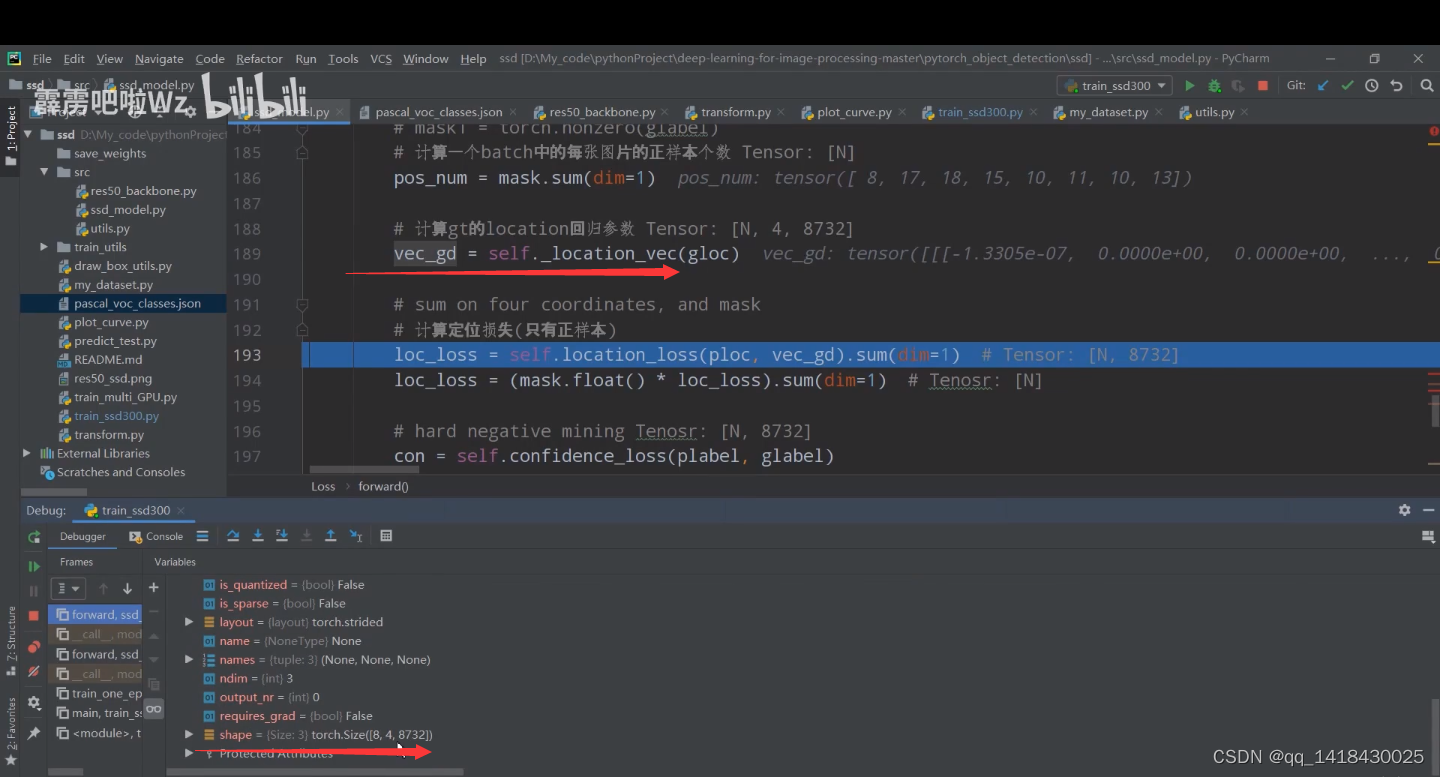

4.2 Computing the localization loss

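Localization regression targets: the center-x (center-y) target is the ground-truth center x (y) minus the default box (anchor) center x (y), divided by the default box width w (height h). The width (height) target is the log of the ground-truth width w (height h) divided by the default box width w (height h). In the code, the center targets are additionally multiplied by scale_xy = 10 and the size targets by scale_wh = 5.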

```python
    def _location_vec(self, loc):
        # type: (Tensor) -> Tensor
        """
        Generate Location Vectors
        Compute the regression targets of the ground truth relative to the anchors
        :param loc: GT boxes matched to each anchor, Nx4x8732
        :return: regression targets, Nx4x8732
        """
        gxy = self.scale_xy * (loc[:, :2, :] - self.dboxes[:, :2, :]) / self.dboxes[:, 2:, :]  # Nx2x8732 (scale factor meant to speed up convergence)
        gwh = self.scale_wh * (loc[:, 2:, :] / self.dboxes[:, 2:, :]).log()  # Nx2x8732 (scale factor meant to speed up convergence)
        return torch.cat((gxy, gwh), dim=1).contiguous()
```
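The regression targets for a single box, including the factors 10 and 5, written in plain Python as a sketch mirroring `_location_vec` (the box values are made up for illustration):

```python
from math import log

SCALE_XY = 1.0 / 0.1  # 10, as in Loss.__init__
SCALE_WH = 1.0 / 0.2  # 5


def encode(gt, anchor):
    """Regression targets of a GT box relative to an anchor; both boxes are (cx, cy, w, h)."""
    gx, gy, gw, gh = gt
    ax, ay, aw, ah = anchor
    return (
        SCALE_XY * (gx - ax) / aw,   # center x offset, normalized by the anchor width
        SCALE_XY * (gy - ay) / ah,   # center y offset, normalized by the anchor height
        SCALE_WH * log(gw / aw),     # log width ratio
        SCALE_WH * log(gh / ah),     # log height ratio
    )


anchor = (0.5, 0.5, 0.2, 0.4)
gt = (0.52, 0.46, 0.25, 0.4)
print(encode(gt, anchor))  # approximately (1.0, -1.0, 1.1157, 0.0)
```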


The resulting vec_gd has shape 8x4x8732, the same as gloc.

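Before masking, loc_loss covers both positive and negative samples and has shape [8, 8732]; after multiplying by the positive mask and summing over anchors, it has shape [8] and contains only the positives' loss. The localization loss of negative samples is meaningless, so only positives contribute to it.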
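A toy example of the masking step in plain Python (values invented for illustration; lists stand in for tensors):

```python
# loc_loss before masking: [N, num_anchors] (here N = 2 images, 4 anchors)
loc_loss = [[0.5, 2.0, 0.3, 1.0],
            [0.2, 0.1, 4.0, 0.7]]
# positive mask, as computed by glabel > 0
mask = [[True, False, False, True],
        [False, False, True, False]]

# (mask.float() * loc_loss).sum(dim=1): keep only positive anchors, sum per image
per_image = [sum(l * m for l, m in zip(row, mrow)) for row, mrow in zip(loc_loss, mask)]
print(per_image)  # [1.5, 4.0]
```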

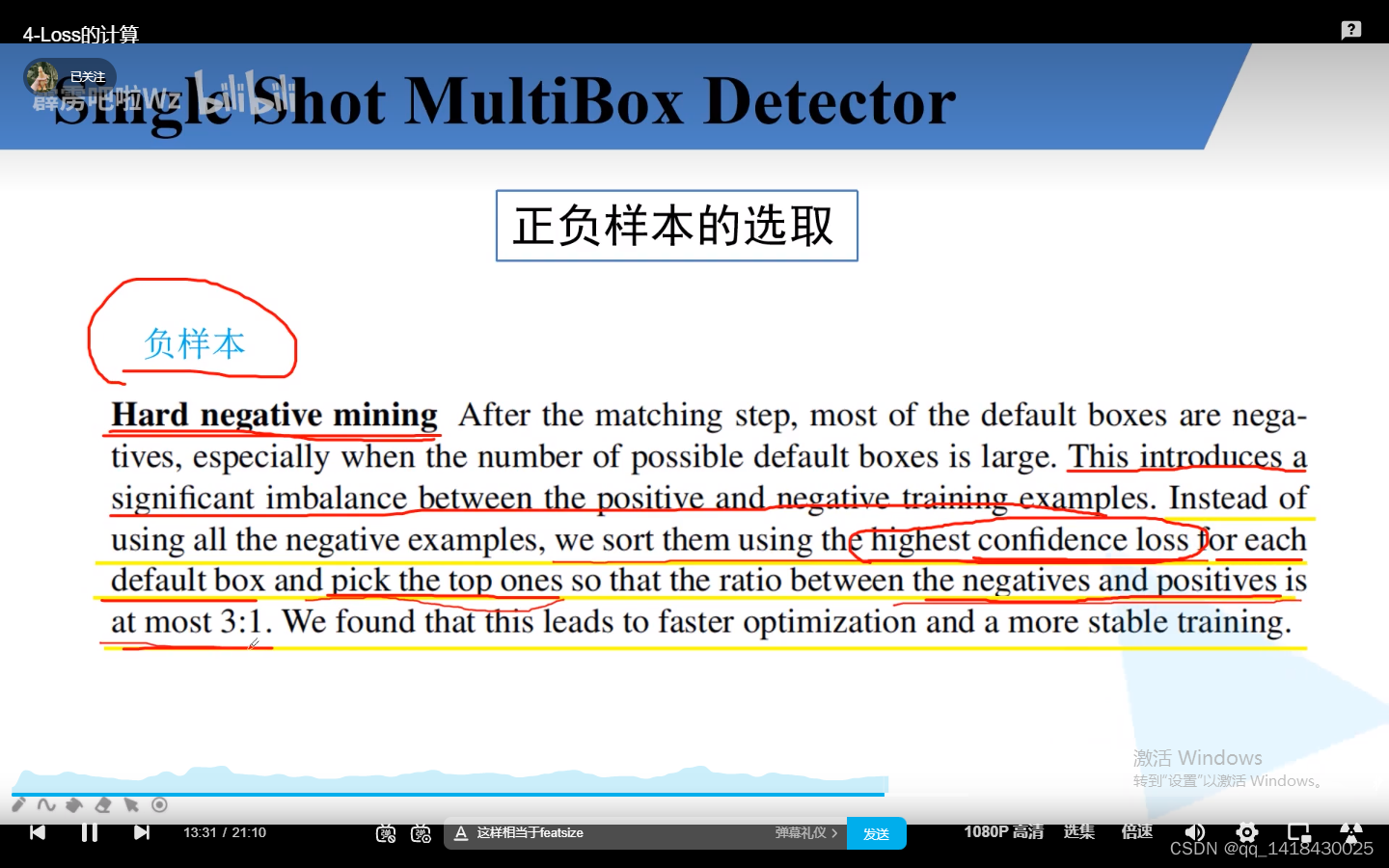

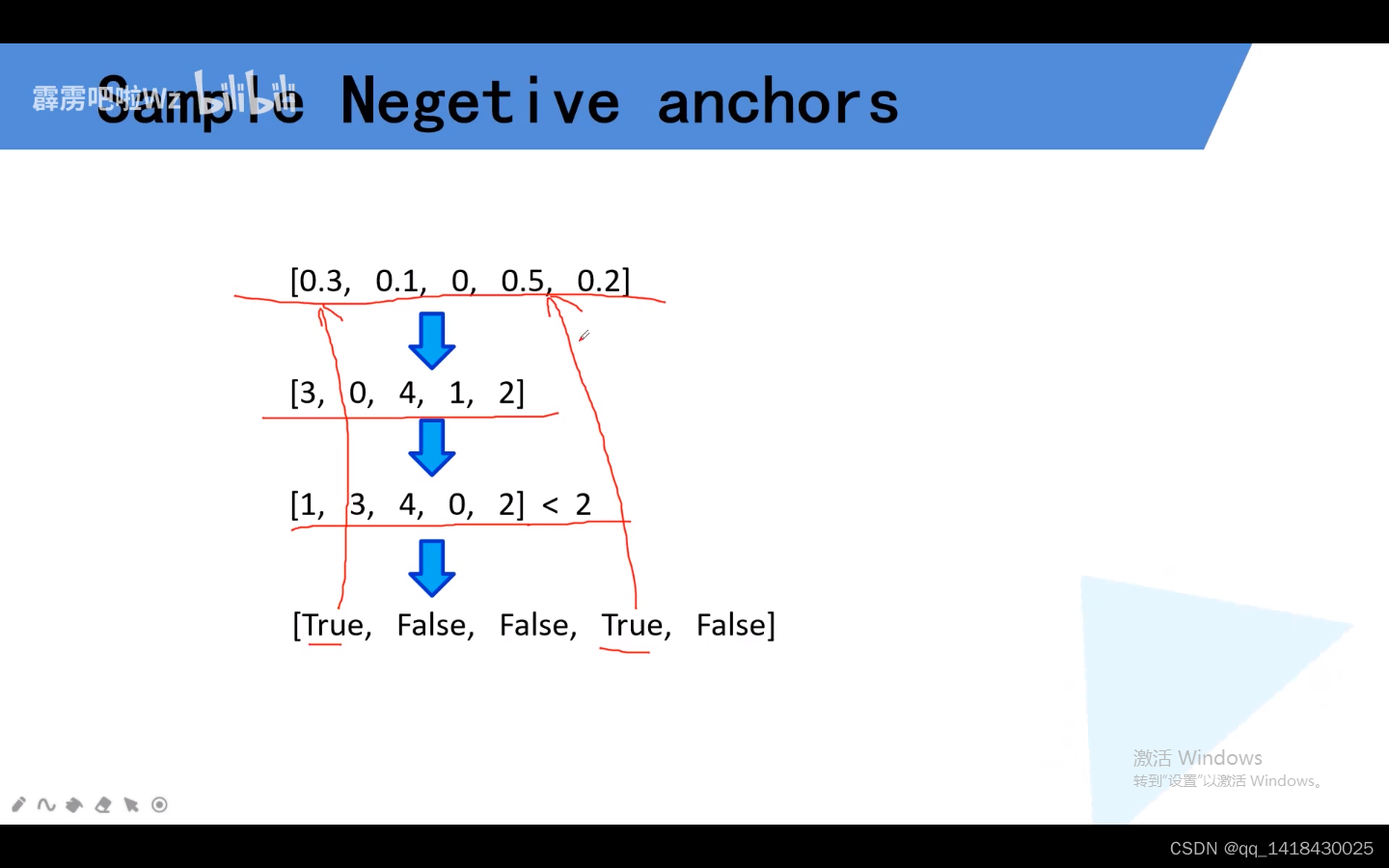

4.3 Selecting negative samples (a neat double-sort trick)

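Negatives are chosen by sorting the confidence loss from high to low and taking those with the highest loss. The negative-to-positive ratio is 3:1, i.e. three times as many negatives as positives (capped at 8732).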

This sort-based procedure can, for example, pick out the top-2 entries.

```python
# sort confidence_loss in descending order, con_idx (Tensor: [N, 8732])
_, con_idx = con_neg.sort(dim=1, descending=True)
_, con_rank = con_idx.sort(dim=1)  # clever step: gives each anchor's rank in the descending order

# number of negative three times positive
# the number of negatives used in the loss is 3x the positives (Hard negative mining in the paper),
# but it cannot exceed the total number of samples, 8732
neg_num = torch.clamp(3 * pos_num, max=mask.size(1)).unsqueeze(-1)
neg_mask = torch.lt(con_rank, neg_num)  # (lt: <) Tensor [N, 8732]
```
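The double sort can be hard to picture. The plain-Python sketch below (the function `hard_negative_mask` is hypothetical; `sorted` stands in for `Tensor.sort`) shows that sorting the indices returned by the first sort yields each anchor's rank, so `rank < neg_num` selects exactly the `neg_num` highest-loss anchors:

```python
def hard_negative_mask(con_neg, neg_num):
    """Pick the neg_num anchors with the largest confidence loss (double-sort trick)."""
    n = len(con_neg)
    # 1st sort: anchor indices ordered by loss, largest first (plays the role of con_idx)
    con_idx = sorted(range(n), key=lambda i: con_neg[i], reverse=True)
    # 2nd sort: argsort of con_idx; con_rank[a] is anchor a's position in con_idx
    con_rank = sorted(range(n), key=lambda k: con_idx[k])
    neg_mask = [con_rank[a] < neg_num for a in range(n)]
    return neg_mask, con_rank


# anchor 0 is a positive whose loss was already set to 0 (con_neg[mask] = 0.0)
con_neg = [0.0, 0.9, 0.1, 0.5, 0.3]
neg_mask, con_rank = hard_negative_mask(con_neg, neg_num=2)
print(con_rank)  # [4, 0, 3, 1, 2]
print(neg_mask)  # [False, True, False, True, False]
```

Because the positives' losses were zeroed, they land at the end of the ranking and are not picked as negatives.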

5. Post-processing

```python
import torch
import torch.nn.functional as F
from torch import nn, Tensor
from typing import List, Tuple


class PostProcess(nn.Module):  # post-processing
    def __init__(self, dboxes):
        super(PostProcess, self).__init__()
        # [num_anchors, 4] -> [1, num_anchors, 4]
        self.dboxes_xywh = nn.Parameter(dboxes(order='xywh').unsqueeze(dim=0),
                                        requires_grad=False)
        self.scale_xy = dboxes.scale_xy  # 0.1
        self.scale_wh = dboxes.scale_wh  # 0.2

        self.criteria = 0.5    # IoU threshold for non-maximum suppression
        self.max_output = 100  # keep at most 100 detections per image

    def forward(self, bboxes_in, scores_in):  # predicted box offsets and per-class scores
        # turn the predicted regression parameters into final boxes and apply softmax to the scores
        bboxes, probs = self.scale_back_batch(bboxes_in, scores_in)

        outputs = torch.jit.annotate(List[Tuple[Tensor, Tensor, Tensor]], [])
        # iterate over every image in the batch
        # bboxes: [batch, 8732, 4]
        for bbox, prob in zip(bboxes.split(1, 0), probs.split(1, 0)):  # split_size, split_dim
            # bbox: [1, 8732, 4] -> [8732, 4]
            bbox = bbox.squeeze(0)
            prob = prob.squeeze(0)
            outputs.append(self.decode_single_new(bbox, prob, self.criteria, self.max_output))
        return outputs

    def scale_back_batch(self, bboxes_in, scores_in):
        # type: (Tensor, Tensor) -> Tuple[Tensor, Tensor]
        """
            1) turn the predicted box regression parameters into final box coordinates
            2) convert the box format from xywh back to ltrb
            3) apply softmax to the predicted scores

            Do scale and transform from xywh to ltrb
            suppose input N x 4 x num_bbox | N x label_num x num_bbox

            bboxes_in: [N, 4, 8732], the predicted xywh regression parameters
            scores_in: [N, label_num, 8732], the predicted class scores of each default box
        """
        # Returns a view of the original tensor with its dimensions permuted.
        # [batch, 4, 8732] -> [batch, 8732, 4]
        bboxes_in = bboxes_in.permute(0, 2, 1)
        # [batch, label_num, 8732] -> [batch, 8732, label_num]
        scores_in = scores_in.permute(0, 2, 1)
        # print(bboxes_in.is_contiguous())

        bboxes_in[:, :, :2] = self.scale_xy * bboxes_in[:, :, :2]  # predicted x, y regression parameters
        bboxes_in[:, :, 2:] = self.scale_wh * bboxes_in[:, :, 2:]  # predicted w, h regression parameters

        # apply the predicted regression parameters to the default boxes to get the final boxes
        bboxes_in[:, :, :2] = bboxes_in[:, :, :2] * self.dboxes_xywh[:, :, 2:] + self.dboxes_xywh[:, :, :2]
        bboxes_in[:, :, 2:] = bboxes_in[:, :, 2:].exp() * self.dboxes_xywh[:, :, 2:]

        # transform format to ltrb
        l = bboxes_in[:, :, 0] - 0.5 * bboxes_in[:, :, 2]
        t = bboxes_in[:, :, 1] - 0.5 * bboxes_in[:, :, 3]
        r = bboxes_in[:, :, 0] + 0.5 * bboxes_in[:, :, 2]
        b = bboxes_in[:, :, 1] + 0.5 * bboxes_in[:, :, 3]

        bboxes_in[:, :, 0] = l  # xmin
        bboxes_in[:, :, 1] = t  # ymin
        bboxes_in[:, :, 2] = r  # xmax
        bboxes_in[:, :, 3] = b  # ymax

        # scores_in: [batch, 8732, label_num]
        return bboxes_in, F.softmax(scores_in, dim=-1)
```

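5.1 In the loss, scale_xy is 10 (1/0.1) and scale_wh is 5 (1/0.2). In post-processing, the predicted x, y regression parameters are multiplied by 0.1 and the w, h parameters by 0.2. This undoes the factors scale_xy (10) and scale_wh (5) that were applied when the GT regression targets were computed.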
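A plain-Python round trip for a single box showing that the 0.1 and 0.2 used in decoding cancel the 10 and 5 used in encoding (helper names are hypothetical; `decode` mirrors `scale_back_batch` before the ltrb conversion):

```python
from math import exp, log


def encode(gt, anchor, scale_xy=10.0, scale_wh=5.0):
    """GT box -> regression targets (as in Loss._location_vec); boxes are (cx, cy, w, h)."""
    gx, gy, gw, gh = gt
    ax, ay, aw, ah = anchor
    return (scale_xy * (gx - ax) / aw, scale_xy * (gy - ay) / ah,
            scale_wh * log(gw / aw), scale_wh * log(gh / ah))


def decode(reg, anchor, scale_xy=0.1, scale_wh=0.2):
    """Regression parameters -> box (cx, cy, w, h), as in PostProcess.scale_back_batch."""
    tx, ty, tw, th = reg
    ax, ay, aw, ah = anchor
    return (scale_xy * tx * aw + ax, scale_xy * ty * ah + ay,
            exp(scale_wh * tw) * aw, exp(scale_wh * th) * ah)


anchor = (0.5, 0.5, 0.2, 0.4)
gt = (0.52, 0.46, 0.25, 0.4)
restored = decode(encode(gt, anchor), anchor)
print(restored)  # approximately (0.52, 0.46, 0.25, 0.4)
```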

```python
    def decode_single_new(self, bboxes_in, scores_in, criteria, num_output):
        # type: (Tensor, Tensor, float, int) -> Tuple[Tensor, Tensor, Tensor]
        """
        decode:
            input      : bboxes_in (Tensor 8732 x 4), scores_in (Tensor 8732 x nitems)
            output     : bboxes_out (Tensor nboxes x 4), labels_out (Tensor nboxes), scores_out (Tensor nboxes)
            criteria   : IoU threshold of bboxes
            num_output : maximum number of output bboxes
        """
        device = bboxes_in.device
        num_classes = scores_in.shape[-1]  # includes the background

        # clip boxes that extend beyond the image
        bboxes_in = bboxes_in.clamp(min=0, max=1)

        # [8732, 4] -> [8732, 21, 4]
        bboxes_in = bboxes_in.repeat(1, num_classes).reshape(scores_in.shape[0], -1, 4)

        # create labels for each prediction
        labels = torch.arange(num_classes, device=device)
        # [num_classes] -> [8732, num_classes]
        labels = labels.view(1, -1).expand_as(scores_in)

        # remove prediction with the background label
        # drop the background class (index 0)
        bboxes_in = bboxes_in[:, 1:, :]  # [8732, 21, 4] -> [8732, 20, 4]
        scores_in = scores_in[:, 1:]     # [8732, 21] -> [8732, 20]
        labels = labels[:, 1:]           # [8732, 21] -> [8732, 20]

        # batch everything, by making every class prediction be a separate instance
        bboxes_in = bboxes_in.reshape(-1, 4)  # [8732, 20, 4] -> [8732x20, 4]
        scores_in = scores_in.reshape(-1)     # [8732, 20] -> [8732x20]
        labels = labels.reshape(-1)           # [8732, 20] -> [8732x20]

        # remove low scoring boxes (score threshold 0.05)
        # inds = torch.nonzero(scores_in > 0.05).squeeze(1)
        inds = torch.where(torch.gt(scores_in, 0.05))[0]
        bboxes_in, scores_in, labels = bboxes_in[inds, :], scores_in[inds], labels[inds]

        # remove empty boxes: width = xmax - xmin, height = ymax - ymin
        ws, hs = bboxes_in[:, 2] - bboxes_in[:, 0], bboxes_in[:, 3] - bboxes_in[:, 1]
        keep = (ws >= 1 / 300) & (hs >= 1 / 300)  # relative coordinates: 1/300 is one pixel of the 300x300 input
        # keep = keep.nonzero().squeeze(1)
        keep = torch.where(keep)[0]
        bboxes_in, scores_in, labels = bboxes_in[keep], scores_in[keep], labels[keep]

        # non-maximum suppression
        keep = batched_nms(bboxes_in, scores_in, labels, iou_threshold=criteria)

        # keep only topk scoring predictions
        keep = keep[:num_output]
        bboxes_out = bboxes_in[keep, :]
        scores_out = scores_in[keep]
        labels_out = labels[keep]

        return bboxes_out, labels_out, scores_out
```

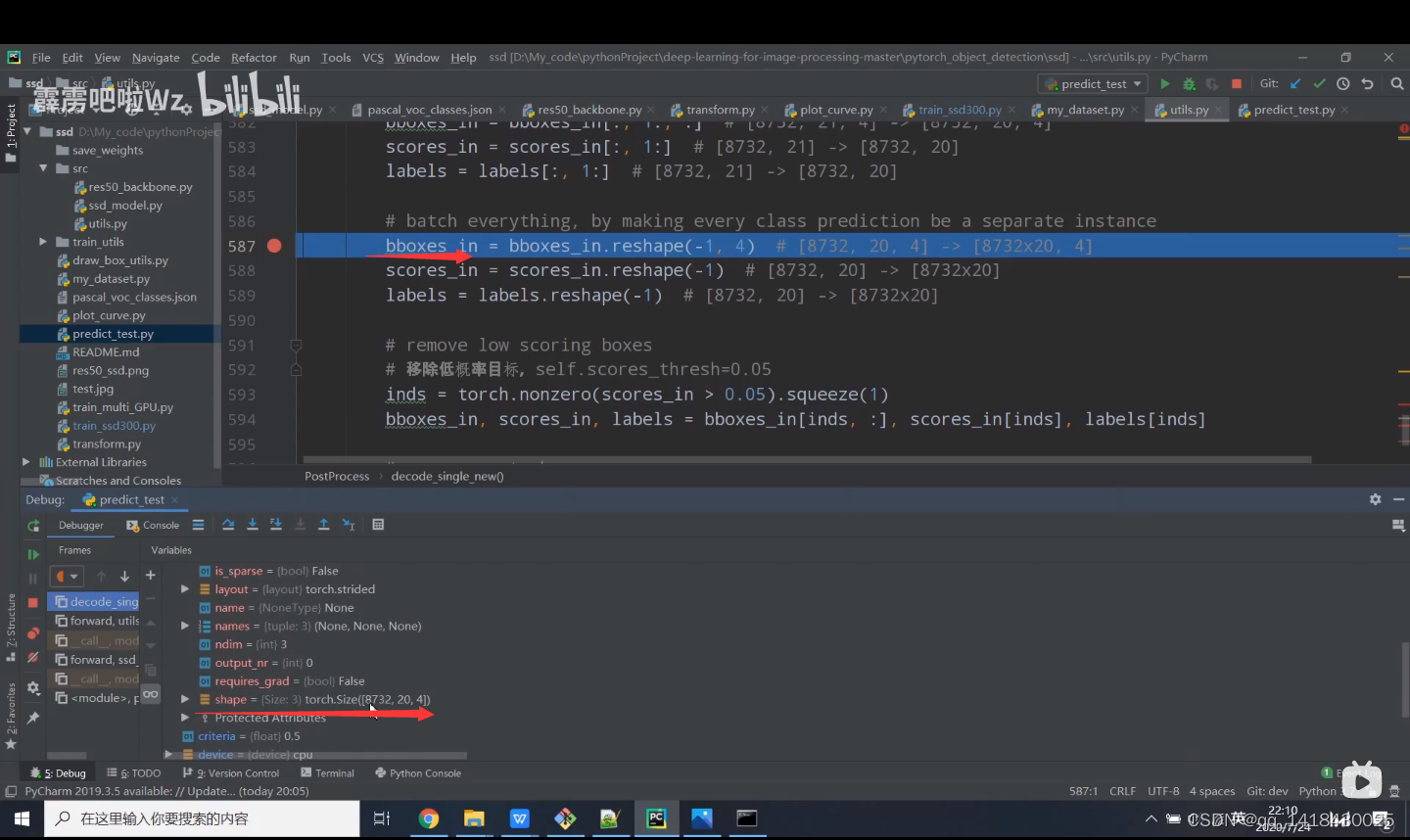

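5.2 The official implementation instead uses decode_single in the Encoder module, which runs non-maximum suppression separately for each class of each image. It is less efficient but easier to understand.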

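5.3 reshape flattens bboxes_in, and likewise scores_in and labels, so that every (anchor, class) pair becomes a separate candidate. The predictions shown below come from a model without pretrained weights loaded, so they are not accurate.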

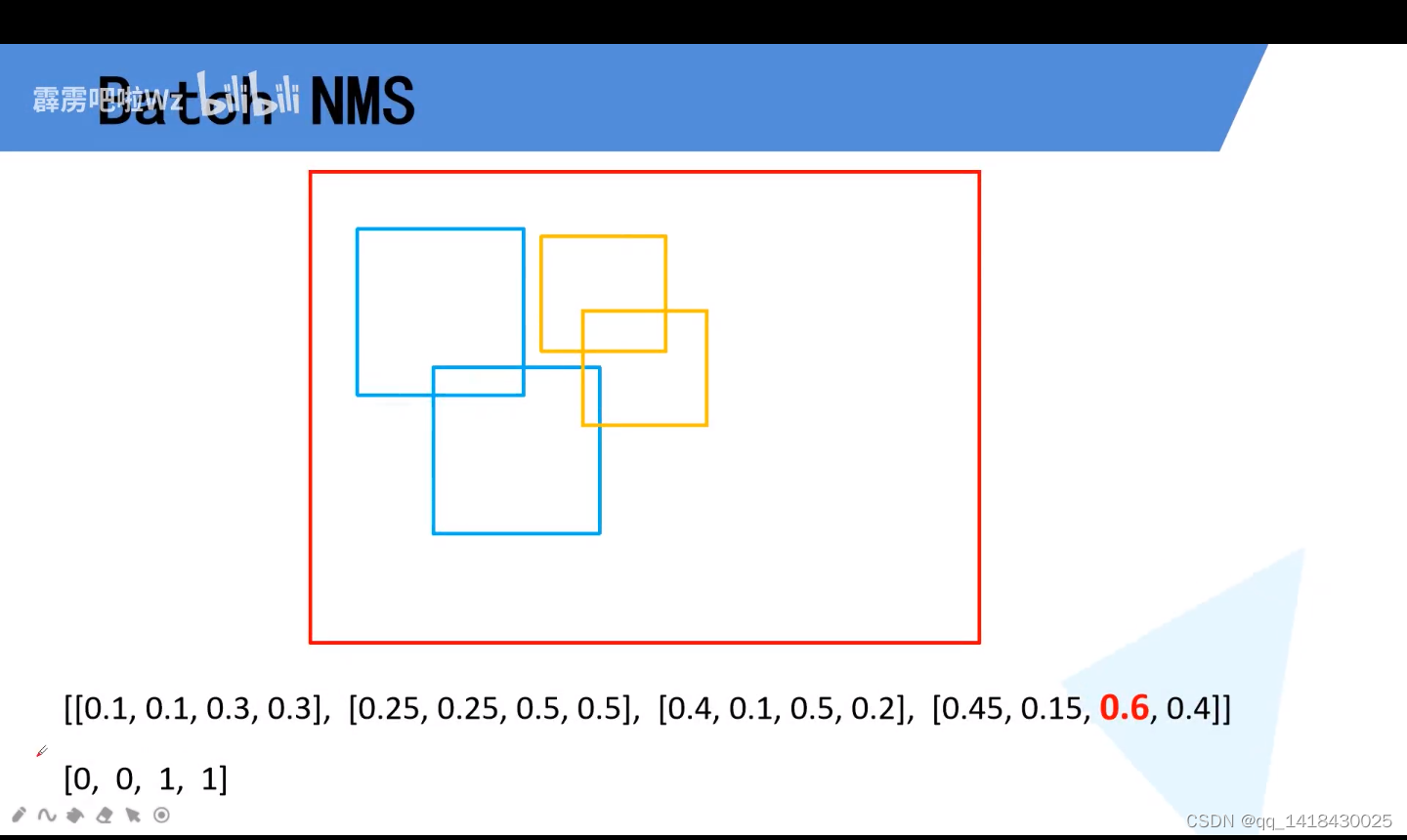

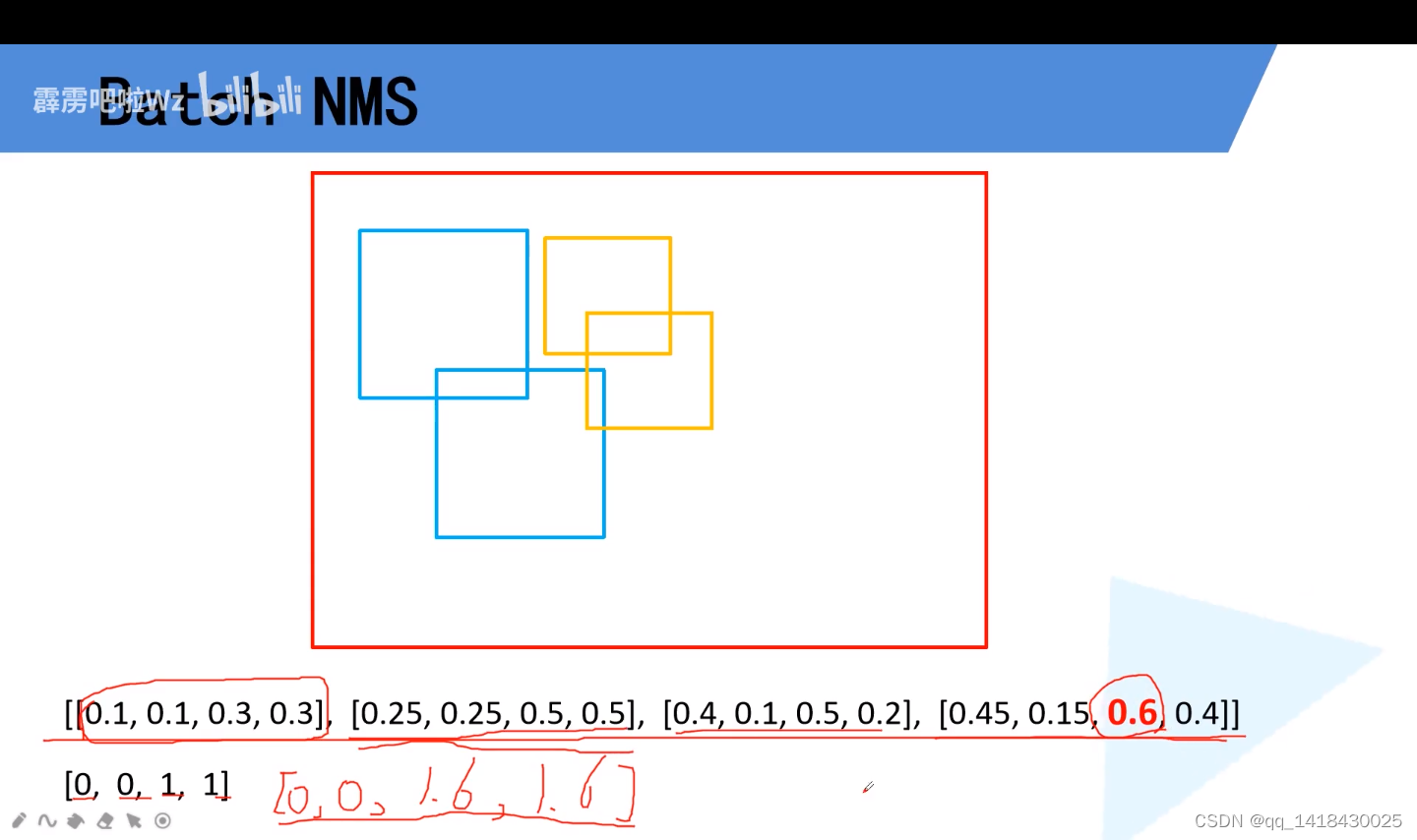

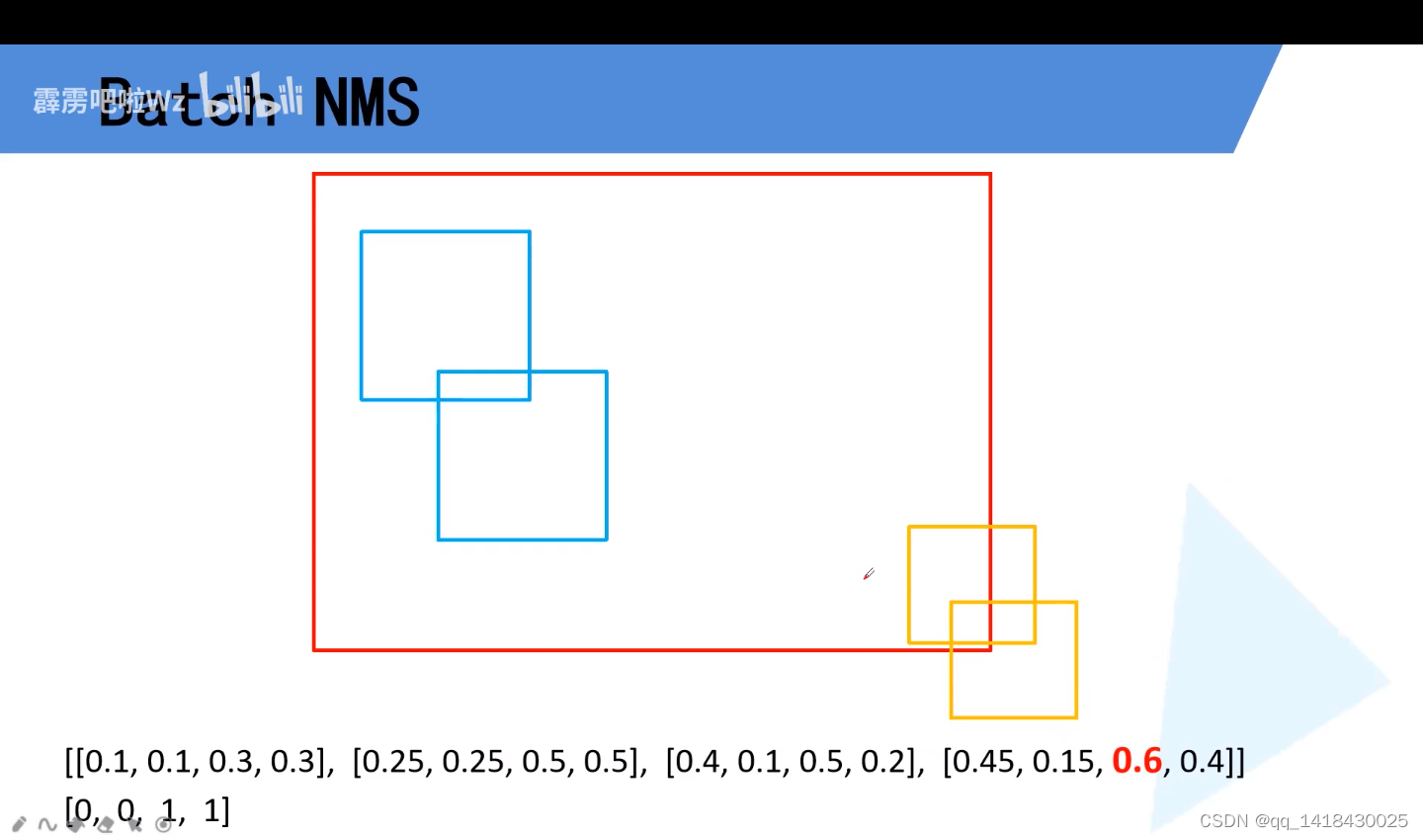
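The flattening in `decode_single_new` turns every (anchor, class) pair into its own candidate, so the same box can survive once per class. A plain-Python sketch of the background removal and the 0.05 score threshold (the function `flatten_candidates` is hypothetical; it walks the scores in the same anchor-major order as `reshape(-1)`):

```python
def flatten_candidates(probs, score_thresh=0.05):
    """probs[a][c]: softmax score of class c for anchor a (c = 0 is background).
    Returns (anchor, label, score) for every non-background pair above the threshold."""
    candidates = []
    for a, row in enumerate(probs):
        for label, score in enumerate(row):
            if label == 0:             # drop the background column
                continue
            if score > score_thresh:   # torch.gt(scores_in, 0.05)
                candidates.append((a, label, score))
    return candidates


probs = [
    [0.90, 0.06, 0.04],  # anchor 0: mostly background
    [0.10, 0.85, 0.05],  # anchor 1: class 1
    [0.20, 0.30, 0.50],  # anchor 2: classes 1 and 2 both kept
]
print(flatten_candidates(probs))
# [(0, 1, 0.06), (1, 1, 0.85), (2, 1, 0.3), (2, 2, 0.5)]
```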

```python
import torch
from torch import Tensor
from torchvision.ops import nms


def batched_nms(boxes, scores, idxs, iou_threshold):
    # type: (Tensor, Tensor, Tensor, float) -> Tensor
    """
    Performs non-maximum suppression in a batched fashion.

    Each index value corresponds to a category, and NMS
    will not be applied between elements of different categories.

    Parameters
    ----------
    boxes : Tensor[N, 4]
        boxes where NMS will be performed. They
        are expected to be in (x1, y1, x2, y2) format
    scores : Tensor[N]
        scores for each one of the boxes
    idxs : Tensor[N]
        indices of the categories for each one of the boxes.
    iou_threshold : float
        discards all overlapping boxes
        with IoU > iou_threshold

    Returns
    -------
    keep : Tensor
        int64 tensor with the indices of
        the elements that have been kept by NMS, sorted
        in decreasing order of scores
    """
    if boxes.numel() == 0:
        return torch.empty((0,), dtype=torch.int64, device=boxes.device)

    # strategy: in order to perform NMS independently per class.
    # we add an offset to all the boxes. The offset is dependent
    # only on the class idx, and is large enough so that boxes
    # from different classes do not overlap
    # largest coordinate value among all boxes (xmin, ymin, xmax, ymax)
    max_coordinate = boxes.max()

    # to(): Performs Tensor dtype and/or device conversion
    # generate a large offset for each class;
    # .to(boxes) only makes the offsets use the same dtype and device as boxes
    offsets = idxs.to(boxes) * (max_coordinate + 1)
    # after adding the per-class offsets, boxes of different classes can no longer overlap
    boxes_for_nms = boxes + offsets[:, None]
    keep = nms(boxes_for_nms, scores, iou_threshold)
    return keep
```

5.4 The NMS algorithm (boxes of different classes are shifted apart so they never overlap)
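The offset trick can be seen on a toy example. The sketch below is a plain-Python greedy NMS standing in for `torchvision.ops.nms` (it is not torchvision's implementation), combined with the same per-class offset as `batched_nms`. It assumes non-negative coordinates, which holds here because `decode_single_new` clamps boxes to [0, 1]:

```python
def iou(a, b):
    """IoU of two boxes in (xmin, ymin, xmax, ymax) format."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold):
    """Greedy NMS: drop a box whose IoU with an already kept box exceeds the threshold."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep  # indices sorted by decreasing score


def batched_nms(boxes, scores, labels, iou_threshold):
    """Per-class NMS: shift each class's boxes by label * (max_coordinate + 1)."""
    if not boxes:
        return []
    max_coordinate = max(max(b) for b in boxes)
    shifted = [[v + lbl * (max_coordinate + 1) for v in b] for b, lbl in zip(boxes, labels)]
    return nms(shifted, scores, iou_threshold)


boxes = [(0.1, 0.1, 0.5, 0.5), (0.12, 0.1, 0.5, 0.52), (0.1, 0.1, 0.5, 0.5)]
scores = [0.9, 0.8, 0.7]
labels = [1, 1, 2]
print(batched_nms(boxes, scores, labels, iou_threshold=0.5))  # [0, 2]
```

Box 1 overlaps box 0 heavily and has the same class, so it is suppressed; box 2 is identical to box 0 but belongs to class 2, so after shifting it no longer overlaps and is kept.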

6. Matching positive and negative samples