心情

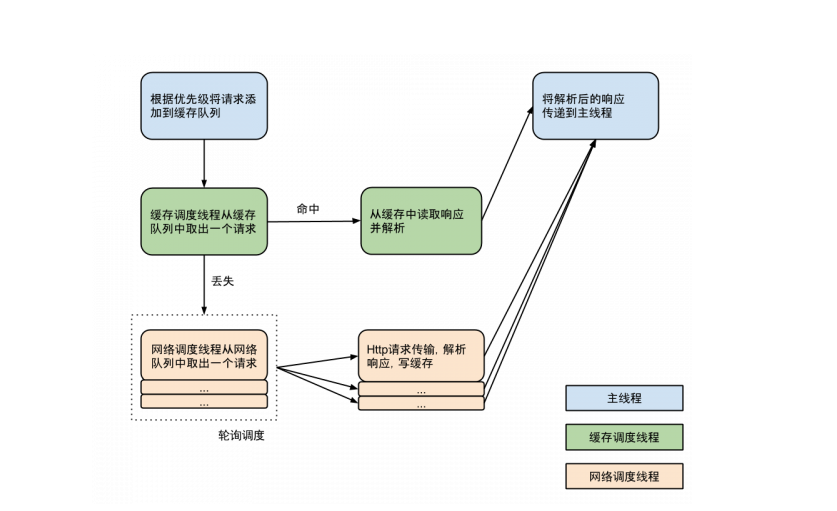

来这家公司也有差不多一年的时间了,项目中网络请求部分用到的是Volley,之前都是从别人的博客中了解Volley的用法和他的工作原理。如今项目也写的差不多了,回想起来,知道怎么用,似乎其他的也忘记差不多了,于是,自己想认真看下Volley的源码。先贴张图,看着流程图,也许代码好理解些。

Source code analysis

1. Volley initialization

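```java
public static RequestQueue newRequestQueue(Context context, HttpStack stack) {
    // Disk cache directory: <app cache dir>/volley
    File cacheDir = new File(context.getCacheDir(), "volley");

    // User-Agent is "<package name>/<version code>", or "volley/0" if that lookup fails
    String userAgent = "volley/0";
    try {
        String packageName = context.getPackageName();
        PackageInfo info = context.getPackageManager().getPackageInfo(packageName, 0);
        userAgent = packageName + "/" + info.versionCode;
    } catch (NameNotFoundException e) {
        // Keep the default User-Agent
    }

    if (stack == null) {
        if (Build.VERSION.SDK_INT >= 9) {
            // API 9 (Android 2.3, Gingerbread) and later: HttpURLConnection
            stack = new HurlStack();
        } else {
            // Before Gingerbread: Apache HttpClient
            stack = new HttpClientStack(AndroidHttpClient.newInstance(userAgent));
        }
    }

    Network network = new BasicNetwork(stack);
    RequestQueue queue = new RequestQueue(new DiskBasedCache(cacheDir), network);
    queue.start();
    return queue;
}
```

First, a Volley cache directory is created under the app's cache directory and handed to DiskBasedCache, the disk-backed implementation of the Cache interface; its internals don't matter here. Next, the HTTP stack is chosen by Android API level: below API 9 (Android 2.3) Volley uses HttpClient, and from API 9 on it uses HttpURLConnection. The stack is wrapped in a BasicNetwork, and the BasicNetwork together with the cache is used to build the RequestQueue. HurlStack and HttpClientStack adapt two different HTTP clients to the same HttpStack interface, which is the adapter pattern at work.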
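To make the stack selection concrete, here is a minimal plain-Java sketch that runs off-device. SimpleHttpStack, UrlConnectionStack, ApacheClientStack and chooseStack are hypothetical stand-ins for Volley's HttpStack, HurlStack and HttpClientStack, and the API level is passed in as a parameter instead of being read from Build.VERSION.SDK_INT.

```java
import java.util.Objects;

/** Hypothetical stand-in for Volley's HttpStack interface. */
interface SimpleHttpStack {
    String name();
}

/** Plays the role of HurlStack (HttpURLConnection). */
final class UrlConnectionStack implements SimpleHttpStack {
    @Override
    public String name() {
        return "HttpURLConnection";
    }
}

/** Plays the role of HttpClientStack (Apache HttpClient), which needs the User-Agent. */
final class ApacheClientStack implements SimpleHttpStack {
    private final String userAgent;

    ApacheClientStack(String userAgent) {
        this.userAgent = Objects.requireNonNull(userAgent);
    }

    @Override
    public String name() {
        return "HttpClient (" + userAgent + ")";
    }
}

final class StackSelectionSketch {
    /** Mirrors the branch in newRequestQueue: a caller-supplied stack wins, otherwise pick by API level. */
    static SimpleHttpStack chooseStack(SimpleHttpStack supplied, int sdkInt, String userAgent) {
        if (supplied != null) {
            return supplied;
        }
        // API 9 = Android 2.3 (Gingerbread)
        return sdkInt >= 9 ? new UrlConnectionStack() : new ApacheClientStack(userAgent);
    }

    public static void main(String[] args) {
        System.out.println(chooseStack(null, 8, "com.example/1").name());  // HttpClient (com.example/1)
        System.out.println(chooseStack(null, 23, "com.example/1").name()); // HttpURLConnection
    }
}
```

Because callers only ever see the common interface, the rest of the pipeline (BasicNetwork in Volley) does not care which client is underneath.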

2. Constructing the request queue

```java
public RequestQueue(Cache cache, Network network, int threadPoolSize, ResponseDelivery delivery) {
    this.mSequenceGenerator = new AtomicInteger();
    this.mWaitingRequests = new HashMap<>();
    this.mCurrentRequests = new HashSet<>();
    this.mCacheQueue = new PriorityBlockingQueue<>();
    this.mNetworkQueue = new PriorityBlockingQueue<>();
    this.mCache = cache;
    this.mNetwork = network;
    this.mDispatchers = new NetworkDispatcher[threadPoolSize];
    this.mDelivery = delivery;
}
```

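Let's look at its constructor. There are a lot of fields to initialize, but don't worry: once you understand them, you understand most of Volley.

- mSequenceGenerator: a sequence-number generator. Each Request added to the queue gets the next number, which identifies it and keeps requests of equal priority in the order they were added.
- mWaitingRequests: a Map from cache key to a queue of waiting requests. This is Volley's strategy for duplicate cacheable requests; we'll see how it works below.
- mCurrentRequests: the set of requests this queue is currently handling, as the name suggests.
- mCacheQueue: the cache queue. Cacheable requests go here first; if a usable cached response exists, no network request is needed.
- mNetworkQueue: the network queue. Requests are taken from this queue to be sent over the network.
- mCache: the DiskBasedCache passed in earlier, i.e. the disk cache.
- mNetwork: the BasicNetwork passed in earlier, which is essentially a wrapper around the HTTP connection.
- mDispatchers: the network dispatchers, which take Requests from the network queue and process them.
- mDelivery: the response delivery. Whenever a request has been processed, the result is passed to the UI thread through it so the UI can be updated.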
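Both queues are PriorityBlockingQueues, and the sequence number is what keeps them fair. In Volley, Request.compareTo puts higher priority first and, for equal priority, the lower sequence number first. The sketch below reproduces that ordering with a hypothetical SketchRequest class; the Priority constant names follow Volley's, everything else is simplified.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.PriorityBlockingQueue;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical, stripped-down request that only carries what the ordering needs. */
final class SketchRequest implements Comparable<SketchRequest> {
    enum Priority { LOW, NORMAL, HIGH, IMMEDIATE }

    final String url;
    final Priority priority;
    final int sequence;

    SketchRequest(String url, Priority priority, int sequence) {
        this.url = url;
        this.priority = priority;
        this.sequence = sequence;
    }

    @Override
    public int compareTo(SketchRequest other) {
        // Higher priority sorts first; equal priorities fall back to FIFO by sequence number.
        return priority == other.priority
                ? Integer.compare(sequence, other.sequence)
                : other.priority.ordinal() - priority.ordinal();
    }
}

final class OrderingSketch {
    private final AtomicInteger sequenceGenerator = new AtomicInteger();
    private final PriorityBlockingQueue<SketchRequest> queue = new PriorityBlockingQueue<>();

    void add(String url, SketchRequest.Priority priority) {
        // Like RequestQueue.add(): stamp each request with the next sequence number.
        queue.add(new SketchRequest(url, priority, sequenceGenerator.incrementAndGet()));
    }

    /** Drains the queue in the order a dispatcher would take() requests. */
    List<String> drain() {
        List<String> order = new ArrayList<>();
        SketchRequest r;
        while ((r = queue.poll()) != null) {
            order.add(r.url);
        }
        return order;
    }

    public static void main(String[] args) {
        OrderingSketch q = new OrderingSketch();
        q.add("a", SketchRequest.Priority.NORMAL);
        q.add("b", SketchRequest.Priority.NORMAL);
        q.add("c", SketchRequest.Priority.HIGH);
        System.out.println(q.drain()); // [c, a, b]
    }
}
```

Without the sequence number, a priority heap gives no guarantee about the relative order of equal-priority elements.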

So how does mDelivery notify the UI thread? It simply wraps a Handler.

```java
public RequestQueue(Cache cache, Network network, int threadPoolSize) {
    this(cache, network, threadPoolSize,
            new ExecutorDelivery(new Handler(Looper.getMainLooper())));
}
```

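As you can see, ExecutorDelivery is the concrete implementation behind mDelivery: it builds a Handler on the main thread's Looper and posts its work into the main thread's MessageQueue, so the Handler runs it on the main thread. One important field has not been introduced yet: mCacheDispatcher, which is only initialized in the next step. It is the cache dispatcher, which takes Requests from the mCacheQueue introduced above.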
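Off the device there is no Looper, but the idea can be sketched with java.util.concurrent: in Volley, ExecutorDelivery wraps the Handler in an Executor whose execute() simply calls handler.post(). In the hypothetical sketch below, a single-threaded executor named "main-stand-in" plays the role of the main thread's Looper; SketchDelivery and its postResponse signature are simplified names, not Volley's exact API.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

final class SketchDelivery {
    // Stands in for new Handler(Looper.getMainLooper()): every posted task runs on one "UI" thread.
    private final ExecutorService uiThread =
            Executors.newSingleThreadExecutor(r -> new Thread(r, "main-stand-in"));

    /** Called from a worker thread; the listener runs on the "UI" thread. */
    <T> void postResponse(T response, Consumer<T> listener) {
        uiThread.execute(() -> listener.accept(response));
    }

    void shutdown() throws InterruptedException {
        uiThread.shutdown();
        uiThread.awaitTermination(5, TimeUnit.SECONDS);
    }

    public static void main(String[] args) throws InterruptedException {
        SketchDelivery delivery = new SketchDelivery();
        // The listener prints "body delivered on main-stand-in"
        Thread worker = new Thread(() -> delivery.postResponse("body",
                body -> System.out.println(body + " delivered on " + Thread.currentThread().getName())),
                "network-worker");
        worker.start();
        worker.join();
        delivery.shutdown();
    }
}
```

The worker thread never touches the listener directly; it only hands a task to the single "UI" thread, which is exactly why Volley callbacks can update views safely.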

3. Starting the polling loops

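```java
public void start() {
    // Make sure any currently running dispatchers are stopped
    this.stop();

    // One cache dispatcher thread
    this.mCacheDispatcher = new CacheDispatcher(this.mCacheQueue, this.mNetworkQueue, this.mCache, this.mDelivery);
    this.mCacheDispatcher.start();

    // threadPoolSize network dispatcher threads
    for (int i = 0; i < this.mDispatchers.length; ++i) {
        NetworkDispatcher networkDispatcher = new NetworkDispatcher(this.mNetworkQueue, this.mNetwork, this.mCache, this.mDelivery);
        this.mDispatchers[i] = networkDispatcher;
        networkDispatcher.start();
    }
}
```

The code is short, but it does a lot: it starts both the cache dispatcher and the network dispatchers. What are these dispatchers? They all extend Thread, so calling RequestQueue.start() starts a whole set of threads: one cache thread plus, by default, four network threads, five threads in total.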
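Here is a minimal sketch of that thread model, assuming plain strings as requests. SketchDispatcher and ThreadModelSketch are hypothetical; the quit() logic (set a volatile flag, then interrupt the thread out of its blocking take()) follows the same idea as Volley's dispatchers.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.PriorityBlockingQueue;

/** Hypothetical dispatcher: loops forever, blocking in take() until a request arrives or it is told to quit. */
final class SketchDispatcher extends Thread {
    private final BlockingQueue<String> queue;
    private volatile boolean quit = false;

    SketchDispatcher(String name, BlockingQueue<String> queue) {
        super(name);
        this.queue = queue;
    }

    /** Set the flag first, then interrupt a take() that may be blocking. */
    void quit() {
        quit = true;
        interrupt();
    }

    @Override
    public void run() {
        while (true) {
            String request;
            try {
                request = queue.take(); // blocks while the queue is empty
            } catch (InterruptedException e) {
                if (quit) {
                    return;
                }
                continue;
            }
            System.out.println(getName() + " handled " + request);
        }
    }
}

/** Hypothetical RequestQueue.start()/stop(): one cache thread plus N network threads. */
final class ThreadModelSketch {
    static final int NETWORK_THREADS = 4; // Volley's default network thread pool size

    final BlockingQueue<String> cacheQueue = new PriorityBlockingQueue<>();
    final BlockingQueue<String> networkQueue = new PriorityBlockingQueue<>();
    final List<SketchDispatcher> dispatchers = new ArrayList<>();

    void start() {
        stop(); // like RequestQueue.start(), shut down any previous dispatchers first
        dispatchers.add(new SketchDispatcher("cache", cacheQueue));
        for (int i = 0; i < NETWORK_THREADS; i++) {
            dispatchers.add(new SketchDispatcher("network-" + i, networkQueue));
        }
        for (SketchDispatcher d : dispatchers) {
            d.start();
        }
    }

    void stop() {
        for (SketchDispatcher d : dispatchers) {
            d.quit();
        }
        dispatchers.clear();
    }

    public static void main(String[] args) throws InterruptedException {
        ThreadModelSketch queue = new ThreadModelSketch();
        queue.start();
        System.out.println(queue.dispatchers.size() + " dispatcher threads started");
        queue.networkQueue.put("GET /a"); // one of the network threads picks this up
        Thread.sleep(100);
        queue.stop();
    }
}
```

All network threads share one queue, so whichever thread is free takes the next request.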

4. Adding a request

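```java
public <T> Request<T> add(Request<T> request) {
    // Tag the request as belonging to this queue and record it as in progress.
    request.setRequestQueue(this);
    synchronized (mCurrentRequests) {
        mCurrentRequests.add(request);
    }

    // Process requests in the order they are added.
    request.setSequence(getSequenceNumber());
    request.addMarker("add-to-queue");

    // Uncacheable requests skip the cache queue and go straight to the network.
    if (!request.shouldCache()) {
        mNetworkQueue.add(request);
        return request;
    }

    synchronized (mWaitingRequests) {
        String cacheKey = request.getCacheKey();
        if (mWaitingRequests.containsKey(cacheKey)) {
            // A request with the same cache key is already in flight: park this one.
            Queue<Request<?>> stagedRequests = mWaitingRequests.get(cacheKey);
            if (stagedRequests == null) {
                stagedRequests = new LinkedList<>();
            }
            stagedRequests.add(request);
            mWaitingRequests.put(cacheKey, stagedRequests);
            if (VolleyLog.DEBUG) {
                VolleyLog.v("Request for cacheKey=%s is in flight, putting on hold.", cacheKey);
            }
        } else {
            // A null value marks this cache key as having a request in flight.
            mWaitingRequests.put(cacheKey, null);
            mCacheQueue.add(request);
        }
        return request;
    }
}
```

I like to go straight to the key points. If the developer has disabled caching for a request, it goes directly into the network queue for the network dispatchers. If caching is enabled and mWaitingRequests has no entry for the request's cache key, the request goes into the cache queue for the cache dispatcher, and the key is put into mWaitingRequests with a null value. At first I didn't understand this either; it only became clear after stepping through it in the debugger. When the first request for a given cache key arrives, mWaitingRequests has no entry for that key, so the request goes into the cache queue. When a second request with the same key arrives, the key is already present, so the request is appended to the queue stored under that key instead. An entry for a key in mWaitingRequests means a request for that key is already being processed, so this strategy avoids duplicate network requests and saves data. You might ask: if I fire one request and fire the same one again a few seconds later, won't the second one be blocked? It is only held until the first one completes. Once a Request has been processed, finish() is called on the RequestQueue the Request holds; it removes that cache key from mWaitingRequests and moves all requests that were waiting on it into the cache queue.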

```java
<T> void finish(Request<T> request) {
    // Remove from the set of requests currently being processed.
    synchronized (mCurrentRequests) {
        mCurrentRequests.remove(request);
    }

    if (request.shouldCache()) {
        synchronized (mWaitingRequests) {
            String cacheKey = request.getCacheKey();
            Queue<Request<?>> waitingRequests = mWaitingRequests.remove(cacheKey);
            if (waitingRequests != null) {
                if (VolleyLog.DEBUG) {
                    VolleyLog.v("Releasing %d waiting requests for cacheKey=%s.",
                            waitingRequests.size(), cacheKey);
                }
                // Release the parked requests; the cache has now been filled by 'request'.
                mCacheQueue.addAll(waitingRequests);
            }
        }
    }
}
```

Now it's clear: each Request holds a reference to its RequestQueue and calls the queue's finish() once it has been processed. The released requests go back through the cache queue, where they can be served from the cache entry the first request just wrote. As for when the Request makes this call, read on.
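The add()/finish() bookkeeping can be modeled in isolation. In this hypothetical sketch a request is just an id string, the cache key is a plain URL string, and cacheQueue is a List that records the order in which requests are released to the cache dispatcher; the map logic (null value = one request in flight, non-null queue = requests parked behind it) mirrors the code above.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedList;
import java.util.List;
import java.util.Map;
import java.util.Queue;

/** Hypothetical model of RequestQueue's duplicate-request handling for cacheable requests. */
final class CoalescingSketch {
    // cacheKey -> requests parked behind the in-flight one (null = in flight, nobody waiting yet)
    private final Map<String, Queue<String>> waitingRequests = new HashMap<>();
    // Stand-in for mCacheQueue: request ids in the order they reach the cache dispatcher
    final List<String> cacheQueue = new ArrayList<>();

    synchronized void add(String requestId, String cacheKey) {
        if (waitingRequests.containsKey(cacheKey)) {
            Queue<String> staged = waitingRequests.get(cacheKey);
            if (staged == null) {
                staged = new LinkedList<>();
            }
            staged.add(requestId);
            waitingRequests.put(cacheKey, staged);
        } else {
            waitingRequests.put(cacheKey, null);
            cacheQueue.add(requestId);
        }
    }

    synchronized void finish(String cacheKey) {
        Queue<String> waiting = waitingRequests.remove(cacheKey);
        if (waiting != null) {
            cacheQueue.addAll(waiting);
        }
    }

    synchronized boolean isInFlight(String cacheKey) {
        return waitingRequests.containsKey(cacheKey);
    }

    public static void main(String[] args) {
        CoalescingSketch q = new CoalescingSketch();
        q.add("r1", "http://example.com/a");
        q.add("r2", "http://example.com/a"); // same key: parked
        q.add("r3", "http://example.com/b"); // different key: straight to the cache queue
        System.out.println(q.cacheQueue);    // [r1, r3]
        q.finish("http://example.com/a");
        System.out.println(q.cacheQueue);    // [r1, r3, r2]
    }
}
```

Only one request per key hits the network; the parked ones are replayed against the cache once it has been filled.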

5. The cache dispatcher

```java
@Override
public void run() {
    if (DEBUG) VolleyLog.v("start new dispatcher");
    Process.setThreadPriority(Process.THREAD_PRIORITY_BACKGROUND);

    // Make a blocking call to initialize the cache.
    mCache.initialize();

    while (true) {
        try {
            // Get a request from the cache triage queue, blocking until
            // at least one is available.
            final Request<?> request = mCacheQueue.take();
            request.addMarker("cache-queue-take");

            // If the request has been canceled, don't bother dispatching it.
            if (request.isCanceled()) {
                request.finish("cache-discard-canceled");
                continue;
            }

            // Attempt to retrieve this item from cache.
            Cache.Entry entry = mCache.get(request.getCacheKey());
            if (entry == null) {
                request.addMarker("cache-miss");
                // Cache miss; send off to the network dispatcher.
                mNetworkQueue.put(request);
                continue;
            }

            // If it is completely expired, just send it to the network.
            if (entry.isExpired()) {
                request.addMarker("cache-hit-expired");
                request.setCacheEntry(entry);
                mNetworkQueue.put(request);
                continue;
            }

            // We have a cache hit; parse its data for delivery back to the request.
            request.addMarker("cache-hit");
            Response<?> response = request.parseNetworkResponse(
                    new NetworkResponse(entry.data, entry.responseHeaders));
            request.addMarker("cache-hit-parsed");

            if (!entry.refreshNeeded()) {
                // Completely unexpired cache hit. Just deliver the response.
                mDelivery.postResponse(request, response);
            } else {
                // Soft-expired cache hit. We can deliver the cached response,
                // but we need to also send the request to the network for
                // refreshing.
                request.addMarker("cache-hit-refresh-needed");
                request.setCacheEntry(entry);

                // Mark the response as intermediate.
                response.intermediate = true;

                // Post the intermediate response back to the user and have
                // the delivery then forward the request along to the network.
                mDelivery.postResponse(request, response, new Runnable() {
                    @Override
                    public void run() {
                        try {
                            mNetworkQueue.put(request);
                        } catch (InterruptedException e) {
                            // Not much we can do about this.
                        }
                    }
                });
            }
        } catch (InterruptedException e) {
            // We may have been interrupted because it was time to quit.
            if (mQuit) {
                return;
            }
            continue;
        }
    }
}
```

The cache dispatcher first initializes the disk cache and then loops forever. For each Request it takes: if the request has been canceled, it is finished and dropped; if there is no cache entry (a cache miss) or the entry has fully expired, the request is put into the network queue; if the entry is still fresh, the cached data is parsed and delivered straight to the UI. If the entry is only soft-expired (refreshNeeded() returns true), the cached response is delivered to the UI as an intermediate response, and the delivery then puts the request into the network queue so a network dispatcher can refresh it. Because the loop never ends and mCacheQueue.take() is a blocking call, the thread simply waits there while there is no Request. The network dispatchers below work the same way.
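The branching above reduces to a small decision function. This is a hypothetical sketch: Entry is a simplified Cache.Entry whose ttl and softTtl are absolute timestamps in milliseconds, and now is passed in explicitly instead of reading the clock, so the result is deterministic. As in Volley, "expired" means ttl < now and "refresh needed" means softTtl < now.

```java
/** Hypothetical model of the decision CacheDispatcher makes for each request it takes. */
final class CacheTriageSketch {
    enum Outcome { DISCARD_CANCELED, NETWORK_MISS, NETWORK_EXPIRED, DELIVER_FRESH, DELIVER_AND_REFRESH }

    /** Simplified cache entry: absolute expiry timestamps in milliseconds. */
    static final class Entry {
        final long ttl;
        final long softTtl;

        Entry(long ttl, long softTtl) {
            this.ttl = ttl;
            this.softTtl = softTtl;
        }

        boolean isExpired(long now) {
            return ttl < now;
        }

        boolean refreshNeeded(long now) {
            return softTtl < now;
        }
    }

    static Outcome triage(boolean canceled, Entry entry, long now) {
        if (canceled) {
            return Outcome.DISCARD_CANCELED;     // finish() and drop it
        }
        if (entry == null) {
            return Outcome.NETWORK_MISS;         // put into the network queue
        }
        if (entry.isExpired(now)) {
            return Outcome.NETWORK_EXPIRED;      // put into the network queue
        }
        return entry.refreshNeeded(now)
                ? Outcome.DELIVER_AND_REFRESH    // deliver cached data, then refresh over the network
                : Outcome.DELIVER_FRESH;         // deliver cached data only
    }

    public static void main(String[] args) {
        long now = System.currentTimeMillis();
        System.out.println(triage(false, null, now));                                 // NETWORK_MISS
        System.out.println(triage(false, new Entry(now + 60_000, now - 1), now));     // DELIVER_AND_REFRESH
    }
}
```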

6. The network dispatcher

```java
@Override
public void run() {
    Process.setThreadPriority(Process.THREAD_PRIORITY_BACKGROUND);
    Request<?> request;
    while (true) {
        try {
            // Take a request from the queue.
            request = mQueue.take();
        } catch (InterruptedException e) {
            // We may have been interrupted because it was time to quit.
            if (mQuit) {
                return;
            }
            continue;
        }

        try {
            request.addMarker("network-queue-take");

            // If the request was cancelled already, do not perform the
            // network request.
            if (request.isCanceled()) {
                request.finish("network-discard-cancelled");
                continue;
            }

            // Tag the request (if API >= 14)
            if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.ICE_CREAM_SANDWICH) {
                TrafficStats.setThreadStatsTag(request.getTrafficStatsTag());
            }

            // Perform the network request.
            NetworkResponse networkResponse = mNetwork.performRequest(request);
            request.addMarker("network-http-complete");

            // If the server returned 304 AND we delivered a response already,
            // we're done -- don't deliver a second identical response.
            if (networkResponse.notModified && request.hasHadResponseDelivered()) {
                request.finish("not-modified");
                continue;
            }

            // Parse the response here on the worker thread.
            Response<?> response = request.parseNetworkResponse(networkResponse);
            request.addMarker("network-parse-complete");

            // Write to cache if applicable.
            // TODO: Only update cache metadata instead of entire record for 304s.
            if (request.shouldCache() && response.cacheEntry != null) {
                mCache.put(request.getCacheKey(), response.cacheEntry);
                request.addMarker("network-cache-written");
            }

            // Post the response back.
            request.markDelivered();
            mDelivery.postResponse(request, response);
        } catch (VolleyError volleyError) {
            parseAndDeliverNetworkError(request, volleyError);
        } catch (Exception e) {
            VolleyLog.e(e, "Unhandled exception %s", e.toString());
            mDelivery.postError(request, new VolleyError(e));
        }
    }
}
```

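Let's go through it step by step. First a Request is taken from the network queue; if it has been canceled, finish() is called and the request is skipped. Next, BasicNetwork performs the network request and returns a NetworkResponse, which wraps the status code, response headers and response body sent back by the server. If the status code is 304 (Not Modified), notModified is true; if, in addition, a response for this Request has already been delivered, finish() is called and the request ends there. Otherwise the server's content is parsed by the Request's parseNetworkResponse(). If the request should be cached and parsing produced a cache entry, the entry is written to the disk cache. Finally the request is marked as delivered (markDelivered() sets its "response delivered" flag to true), and the response is posted to the UI through the delivery.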
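As a compact recap of what happens after performRequest() returns, here is a hypothetical model that records, as strings, the order of NetworkDispatcher's actions. The four booleans stand in for networkResponse.notModified, request.hasHadResponseDelivered(), request.shouldCache() and response.cacheEntry != null; the sketch performs no I/O and is not Volley code.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of NetworkDispatcher's steps once the HTTP response is in hand. */
final class NetworkStepsSketch {
    static List<String> afterResponse(boolean notModified, boolean alreadyDelivered,
                                      boolean shouldCache, boolean hasCacheEntry) {
        List<String> steps = new ArrayList<>();
        if (notModified && alreadyDelivered) {
            // 304 and the cached copy was already shown: don't deliver an identical response again.
            steps.add("finish(not-modified)");
            return steps;
        }
        steps.add("parseNetworkResponse");
        if (shouldCache && hasCacheEntry) {
            steps.add("cache.put");
        }
        // The delivered flag is set before the response is posted.
        steps.add("markDelivered");
        steps.add("postResponse");
        return steps;
    }

    public static void main(String[] args) {
        System.out.println(afterResponse(false, false, true, true));
        // [parseNetworkResponse, cache.put, markDelivered, postResponse]
        System.out.println(afterResponse(true, true, true, true));
        // [finish(not-modified)]
    }
}
```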

It's worth looking at what happens when the Request's parseNetworkResponse() is called. In StringRequest, a subclass of Request, the code looks like this:

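```java
@Override
protected Response<String> parseNetworkResponse(NetworkResponse response) {
    String parsed;
    try {
        // Decode the body with the charset declared in the response headers.
        parsed = new String(response.data, HttpHeaderParser.parseCharset(response.headers));
    } catch (UnsupportedEncodingException e) {
        // Unsupported charset: fall back to the platform default.
        parsed = new String(response.data);
    }
    // The second argument becomes response.cacheEntry, which NetworkDispatcher writes to the cache.
    return Response.success(parsed, HttpHeaderParser.parseCacheHeaders(response));
}
```

The cache entry comes from HttpHeaderParser.parseCacheHeaders(), so let's look at HttpHeaderParser next: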

```java
public static Cache.Entry parseCacheHeaders(NetworkResponse response) {
    long now = System.currentTimeMillis();
    Map<String, String> headers = response.headers;

    long serverDate = 0;
    long serverExpires = 0;
    long softExpire = 0;
    long maxAge = 0;
    boolean hasCacheControl = false;
    String serverEtag = null;
    String headerValue;

    headerValue = headers.get("Date");
    if (headerValue != null) {
        serverDate = parseDateAsEpoch(headerValue);
    }

    headerValue = headers.get("Cache-Control");
    if (headerValue != null) {
        hasCacheControl = true;
        String[] tokens = headerValue.split(",");
        for (int i = 0; i < tokens.length; i++) {
            String token = tokens[i].trim();
            if (token.equals("no-cache") || token.equals("no-store")) {
                // The response must not be cached at all.
                return null;
            } else if (token.startsWith("max-age=")) {
                try {
                    maxAge = Long.parseLong(token.substring(8));
                } catch (Exception e) {
                    // Ignore a malformed max-age value.
                }
            } else if (token.equals("must-revalidate") || token.equals("proxy-revalidate")) {
                maxAge = 0;
            }
        }
    }

    headerValue = headers.get("Expires");
    if (headerValue != null) {
        serverExpires = parseDateAsEpoch(headerValue);
    }

    serverEtag = headers.get("ETag");

    // Cache-Control takes precedence over Expires.
    if (hasCacheControl) {
        softExpire = now + maxAge * 1000;
    } else if (serverDate > 0 && serverExpires >= serverDate) {
        softExpire = now + (serverExpires - serverDate);
    }

    Cache.Entry entry = new Cache.Entry();
    entry.data = response.data;
    entry.etag = serverEtag;
    entry.softTtl = softExpire;
    entry.ttl = entry.softTtl;
    entry.serverDate = serverDate;
    entry.responseHeaders = headers;
    return entry;
}
```

This method parses the HTTP response headers. HTTP defines a "Cache-Control" header, which is only present if the server is set up to send it, and an "Expires" header carrying the expiration time. When Cache-Control is present it takes precedence: no-cache or no-store means nothing is cached (the method returns null), max-age gives the lifetime in seconds, and must-revalidate or proxy-revalidate reset it to 0. Without Cache-Control, the lifetime is Expires minus Date. These rules belong to HTTP's application-layer caching and are not something Volley invented; Volley just follows them.
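The freshness rule boils down to a small pure function. The sketch below is a hypothetical, Android-free restatement of the softExpire calculation: instead of a NetworkResponse it takes the Cache-Control value plus Date and Expires already parsed to epoch milliseconds, and it uses -1 as its own "do not cache" marker where Volley returns a null entry.

```java
/** Hypothetical restatement of the softExpire calculation in parseCacheHeaders. */
final class SoftExpireSketch {
    /** Returns the soft expiry time in ms, or -1 when the response must not be cached. */
    static long softExpire(long now, String cacheControl, long serverDate, long serverExpires) {
        if (cacheControl != null) {
            long maxAge = 0;
            for (String raw : cacheControl.split(",")) {
                String token = raw.trim();
                if (token.equals("no-cache") || token.equals("no-store")) {
                    return -1; // Volley returns a null entry here
                } else if (token.startsWith("max-age=")) {
                    try {
                        maxAge = Long.parseLong(token.substring(8));
                    } catch (NumberFormatException ignored) {
                        // Malformed value: keep the previous maxAge, as the original does
                    }
                } else if (token.equals("must-revalidate") || token.equals("proxy-revalidate")) {
                    maxAge = 0;
                }
            }
            // Cache-Control wins over Expires, even when both are present
            return now + maxAge * 1000;
        }
        if (serverDate > 0 && serverExpires >= serverDate) {
            // Lifetime is the server-side difference, applied to the local clock
            return now + (serverExpires - serverDate);
        }
        return 0; // no freshness information: already expired
    }

    public static void main(String[] args) {
        System.out.println(softExpire(0L, "max-age=60", 0L, 0L)); // 60000
    }
}
```

Note that tokens are applied in order, so "max-age=60, must-revalidate" ends up with a lifetime of 0.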

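Summary

Finally, look back at the flow chart from the beginning. When a network request comes in, it is wrapped in a Request and looked up in the cache. If a cached response is found, has not expired and meets our requirements, it is delivered straight to the UI through the Handler. Otherwise the request goes into the network queue, where the network threads (four by default) take requests, execute and parse them, write the results to the disk cache, and finally deliver them to the UI. Only what you analyze yourself truly becomes yours; what you understand from other people's analysis still belongs to them, and you'll forget it after a while. If you have the time and energy to implement Volley yourself, you'll understand it even better, haha.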