Scraping Taobao pages with Selenium + PhantomJS/Chrome

First, create a file named config.py and add some basic configuration to it:

# Enable the PhantomJS disk cache
SERVICE_ARGS = ['--disk-cache=true']

# Search keyword ("Valentine's Day gifts")
KEYWORD = '情人节礼物'
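The title mentions Chrome as an alternative to PhantomJS, but the configuration above only covers PhantomJS. A minimal sketch of how comparable settings might look for headless Chrome follows. The names `CHROME_ARGS` and `make_chrome_browser` are hypothetical and not part of the original script, and the sketch assumes a reasonably recent Selenium release (one that accepts the `options=` keyword) and a matching chromedriver.

```python
# Hypothetical additions to config.py; these names are not in the original script.
CHROME_ARGS = [
    '--headless',              # run Chrome without a visible window
    '--window-size=1400,900',  # same size the main script sets for PhantomJS
]


def make_chrome_browser(arguments=CHROME_ARGS):
    """Build a Chrome WebDriver from a list of command-line arguments.

    Assumes selenium and a matching chromedriver are installed.
    """
    # Imported lazily so config.py can still be loaded without selenium.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    for argument in arguments:
        options.add_argument(argument)
    return webdriver.Chrome(options=options)
```

In the main file, `browser = make_chrome_browser()` could then stand in for the `webdriver.PhantomJS(...)` call; the rest of the script should not need to change, since it only uses the generic WebDriver interface.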

Then write the following code in the main file:

# coding:utf-8
import re
from pprint import pprint

from selenium import webdriver
from selenium.common.exceptions import TimeoutException
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
from pyquery import PyQuery as pq

from config import KEYWORD, SERVICE_ARGS

# Start PhantomJS with the service arguments from config.py
browser = webdriver.PhantomJS(service_args=SERVICE_ARGS)
# Explicit waits below give up after 10 seconds
wait = WebDriverWait(browser, 10)
browser.set_window_size(1400, 900)

def serach():
    print('Searching...')
    try:
        browser.get("https://www.taobao.com")
        # Wait for the search box to load
        search_box = wait.until(
            EC.presence_of_element_located((By.CSS_SELECTOR, '#q'))
        )
        # Wait for the search button to become clickable
        submit = wait.until(
            EC.element_to_be_clickable((By.CSS_SELECTOR, '#J_TSearchForm > div.search-button > button'))
        )
        # Type the keyword into the search box
        search_box.send_keys(KEYWORD)
        # Click the search button
        submit.click()
        # Wait for the pager element that shows the total number of pages
        total = wait.until(
            EC.presence_of_element_located((By.CSS_SELECTOR, '#mainsrp-pager > div > div > div > div.total'))
        )
        get_product()
        return total.text
    except TimeoutException:
        # WebDriverWait raises selenium's TimeoutException, not the built-in TimeoutError
        return serach()

def get_product():
    # Wait until the product items on the current page have loaded
    wait.until(EC.presence_of_element_located((By.CSS_SELECTOR, '#mainsrp-itemlist .items .item')))
    html = browser.page_source
    doc = pq(html)
    items = doc('#mainsrp-itemlist .items .item').items()
    for item in items:
        product = {
            'price': item.find('.price').text(),
            # Drop the three-character suffix that follows the deal count
            'deal': item.find('.deal-cnt').text()[:-3],
            'name': item.find('.title').text(),
            'shop': item.find('.shop').text(),
            'location': item.find('.location').text()
        }
        pprint(product)

def next_page(page_count):
    print('Turning to page', page_count)
    try:
        # Wait for the page-number input box to load
        page_input = wait.until(
            EC.presence_of_element_located((By.CSS_SELECTOR, '#mainsrp-pager > div > div > div > div.form > input'))
        )
        # Wait for the confirm button to become clickable
        submit = wait.until(
            EC.element_to_be_clickable(
                (By.CSS_SELECTOR, '#mainsrp-pager > div > div > div > div.form > span.btn.J_Submit'))
        )
        page_input.clear()
        page_input.send_keys(str(page_count))
        submit.click()
        # Wait until the highlighted pager item shows the requested page number
        wait.until(EC.text_to_be_present_in_element(
            (By.CSS_SELECTOR, '#mainsrp-pager > div > div > div > ul > li.item.active > span'), str(page_count)
        ))
        get_product()
    except TimeoutException:
        # WebDriverWait raises selenium's TimeoutException, not the built-in TimeoutError
        next_page(page_count)

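def main():
    try:
        total = serach()
        # Extract the page count from the pager text
        total = int(re.compile(r'(\d+)').search(total).group(1))
        # print(total)
        # Page 1 was already scraped by serach(), so start from page 2
        for i in range(2, total + 1):
            next_page(i)
    except Exception as e:
        print('An error occurred:', e)
    finally:
        # quit() ends the WebDriver session and shuts down the browser process
        browser.quit()

if __name__ == '__main__':
    main()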
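The search and paging functions above retry by calling themselves whenever a wait times out, so a page that never loads would recurse without limit. One possible alternative is a small helper that retries a bounded number of times and then re-raises. The sketch below is only an illustration: `retry` and `flaky` are hypothetical names, and the built-in `TimeoutError` stands in for selenium's `TimeoutException` so the example runs without a browser.

```python
import time


def retry(action, exceptions, attempts=3, delay=1.0):
    """Call action() until it succeeds or the attempts run out.

    `exceptions` is the exception type (or tuple of types) that triggers a retry;
    the last failure is re-raised once all attempts are used up.
    """
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except exceptions:
            if attempt == attempts:
                raise
            time.sleep(delay)


# Stand-in for a flaky page load: fails twice, then succeeds.
calls = {'count': 0}


def flaky():
    calls['count'] += 1
    if calls['count'] < 3:
        raise TimeoutError('simulated timeout')
    return 'ok'


result = retry(flaky, TimeoutError, attempts=5, delay=0)
```

In the scraper this might be used as `retry(lambda: next_page(i), TimeoutException)`, provided the page functions let the exception propagate instead of recursing.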

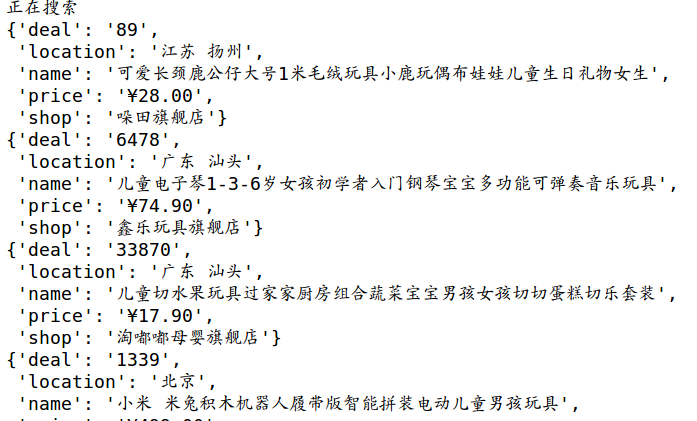

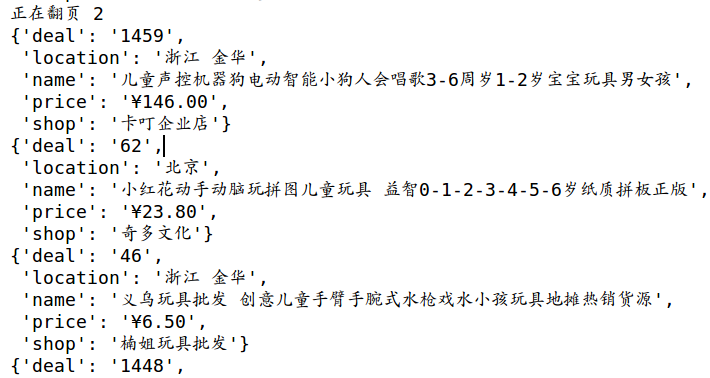

部分效果图如下:
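The script pulls two numbers out of scraped text: the page count, via a regular expression, and the deal count, by slicing off a fixed three-character suffix. A single helper could cover both cases and tolerate missing text. This is a minimal sketch; `first_int` is a hypothetical name, and the sample strings are assumptions about what the page text looks like, not captured output.

```python
import re


def first_int(text, default=None):
    """Return the first run of digits in text as an int, or default if there is none."""
    match = re.search(r'\d+', text)
    return int(match.group()) if match else default


# Assumed sample strings, for illustration only.
page_total = first_int('共 100 页，')
deal_count = first_int('1234人付款')
missing = first_int('', default=0)
```

Unlike the fixed `[:-3]` slice, this does not depend on the suffix length, so it keeps working if the label after the number changes.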