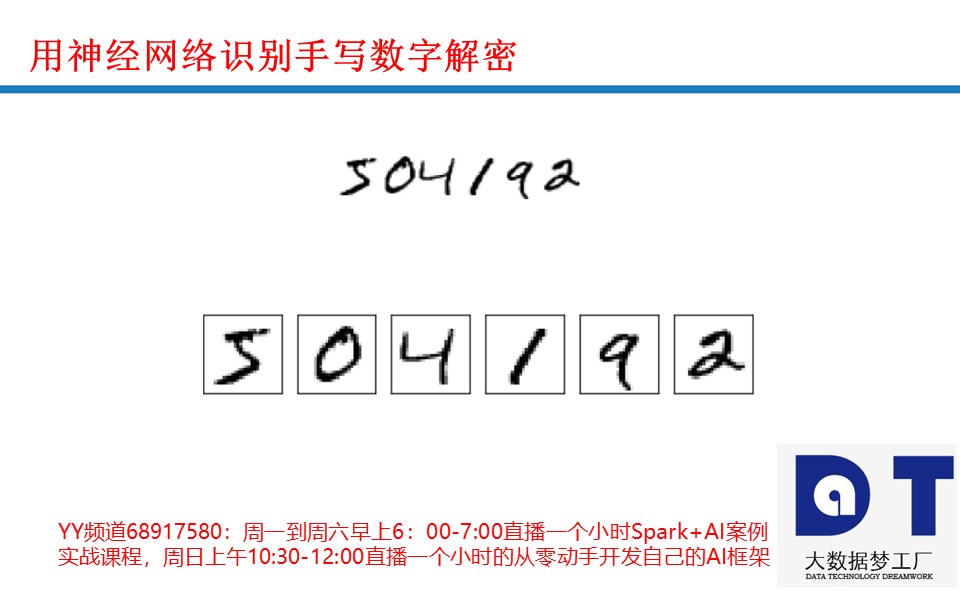

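Wang Jialin's Artificial Intelligence (AI) course, Lesson 10: Inside handwritten-digit recognition with a neural network (instructor's WeChat: 13928463918)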

Homework: run the PyTorch example.

- Install PyTorch:

```
C:\Users\lenovo>conda install pytorch -c pytorch
Fetching package metadata .................
Solving package specifications: .

Package plan for installation in environment G:\ProgramData\Anaconda3:

The following NEW packages will be INSTALLED:

    pytorch: 0.4.0-py36_cuda80_cudnn7he774522_1 pytorch

Proceed ([y]/n)? y

pytorch-0.4.0- 100% |###############################| Time: 0:12:59 711.47 kB/s
```

- Install torchvision:

```
C:\Users\lenovo>pip install --no-deps torchvision
Collecting torchvision
  Cache entry deserialization failed, entry ignored
  Cache entry deserialization failed, entry ignored
  Downloading https://files.pythonhosted.org/packages/ca/0d/f00b2885711e08bd71242ebe7b96561e6f6d01fdb4b9dcf4d37e2e13c5e1/torchvision-0.2.1-py2.py3-none-any.whl (54kB)
    100% |████████████████████████████████| 61kB 76kB/s
Installing collected packages: torchvision
Successfully installed torchvision-0.2.1
You are using pip version 9.0.1, however version 10.0.1 is available.
You should consider upgrading via the 'python -m pip install --upgrade pip' command.

(g:\ProgramData\Anaconda3) C:\Users\lenovo>pip install --no-deps torchvision
Collecting torchvision
  Using cached https://files.pythonhosted.org/packages/ca/0d/f00b2885711e08bd71242ebe7b96561e6f6d01fdb4b9dcf4d37e2e13c5e1/torchvision-0.2.1-py2.py3-none-any.whl
Installing collected packages: torchvision
Successfully installed torchvision-0.2.1
```
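Before you run the example, you may want to confirm that both packages import in the same interpreter. The sketch below is a minimal check, not part of the course material. The helper name `check_packages` is hypothetical. It reads each module's `__version__` attribute and falls back to `"unknown"` when a module does not define one. It sticks to Python 3.6 syntax so it should also run in the `py36` environment shown above.

```python
import importlib


def check_packages(names):
    """Map each module name to its version string, or None if it cannot be imported.

    Hypothetical helper for illustration; "unknown" means the module imported
    but does not expose a __version__ attribute.
    """
    report = {}
    for name in names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            report[name] = None
        else:
            report[name] = getattr(module, "__version__", "unknown")
    return report


if __name__ == "__main__":
    report = check_packages(("torch", "torchvision"))
    for name, version in report.items():
        print("{}: {}".format(name, version if version else "NOT INSTALLED"))
    if report.get("torch"):
        import torch
        # Same test the example uses to decide between "cuda" and "cpu".
        print("CUDA available:", torch.cuda.is_available())
```

If `CUDA available` prints `False`, the example still runs. It falls back to the CPU, only more slowly.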

- PyTorch example code:
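The example's `x.view(-1, 320)` and `nn.Linear(320, 50)` both depend on how large the feature maps are after the two convolution and pooling stages. The pure-Python sketch below traces one 28x28 MNIST image through those stages. It uses the standard output-size formula for unpadded convolution and pooling, assuming dilation 1 and a pooling stride equal to the window size, which is PyTorch's default for `max_pool2d`. The helper names are hypothetical.

```python
def conv2d_out(size, kernel, stride=1, padding=0):
    """Output side length of a square convolution or pooling window (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def net_feature_size(image=28):
    """Number of values per image that reach fc1 in the example's Net."""
    s = conv2d_out(image, kernel=5)        # conv1, kernel 5:  28 -> 24
    s = conv2d_out(s, kernel=2, stride=2)  # max_pool2d(.., 2): 24 -> 12
    s = conv2d_out(s, kernel=5)            # conv2, kernel 5:  12 -> 8
    s = conv2d_out(s, kernel=2, stride=2)  # max_pool2d(.., 2): 8 -> 4
    channels = 20                          # conv2 produces 20 feature maps
    return channels * s * s


print(net_feature_size())  # 320
```

Dropout (`conv2_drop`) does not change the shape, so it does not appear in the trace. If you change the input size or a kernel size, the `320` in the model has to change with it.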

```python
from __future__ import print_function
import argparse
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torchvision import datasets, transforms


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)   # 1x28x28  -> 10x24x24
        self.conv2 = nn.Conv2d(10, 20, kernel_size=5)  # 10x12x12 -> 20x8x8
        self.conv2_drop = nn.Dropout2d()
        self.fc1 = nn.Linear(320, 50)
        self.fc2 = nn.Linear(50, 10)                   # one output per digit 0-9

    def forward(self, x):
        x = F.relu(F.max_pool2d(self.conv1(x), 2))
        x = F.relu(F.max_pool2d(self.conv2_drop(self.conv2(x)), 2))
        x = x.view(-1, 320)  # flatten 20 channels x 4 x 4
        x = F.relu(self.fc1(x))
        x = F.dropout(x, training=self.training)
        x = self.fc2(x)
        return F.log_softmax(x, dim=1)


def train(args, model, device, train_loader, optimizer, epoch):
    model.train()  # enable dropout
    for batch_idx, (data, target) in enumerate(train_loader):
        data, target = data.to(device), target.to(device)
        optimizer.zero_grad()
        output = model(data)
        loss = F.nll_loss(output, target)  # log_softmax + nll_loss = cross-entropy
        loss.backward()
        optimizer.step()
        if batch_idx % args.log_interval == 0:
            print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                epoch, batch_idx * len(data), len(train_loader.dataset),
                100. * batch_idx / len(train_loader), loss.item()))


def test(args, model, device, test_loader):
    model.eval()  # disable dropout
    test_loss = 0
    correct = 0
    with torch.no_grad():
        for data, target in test_loader:
            data, target = data.to(device), target.to(device)
            output = model(data)
            # sum up batch loss (PyTorch >= 0.4.1 uses reduction='sum' instead)
            test_loss += F.nll_loss(output, target, size_average=False).item()
            pred = output.max(1, keepdim=True)[1]  # get the index of the max log-probability
            correct += pred.eq(target.view_as(pred)).sum().item()

    test_loss /= len(test_loader.dataset)
    print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
        test_loss, correct, len(test_loader.dataset),
        100. * correct / len(test_loader.dataset)))


def main():
    # Training settings
    parser = argparse.ArgumentParser(description='PyTorch MNIST Example')
    parser.add_argument('--batch-size', type=int, default=64, metavar='N',
                        help='input batch size for training (default: 64)')
    parser.add_argument('--test-batch-size', type=int, default=1000, metavar='N',
                        help='input batch size for testing (default: 1000)')
    parser.add_argument('--epochs', type=int, default=10, metavar='N',
                        help='number of epochs to train (default: 10)')
    parser.add_argument('--lr', type=float, default=0.01, metavar='LR',
                        help='learning rate (default: 0.01)')
    parser.add_argument('--momentum', type=float, default=0.5, metavar='M',
                        help='SGD momentum (default: 0.5)')
    parser.add_argument('--no-cuda', action='store_true', default=False,
                        help='disables CUDA training')
    parser.add_argument('--seed', type=int, default=1, metavar='S',
                        help='random seed (default: 1)')
    parser.add_argument('--log-interval', type=int, default=10, metavar='N',
                        help='how many batches to wait before logging training status')
    args = parser.parse_args()
    use_cuda = not args.no_cuda and torch.cuda.is_available()

    torch.manual_seed(args.seed)

    device = torch.device("cuda" if use_cuda else "cpu")

    kwargs = {'num_workers': 1, 'pin_memory': True} if use_cuda else {}
    train_loader = torch.utils.data.DataLoader(
        datasets.MNIST('../data', train=True, download=True,
                       transform=transforms.Compose([
                           transforms.ToTensor(),
                           transforms.Normalize((0.1307,), (0.3081,))  # MNIST mean and std
                       ])),
        batch_size=args.batch_size, shuffle=True, **kwargs)
    test_loader = torch.utils.data.DataLoader(
        datasets.MNIST('../data', train=False, transform=transforms.Compose([
                           transforms.ToTensor(),
                           transforms.Normalize((0.1307,), (0.3081,))
                       ])),
        batch_size=args.test_batch_size, shuffle=True, **kwargs)

    model = Net().to(device)
    optimizer = optim.SGD(model.parameters(), lr=args.lr, momentum=args.momentum)

    for epoch in range(1, args.epochs + 1):
        train(args, model, device, train_loader, optimizer, epoch)
        test(args, model, device, test_loader)


if __name__ == '__main__':
    main()
```

- The output is as follows:

...

Train Epoch: 5 [53760/60000 (90%)] Loss: 0.512370

Train Epoch: 5 [54400/60000 (91%)] Loss: 0.238426

Train Epoch: 5 [55040/60000 (92%)] Loss: 0.179841

Train Epoch: 5 [55680/60000 (93%)] Loss: 0.132503

Train Epoch: 5 [56320/60000 (94%)] Loss: 0.122262

Train Epoch: 5 [56960/60000 (95%)] Loss: 0.151063

Train Epoch: 5 [57600/60000 (96%)] Loss: 0.268425

Train Epoch: 5 [58240/60000 (97%)] Loss: 0.142108

Train Epoch: 5 [58880/60000 (98%)] Loss: 0.174616

Train Epoch: 5 [59520/60000 (99%)] Loss: 0.185637

Test set: Average loss: 0.0775, Accuracy: 9766/10000 (98%)

Train Epoch: 6 [0/60000 (0%)] Loss: 0.140676

Train Epoch: 6 [640/60000 (1%)] Loss: 0.129360

Train Epoch: 6 [1280/60000 (2%)] Loss: 0.343696

Train Epoch: 6 [1920/60000 (3%)] Loss: 0.238283

Train Epoch: 6 [2560/60000 (4%)] Loss: 0.285906

Train Epoch: 6 [3200/60000 (5%)] Loss: 0.192555

Train Epoch: 6 [3840/60000 (6%)] Loss: 0.146404

Train Epoch: 6 [4480/60000 (7%)] Loss: 0.370778

Train Epoch: 6 [5120/60000 (9%)] Loss: 0.124215

Train Epoch: 6 [5760/60000 (10%)] Loss: 0.326730

Train Epoch: 6 [6400/60000 (11%)] Loss: 0.238912

Train Epoch: 6 [7040/60000 (12%)] Loss: 0.049849

Train Epoch: 6 [7680/60000 (13%)] Loss: 0.275858

Train Epoch: 6 [8320/60000 (14%)] Loss: 0.430975

Train Epoch: 6 [8960/60000 (15%)] Loss: 0.186454

Train Epoch: 6 [9600/60000 (16%)] Loss: 0.289752

Train Epoch: 6 [10240/60000 (17%)] Loss: 0.180624

Train Epoch: 6 [10880/60000 (18%)] Loss: 0.279719

Train Epoch: 6 [11520/60000 (19%)] Loss: 0.119862

Train Epoch: 6 [12160/60000 (20%)] Loss: 0.190458

Train Epoch: 6 [12800/60000 (21%)] Loss: 0.150031

Train Epoch: 6 [13440/60000 (22%)] Loss: 0.491369

Train Epoch: 6 [14080/60000 (23%)] Loss: 0.322809

Train Epoch: 6 [14720/60000 (25%)] Loss: 0.506018

Train Epoch: 6 [15360/60000 (26%)] Loss: 0.098282

Train Epoch: 6 [16000/60000 (27%)] Loss: 0.244221

Train Epoch: 6 [16640/60000 (28%)] Loss: 0.135457

Train Epoch: 6 [17280/60000 (29%)] Loss: 0.255323

Train Epoch: 6 [17920/60000 (30%)] Loss: 0.261518

Train Epoch: 6 [18560/60000 (31%)] Loss: 0.181049

Train Epoch: 6 [19200/60000 (32%)] Loss: 0.087846

Train Epoch: 6 [19840/60000 (33%)] Loss: 0.130504

Train Epoch: 6 [20480/60000 (34%)] Loss: 0.139496

Train Epoch: 6 [21120/60000 (35%)] Loss: 0.214906

Train Epoch: 6 [21760/60000 (36%)] Loss: 0.485395

Train Epoch: 6 [22400/60000 (37%)] Loss: 0.180646

Train Epoch: 6 [23040/60000 (38%)] Loss: 0.268171

Train Epoch: 6 [23680/60000 (39%)] Loss: 0.286735

Train Epoch: 6 [24320/60000 (41%)] Loss: 0.204765

Train Epoch: 6 [24960/60000 (42%)] Loss: 0.242695

Train Epoch: 6 [25600/60000 (43%)] Loss: 0.114012

Train Epoch: 6 [26240/60000 (44%)] Loss: 0.361593

Train Epoch: 6 [26880/60000 (45%)] Loss: 0.135894

Train Epoch: 6 [27520/60000 (46%)] Loss: 0.155844

Train Epoch: 6 [28160/60000 (47%)] Loss: 0.127069

Train Epoch: 6 [28800/60000 (48%)] Loss: 0.362843

Train Epoch: 6 [29440/60000 (49%)] Loss: 0.205102

Train Epoch: 6 [30080/60000 (50%)] Loss: 0.116812

Train Epoch: 6 [30720/60000 (51%)] Loss: 0.288545

Train Epoch: 6 [31360/60000 (52%)] Loss: 0.383816

Train Epoch: 6 [32000/60000 (53%)] Loss: 0.250747

Train Epoch: 6 [32640/60000 (54%)] Loss: 0.322820

Train Epoch: 6 [33280/60000 (55%)] Loss: 0.151687

Train Epoch: 6 [33920/60000 (57%)] Loss: 0.255547

Train Epoch: 6 [34560/60000 (58%)] Loss: 0.139503

Train Epoch: 6 [35200/60000 (59%)] Loss: 0.320120

Train Epoch: 6 [35840/60000 (60%)] Loss: 0.242631

Train Epoch: 6 [36480/60000 (61%)] Loss: 0.154654

Train Epoch: 6 [37120/60000 (62%)] Loss: 0.234150

Train Epoch: 6 [37760/60000 (63%)] Loss: 0.190037

Train Epoch: 6 [38400/60000 (64%)] Loss: 0.123296

Train Epoch: 6 [39040/60000 (65%)] Loss: 0.437532

Train Epoch: 6 [39680/60000 (66%)] Loss: 0.321334

Train Epoch: 6 [40320/60000 (67%)] Loss: 0.196731

Train Epoch: 6 [40960/60000 (68%)] Loss: 0.288327

Train Epoch: 6 [41600/60000 (69%)] Loss: 0.176173

Train Epoch: 6 [42240/60000 (70%)] Loss: 0.138010

Train Epoch: 6 [42880/60000 (71%)] Loss: 0.225764

Train Epoch: 6 [43520/60000 (72%)] Loss: 0.140000

Train Epoch: 6 [44160/60000 (74%)] Loss: 0.428833

Train Epoch: 6 [44800/60000 (75%)] Loss: 0.183989

Train Epoch: 6 [45440/60000 (76%)] Loss: 0.228906

Train Epoch: 6 [46080/60000 (77%)] Loss: 0.094086

Train Epoch: 6 [46720/60000 (78%)] Loss: 0.169911

Train Epoch: 6 [47360/60000 (79%)] Loss: 0.132307

Train Epoch: 6 [48000/60000 (80%)] Loss: 0.101523

Train Epoch: 6 [48640/60000 (81%)] Loss: 0.157610

Train Epoch: 6 [49280/60000 (82%)] Loss: 0.246277

Train Epoch: 6 [49920/60000 (83%)] Loss: 0.116810

Train Epoch: 6 [50560/60000 (84%)] Loss: 0.154430

Train Epoch: 6 [51200/60000 (85%)] Loss: 0.517138

Train Epoch: 6 [51840/60000 (86%)] Loss: 0.248686

Train Epoch: 6 [52480/60000 (87%)] Loss: 0.197340

Train Epoch: 6 [53120/60000 (88%)] Loss: 0.115430

Train Epoch: 6 [53760/60000 (90%)] Loss: 0.107924

Train Epoch: 6 [54400/60000 (91%)] Loss: 0.137767

Train Epoch: 6 [55040/60000 (92%)] Loss: 0.088935

Train Epoch: 6 [55680/60000 (93%)] Loss: 0.518349

Train Epoch: 6 [56320/60000 (94%)] Loss: 0.436731

Train Epoch: 6 [56960/60000 (95%)] Loss: 0.214996

Train Epoch: 6 [57600/60000 (96%)] Loss: 0.177371

Train Epoch: 6 [58240/60000 (97%)] Loss: 0.313230

Train Epoch: 6 [58880/60000 (98%)] Loss: 0.207640

Train Epoch: 6 [59520/60000 (99%)] Loss: 0.120605

Test set: Average loss: 0.0664, Accuracy: 9809/10000 (98%)

Train Epoch: 7 [0/60000 (0%)] Loss: 0.132107

Train Epoch: 7 [640/60000 (1%)] Loss: 0.166791

Train Epoch: 7 [1280/60000 (2%)] Loss: 0.249678

Train Epoch: 7 [1920/60000 (3%)] Loss: 0.224326

Train Epoch: 7 [2560/60000 (4%)] Loss: 0.135910

Train Epoch: 7 [3200/60000 (5%)] Loss: 0.158317

Train Epoch: 7 [3840/60000 (6%)] Loss: 0.248836

Train Epoch: 7 [4480/60000 (7%)] Loss: 0.170678

Train Epoch: 7 [5120/60000 (9%)] Loss: 0.194215

Train Epoch: 7 [5760/60000 (10%)] Loss: 0.164261

Train Epoch: 7 [6400/60000 (11%)] Loss: 0.226558

Train Epoch: 7 [7040/60000 (12%)] Loss: 0.186963

Train Epoch: 7 [7680/60000 (13%)] Loss: 0.245857

Train Epoch: 7 [8320/60000 (14%)] Loss: 0.221825

Train Epoch: 7 [8960/60000 (15%)] Loss: 0.129389

Train Epoch: 7 [9600/60000 (16%)] Loss: 0.163193

Train Epoch: 7 [10240/60000 (17%)] Loss: 0.353852

Train Epoch: 7 [10880/60000 (18%)] Loss: 0.274763

Train Epoch: 7 [11520/60000 (19%)] Loss: 0.246091

Train Epoch: 7 [12160/60000 (20%)] Loss: 0.304186

Train Epoch: 7 [12800/60000 (21%)] Loss: 0.203195

Train Epoch: 7 [13440/60000 (22%)] Loss: 0.238596

Train Epoch: 7 [14080/60000 (23%)] Loss: 0.275092

Train Epoch: 7 [14720/60000 (25%)] Loss: 0.198658

Train Epoch: 7 [15360/60000 (26%)] Loss: 0.170903

Train Epoch: 7 [16000/60000 (27%)] Loss: 0.187789

Train Epoch: 7 [16640/60000 (28%)] Loss: 0.128413

Train Epoch: 7 [17280/60000 (29%)] Loss: 0.096226

Train Epoch: 7 [17920/60000 (30%)] Loss: 0.329999

Train Epoch: 7 [18560/60000 (31%)] Loss: 0.187559

Train Epoch: 7 [19200/60000 (32%)] Loss: 0.334050

Train Epoch: 7 [19840/60000 (33%)] Loss: 0.204843

Train Epoch: 7 [20480/60000 (34%)] Loss: 0.217350

Train Epoch: 7 [21120/60000 (35%)] Loss: 0.177834

Train Epoch: 7 [21760/60000 (36%)] Loss: 0.133202

Train Epoch: 7 [22400/60000 (37%)] Loss: 0.311163

Train Epoch: 7 [23040/60000 (38%)] Loss: 0.208412

Train Epoch: 7 [23680/60000 (39%)] Loss: 0.228364

Train Epoch: 7 [24320/60000 (41%)] Loss: 0.194834

Train Epoch: 7 [24960/60000 (42%)] Loss: 0.272166

Train Epoch: 7 [25600/60000 (43%)] Loss: 0.149569

Train Epoch: 7 [26240/60000 (44%)] Loss: 0.174903

Train Epoch: 7 [26880/60000 (45%)] Loss: 0.195625

Train Epoch: 7 [27520/60000 (46%)] Loss: 0.305712

Train Epoch: 7 [28160/60000 (47%)] Loss: 0.219578

Train Epoch: 7 [28800/60000 (48%)] Loss: 0.231685

Train Epoch: 7 [29440/60000 (49%)] Loss: 0.241889

Train Epoch: 7 [30080/60000 (50%)] Loss: 0.277982

Train Epoch: 7 [30720/60000 (51%)] Loss: 0.289829

Train Epoch: 7 [31360/60000 (52%)] Loss: 0.179086

Train Epoch: 7 [32000/60000 (53%)] Loss: 0.385793

Train Epoch: 7 [32640/60000 (54%)] Loss: 0.284146

Train Epoch: 7 [33280/60000 (55%)] Loss: 0.071324

Train Epoch: 7 [33920/60000 (57%)] Loss: 0.154465

Train Epoch: 7 [34560/60000 (58%)] Loss: 0.148975

Train Epoch: 7 [35200/60000 (59%)] Loss: 0.174513

Train Epoch: 7 [35840/60000 (60%)] Loss: 0.247944

Train Epoch: 7 [36480/60000 (61%)] Loss: 0.309157

Train Epoch: 7 [37120/60000 (62%)] Loss: 0.301235

Train Epoch: 7 [37760/60000 (63%)] Loss: 0.145940

Train Epoch: 7 [38400/60000 (64%)] Loss: 0.244258

Train Epoch: 7 [39040/60000 (65%)] Loss: 0.225267

Train Epoch: 7 [39680/60000 (66%)] Loss: 0.161202

Train Epoch: 7 [40320/60000 (67%)] Loss: 0.088899

Train Epoch: 7 [40960/60000 (68%)] Loss: 0.119786

Train Epoch: 7 [41600/60000 (69%)] Loss: 0.271811

Train Epoch: 7 [42240/60000 (70%)] Loss: 0.250824

Train Epoch: 7 [42880/60000 (71%)] Loss: 0.333640

Train Epoch: 7 [43520/60000 (72%)] Loss: 0.115780

Train Epoch: 7 [44160/60000 (74%)] Loss: 0.169147

Train Epoch: 7 [44800/60000 (75%)] Loss: 0.132361

Train Epoch: 7 [45440/60000 (76%)] Loss: 0.087468

Train Epoch: 7 [46080/60000 (77%)] Loss: 0.154758

Train Epoch: 7 [46720/60000 (78%)] Loss: 0.078850

Train Epoch: 7 [47360/60000 (79%)] Loss: 0.292808

Train Epoch: 7 [48000/60000 (80%)] Loss: 0.168954

Train Epoch: 7 [48640/60000 (81%)] Loss: 0.219281

Train Epoch: 7 [49280/60000 (82%)] Loss: 0.127809

Train Epoch: 7 [49920/60000 (83%)] Loss: 0.099499

Train Epoch: 7 [50560/60000 (84%)] Loss: 0.107390

Train Epoch: 7 [51200/60000 (85%)] Loss: 0.347860

Train Epoch: 7 [51840/60000 (86%)] Loss: 0.168160

Train Epoch: 7 [52480/60000 (87%)] Loss: 0.181870

Train Epoch: 7 [53120/60000 (88%)] Loss: 0.186288

Train Epoch: 7 [53760/60000 (90%)] Loss: 0.115913

Train Epoch: 7 [54400/60000 (91%)] Loss: 0.550203

Train Epoch: 7 [55040/60000 (92%)] Loss: 0.118155

Train Epoch: 7 [55680/60000 (93%)] Loss: 0.281515

Train Epoch: 7 [56320/60000 (94%)] Loss: 0.169372

Train Epoch: 7 [56960/60000 (95%)] Loss: 0.367320

Train Epoch: 7 [57600/60000 (96%)] Loss: 0.121198

Train Epoch: 7 [58240/60000 (97%)] Loss: 0.158715

Train Epoch: 7 [58880/60000 (98%)] Loss: 0.178910

Train Epoch: 7 [59520/60000 (99%)] Loss: 0.437783

Test set: Average loss: 0.0608, Accuracy: 9809/10000 (98%)

Train Epoch: 8 [0/60000 (0%)] Loss: 0.282619

Train Epoch: 8 [640/60000 (1%)] Loss: 0.207113

Train Epoch: 8 [1280/60000 (2%)] Loss: 0.158446

Train Epoch: 8 [1920/60000 (3%)] Loss: 0.187004

Train Epoch: 8 [2560/60000 (4%)] Loss: 0.218975

Train Epoch: 8 [3200/60000 (5%)] Loss: 0.153180

Train Epoch: 8 [3840/60000 (6%)] Loss: 0.345649

Train Epoch: 8 [4480/60000 (7%)] Loss: 0.151264

Train Epoch: 8 [5120/60000 (9%)] Loss: 0.227515

Train Epoch: 8 [5760/60000 (10%)] Loss: 0.168394

Train Epoch: 8 [6400/60000 (11%)] Loss: 0.091970

Train Epoch: 8 [7040/60000 (12%)] Loss: 0.155689

Train Epoch: 8 [7680/60000 (13%)] Loss: 0.288804

Train Epoch: 8 [8320/60000 (14%)] Loss: 0.153606

Train Epoch: 8 [8960/60000 (15%)] Loss: 0.216723

Train Epoch: 8 [9600/60000 (16%)] Loss: 0.208320

Train Epoch: 8 [10240/60000 (17%)] Loss: 0.197067

Train Epoch: 8 [10880/60000 (18%)] Loss: 0.187951

Train Epoch: 8 [11520/60000 (19%)] Loss: 0.050377

Train Epoch: 8 [12160/60000 (20%)] Loss: 0.227952

Train Epoch: 8 [12800/60000 (21%)] Loss: 0.366510

Train Epoch: 8 [13440/60000 (22%)] Loss: 0.257651

Train Epoch: 8 [14080/60000 (23%)] Loss: 0.172555

Train Epoch: 8 [14720/60000 (25%)] Loss: 0.160587

Train Epoch: 8 [15360/60000 (26%)] Loss: 0.111184

Train Epoch: 8 [16000/60000 (27%)] Loss: 0.292117

Train Epoch: 8 [16640/60000 (28%)] Loss: 0.153204

Train Epoch: 8 [17280/60000 (29%)] Loss: 0.172316

Train Epoch: 8 [17920/60000 (30%)] Loss: 0.162212

Train Epoch: 8 [18560/60000 (31%)] Loss: 0.223066

Train Epoch: 8 [19200/60000 (32%)] Loss: 0.059182

Train Epoch: 8 [19840/60000 (33%)] Loss: 0.251611

Train Epoch: 8 [20480/60000 (34%)] Loss: 0.296954

Train Epoch: 8 [21120/60000 (35%)] Loss: 0.172164

Train Epoch: 8 [21760/60000 (36%)] Loss: 0.517990

Train Epoch: 8 [22400/60000 (37%)] Loss: 0.195220

Train Epoch: 8 [23040/60000 (38%)] Loss: 0.374590

Train Epoch: 8 [23680/60000 (39%)] Loss: 0.192233

Train Epoch: 8 [24320/60000 (41%)] Loss: 0.225816

Train Epoch: 8 [24960/60000 (42%)] Loss: 0.220230

Train Epoch: 8 [25600/60000 (43%)] Loss: 0.181024

Train Epoch: 8 [26240/60000 (44%)] Loss: 0.103231

Train Epoch: 8 [26880/60000 (45%)] Loss: 0.252104

Train Epoch: 8 [27520/60000 (46%)] Loss: 0.168575

Train Epoch: 8 [28160/60000 (47%)] Loss: 0.150211

Train Epoch: 8 [28800/60000 (48%)] Loss: 0.144782

Train Epoch: 8 [29440/60000 (49%)] Loss: 0.138220

Train Epoch: 8 [30080/60000 (50%)] Loss: 0.308417

Train Epoch: 8 [30720/60000 (51%)] Loss: 0.112789

Train Epoch: 8 [31360/60000 (52%)] Loss: 0.130063

Train Epoch: 8 [32000/60000 (53%)] Loss: 0.156165

Train Epoch: 8 [32640/60000 (54%)] Loss: 0.301132

Train Epoch: 8 [33280/60000 (55%)] Loss: 0.147178

Train Epoch: 8 [33920/60000 (57%)] Loss: 0.191041

Train Epoch: 8 [34560/60000 (58%)] Loss: 0.090890

Train Epoch: 8 [35200/60000 (59%)] Loss: 0.089310

Train Epoch: 8 [35840/60000 (60%)] Loss: 0.069493

Train Epoch: 8 [36480/60000 (61%)] Loss: 0.111256

Train Epoch: 8 [37120/60000 (62%)] Loss: 0.177840

Train Epoch: 8 [37760/60000 (63%)] Loss: 0.253496

Train Epoch: 8 [38400/60000 (64%)] Loss: 0.160639

Train Epoch: 8 [39040/60000 (65%)] Loss: 0.246134

Train Epoch: 8 [39680/60000 (66%)] Loss: 0.103705

Train Epoch: 8 [40320/60000 (67%)] Loss: 0.079531

Train Epoch: 8 [40960/60000 (68%)] Loss: 0.162642

Train Epoch: 8 [41600/60000 (69%)] Loss: 0.207469

Train Epoch: 8 [42240/60000 (70%)] Loss: 0.161166

Train Epoch: 8 [42880/60000 (71%)] Loss: 0.100684

Train Epoch: 8 [43520/60000 (72%)] Loss: 0.273587

Train Epoch: 8 [44160/60000 (74%)] Loss: 0.102385

Train Epoch: 8 [44800/60000 (75%)] Loss: 0.249855

Train Epoch: 8 [45440/60000 (76%)] Loss: 0.160454

Train Epoch: 8 [46080/60000 (77%)] Loss: 0.243182

Train Epoch: 8 [46720/60000 (78%)] Loss: 0.283987

Train Epoch: 8 [47360/60000 (79%)] Loss: 0.146208

Train Epoch: 8 [48000/60000 (80%)] Loss: 0.065463

Train Epoch: 8 [48640/60000 (81%)] Loss: 0.387891

Train Epoch: 8 [49280/60000 (82%)] Loss: 0.252187

Train Epoch: 8 [49920/60000 (83%)] Loss: 0.134607

Train Epoch: 8 [50560/60000 (84%)] Loss: 0.094168

Train Epoch: 8 [51200/60000 (85%)] Loss: 0.399077

Train Epoch: 8 [51840/60000 (86%)] Loss: 0.132705

Train Epoch: 8 [52480/60000 (87%)] Loss: 0.486581

Train Epoch: 8 [53120/60000 (88%)] Loss: 0.324304

Train Epoch: 8 [53760/60000 (90%)] Loss: 0.135775

Train Epoch: 8 [54400/60000 (91%)] Loss: 0.074069

Train Epoch: 8 [55040/60000 (92%)] Loss: 0.278384

Train Epoch: 8 [55680/60000 (93%)] Loss: 0.198904

Train Epoch: 8 [56320/60000 (94%)] Loss: 0.115595

Train Epoch: 8 [56960/60000 (95%)] Loss: 0.308912

Train Epoch: 8 [57600/60000 (96%)] Loss: 0.303760

Train Epoch: 8 [58240/60000 (97%)] Loss: 0.119735

Train Epoch: 8 [58880/60000 (98%)] Loss: 0.089456

Train Epoch: 8 [59520/60000 (99%)] Loss: 0.098887

Test set: Average loss: 0.0619, Accuracy: 9814/10000 (98%)

Train Epoch: 9 [0/60000 (0%)] Loss: 0.156521

Train Epoch: 9 [640/60000 (1%)] Loss: 0.200918

Train Epoch: 9 [1280/60000 (2%)] Loss: 0.210294

Train Epoch: 9 [1920/60000 (3%)] Loss: 0.140972

Train Epoch: 9 [2560/60000 (4%)] Loss: 0.125257

Train Epoch: 9 [3200/60000 (5%)] Loss: 0.243680

Train Epoch: 9 [3840/60000 (6%)] Loss: 0.234712

Train Epoch: 9 [4480/60000 (7%)] Loss: 0.174132

Train Epoch: 9 [5120/60000 (9%)] Loss: 0.148584

Train Epoch: 9 [5760/60000 (10%)] Loss: 0.195251

Train Epoch: 9 [6400/60000 (11%)] Loss: 0.169661

Train Epoch: 9 [7040/60000 (12%)] Loss: 0.118175

Train Epoch: 9 [7680/60000 (13%)] Loss: 0.246922

Train Epoch: 9 [8320/60000 (14%)] Loss: 0.486625

Train Epoch: 9 [8960/60000 (15%)] Loss: 0.081676

Train Epoch: 9 [9600/60000 (16%)] Loss: 0.332239

Train Epoch: 9 [10240/60000 (17%)] Loss: 0.087396

Train Epoch: 9 [10880/60000 (18%)] Loss: 0.151818

Train Epoch: 9 [11520/60000 (19%)] Loss: 0.236995

Train Epoch: 9 [12160/60000 (20%)] Loss: 0.062987

Train Epoch: 9 [12800/60000 (21%)] Loss: 0.163697

Train Epoch: 9 [13440/60000 (22%)] Loss: 0.194872

Train Epoch: 9 [14080/60000 (23%)] Loss: 0.057095

Train Epoch: 9 [14720/60000 (25%)] Loss: 0.180274

Train Epoch: 9 [15360/60000 (26%)] Loss: 0.142546

Train Epoch: 9 [16000/60000 (27%)] Loss: 0.137272

Train Epoch: 9 [16640/60000 (28%)] Loss: 0.171397

Train Epoch: 9 [17280/60000 (29%)] Loss: 0.291310

Train Epoch: 9 [17920/60000 (30%)] Loss: 0.158085

Train Epoch: 9 [18560/60000 (31%)] Loss: 0.139838

Train Epoch: 9 [19200/60000 (32%)] Loss: 0.098919

Train Epoch: 9 [19840/60000 (33%)] Loss: 0.249284

Train Epoch: 9 [20480/60000 (34%)] Loss: 0.123017

Train Epoch: 9 [21120/60000 (35%)] Loss: 0.125626

Train Epoch: 9 [21760/60000 (36%)] Loss: 0.252014

Train Epoch: 9 [22400/60000 (37%)] Loss: 0.179193

Train Epoch: 9 [23040/60000 (38%)] Loss: 0.103279

Train Epoch: 9 [23680/60000 (39%)] Loss: 0.076723

Train Epoch: 9 [24320/60000 (41%)] Loss: 0.240713

Train Epoch: 9 [24960/60000 (42%)] Loss: 0.219019

Train Epoch: 9 [25600/60000 (43%)] Loss: 0.161173

Train Epoch: 9 [26240/60000 (44%)] Loss: 0.173746

Train Epoch: 9 [26880/60000 (45%)] Loss: 0.215212

Train Epoch: 9 [27520/60000 (46%)] Loss: 0.106520

Train Epoch: 9 [28160/60000 (47%)] Loss: 0.233202

Train Epoch: 9 [28800/60000 (48%)] Loss: 0.200203

Train Epoch: 9 [29440/60000 (49%)] Loss: 0.375947

Train Epoch: 9 [30080/60000 (50%)] Loss: 0.156159

Train Epoch: 9 [30720/60000 (51%)] Loss: 0.188050

Train Epoch: 9 [31360/60000 (52%)] Loss: 0.094386

Train Epoch: 9 [32000/60000 (53%)] Loss: 0.344400

Train Epoch: 9 [32640/60000 (54%)] Loss: 0.065343

Train Epoch: 9 [33280/60000 (55%)] Loss: 0.289973

Train Epoch: 9 [33920/60000 (57%)] Loss: 0.167610

Train Epoch: 9 [34560/60000 (58%)] Loss: 0.118668

Train Epoch: 9 [35200/60000 (59%)] Loss: 0.134193

Train Epoch: 9 [35840/60000 (60%)] Loss: 0.156949

Train Epoch: 9 [36480/60000 (61%)] Loss: 0.152963

Train Epoch: 9 [37120/60000 (62%)] Loss: 0.347262

Train Epoch: 9 [37760/60000 (63%)] Loss: 0.389002

Train Epoch: 9 [38400/60000 (64%)] Loss: 0.149599

Train Epoch: 9 [39040/60000 (65%)] Loss: 0.058464

Train Epoch: 9 [39680/60000 (66%)] Loss: 0.164368

Train Epoch: 9 [40320/60000 (67%)] Loss: 0.079361

Train Epoch: 9 [40960/60000 (68%)] Loss: 0.147844

Train Epoch: 9 [41600/60000 (69%)] Loss: 0.154546

Train Epoch: 9 [42240/60000 (70%)] Loss: 0.175295

Train Epoch: 9 [42880/60000 (71%)] Loss: 0.169502

Train Epoch: 9 [43520/60000 (72%)] Loss: 0.238568

Train Epoch: 9 [44160/60000 (74%)] Loss: 0.323940

Train Epoch: 9 [44800/60000 (75%)] Loss: 0.238466

Train Epoch: 9 [45440/60000 (76%)] Loss: 0.207365

Train Epoch: 9 [46080/60000 (77%)] Loss: 0.220383

Train Epoch: 9 [46720/60000 (78%)] Loss: 0.210696

Train Epoch: 9 [47360/60000 (79%)] Loss: 0.121878

Train Epoch: 9 [48000/60000 (80%)] Loss: 0.204027

Train Epoch: 9 [48640/60000 (81%)] Loss: 0.130146

Train Epoch: 9 [49280/60000 (82%)] Loss: 0.200332

Train Epoch: 9 [49920/60000 (83%)] Loss: 0.101311

Train Epoch: 9 [50560/60000 (84%)] Loss: 0.261215

Train Epoch: 9 [51200/60000 (85%)] Loss: 0.139616

Train Epoch: 9 [51840/60000 (86%)] Loss: 0.114818

Train Epoch: 9 [52480/60000 (87%)] Loss: 0.191505

Train Epoch: 9 [53120/60000 (88%)] Loss: 0.164865

Train Epoch: 9 [53760/60000 (90%)] Loss: 0.084798

Train Epoch: 9 [54400/60000 (91%)] Loss: 0.151823

Train Epoch: 9 [55040/60000 (92%)] Loss: 0.189350

Train Epoch: 9 [55680/60000 (93%)] Loss: 0.131283

Train Epoch: 9 [56320/60000 (94%)] Loss: 0.092493

Train Epoch: 9 [56960/60000 (95%)] Loss: 0.108735

Train Epoch: 9 [57600/60000 (96%)] Loss: 0.210052

Train Epoch: 9 [58240/60000 (97%)] Loss: 0.283552

Train Epoch: 9 [58880/60000 (98%)] Loss: 0.195462

Train Epoch: 9 [59520/60000 (99%)] Loss: 0.088956

Test set: Average loss: 0.0537, Accuracy: 9833/10000 (98%)

Train Epoch: 10 [0/60000 (0%)] Loss: 0.232688

Train Epoch: 10 [640/60000 (1%)] Loss: 0.130718

Train Epoch: 10 [1280/60000 (2%)] Loss: 0.238487

Train Epoch: 10 [1920/60000 (3%)] Loss: 0.097148

Train Epoch: 10 [2560/60000 (4%)] Loss: 0.096266

Train Epoch: 10 [3200/60000 (5%)] Loss: 0.109697

Train Epoch: 10 [3840/60000 (6%)] Loss: 0.175715

Train Epoch: 10 [4480/60000 (7%)] Loss: 0.104340

Train Epoch: 10 [5120/60000 (9%)] Loss: 0.358345

Train Epoch: 10 [5760/60000 (10%)] Loss: 0.077152

Train Epoch: 10 [6400/60000 (11%)] Loss: 0.299876

Train Epoch: 10 [7040/60000 (12%)] Loss: 0.213904

Train Epoch: 10 [7680/60000 (13%)] Loss: 0.083583

Train Epoch: 10 [8320/60000 (14%)] Loss: 0.199138

Train Epoch: 10 [8960/60000 (15%)] Loss: 0.249819

Train Epoch: 10 [9600/60000 (16%)] Loss: 0.151206

Train Epoch: 10 [10240/60000 (17%)] Loss: 0.189757

Train Epoch: 10 [10880/60000 (18%)] Loss: 0.208848

Train Epoch: 10 [11520/60000 (19%)] Loss: 0.071570

Train Epoch: 10 [12160/60000 (20%)] Loss: 0.256983

Train Epoch: 10 [12800/60000 (21%)] Loss: 0.117856

Train Epoch: 10 [13440/60000 (22%)] Loss: 0.230843

Train Epoch: 10 [14080/60000 (23%)] Loss: 0.305246

Train Epoch: 10 [14720/60000 (25%)] Loss: 0.103493

Train Epoch: 10 [15360/60000 (26%)] Loss: 0.173460

Train Epoch: 10 [16000/60000 (27%)] Loss: 0.182112

Train Epoch: 10 [16640/60000 (28%)] Loss: 0.249207

Train Epoch: 10 [17280/60000 (29%)] Loss: 0.183754

Train Epoch: 10 [17920/60000 (30%)] Loss: 0.192911

Train Epoch: 10 [18560/60000 (31%)] Loss: 0.063303

Train Epoch: 10 [19200/60000 (32%)] Loss: 0.475773

Train Epoch: 10 [19840/60000 (33%)] Loss: 0.086931

Train Epoch: 10 [20480/60000 (34%)] Loss: 0.048210

Train Epoch: 10 [21120/60000 (35%)] Loss: 0.092995

Train Epoch: 10 [21760/60000 (36%)] Loss: 0.206281

Train Epoch: 10 [22400/60000 (37%)] Loss: 0.498290

Train Epoch: 10 [23040/60000 (38%)] Loss: 0.102867

Train Epoch: 10 [23680/60000 (39%)] Loss: 0.074512

Train Epoch: 10 [24320/60000 (41%)] Loss: 0.207413

Train Epoch: 10 [24960/60000 (42%)] Loss: 0.101784

Train Epoch: 10 [25600/60000 (43%)] Loss: 0.138648

Train Epoch: 10 [26240/60000 (44%)] Loss: 0.098210

Train Epoch: 10 [26880/60000 (45%)] Loss: 0.108851

Train Epoch: 10 [27520/60000 (46%)] Loss: 0.178737

Train Epoch: 10 [28160/60000 (47%)] Loss: 0.215719

Train Epoch: 10 [28800/60000 (48%)] Loss: 0.233939

Train Epoch: 10 [29440/60000 (49%)] Loss: 0.135513

Train Epoch: 10 [30080/60000 (50%)] Loss: 0.076783

Train Epoch: 10 [30720/60000 (51%)] Loss: 0.052472

Train Epoch: 10 [31360/60000 (52%)] Loss: 0.232018

Train Epoch: 10 [32000/60000 (53%)] Loss: 0.262077

Train Epoch: 10 [32640/60000 (54%)] Loss: 0.117633

Train Epoch: 10 [33280/60000 (55%)] Loss: 0.139539

Train Epoch: 10 [33920/60000 (57%)] Loss: 0.273097

Train Epoch: 10 [34560/60000 (58%)] Loss: 0.037134

Train Epoch: 10 [35200/60000 (59%)] Loss: 0.263009

Train Epoch: 10 [35840/60000 (60%)] Loss: 0.082134

Train Epoch: 10 [36480/60000 (61%)] Loss: 0.149813

Train Epoch: 10 [37120/60000 (62%)] Loss: 0.187479

Train Epoch: 10 [37760/60000 (63%)] Loss: 0.298204

Train Epoch: 10 [38400/60000 (64%)] Loss: 0.213883

Train Epoch: 10 [39040/60000 (65%)] Loss: 0.258259

Train Epoch: 10 [39680/60000 (66%)] Loss: 0.115666

Train Epoch: 10 [40320/60000 (67%)] Loss: 0.073885

Train Epoch: 10 [40960/60000 (68%)] Loss: 0.058780

Train Epoch: 10 [41600/60000 (69%)] Loss: 0.125212

Train Epoch: 10 [42240/60000 (70%)] Loss: 0.113951

Train Epoch: 10 [42880/60000 (71%)] Loss: 0.055782

Train Epoch: 10 [43520/60000 (72%)] Loss: 0.149072

Train Epoch: 10 [44160/60000 (74%)] Loss: 0.156896

Train Epoch: 10 [44800/60000 (75%)] Loss: 0.157356

Train Epoch: 10 [45440/60000 (76%)] Loss: 0.215374

Train Epoch: 10 [46080/60000 (77%)] Loss: 0.096225

Train Epoch: 10 [46720/60000 (78%)] Loss: 0.089743

Train Epoch: 10 [47360/60000 (79%)] Loss: 0.193218

Train Epoch: 10 [48000/60000 (80%)] Loss: 0.060242

Train Epoch: 10 [48640/60000 (81%)] Loss: 0.159633

Train Epoch: 10 [49280/60000 (82%)] Loss: 0.166531

Train Epoch: 10 [49920/60000 (83%)] Loss: 0.228062

Train Epoch: 10 [50560/60000 (84%)] Loss: 0.056025

Train Epoch: 10 [51200/60000 (85%)] Loss: 0.220259

Train Epoch: 10 [51840/60000 (86%)] Loss: 0.158656

Train Epoch: 10 [52480/60000 (87%)] Loss: 0.241285

Train Epoch: 10 [53120/60000 (88%)] Loss: 0.084270

Train Epoch: 10 [53760/60000 (90%)] Loss: 0.313425

Train Epoch: 10 [54400/60000 (91%)] Loss: 0.090520

Train Epoch: 10 [55040/60000 (92%)] Loss: 0.145082

Train Epoch: 10 [55680/60000 (93%)] Loss: 0.044837

Train Epoch: 10 [56320/60000 (94%)] Loss: 0.080298

Train Epoch: 10 [56960/60000 (95%)] Loss: 0.062218

Train Epoch: 10 [57600/60000 (96%)] Loss: 0.147062

Train Epoch: 10 [58240/60000 (97%)] Loss: 0.182195

Train Epoch: 10 [58880/60000 (98%)] Loss: 0.294599

Train Epoch: 10 [59520/60000 (99%)] Loss: 0.147527

Test set: Average loss: 0.0491, Accuracy: 9850/10000 (98%)
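Every test summary above reports "98%", even though the correct count rises from 9766 to 9850. The script formats accuracy with `{:.0f}`, which rounds to whole percentages. The short sketch below parses the six `Test set` lines copied from this run and prints them with two decimals. The regular expression and the helper name `parse_test_lines` are illustrative assumptions, not part of the example.

```python
import re

# "Test set" lines copied from the run above (epochs 5 to 10).
LOG = """\
Test set: Average loss: 0.0775, Accuracy: 9766/10000 (98%)
Test set: Average loss: 0.0664, Accuracy: 9809/10000 (98%)
Test set: Average loss: 0.0608, Accuracy: 9809/10000 (98%)
Test set: Average loss: 0.0619, Accuracy: 9814/10000 (98%)
Test set: Average loss: 0.0537, Accuracy: 9833/10000 (98%)
Test set: Average loss: 0.0491, Accuracy: 9850/10000 (98%)
"""

PATTERN = re.compile(r"Average loss: ([\d.]+), Accuracy: (\d+)/(\d+)")


def parse_test_lines(text):
    """Return (average_loss, accuracy_percent) for each 'Test set' line."""
    return [(float(loss), 100.0 * int(correct) / int(total))
            for loss, correct, total in PATTERN.findall(text)]


if __name__ == "__main__":
    for epoch, (loss, acc) in enumerate(parse_test_lines(LOG), start=5):
        # {:.0f} (as in the example) turns every value here into 98;
        # {:.2f} shows the actual change between epochs.
        print("epoch {}: loss {:.4f}, accuracy {:.2f}% (printed as {:.0f}%)".format(
            epoch, loss, acc, acc))
```

Note that 98.50% also prints as "98". Python's float formatting rounds exact halves to the nearest even digit.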

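Group-buy price of 3980 yuan for an AI course originally priced at 19800 yuan. To join the group purchase, add instructor Wang Jialin on WeChat: 13928463918

Wang Jialin's own "project scenario projection" method for learning AI claims that any IT professional can become a hands-on AI expert in about three months, with no prior background in mathematics or Python:

1. Five lessons (live-streamed on YY video on the mornings of April 9 to 13) teach you to build your own AI deep learning framework from scratch, with no Python or math background required. Five lessons of study may be worth more than five years of figuring things out on your own.

2. Learn AI from the code of 30 real commercial cases, taking you from zero to AI practitioner: 10 machine learning cases, 13 deep learning cases, and 7 reinforcement learning cases. This document includes detailed descriptions of the cases and screenshots of their implementation code.

3. A 100-day transformation. Study an average of one hour per day through live video on YY channel 68917580, Monday to Saturday from 6:00 to 7:00 a.m. On weekends, review that week's six hours of live lessons. Registered students receive all live-stream recordings, the complete code for every commercial case, and the most valuable AI reading materials.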