一、数据归整化

1.1数据合并

1.1.1、DataFrame拼接

from pandas import Series,DataFrame

import pandas as pd

import numpy as np

df1 = DataFrame({"key":["b","b","a","c","a","a","b"],

"data1":range(7)})

df2 = DataFrame({"key":["a","b","d"],

"data2":range(3)})

#合并操作merge,默认找相同的列名数据,取交集

#有相同列名的时候合并

pd.merge(df1,df2) ####也叫内连接

pd.merge(df1,df2,on="key")

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

data1 key data2

0 0 b 1

1 1 b 1

2 6 b 1

3 2 a 0

4 4 a 0

5 5 a 0

df3 = DataFrame({"lkey":["b","b","a","c","a","a","b"],

"data1":range(7)})

df4 = DataFrame({"rkey":["a","b","d"],

"data2":range(3)})

#没有相同列索引的时候

pd.merge(df3,df4,left_on="lkey",right_on="rkey")

'''

data1 lkey data2 rkey

0 0 b 1 b

1 1 b 1 b

2 6 b 1 b

3 2 a 0 a

4 4 a 0 a

5 5 a 0 a'''

##ge各种连接方法 ####并集

#左连接

#右连接

#外连接

pd.merge(df1,df2,how="left")####以df1的key为主

#如果在df2中没有df1的行索引上没数据,则是NaN

'''

data1 key data2

0 0 b 1.0

1 1 b 1.0

2 2 a 0.0

3 3 c NaN

4 4 a 0.0

5 5 a 0.0

6 6 b 1.0'''

pd.merge(df1,df2,how="right")####以df2的key为主,原理同上

'''

data1 key data2

0 0.0 b 1

1 1.0 b 1

2 6.0 b 1

3 2.0 a 0

4 4.0 a 0

5 5.0 a 0

6 NaN d 2'''

pd.merge(df1,df2,how="outer")####并集

'''

data1 key data2

0 0.0 b 1.0

1 1.0 b 1.0

2 6.0 b 1.0

3 2.0 a 0.0

4 4.0 a 0.0

5 5.0 a 0.0

6 3.0 c NaN

7 NaN d 2.0'''

df1 = DataFrame({"a":[1.,NA,5.,NA],

"b":[NA,2.,NA,6.],

"c":range(2,18,4)})

df2 = DataFrame({"a":[5.,4.,NA,3.,7.],

"b":[NA,3.,4.,6.,8.]})

# DataFrame中的补丁

df1.combine_first(df2) #df2补丁df1 1.1.2、Series数据的连接

from pandas import Series,DataFrame

import pandas as pd

import numpy as np

s1 = Series([0,1],index =["a","b"])

s2 = Series([2,3,4],index =["c","d","e"])

s3 = Series([5,6],index =["f","g"])

pd.concat([s1,s2,s3]) #concat 上下拼接

'''a 0

b 1

c 2

d 3

e 4

f 5

g 6

dtype: int64'''

#将多个Series拼接成一个DtaFrame

df_concat = pd.concat([s1,s2,s3],axis = 1)

df_concat #列拼接,index索引叠加

'''

0 1 2

a 0.0 NaN NaN

b 1.0 NaN NaN

c NaN 2.0 NaN

d NaN 3.0 NaN

e NaN 4.0 NaN

f NaN NaN 5.0

g NaN NaN 6.0'''

df_concat = pd.concat([s1,s2,s3],axis = 1,join = "inner")####取交集

##层次化索引

pd.concat([s1,s2],keys=["one","two"])

'''one a 0

b 1

two c 2

d 3

e 4

dtype: int64'''

a = Series([NA,2,NA,2.3,23,NA],index = list("asdfdr"))

b = Series(range(7),index = list("asdfdrc"))

a.combine_first(b)1.2、数据的加载、存储和解析

读取

from pandas import DataFrame,Series

import pandas as pd

import numpy as np

import sys

pd.read_csv("data/ex1.csv")

pd.read_table("data/ex1.csv",sep=",")#用read_table读取

pd.read_table("data/ex1.csv",sep="|")

pd.read_table("data/ex1.csv",sep="\t")

#如果没有索引,默认分配

pd.read_csv("data/ex2.csv",header = None)

#手动指定biao标题数据

pd.read_csv('data/ex2.csv',names = ["a","b","c","d","message"])

#指定层次化索引

pd.read_csv("data/csv_mindex.csv",index_col=["key1","key2"])

#用任意字符进行分割

pd.read_table("data/ex3.csv",sep="\s+")

#跳过读取

pd.read_csv("data/ex4.csv",skiprows=[0,2,3])

#加载存在NA的数据,类似替换

pd.read_csv("data/ex5.csv",na_values=["4"])

#将原始数据中某些数用sen替换

sen={"message":['foo',"NA"],'something':['two']}

pd.read_csv("data/ex5.csv",na_values=sen)

#pandas将数据写入到csv文件中

df = DataFrame(np.random.randn(4,3),columns=["a","b","c"],

index = list("1234"))

写入

#将数据直接输出

df.to_csv(sys.stdout,sep="|")

#为数据中的na值做标记

df.to_csv(sys.stdout,na_rep="NULL")

data.to_csv(sys.stdout,index = False,columns=list("abf"))1.3数据的重塑和选择

from pandas import Series,DataFrame

import pandas as pd

import numpy as np

from numpy import nan as NA

data = Series(np.random.randn(10),index= [list("aaabbbccdd"),[1,2,3,1,2,3,1,2,2, 3]])

data

#将行索引(index)转换为列索引(columns)

reslut = data.unstack()

reslut

'''

1 2 3

a -2.310478 -0.992366 0.838925

b 0.353980 -1.074091 -0.272188

c 0.532546 -0.962216 NaN

d NaN 0.045797 0.049312'''

#将列索引(columns)转换为行索引(index)

reslut.stack()

# DataFrame行列索引的重塑和转化

data = DataFrame(np.arange(6).reshape(2,3),

index = pd.Index(["上海","北京"],name = "省份"),

columns = pd.Index([2011,2012,2013],name="年份"))

'''

年份 2011 2012 2013

省份

上海 0 1 2

北京 3 4 5'''

result = data.stack()

'''省份 年份

上海 2011 0

2012 1

2013 2

北京 2011 3

2012 4

2013 5

dtype: int32'''

###将DataFrame的层次化列索引转换到行索引上

result.unstack() ########默认转换最内层的索引

'''

numbers one two three

state

Ohio 0 1 2

Colorado 3 4 5'''

df = DataFrame({"left":result,

"right":result+5},

columns = pd.Index(["left","right"],

name = "side"))

df

'''

side left right

state numbers

Ohio one 0 5

two 1 6

three 2 7

Colorado one 3 8

two 4 9

three 5 10'''

df.unstack("state") #作为旋转轴的级别将成为最低级别

side left right

state Ohio Colorado Ohio Colorado

numbers

one 0 3 5 8

two 1 4 6 9

three 2 5 7 101.4数据转化,清除重复数据

from pandas import Series,DataFrame

import pandas as pd

import numpy as np

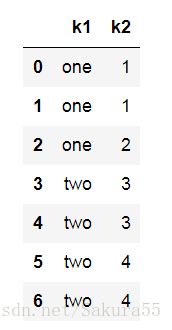

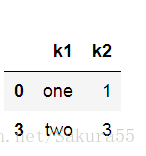

data=DataFrame({"k1":["one"]*3+["two"]*4,'k2':[1,1,2,3,3,4,4]})

data#检测DataFrame中的每一行是否是重复的数据行

mask = data.duplicated() #判断的是一行和另一行比较

mask

#方法一:通过花式索引去除重复的行数据

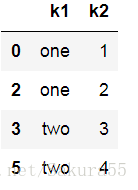

data[~mask]#方法二:通过DataFrame内置的drop_duplicates函数取出重读的数据行

#去除的规则:所有的列数据都出现重复的行

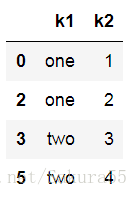

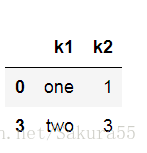

data.drop_duplicates()data.drop_duplicates(["k1"])###只要那一列有重复的,都去除#指定keep参数指定需要保留哪些重复的数据

#保留重复中的第一次数据的行索引

data.drop_duplicates(["k1"],keep="first")

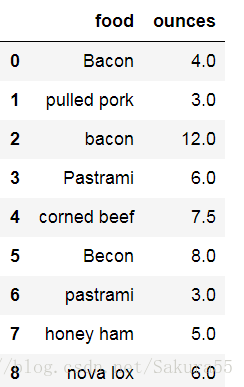

data=DataFrame({'food':['Bacon','pulled pork','bacon','Pastrami',

'corned beef','Becon','pastrami','honey ham','nova lox'],

'ounces':[4,3,12,6,7.5,8,3,5,6]})

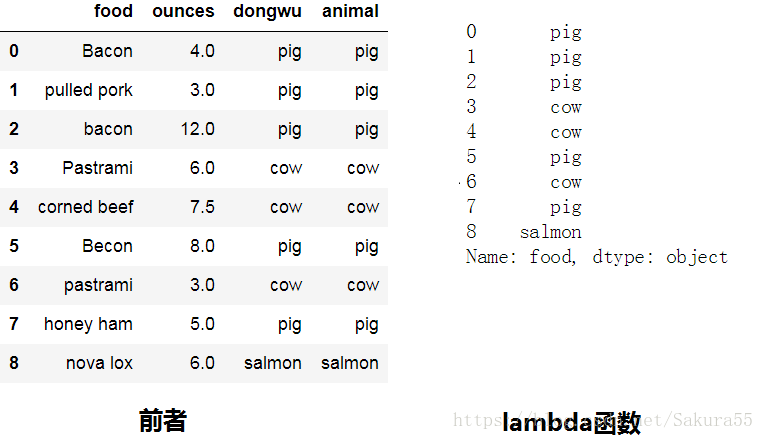

dataanimal={

'bacon':'pig',

'pulled pork':'pig',

'pastrami':'cow',

'honey ham':'pig',

'nova lox':'salmon',

'corned beef':'cow',

'becon':"pig"

}

animal#匹配

data["animal"] = data["food"].map(str.lower).map(animal)

#使用lambda函数匹配

data["food"].map(lambda x:animal[x.lower()])

扫描二维码关注公众号,回复:

1782322 查看本文章

1.5 替换

from pandas import Series,DataFrame

import pandas as pd

import numpy as np

from numpy import nan as NA

series = Series([1,-0.63,23,-11,-100,3])

series.replace(-11,NA)

series.replace([-11,3],NA) #ti替换多个

#针对不同的替换指定的值

#方法1 #字典格式

series.replace({-11:NA,3:0})

#方法2##多个列表格式

series.replace([-11,3],[NA,23152])1.6 数据拆分

1.6.1cut

from pandas import Series,DataFrame

import pandas as pd

import numpy as np

from numpy import nan as NA

age = [22,23,30,45,35,78,45,67]

#将所有年龄进行分组

bins=[18,28,40,60,100]

cats = pd.cut(age,bins)

'''[(18, 28], (18, 28], (28, 40], (40, 60], (28, 40], (60, 100], (40, 60], (60, 100]]

Categories (4, interval[int64]): [(18, 28] < (28, 40] < (40, 60] < (60, 100]]'''

# pd.value_counts方法统计每个区间段的人数

pd.value_counts(cats)

cats.codes

#表示每个数值属于第几个区间

'''array([0, 0, 1, 2, 1, 3, 2, 3], dtype=int8)'''

pd.cut(data,4,precision=2)##保留两位有效数字

#利用组数最大值和最小值的差除以组数份数,再一次叠加划分

'''

sub=pd.max(data)-pd.min(data)

sub/4

'''1.6.2 qcut

from pandas import DataFrame,Series

import pandas as pd

import numpy as np

data = np.random.randn(1000) #服从正太分布

result = pd.qcut(data,4) #均分4份

result

'''[(-3.06, -0.644], (-0.644, -0.0267], (-0.644, -0.0267], (-0.644, -0.0267], (-3.06, -0.644], ..., (-0.0267, 0.622], (-0.0267, 0.622], (-3.06, -0.644], (-0.0267, 0.622], (-0.644, -0.0267]]

Length: 1000

Categories (4, interval[float64]): [(-3.06, -0.644] < (-0.644, -0.0267] < (-0.0267, 0.622] < (0.622, 3.055]]'''

pd.value_counts(result)

'''(0.622, 3.055] 250

(-0.0267, 0.622] 250

(-0.644, -0.0267] 250

(-3.06, -0.644] 250

dtype: int64'''

result = pd.qcut(data,[0,0.1,0.5,0.9,1]) #每个区间的百分比占位

'''(-0.0267, 1.124] 400

(-1.192, -0.0267] 400

(1.124, 3.055] 100

(-3.06, -1.192] 100

dtype: int6pd.value_counts(result)'''

#如果分位数的差值和小于1的情况

result = pd.qcut(data,[0,0.3,0.4,0.75])

pd.value_counts(result) #只有750个数

'''

(-0.238, 0.622] 350

(-3.06, -0.478] 300

(-0.478, -0.238] 100

dtype: int64

'''1.7筛选

from pandas import DataFrame,Series

import pandas as pd

import numpy as np

data=np.random.randn(1000,4)

df = DataFrame(data)

#np.random.seed(number) number是随机生成的种子

# np.random.randn() #默认生成随机数的种子数是当前时间的时间戳

#定义一个种子数

np.random.seed(12345532)#只能用一次

np.random.rand()

'''0.38055403705122426'''

np.random.rand()

'''0.04502724431858285'''

col = data[3]

'''array([ 0.83174379, -0.75549206, -0.6345612 , 0.0674386 ])'''

col[np.abs(col)>0] #查找某一列值大于0 的行数据

col[(np.abs(col)>1).any(1)] #查找

#将所有绝对值大于3的参数设置3,绝对值小于3的复数,设为-3

np.sign(data)*3

'''array([[ 3., -3., -3., 3.],

[ 3., -3., 3., 3.],

[ 3., -3., 3., -3.],

...,

[ 3., 3., 3., -3.],

[-3., -3., -3., -3.],

[ 3., 3., -3., 3.]])'''二、数据的聚合和分组

2.1、groupby

from pandas import DataFrame,Series

import pandas as pd

import numpy as np

import sys

data = DataFrame( {'key1' : ['a', 'b', 'a', 'b', 'a', 'b', 'a', 'a'],

'key2' : ['one','one','two','three','two','two','one','three'],

'data1': np.random.randint(1,10, 8),

'data2': np.random.randint(1,10, 8)})

结果如下:

data1 data2 key1 key2

0 1 5 a one

1 6 8 b one

2 7 5 a two

3 2 3 b three

4 8 5 a two

5 3 9 b two

6 9 2 a one

7 4 5 a three

grouped = data["data1"].groupby(data["key1"])

'''<pandas.core.groupby.SeriesGroupBy object at 0x0000000008EBAF60>'''

#调用已经分组的数据的一些方法,能得出相应的结果

#获取每分组中的平均值

grouped.mean()

'''key1

a 5.800000

b 3.666667

Name: data1, dtype: float64'''

grouped = data["data1"].groupby([data["key1"],data["key2"]])

grouped.mean() #同上结构

#获取分组之后每一则相应的元素出现的次数

data.groupby([data["key1"],data["key2"]]).size()

'''key1 key2

a one 2

three 1

two 2

b one 1

three 1

two 1

dtype: int64'''

#for....in 循环分出结果

for name,group in data.groupby("key1"):

print(name)

print(group)

'''

#for....in 循环分出结果

for name,group in data.groupby("key1"):

print(name)

print(group)

#for....in 循环分出结果

for name,group in data.groupby("key1"):

print(name)

print(group)

a

data1 data2 key1 key2

0 1 5 a one

2 7 5 a two

4 8 5 a two

6 9 2 a one

7 4 5 a three

b

data1 data2 key1 key2

1 6 8 b one

3 2 3 b three

5 3 9 b two'''

#将分类结果转化为字典

p = dict(list(data.groupby("key1")))

#将分类结果转化为字典

p = dict(list(data.groupby("key1")))

#将分类结果转化为字典

p = dict(list(data.groupby("key1")))

p

{'a': data1 data2 key1 key2

0 1 5 a one

2 7 5 a two

4 8 5 a two

6 9 2 a one

7 4 5 a three, 'b': data1 data2 key1 key2

1 6 8 b one

3 2 3 b three

5 3 9 b two}

#按照列的数据类型分组

grouped = data.groupby(data.dtypes,axis = 1)

dict(list(grouped))

{dtype('int32'): data1 data2

0 1 5

1 6 8

2 7 5

3 2 3

4 8 5

5 3 9

6 9 2

7 4 5, dtype('O'): key1 key2

0 a one

1 b one

2 a two

3 b three

4 a two

5 b two

6 a one

7 a three}

#选择数据分类中的一个或者一组

#方法一

# data.groupby(["key1",'key2'])[["data2"]].mean()

#方法二

data["data2"].groupby([data["key1"],data["key2"]]).mean()

#通过字典或者Series进行分组

people = DataFrame(np.random.randn(5,5),

index = list("12345"),

columns = list("asdfg"))

结果:

a s d f g

1 -1.139766 -1.284590 0.264661 0.769478 -0.602812

2 0.729188 1.317163 1.269746 0.758019 0.437370

3 -1.037269 0.405382 0.164030 -1.229866 0.369245

4 -0.521364 1.301120 0.079933 0.092221 -0.512522

5 -0.807109 0.753260 -1.669533 -1.372556 -0.624470

#创建一个将列名进行映射的字典

mapping = {"a":"red","b":"red","c":"blue","d":"blue","e":"orange","f":"orange"}

by_columns = people.groupby(mapping,axis = 1)

by_columns.sum()

blue orange red

1 -1.690004 0.657012 -0.374866

2 1.981260 1.065506 -0.283357

3 -1.419508 -2.122820 0.859591

4 0.496744 -0.587665 0.999475

5 0.081005 -0.387064 -0.270888

#将mapping没有转化为Series

seriesmap=Series(mapping)

people.groupby(seriesmap,axis = 1).count()

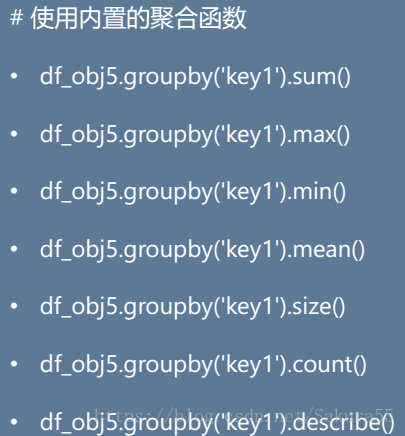

2.2、数据聚合

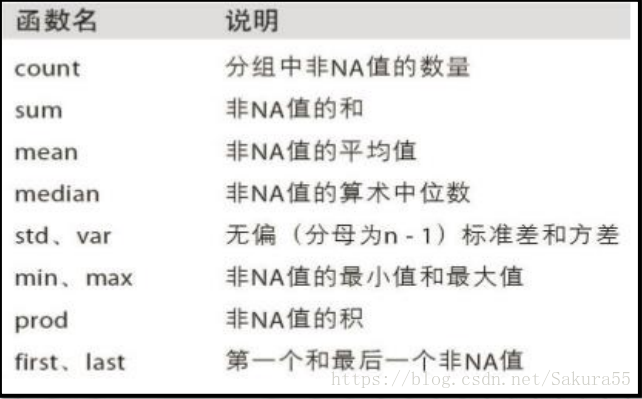

2.2.1、基础聚合函数

#常用的分组后计算方法

dict_obj = {'key1' : ['a', 'b', 'a', 'b', 'a', 'b', 'a', 'a'],

'key2' : ['one', 'one', 'two', 'three', 'two', 'two', 'one', 'three'],

'data1': np.random.randint(1,10, 8),

'data2': np.random.randint(1,10, 8)}

df = DataFrame(dict_obj)

data1 data2 key1 key2

0 1 4 a one

1 8 2 b one

2 3 7 a two

3 5 5 b three

4 5 9 a two

5 9 9 b two

6 4 5 a one

7 9 9 a three

df.groupby(df["key1"]).sum()2.2.2、自定义聚合函数

agg

#定义函数,获取某一列数据的最大或者最新哦袄的数值之差

def peak_range(df):

# print(type(df))

return df.max()-df.min()

df.groupby("key1").agg(peak_range)

data1 data2

key1

a 8 5

b 4 7

df.groupby("key2").agg(lambda df:df.max()-df.min())

##使用agg 函数,对多个聚合函数调用

df.groupby("key1").agg(["mean",'std','count',peak_range])

#使用字典作用没列不同的聚合函数

dict_mapping = {"data1":'mean',

"data2":"sum"}

df.groupby('key1').agg(dict_mapping)

df = pd.DataFrame(data={'books':['bk1','bk1','bk1',

'bk2','bk2','bk3'],

'price': [12,12,12,15,15,17]})

#将分组后和的books作为索引

df.groupby("books",as_index=True).sum()

price

books

bk1 36

bk2 30

bk3 17

df.groupby("books",as_index=False).sum()

结果:

books price

0 bk1 36

1 bk2 30

2 bk3 17apply

from pandas import DataFrame,Series

import pandas as pd

import numpy as np

#加载数据

tips=pd.read_csv("data/tips.csv")

#为数据集加一行,作为客户的小费占总额的占比

tips["tipss"]=tips["tip"]/tips["total_bill"]

#定义函数,筛选出最大前五名

def top(df,n=5,columns="tipss"):

return df.sort_values(by=columns)[-n:]

top(tips,n=6)

total_bill tip smoker day time size tipss

109 14.31 4.00 Yes Sat Dinner 2 0.279525

183 23.17 6.50 Yes Sun Dinner 4 0.280535

232 11.61 3.39 No Sat Dinner 2 0.291990

67 3.07 1.00 Yes Sat Dinner 1 0.325733

178 9.60 4.00 Yes Sun Dinner 2 0.416667

172 7.25 5.15 Yes Sun Dinner 2 0.710345

#对数据集对是否抽烟分组

grouped = tips.groupby('smoker')

grouped.apply(top)

#为apply使用的函数传参

result = tips.groupby(['smoker', 'day']).apply(top, n=1,columns='total_bill')

frame = pd.DataFrame({'data1': np.random.randn(1000),

'data2': np.random.randn(1000)})

quartiles = pd.cut(frame.data1, 4)

quartiles[:10]

0 (-1.693, 0.093]

1 (-1.693, 0.093]

2 (-1.693, 0.093]

3 (0.093, 1.879]

4 (0.093, 1.879]

5 (-1.693, 0.093]

6 (-1.693, 0.093]

7 (0.093, 1.879]

8 (-1.693, 0.093]

9 (0.093, 1.879]

Name: data1, dtype: category

Categories (4, interval[float64]): [(-3.486, -1.693] < (-1.693, 0.093] < (0.093, 1.879] < (1.879, 3.665]]

def get_stats(group):

return { 'min': group.min(), 'max': group.max(),

'count':group.count(),'mean': group.mean() }

grouped = frame.data2.groupby(quartiles)

grouped.apply(get_stats).unstack() #行==》列

count max mean min

data1

(-3.486, -1.693] 41.0 1.863387 -0.042594 -2.277672

(-1.693, 0.093] 502.0 2.864208 0.108999 -2.898347

(0.093, 1.879] 434.0 2.571995 0.040860 -2.806596

(1.879, 3.665] 23.0 2.760370 -0.220554 -2.147130