For a course assignment, I recently spent a day learning Python and wrote a crawler for Douban Movies.

The assignment requirements were:

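1. Scrape the top 50 movies in Douban's movie ranking, including each movie's title, rating, and short description.
2. Classify the scraped movies by genre and save each genre in its own table in a database or Excel file.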
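For reference, Douban's Top 250 list shows 25 movies per page, so "top 50" means the first two list pages. This is a minimal sketch, assuming the page offset is passed in a `start` query parameter (the list's next-page links have the form `?start=25&filter=`). It builds those page URLs up front. `top_n_urls` is a hypothetical helper for illustration only.

```python
from urllib.parse import urlencode

BASE_URL = 'https://movie.douban.com/top250'
PER_PAGE = 25  # the Top 250 list shows 25 movies per page


def top_n_urls(n, base=BASE_URL, per_page=PER_PAGE):
    """Return the list-page URLs needed to cover the top n movies (hypothetical helper)."""
    pages = -(-n // per_page)  # ceiling division: 50 movies -> 2 pages
    # Assumption: the offset goes in ?start=..., mirroring the site's next-page links
    return [base + '?' + urlencode({'start': p * per_page, 'filter': ''})
            for p in range(pages)]


for u in top_n_urls(50):
    print(u)
```

Following the next-page link, as the crawler does, gives the same result. Computing the URLs is just an alternative when the number of pages is known in advance.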

I won't cover installing and configuring Python, or explain the syntax, in this post.

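My regular expressions aren't very strong, so my earlier regex-based attempts failed to scrape anything. After several failures I switched to BeautifulSoup. Apologies if you were hoping for a regex solution.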

Straight to the code.

SQL:

```sql
# Host: 127.0.0.1 (Version: 5.7.17-log)
# Date: 2018-06-04 11:52:30
# Generator: MySQL-Front 5.3 (Build 4.269)

/*!40101 SET NAMES utf8 */;

drop database if exists pythontest;
create database pythontest default character set utf8;
use pythontest;

# Structure for table "爱情表"
CREATE TABLE `爱情表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "传记表"
CREATE TABLE `传记表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "动画表"
CREATE TABLE `动画表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "动作表"
CREATE TABLE `动作表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "儿童表"
CREATE TABLE `儿童表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "犯罪表"
CREATE TABLE `犯罪表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "歌舞表"
CREATE TABLE `歌舞表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "纪录片表"
CREATE TABLE `纪录片表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "家庭表"
CREATE TABLE `家庭表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "惊悚表"
CREATE TABLE `惊悚表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "剧情表"
CREATE TABLE `剧情表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "科幻表"
CREATE TABLE `科幻表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "历史表"
CREATE TABLE `历史表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "冒险表"
CREATE TABLE `冒险表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "奇幻表"
CREATE TABLE `奇幻表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "同性表"
CREATE TABLE `同性表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "喜剧表"
CREATE TABLE `喜剧表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "悬疑表"
CREATE TABLE `悬疑表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "音乐表"
CREATE TABLE `音乐表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "灾难表"
CREATE TABLE `灾难表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

# Structure for table "战争表"
CREATE TABLE `战争表` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;
```

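I took the easy route, and because the database isn't the focus here, I named the tables in Chinese. Each name is the genre plus 表 ("table"), for example 爱情表 for romance. Rename them if you need to.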
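All 21 tables share the same columns, so the DDL doesn't have to be typed out by hand. Here is a minimal sketch that prints equivalent `CREATE TABLE` statements for the genre list used in the SQL script above. The `build_ddl` helper and the `TEMPLATE` string are illustrative, not part of the original project.

```python
# Genres that get a table in the SQL script above (table name = genre + '表', i.e. "table")
GENRES = ['爱情', '传记', '动画', '动作', '儿童', '犯罪', '歌舞', '纪录片', '家庭', '惊悚', '剧情',
          '科幻', '历史', '冒险', '奇幻', '同性', '喜剧', '悬疑', '音乐', '灾难', '战争']

# One shared schema; {table} is filled in per genre
TEMPLATE = """CREATE TABLE `{table}` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `name` varchar(30) DEFAULT NULL COMMENT '电影名',
  `score` float DEFAULT NULL COMMENT '评分',
  `review` varchar(100) DEFAULT NULL COMMENT '简介',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;"""


def build_ddl(genres):
    """Return one CREATE TABLE statement per genre, separated by blank lines (hypothetical helper)."""
    return '\n\n'.join(TEMPLATE.format(table=g + '表') for g in genres)


print(build_ddl(GENRES))
```

You could paste the output after `use pythontest;`. Adding a genre then only means adding one entry to the list.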

Python (first version):

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
import requests
import pymysql
from bs4 import BeautifulSoup

downUrl = 'https://movie.douban.com/top250'

# Genres that have a table in the SQL script above (table name = genre + '表')
GENRE_TABLES = {'爱情', '传记', '动画', '动作', '儿童', '犯罪', '歌舞', '纪录片', '家庭', '惊悚', '剧情',
                '科幻', '历史', '冒险', '奇幻', '同性', '喜剧', '悬疑', '音乐', '灾难', '战争'}


def grapInFo(pra):
    soup = BeautifulSoup(pra, 'html.parser')
    ol = soup.find('ol', class_='grid_view')
    name = []          # titles
    score = []         # ratings
    reviewList = []    # one-line reviews
    categoryList = []  # genres (one list per movie)
    for i in ol.find_all('li'):
        detail = i.find('div', attrs={'class': 'hd'})
        movieName = detail.find('span', attrs={'class': 'title'}).get_text()  # title
        scoreText = i.find('span', attrs={'class': 'rating_num'}).get_text()  # rating
        review = i.find('span', attrs={'class': 'inq'})                       # one-line review
        info = i.find('div', attrs={'class': 'bd'}).find('p').get_text()      # info text incl. genres
        # Genres are the whitespace-separated words after the last '/', e.g. "犯罪 剧情"
        category = info[info.rfind('/') + 1:].split()
        # Some movies have no one-line review; store '无' ("none") instead
        reviewList.append(review.get_text() if review else '无')
        score.append(scoreText)
        name.append(movieName)
        categoryList.append(category)
    page = soup.find('span', attrs={'class': 'next'}).find('a')  # next-page link
    if page:
        return name, score, reviewList, categoryList, downUrl + page['href']
    return name, score, reviewList, categoryList, None


def main():
    url = downUrl
    name, score, review, category = [], [], [], []
    headers = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/47.0.2526.80 Safari/537.36'
    }
    i = 1
    while url and i < 3:  # 25 movies per page, so 2 pages = top 50
        pra = requests.get(url, headers=headers).content
        movie, scoreNum, reviewList, categoryList, url = grapInFo(pra)
        name += movie
        score += scoreNum
        review += reviewList
        category += categoryList
        i += 1

    # Open the database connection (recent PyMySQL versions require keyword arguments)
    db = pymysql.connect(host='127.0.0.1', user='root', password='',
                         database='pythontest', charset='utf8')
    cursor = db.cursor()  # cursor for executing SQL
    for (n, o, r, genres) in zip(name, score, review, category):
        print(n, o, r, ' '.join(genres))
        for g in genres:
            if g not in GENRE_TABLES:  # no table for this genre; skip it
                continue
            # Table names can't be bound as parameters (hence the whitelist above);
            # the values are bound, so quotes in titles or reviews can't break the SQL
            sql = f'INSERT INTO `{g}表` (name, score, review) VALUES (%s, %s, %s)'
            try:
                cursor.execute(sql, (n, float(o), r))
                db.commit()
            except pymysql.MySQLError as e:
                print('insert failed:', e)
                db.rollback()
    db.close()


if __name__ == '__main__':
    main()
```

Python (second version):

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
import requests
import pymysql
from bs4 import BeautifulSoup

url = "https://movie.douban.com/top250"
headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko'}


# Parse the list pages and store each movie in its genre tables
def praseHtml(url, headers):
    currenturl = url
    i = 1     # running movie number
    page = 1  # page number
    # Open the database connection (recent PyMySQL versions require keyword arguments)
    db = pymysql.connect(host="127.0.0.1", user="root", password="",
                         database="pythontest", charset="utf8")
    cursor = db.cursor()  # cursor for executing SQL
    while currenturl:
        html = requests.get(currenturl, headers=headers).content  # page source
        soup = BeautifulSoup(html, 'html.parser')
        # Parse the current page and pull out the fields we want
        moveList = soup.find('ol', class_='grid_view')
        for moveLi in moveList.find_all('li'):
            movieName = moveLi.find('span', attrs={'class': 'title'}).get_text()  # title
            star = moveLi.find('span', attrs={'class': 'rating_num'}).get_text()  # rating
            inq = moveLi.find('span', attrs={'class': 'inq'})                     # one-line review
            summary = inq.get_text() if inq else '无'  # some movies have no review line
            info = moveLi.find('div', attrs={'class': 'bd'}).find('p').get_text()
            category = info[info.rfind('/') + 1:].split()  # genres, e.g. ['犯罪', '剧情']
            print(str(i) + ": " + movieName + " " + star + " " + summary + " " + " ".join(category))
            for genre in category:
                # Values are bound as parameters; the table name comes from the genre
                sql = f"INSERT INTO `{genre}表` (name, score, review) VALUES (%s, %s, %s)"
                try:
                    cursor.execute(sql, (movieName, float(star), summary))
                    db.commit()
                except pymysql.MySQLError as e:  # e.g. no table exists for this genre
                    print(e)
                    db.rollback()
            i += 1
        # Next page. On the last page <span class="next"> has no <a>, so find() returns None;
        # writing next = nextpage['href'] directly fails with "'NoneType' object is not subscriptable"
        nextpage = soup.find('span', attrs={'class': 'next'}).find('a')
        currenturl = url + nextpage['href'] if nextpage else None
        page += 1
        if page == 3:  # stop after 2 pages (top 50)
            break
    db.close()


praseHtml(url, headers)
```

I wrote two versions. They work the same way, so feel free to compare them.
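Both versions rely on the same idea: each movie is inserted once into the table of every genre it belongs to. The sketch below isolates just that routing step. It uses Python's built-in `sqlite3` with an in-memory database as a stand-in for MySQL, so it runs without a server. Note that SQLite uses `?` placeholders where PyMySQL uses `%s`. The `store_movie` helper, the three-genre whitelist, and the sample rows are hypothetical placeholders, not scraped data.

```python
import sqlite3

GENRES = ['剧情', '犯罪', '纪录片']  # a few genre tables for the demo (assumption)


def store_movie(conn, name, score, review, genres):
    """Insert one movie into the table of each of its genres; return the tables written."""
    written = []
    for g in genres:
        if g not in GENRES:  # table names can't be bound as parameters, so whitelist them
            continue
        table = g + '表'
        # Values are bound as parameters, so quotes in titles/reviews are handled safely
        conn.execute(f'INSERT INTO "{table}" (name, score, review) VALUES (?, ?, ?)',
                     (name, score, review))
        written.append(table)
    conn.commit()
    return written


conn = sqlite3.connect(':memory:')
for g in GENRES:
    conn.execute(f'CREATE TABLE "{g}表" (id INTEGER PRIMARY KEY, name TEXT, score REAL, review TEXT)')

# Placeholder rows, not real scraped data
print(store_movie(conn, 'Movie A', 9.0, "It's a placeholder", ['犯罪', '剧情']))  # → ['犯罪表', '剧情表']
print(store_movie(conn, 'Movie B', 8.5, '无', ['纪录片', '音乐']))  # → ['纪录片表'] (no 音乐 table here)
print(conn.execute('SELECT name FROM "剧情表"').fetchall())  # → [('Movie A',)]
```

The whitelist skips genres without a table instead of letting the insert fail, and the apostrophe in the first review shows why bound parameters are safer than formatting values into the SQL string.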

This crawler is entry-level and fairly simple, so I won't explain it in much detail.

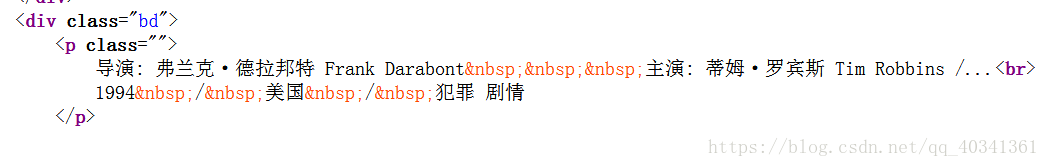

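One thing worth pointing out: the tricky part this time is Douban's genre information. A movie's `li` has no dedicated element for the genres. Instead, they are mixed into the same block of text as the other details, such as the year and country.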

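That makes the string handling fairly involved. I'm used to Java and its many libraries and am new to Python, so there is probably a better way to do this. If you know a cleaner approach, please leave a comment so I can learn from it.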
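One way to keep the genre handling manageable is to isolate it in a small function. This sketch assumes that the genres are the whitespace-separated words after the last `/` in the movie's info text, and that the fields around the slashes are padded with non-breaking spaces (`\xa0`). Splitting on whitespace, rather than removing the spaces and cutting the string into two-character pieces, also copes with three-character genres such as 纪录片. `parse_genres` and the sample string are hypothetical.

```python
def parse_genres(info):
    """Return the genres listed after the last '/' of a movie's info text."""
    tail = info[info.rfind('/') + 1:]  # rfind returns -1 if there is no '/', so tail = whole text
    return tail.split()  # str.split() with no argument also splits on '\xa0' (non-breaking space)


# Hypothetical info text in the assumed shape: "... year / country / genres"
sample = '导演: 某某\xa0\xa0\xa0主演: 某某 /...\n  1994\xa0/\xa0美国\xa0/\xa0纪录片 剧情\n'
print(parse_genres(sample))  # → ['纪录片', '剧情']
```

Because `rfind` looks for the last slash, any slashes earlier in the text (for example, in the cast list) don't affect the result.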

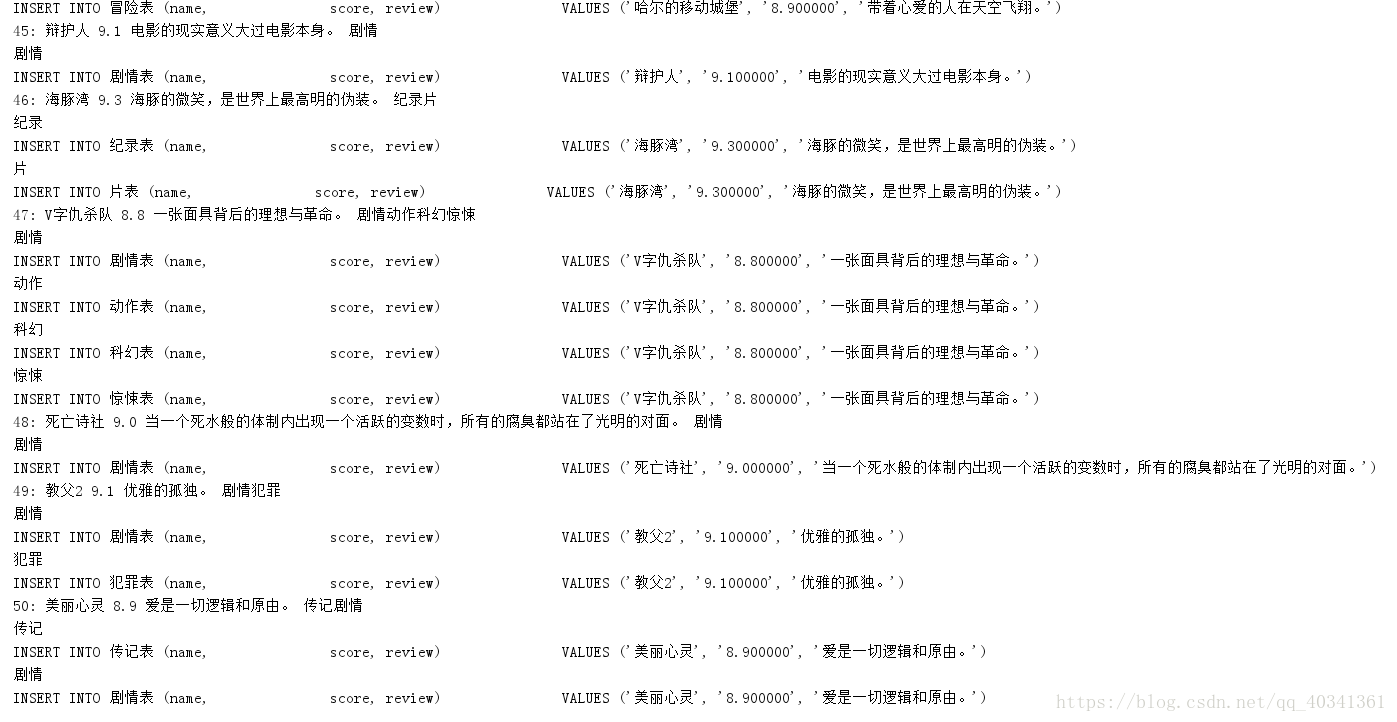

And here are the results of a run:

Crawlers are fun and quite useful. When I have time, I'll keep learning and try writing other kinds of crawlers.