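Article list

1. TensorFlow Deep Learning Primer - 01. Fundamentals

2. TensorFlow Deep Learning Primer - 02. Fundamentals

3. TensorFlow Deep Learning Primer - 03. MNIST classification with softmax regression

4. TensorFlow Deep Learning Primer - 04. Autoencoder (denoising MNIST images corrupted with Gaussian white noise)

5. TensorFlow Deep Learning Primer - 05. MNIST classification with a multilayer perceptron

6. TensorFlow Deep Learning Primer - 06. The TensorBoard visualization tool

7. TensorFlow Deep Learning Primer - 07. Overview of convolutional neural networks

8. TensorFlow Deep Learning Primer - 08. AlexNet (MNIST classification)

9. TensorFlow Deep Learning Primer - 09. A detailed guide to tf.contrib.slim

10. TensorFlow Deep Learning Primer - 10. VGGNets16 (slim implementation)

11. TensorFlow Deep Learning Primer - 11. GoogLeNet (Inception V3, slim implementation)

…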

TensorFlow Deep Learning Primer - 04. Autoencoder (denoising MNIST images corrupted with Gaussian white noise)

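For the mathematical principles of autoencoders, see 【】. Below we focus on implementing a denoising autoencoder. The code targets the TensorFlow 1.x API. First, import the required libraries: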

import numpy as np

import sklearn.preprocessing as prep

import tensorflow as tf

import matplotlib.pyplot as plt

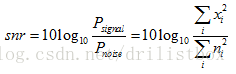

from tensorflow.examples.tutorials.mnist import input_data接着定义了生成高斯噪声的函数wgn,由于事先预定数组x的每一列代表一个实例数据,所以分为1维(即只有一个实例)以及2维(每一行代表一个实例)这两种情况分别进行噪声生成,并通过设定信噪比SNR来决定添加噪声的大小,信噪比的定义如下:

相应的程

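    def wgn(x, snr):
        """Return Gaussian white noise for x at the given SNR (in dB)."""
        snr = 10 ** (snr / 10.0)  # convert dB to a linear power ratio
        if len(x.shape) == 1:
            # a single instance: average the power over its elements
            xpower = np.sum(x ** 2) / len(x)
            npower = xpower / snr
            return np.random.randn(len(x)) * np.sqrt(npower)
        elif len(x.shape) == 2:
            # one instance per row: compute signal and noise power row by row
            xpower = np.sum(x ** 2, 1) / x.shape[1]
            npower = xpower / snr
            # equivalent alternatives:
            # return np.array([(power**0.5 * np.random.randn(x.shape[1])).tolist() for power in npower])
            # return np.array([(np.random.normal(0.0, power**0.5, x.shape[1])).tolist() for power in npower])
            return np.array([(power**0.5 * np.random.standard_normal(x.shape[1])).tolist() for power in npower])

Next comes standardization. Because the activation function is sigmoid, standardizing the input data helps keep the neurons in their active (non-saturated) region, which speeds up convergence. In this program the standardization is carried out with sklearn's StandardScaler when the data is loaded.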
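The source does not show a standalone standardization function, so the following is only a minimal NumPy sketch of what per-feature standardization does, assuming the statistics are computed on the training data alone and then reused for the test data. The names fit_standardizer and standardize and the random toy arrays are hypothetical and are not part of the original program, which relies on StandardScaler instead.

```python
import numpy as np

def fit_standardizer(train):
    """Per-feature mean and standard deviation of the training data.

    A zero standard deviation (a constant feature) is replaced by 1 so
    that the division below stays well defined.
    """
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    std = np.where(std == 0.0, 1.0, std)
    return mean, std

def standardize(x, mean, std):
    """Shift and scale x with statistics obtained from the training data."""
    return (x - mean) / std

rng = np.random.default_rng(0)
train = rng.uniform(0.0, 1.0, size=(1000, 5))  # toy stand-in for training images
train[:, 0] = 0.0                              # a constant feature, e.g. an always-black pixel
test = rng.uniform(0.0, 1.0, size=(200, 5))    # toy stand-in for test images

mean, std = fit_standardizer(train)
train_std = standardize(train, mean, std)
test_std = standardize(test, mean, std)        # same mean and std as for training

print(train_std.mean(axis=0).round(6))
print(train_std.std(axis=0).round(6))
```

After this transform the non-constant training features have zero mean and unit variance, which keeps the pre-activations of a sigmoid layer in a moderate range at the start of training.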

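The function xavier_init draws the initial weights uniformly from the interval bounded by ±constant·sqrt(6 / (fan_in + fan_out)), i.e. Xavier (Glorot) uniform initialization:

    def xavier_init(fan_in, fan_out, constant=1):
        low = -constant * np.sqrt(6.0 / (fan_in + fan_out))
        high = constant * np.sqrt(6.0 / (fan_in + fan_out))
        return tf.random_uniform((fan_in, fan_out), minval=low, maxval=high, dtype=tf.float32)

Next we define the denoising autoencoder class. Besides the input layer, this autoencoder has one hidden layer and one output layer. The hidden layer uses the sigmoid activation, and the output layer uses the linear activation f(x)=x. The loss is the squared error plus an L1 term on the hidden-layer weights. The class also defines a predictRun function that returns the noisy image data together with the reconstructed image data, which is convenient for plotting and inspecting the results.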
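As a rough illustration of the computation just described, here is a plain NumPy sketch of one forward pass and the cost: noise at a given SNR is added to the input, the hidden layer applies a sigmoid, the output layer is linear, and the cost is the squared error against the clean input plus an L1 term on the encoder weights. The toy sizes, the random input, and the helper name sigmoid are assumptions made for illustration only. The sketch does no training and is not the TensorFlow implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
n_input, n_hidden, batch = 8, 3, 4   # toy sizes (the article uses 784 and 300)
alpha, snr_db = 2.0, 20.0            # L1 weight and SNR in dB

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Xavier-uniform encoder weights; decoder weights and all biases start at zero
bound = np.sqrt(6.0 / (n_input + n_hidden))
w1 = rng.uniform(-bound, bound, size=(n_input, n_hidden))
b1 = np.zeros(n_hidden)
w2 = np.zeros((n_hidden, n_input))
b2 = np.zeros(n_input)

x = rng.normal(size=(batch, n_input))  # stand-in for a batch of standardized images

# per-row noise power = per-row signal power / 10^(SNR/10)
noise_power = np.mean(x ** 2, axis=1, keepdims=True) / 10 ** (snr_db / 10.0)
noise = rng.standard_normal(x.shape) * np.sqrt(noise_power)

hidden = sigmoid((x + noise) @ w1 + b1)   # encoder: sigmoid hidden layer
reconstruction = hidden @ w2 + b2         # decoder: linear output layer

squared_loss = 0.5 * np.sum((reconstruction - x) ** 2)  # compared with the clean x
l1_penalty = alpha * np.sum(np.abs(w1))                 # L1 term on encoder weights
cost = squared_loss + l1_penalty
print(cost)
```

Because the decoder starts at zero, the initial reconstruction is all zeros and the squared-error term equals half the total energy of the clean batch; training then has to reduce this term while the L1 term pushes the encoder weights toward sparsity.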

    class AGNAutoencoder(object):
        def __init__(self, layer_node):
            # alpha and learning_rate are module-level globals; they must be
            # defined before this class is instantiated
            self.n_input = layer_node[0]
            self.n_hidden = layer_node[1]
            self.transfer = tf.nn.sigmoid
            self.weights = {
                'w1': tf.Variable(xavier_init(self.n_input, self.n_hidden)),
                'b1': tf.Variable(tf.zeros([self.n_hidden], dtype=tf.float32)),
                'w2': tf.Variable(tf.zeros([self.n_hidden, self.n_input], dtype=tf.float32)),
                'b2': tf.Variable(tf.zeros([self.n_input], dtype=tf.float32))
            }
            self.x = tf.placeholder(tf.float32, [None, self.n_input])
            self.noise = tf.placeholder(tf.float32, [None, self.n_input])
            _, reconstruction = self.predict(self.x)
            # squared reconstruction error, measured against the clean input
            self.cost = 0.5 * tf.reduce_sum(tf.pow(tf.subtract(reconstruction, self.x), 2.0))
            tf.add_to_collection('losses', self.cost)
            # L1 term on the hidden-layer weights
            tf.add_to_collection('losses', tf.contrib.layers.l1_regularizer(alpha)(self.weights['w1']))
            self.cost = tf.add_n(tf.get_collection('losses'))
            self.optimizer = tf.train.AdamOptimizer(learning_rate).minimize(self.cost)
            self.sess = tf.Session()
            self.sess.run(tf.global_variables_initializer())

        def Train_eval(self, x, snr):
            return self.sess.run([self.cost, self.optimizer], feed_dict={self.x: x, self.noise: wgn(x, snr)})

        def encoder(self, x):  # compression (encoding) function
            layer = self.transfer(tf.add(tf.matmul(x, self.weights['w1']), self.weights['b1']))
            return layer

        def decoder(self, x):  # decompression (decoding) function
            layer = tf.add(tf.matmul(x, self.weights['w2']), self.weights['b2'])
            return layer

        def predict(self, x):
            # Add the noise fed through self.noise (generated by wgn at the requested SNR).
            # The original listing added tf.random_normal((self.n_input,)) here, which
            # ignored both the fed noise and the SNR setting.
            xAddNoise = tf.add(x, self.noise)
            hidden = self.encoder(xAddNoise)
            return xAddNoise, self.decoder(hidden)

def predictRun(self, x, snr):

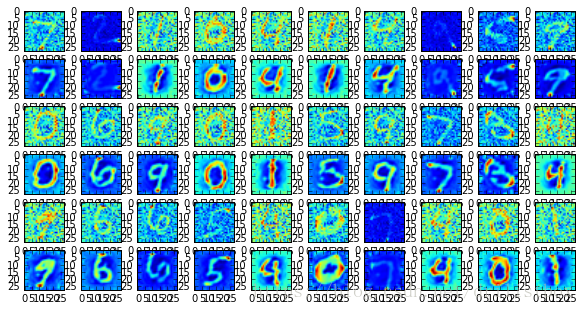

return self.sess.run(self.predict(self.x), feed_dict = {self.x: x, self.noise: wgn(x, snr)})下面开始加载数据及设置超参数及网络模型。需要注意的是,程序中用所有训练数据来获取标准化用的均值和方差,并在训练和测试时对所有数据都用这组均值和方差来进行标准化,以保住训练数据及测试数据的一致性。最后选取30个测试数据将含噪声的原始数据(奇数行)以及重构后的结果(偶数行)绘了出来。

#读入数据

mnist = input_data.read_data_sets('./../MNISTDat', one_hot = True)

snr = 20

#设置超参数

alpha = 2.0

learning_rate = 0.002 # 学习率

training_epochs = 10 # 训练轮数

batch_size = 128 # 每次训练的数据

display_step = 1 # 每隔多少轮显示一次训练结果

examples_to_show = 10 # 提示从测试集中选择10张图片取验证自动编码器的结果

#网络参数

layer_node = [784, 300]

preprocessor = prep.StandardScaler().fit(mnist.train.images)

agnac = AGNAutoencoder(layer_node)

for epoch in range(training_epochs):

avg_cost = 0.

n_samples = int(mnist.train.num_examples)

total_batch = int(n_samples / batch_size)

for i in range(total_batch):

batch_x, _ = mnist.train.next_batch(batch_size)

batch_x = preprocessor.transform(batch_x)

loss, _ = agnac.Train_eval(batch_x, snr)

avg_cost += loss / n_samples * batch_size

if epoch % display_step == 0:

print("Epoch:", '%04d' % (epoch + 1), "cost=", "{:.9f}".format(avg_cost))

examples_to_show = 10

X_testAddNoise, encode_decode = agnac.predictRun(preprocessor.transform(mnist.test.images[:examples_to_show]), snr)

examples_to_show = 30

X_testAddNoise, encode_decode = agnac.predictRun(preprocessor.transform(mnist.test.images[:examples_to_show]), snr)

f, a = plt.subplots(6, 10, figsize=(10, 5))

for j in range(3):

for i in range(10):

a[0+2*j][i].imshow(np.reshape(X_testAddNoise[i+10*j], (28, 28)))

a[1+2*j][i].imshow(np.reshape(encode_decode[i+10*j], (28, 28)))

f.show()

plt.draw()

agnac.sess.close()运行完后的结果如下:

TensorFlow implementation: https://pan.baidu.com/s/14A91inmZmSC55dgDv8ZZ3Q