using com.rfilkov.kinect;
using System;
using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.UI;
using Windows.Kinect;
using static com.rfilkov.kinect.KinectInterop;

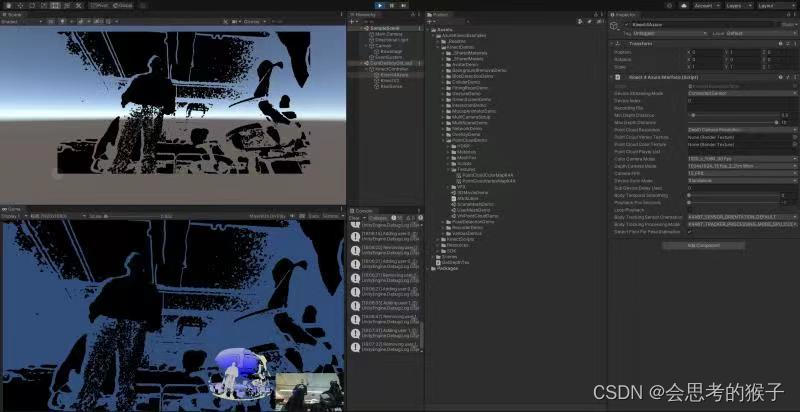

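public class GetDepthTex : MonoBehaviour
{
    KinectManager kinectManager;
    RawImage rawimage;
    ushort[] ushorts; // unused; left over from the GetRawDepthMap experiment in Start()

    // Despite its name, this texture holds the depth-derived mask, not an infrared image.
    Texture2D infraredTexture;

    private KinectInterop.SensorData sensorData = null;

    // Depth frame transformed into color-camera space, one ushort per color pixel.
    private ushort[] tDepthImage = null;

    // Timestamp of the most recent frame, written by GetColorCameraDepthFrame.
    ulong frameTime = 0;
    ulong lastUsedFrameTime = 0;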

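    // Start is called before the first frame update
    void Start()
    {
        kinectManager = KinectManager.Instance;
        rawimage = GetComponent<RawImage>();
        //ushorts = kinectManager.GetRawDepthMap(0);

        sensorData = (kinectManager != null && kinectManager.IsInitialized()) ?
            kinectManager.GetSensorData(0) : null;

        // Without an initialized sensor there is nothing to set up; stop here instead of dereferencing null.
        if (sensorData == null)
        {
            Debug.LogWarning("GetDepthTex: KinectManager is missing or not initialized.");
            enabled = false;
            return;
        }

        // Ask the sensor interface to produce depth frames transformed into color-camera space.
        sensorData.sensorInterface.EnableColorCameraDepthFrame(sensorData, true);

        // Buffer and texture sizes assume a 1920x1080 color image.
        tDepthImage = new ushort[1920 * 1080];
        print("sensorData.depthImageWidth: " + sensorData.depthImageWidth);
        infraredTexture = new Texture2D(1920, 1080, TextureFormat.RGBA32, false);
    }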

    // Update is called once per frame
    void Update()
    {
        //ushort[] transformedDepthFrame = sensorData.sensorInterface.GetColorCameraDepthFrame(sensorData, ref tDepthImage, ref frameTime);
        //if (transformedDepthFrame != null && frameTime != lastUsedFrameTime)
        //{
        //    lastUsedFrameTime = frameTime;
        //    // do something with the transformed depth frame
        //    //ushort[] yourData = sensorData.sensorInterface.GetColorCameraDepthFrame(sensorData, ref tDepthImage, ref frameTime);// wherever you get this from;
        //    byte[] byteData = new byte[sizeof(ushort) * transformedDepthFrame.Length];
        //    // On memory level copy the bytes from yourData into byteData
        //    Buffer.BlockCopy(transformedDepthFrame, 0, byteData, 0, byteData.Length);
        //    infraredTexture = new Texture2D(1920, 1080, TextureFormat.R16, false);
        //    infraredTexture.LoadRawTextureData(byteData);
        //    infraredTexture.Apply();
        //    rawimage.texture = infraredTexture;
        //}

        // Do nothing if the sensor was never set up in Start().
        if (kinectManager && sensorData != null)
        {
            //rawimage.texture = kinectManager.GetDepthImageTex(0);
            //rawimage.texture = kinectManager.GetColorImageTex(0);

            // Depth frame aligned to the color camera.
            ushort[] yourData = sensorData.sensorInterface.GetColorCameraDepthFrame(sensorData, ref tDepthImage, ref frameTime);

            // Skip the rebuild when no frame is available or this frame was already processed.
            if (yourData == null || frameTime == lastUsedFrameTime)
                return;
            lastUsedFrameTime = frameTime;

            var pixels = new Color[yourData.Length];
            print(yourData.Length);

            for (var i = 0; i < pixels.Length; i++)
            {
                // Build a mask rather than a greyscale image: pixels with a depth reading
                // become opaque black, pixels without one (depth 0) become fully transparent.
                float alpha = yourData[i] > 0 ? 1 : 0;
                pixels[i] = new Color(0, 0, 0, alpha);
            }

            // Apply all pixel colors in a single call instead of setting them one by one.
            infraredTexture.SetPixels(pixels);
#if UNITY_EDITOR
            // alphaIsTransparency is only available in the Editor, so keep it out of player builds.
            infraredTexture.alphaIsTransparency = true;
#endif
            infraredTexture.Apply();
            rawimage.texture = infraredTexture;
        }
    }

}