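The previous post covered GoogLeNet V1. V2 and V3 are described in the same paper, so we study them together here.

TensorFlow has released pre-trained versions of all these models: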

https://github.com/tensorflow/models/blob/master/slim/README.md#pre-trained-models

Paper list:

[v1] Going Deeper with Convolutions, 6.67% test error

http://arxiv.org/abs/1409.4842

[v2] Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift, 4.8% test error

http://arxiv.org/abs/1502.03167

[v3] Rethinking the Inception Architecture for Computer Vision, 3.5% test error

http://arxiv.org/abs/1512.00567

[v4] Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning, 3.08% test error

http://arxiv.org/abs/1602.07261

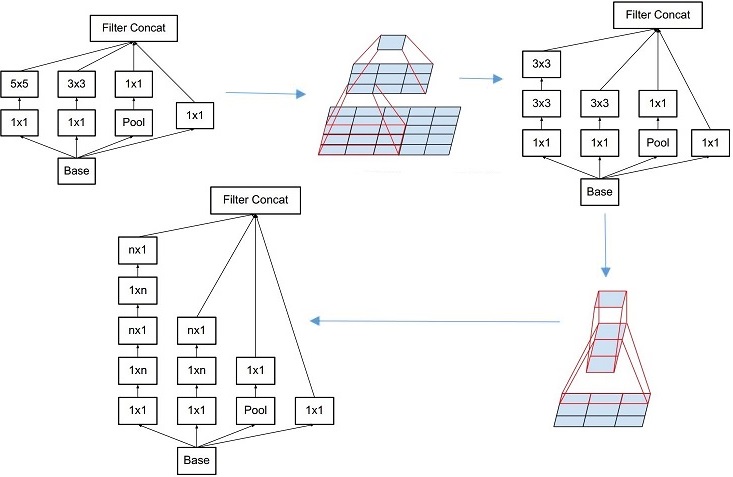

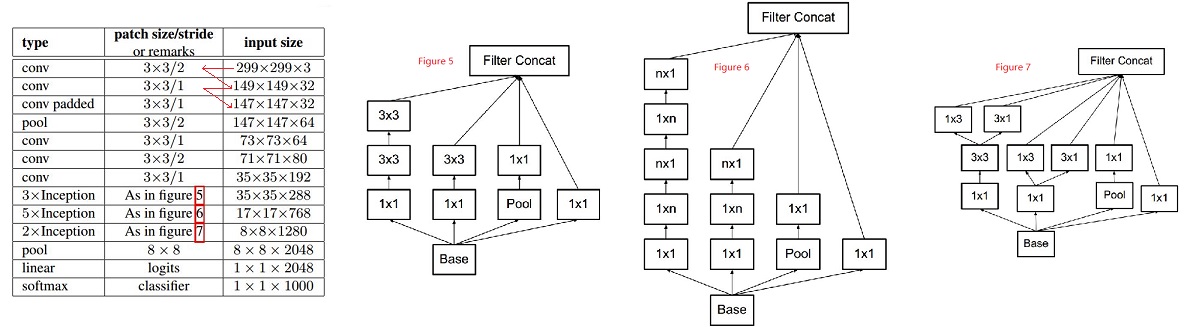

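- Inception v1 stacks 1x1, 3x3 and 5x5 convolutions together with 3x3 pooling in a single module. This widens the network and also makes it more adaptable to features at different scales.

- v2 improves on v1 in two ways. First, it adds BN (Batch Normalization) layers, which reduce Internal Covariate Shift (the change in the distribution of internal neuron inputs during training) by normalizing each layer's output to roughly N(0, 1). Second, following VGG, it replaces the 5x5 convolution in the Inception module with two 3x3 convolutions, which reduces the parameter count and speeds up computation.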

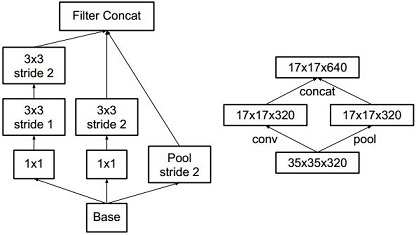

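- The most important change in v3 is factorization: a 7x7 convolution is decomposed into two one-dimensional convolutions (1x7 and 7x1), and likewise 3x3 into 1x3 and 3x1. This speeds up computation (the freed-up compute can be spent on a deeper network), and splitting one conv into two adds depth and therefore nonlinearity. Also worth noting: the network input grows from 224x224 to 299x299, and the 35x35, 17x17 and 8x8 modules are designed more carefully.

- v4 asks whether combining Inception modules with residual connections helps. The finding is that the ResNet-style structure greatly speeds up training and also improves performance, yielding the Inception-ResNet v2 network. The paper also designs a deeper, more optimized Inception v4 model that reaches performance comparable to Inception-ResNet v2.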
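To make the v2 change concrete, here is a minimal sketch that counts weights for one 5x5 convolution versus two stacked 3x3 convolutions, and checks that the stacked pair still sees a 5x5 window. The channel count of 64 and both helper functions are assumptions chosen for illustration, not values from the papers; biases are ignored.

```python
def conv_weights(kernel_h, kernel_w, in_channels, out_channels):
    """Number of weights in one convolution layer (biases ignored)."""
    return kernel_h * kernel_w * in_channels * out_channels


def stacked_receptive_field(kernel_sizes):
    """Receptive field of stride-1 convolutions applied one after another."""
    field = 1
    for k in kernel_sizes:
        field += k - 1
    return field


channels = 64  # assumed example width, used for both input and output

single_5x5 = conv_weights(5, 5, channels, channels)
double_3x3 = 2 * conv_weights(3, 3, channels, channels)

print(single_5x5, double_3x3)            # 102400 73728
print(stacked_receptive_field([3, 3]))   # 5
```

With equal input and output widths the ratio is 18/25, so the stacked version uses 28% fewer weights while covering the same 5x5 window.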

http://blog.csdn.net/shuzfan/article/details/50738394

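When GoogLeNet V1 appeared, probably only VGGNet had comparable performance, and both have since been applied successfully in many areas beyond image classification. GoogLeNet, however, is clearly more computationally efficient than VGGNet: it has only about 5 million parameters, roughly 1/12 of AlexNet (the GoogLeNet caffemodel is about 50 MB, whereas the VGGNet caffemodel exceeds 600 MB).

GoogLeNet performs well, but simply scaling up the Inception structure to build a larger network immediately drives up the computational cost. In addition, the V1 paper gave no clear description of what to watch out for when constructing Inception modules. The authors therefore begin this paper with general guidelines and optimization methods that have proven effective for scaling up networks. These guidelines apply to, but are not limited to, the Inception structure.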

General Design Principles

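The following principles are drawn from large-scale experiments, so they are partly speculative, but in practice they have largely proven effective.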

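1. Avoid representational bottlenecks, especially early in the network. Information flowing forward should not pass through a highly compressed layer, i.e. a representational bottleneck. From input to output, the width and height of the feature maps generally shrink gradually, but they should not become very small all at once. Starting with kernel = 7, stride = 5, for example, is clearly inappropriate.

   The number of output channels (each layer's num_output), on the other hand, generally increases gradually; otherwise the network becomes hard to train. (Feature dimensionality does not by itself measure information content; it only serves as a rough estimate.)

2. Higher-dimensional features are easier to process. They are easier to separate, which speeds up training.

3. Spatial aggregation can be done over lower-dimensional embeddings without losing much information. For example, the input can be reduced in dimension before a 3x3 convolution without serious adverse effects. If the information is easily compressible, training becomes faster.

4. Balance the width and depth of the network.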
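Principle 3 can be illustrated with a rough cost count: a 1x1 convolution that reduces the channel dimension before a 3x3 convolution cuts the number of multiplications considerably. The grid size and channel counts below are assumed example values, and `conv_multiplies` is a hypothetical helper written for this sketch.

```python
def conv_multiplies(height, width, kernel, in_channels, out_channels):
    """Multiplications for a stride-1, same-padded kernel x kernel convolution."""
    return height * width * kernel * kernel * in_channels * out_channels


# Assumed example values, not taken from the paper.
grid = 35
in_ch, reduced_ch, out_ch = 256, 64, 96

# 3x3 convolution applied directly to the 256-channel input.
direct = conv_multiplies(grid, grid, 3, in_ch, out_ch)

# 1x1 reduction to 64 channels first, then the 3x3 convolution.
reduced = (conv_multiplies(grid, grid, 1, in_ch, reduced_ch)
           + conv_multiplies(grid, grid, 3, reduced_ch, out_ch))

print(direct)    # 270950400
print(reduced)   # 87808000
```

Under these assumed sizes the reduced path needs roughly a third of the multiplications of the direct 3x3 convolution.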

1-Motivation

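The authors argue that as parameters change during training, the distribution of the inputs to every subsequent layer changes as well, and learning has to keep adapting each layer to its new input distribution. As a result, we are forced to lower the learning rate and initialize carefully. The authors call this change in distribution internal covariate shift.

As most readers know, we usually subtract the mean from the input when training a network, and some people also whiten the input, all to speed up training. Here is a brief explanation of why mean subtraction and whitening help:

First, image data is highly correlated; suppose its distribution looks like figure a (simplified to 2 dimensions). Since parameters are usually initialized with zero mean, the initial fit y = Wx + b passes near the origin, as shown by the red dashed line in figure b. The network therefore needs many updates to gradually reach a fit like the purple solid line, i.e. convergence is slow. If we first subtract the mean from the input data, as in figure c, learning clearly speeds up. Going one step further and decorrelating the data makes it easier to separate, which speeds up training again, as in figure d.

There are several ways to whiten data. A common one is PCA whitening: apply PCA to the data and then normalize the variance. The data then has approximately zero mean, unit variance and weak correlation. The authors first considered whitening the input of every layer, but concluded that this was impractical: whitening requires computing the covariance matrix, matrix inversion and similar operations, which is expensive, and the whitening operation is not necessarily differentiable during backpropagation. They therefore adopt the normalization method below.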

2-Normalization via Mini-Batch Statistics

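Normalizing the data is straightforward: give each dimension zero mean and unit variance, as in the following formula:

$$\hat{x}^{(k)} = \frac{x^{(k)} - \mathrm{E}[x^{(k)}]}{\sqrt{\mathrm{Var}[x^{(k)}]}}$$

The BN paper additionally introduces a learnable scale $\gamma^{(k)}$ and shift $\beta^{(k)}$ for each dimension so that the layer keeps its representational power. The final output is therefore:

$$y^{(k)} = \gamma^{(k)} \hat{x}^{(k)} + \beta^{(k)}$$

The formulas above use the mean E and the variance Var. Ideally, E and Var would be computed over the entire dataset, but that is clearly impractical. The authors therefore simplify: the mean and variance of one mini-batch serve as estimates of the mean and variance of the whole dataset.

The complete BN algorithm for a mini-batch $B = \{x_1, \dots, x_m\}$ of one activation is as follows, where $\epsilon$ is a small constant added for numerical stability:

$$\mu_B = \frac{1}{m}\sum_{i=1}^{m} x_i$$

$$\sigma_B^2 = \frac{1}{m}\sum_{i=1}^{m} (x_i - \mu_B)^2$$

$$\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}$$

$$y_i = \gamma \hat{x}_i + \beta$$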
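The mini-batch normalization described in this section can be sketched in plain Python for a single feature. This is only an illustration of the training-time forward pass: the function name, the default `eps=1e-5` and the sample activations are assumptions, and the running statistics used at inference time are left out.

```python
import math


def batch_norm_forward(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature over a mini-batch, then scale and shift it.

    `batch` is a list of scalar activations of a single feature,
    one value per example in the mini-batch.
    """
    m = len(batch)
    mean = sum(batch) / m                            # mini-batch mean
    var = sum((x - mean) ** 2 for x in batch) / m    # mini-batch (biased) variance
    inv_std = 1.0 / math.sqrt(var + eps)             # eps avoids division by zero
    normalized = [(x - mean) * inv_std for x in batch]
    return [gamma * x_hat + beta for x_hat in normalized]


activations = [2.0, 4.0, 6.0, 8.0]   # assumed toy mini-batch
out = batch_norm_forward(activations)

out_mean = sum(out) / len(out)
out_var = sum((y - out_mean) ** 2 for y in out) / len(out)
print(out_mean, out_var)   # close to 0 and close to 1
```

With `gamma=1` and `beta=0` the output has approximately zero mean and unit variance; other values of `gamma` and `beta` let the layer rescale and shift the normalized values.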

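1. Applying an n×1 convolution followed by a 1×n convolution covers the same n×n receptive field as a direct n×n convolution. (The two are exactly equivalent only when the n×n kernel is separable, i.e. the outer product of a column filter and a row filter.) The original text reads: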

In theory, we could go even further and argue that one can replace any n × n convolution by a 1 × n convolution followed by a n × 1 convolution

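2. Asymmetric convolutions reduce the amount of computation. This is easy to see: each output position originally needs n×n multiplications per input/output channel pair, and after factorization it needs 2×n. The larger n is, the greater the savings. The original text reads: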

the computational cost saving increases dramatically as n grows.

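3. Although it reduces computation, this approach does not work everywhere. Asymmetric convolutions work well on feature maps between 12×12 and 20×20 in size; the reason is not given. The original text reads: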

In practice, we have found that employing this factorization does not work well on early layers, but it gives very good results on medium grid-sizes (On m×m feature maps, where m ranges between 12 and 20).
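The three points above can be checked on a toy example. The sketch below uses a small pure-Python cross-correlation (a hypothetical helper, not a library call) to show that an n×1 pass followed by a 1×n pass reproduces the n×n result when the n×n kernel is an outer product, and then prints the per-position multiplication counts for n = 3 and n = 7. The image and filter values are assumed toy data.

```python
def correlate2d_valid(image, kernel):
    """Valid 2D cross-correlation of nested lists (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


# Assumed toy data: a 5x5 integer image and a separable 3x3 kernel.
image = [[(3 * r + 2 * c) % 7 for c in range(5)] for r in range(5)]
column = [1, 2, 1]     # n x 1 filter
row = [1, 0, -1]       # 1 x n filter
full_kernel = [[cv * rv for rv in row] for cv in column]  # outer product, n x n

direct = correlate2d_valid(image, full_kernel)
factorized = correlate2d_valid(
    correlate2d_valid(image, [[cv] for cv in column]),  # n x 1 pass
    [row])                                              # 1 x n pass

print(direct == factorized)   # True

# Multiplications per output position: n*n for the full kernel, 2*n factorized.
for n in (3, 7):
    saving = 1 - (2 * n) / (n * n)
    print(n, n * n, 2 * n, round(saving, 2))
# 3 9 6 0.33
# 7 49 14 0.71
```

In the actual network a nonlinearity sits between the two one-dimensional convolutions, so the factorized block is not the same function as a single n×n convolution; the sketch only illustrates the linear case and the cost argument.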

```python
# This function uses the Keras 1.x API (`border_mode`, `subsample`, `merge`).
# `conv2d_bn` and the `*_WEIGHTS_PATH*` constants are module-level names
# that are not shown in this excerpt.
import warnings

from keras import backend as K
from keras.layers import (AveragePooling2D, Dense, Flatten, Input,
                          MaxPooling2D, merge)
from keras.models import Model
from keras.utils.data_utils import get_file
from keras.utils.layer_utils import convert_all_kernels_in_model


def InceptionV3(include_top=True, weights='imagenet',
                input_tensor=None):
    '''Instantiate the Inception v3 architecture,
    optionally loading weights pre-trained
    on ImageNet. Note that when using TensorFlow,
    for best performance you should set
    `image_dim_ordering="tf"` in your Keras config
    at ~/.keras/keras.json.

    The model and the weights are compatible with both
    TensorFlow and Theano. The dimension ordering
    convention used by the model is the one
    specified in your Keras config file.

    Note that the default input image size for this model is 299x299.

    # Arguments
        include_top: whether to include the fully-connected
            layer at the top of the network.
        weights: one of `None` (random initialization)
            or "imagenet" (pre-training on ImageNet).
        input_tensor: optional Keras tensor (i.e. output of `layers.Input()`)
            to use as image input for the model.

    # Returns
        A Keras model instance.
    '''
    if weights not in {'imagenet', None}:
        raise ValueError('The `weights` argument should be either '
                         '`None` (random initialization) or `imagenet` '
                         '(pre-training on ImageNet).')

    # Determine proper input shape
    if K.image_dim_ordering() == 'th':
        if include_top:
            input_shape = (3, 299, 299)
        else:
            input_shape = (3, None, None)
    else:
        if include_top:
            input_shape = (299, 299, 3)
        else:
            input_shape = (None, None, 3)

    if input_tensor is None:
        img_input = Input(shape=input_shape)
    else:
        if not K.is_keras_tensor(input_tensor):
            img_input = Input(tensor=input_tensor)
        else:
            img_input = input_tensor

    if K.image_dim_ordering() == 'th':
        channel_axis = 1
    else:
        channel_axis = 3

    # Stem: 299 x 299 input down to a 35 x 35 grid
    x = conv2d_bn(img_input, 32, 3, 3, subsample=(2, 2), border_mode='valid')
    x = conv2d_bn(x, 32, 3, 3, border_mode='valid')
    x = conv2d_bn(x, 64, 3, 3)
    x = MaxPooling2D((3, 3), strides=(2, 2))(x)

    x = conv2d_bn(x, 80, 1, 1, border_mode='valid')
    x = conv2d_bn(x, 192, 3, 3, border_mode='valid')
    x = MaxPooling2D((3, 3), strides=(2, 2))(x)

    # mixed 0, 1, 2: 35 x 35 x 256
    for i in range(3):
        branch1x1 = conv2d_bn(x, 64, 1, 1)

        branch5x5 = conv2d_bn(x, 48, 1, 1)
        branch5x5 = conv2d_bn(branch5x5, 64, 5, 5)

        branch3x3dbl = conv2d_bn(x, 64, 1, 1)
        branch3x3dbl = conv2d_bn(branch3x3dbl, 96, 3, 3)
        branch3x3dbl = conv2d_bn(branch3x3dbl, 96, 3, 3)

        branch_pool = AveragePooling2D(
            (3, 3), strides=(1, 1), border_mode='same')(x)
        branch_pool = conv2d_bn(branch_pool, 32, 1, 1)
        x = merge([branch1x1, branch5x5, branch3x3dbl, branch_pool],
                  mode='concat', concat_axis=channel_axis,
                  name='mixed' + str(i))

    # mixed 3: 17 x 17 x 736 (384 + 96 + 256 channels as written here)
    branch3x3 = conv2d_bn(x, 384, 3, 3, subsample=(2, 2), border_mode='valid')

    branch3x3dbl = conv2d_bn(x, 64, 1, 1)
    branch3x3dbl = conv2d_bn(branch3x3dbl, 96, 3, 3)
    branch3x3dbl = conv2d_bn(branch3x3dbl, 96, 3, 3,
                             subsample=(2, 2), border_mode='valid')

    branch_pool = MaxPooling2D((3, 3), strides=(2, 2))(x)
    x = merge([branch3x3, branch3x3dbl, branch_pool],
              mode='concat', concat_axis=channel_axis,
              name='mixed3')

    # mixed 4: 17 x 17 x 768
    branch1x1 = conv2d_bn(x, 192, 1, 1)

    branch7x7 = conv2d_bn(x, 128, 1, 1)
    branch7x7 = conv2d_bn(branch7x7, 128, 1, 7)
    branch7x7 = conv2d_bn(branch7x7, 192, 7, 1)

    branch7x7dbl = conv2d_bn(x, 128, 1, 1)
    branch7x7dbl = conv2d_bn(branch7x7dbl, 128, 7, 1)
    branch7x7dbl = conv2d_bn(branch7x7dbl, 128, 1, 7)
    branch7x7dbl = conv2d_bn(branch7x7dbl, 128, 7, 1)
    branch7x7dbl = conv2d_bn(branch7x7dbl, 192, 1, 7)

    branch_pool = AveragePooling2D((3, 3), strides=(1, 1), border_mode='same')(x)
    branch_pool = conv2d_bn(branch_pool, 192, 1, 1)
    x = merge([branch1x1, branch7x7, branch7x7dbl, branch_pool],
              mode='concat', concat_axis=channel_axis,
              name='mixed4')

    # mixed 5, 6: 17 x 17 x 768
    for i in range(2):
        branch1x1 = conv2d_bn(x, 192, 1, 1)

        branch7x7 = conv2d_bn(x, 160, 1, 1)
        branch7x7 = conv2d_bn(branch7x7, 160, 1, 7)
        branch7x7 = conv2d_bn(branch7x7, 192, 7, 1)

        branch7x7dbl = conv2d_bn(x, 160, 1, 1)
        branch7x7dbl = conv2d_bn(branch7x7dbl, 160, 7, 1)
        branch7x7dbl = conv2d_bn(branch7x7dbl, 160, 1, 7)
        branch7x7dbl = conv2d_bn(branch7x7dbl, 160, 7, 1)
        branch7x7dbl = conv2d_bn(branch7x7dbl, 192, 1, 7)

        branch_pool = AveragePooling2D(
            (3, 3), strides=(1, 1), border_mode='same')(x)
        branch_pool = conv2d_bn(branch_pool, 192, 1, 1)
        x = merge([branch1x1, branch7x7, branch7x7dbl, branch_pool],
                  mode='concat', concat_axis=channel_axis,
                  name='mixed' + str(5 + i))

    # mixed 7: 17 x 17 x 768
    branch1x1 = conv2d_bn(x, 192, 1, 1)

    branch7x7 = conv2d_bn(x, 192, 1, 1)
    branch7x7 = conv2d_bn(branch7x7, 192, 1, 7)
    branch7x7 = conv2d_bn(branch7x7, 192, 7, 1)

    branch7x7dbl = conv2d_bn(x, 160, 1, 1)
    branch7x7dbl = conv2d_bn(branch7x7dbl, 192, 7, 1)
    branch7x7dbl = conv2d_bn(branch7x7dbl, 192, 1, 7)
    branch7x7dbl = conv2d_bn(branch7x7dbl, 192, 7, 1)
    branch7x7dbl = conv2d_bn(branch7x7dbl, 192, 1, 7)

    branch_pool = AveragePooling2D((3, 3), strides=(1, 1), border_mode='same')(x)
    branch_pool = conv2d_bn(branch_pool, 192, 1, 1)
    x = merge([branch1x1, branch7x7, branch7x7dbl, branch_pool],
              mode='concat', concat_axis=channel_axis,
              name='mixed7')

    # mixed 8: 8 x 8 x 1280
    branch3x3 = conv2d_bn(x, 192, 1, 1)
    branch3x3 = conv2d_bn(branch3x3, 320, 3, 3,
                          subsample=(2, 2), border_mode='valid')

    branch7x7x3 = conv2d_bn(x, 192, 1, 1)
    branch7x7x3 = conv2d_bn(branch7x7x3, 192, 1, 7)
    branch7x7x3 = conv2d_bn(branch7x7x3, 192, 7, 1)
    branch7x7x3 = conv2d_bn(branch7x7x3, 192, 3, 3,
                            subsample=(2, 2), border_mode='valid')

    branch_pool = AveragePooling2D((3, 3), strides=(2, 2))(x)
    x = merge([branch3x3, branch7x7x3, branch_pool],
              mode='concat', concat_axis=channel_axis,
              name='mixed8')

    # mixed 9, 10: 8 x 8 x 2048
    for i in range(2):
        branch1x1 = conv2d_bn(x, 320, 1, 1)

        branch3x3 = conv2d_bn(x, 384, 1, 1)
        branch3x3_1 = conv2d_bn(branch3x3, 384, 1, 3)
        branch3x3_2 = conv2d_bn(branch3x3, 384, 3, 1)
        branch3x3 = merge([branch3x3_1, branch3x3_2],
                          mode='concat', concat_axis=channel_axis,
                          name='mixed9_' + str(i))

        branch3x3dbl = conv2d_bn(x, 448, 1, 1)
        branch3x3dbl = conv2d_bn(branch3x3dbl, 384, 3, 3)
        branch3x3dbl_1 = conv2d_bn(branch3x3dbl, 384, 1, 3)
        branch3x3dbl_2 = conv2d_bn(branch3x3dbl, 384, 3, 1)
        branch3x3dbl = merge([branch3x3dbl_1, branch3x3dbl_2],
                             mode='concat', concat_axis=channel_axis)

        branch_pool = AveragePooling2D(
            (3, 3), strides=(1, 1), border_mode='same')(x)
        branch_pool = conv2d_bn(branch_pool, 192, 1, 1)
        x = merge([branch1x1, branch3x3, branch3x3dbl, branch_pool],
                  mode='concat', concat_axis=channel_axis,
                  name='mixed' + str(9 + i))

    if include_top:
        # Classification block
        x = AveragePooling2D((8, 8), strides=(8, 8), name='avg_pool')(x)
        x = Flatten(name='flatten')(x)
        x = Dense(1000, activation='softmax', name='predictions')(x)

    # Create model
    model = Model(img_input, x)

    # load weights
    if weights == 'imagenet':
        if K.image_dim_ordering() == 'th':
            if include_top:
                weights_path = get_file('inception_v3_weights_th_dim_ordering_th_kernels.h5',
                                        TH_WEIGHTS_PATH,
                                        cache_subdir='models',
                                        md5_hash='b3baf3070cc4bf476d43a2ea61b0ca5f')
            else:
                weights_path = get_file('inception_v3_weights_th_dim_ordering_th_kernels_notop.h5',
                                        TH_WEIGHTS_PATH_NO_TOP,
                                        cache_subdir='models',
                                        md5_hash='79aaa90ab4372b4593ba3df64e142f05')
            model.load_weights(weights_path)
            if K.backend() == 'tensorflow':
                warnings.warn('You are using the TensorFlow backend, yet you '
                              'are using the Theano '
                              'image dimension ordering convention '
                              '(`image_dim_ordering="th"`). '
                              'For best performance, set '
                              '`image_dim_ordering="tf"` in '
                              'your Keras config '
                              'at ~/.keras/keras.json.')
                convert_all_kernels_in_model(model)
        else:
            if include_top:
                weights_path = get_file('inception_v3_weights_tf_dim_ordering_tf_kernels.h5',
                                        TF_WEIGHTS_PATH,
                                        cache_subdir='models',
                                        md5_hash='fe114b3ff2ea4bf891e9353d1bbfb32f')
            else:
                weights_path = get_file('inception_v3_weights_tf_dim_ordering_tf_kernels_notop.h5',
                                        TF_WEIGHTS_PATH_NO_TOP,
                                        cache_subdir='models',
                                        md5_hash='2f3609166de1d967d1a481094754f691')
            model.load_weights(weights_path)
            if K.backend() == 'theano':
                convert_all_kernels_in_model(model)
    return model
```
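To see where the 35 x 35, 17 x 17 and 8 x 8 grids and the channel counts come from, the layer arithmetic can be traced by hand. The sketch below is plain Python and does not call Keras. It assumes that `conv2d_bn` (not shown in this post) defaults to same padding and that the pooling layers default to valid padding; `out_size` is a hypothetical helper.

```python
def out_size(size, kernel, stride=1, padding='same'):
    """Spatial output size of a conv or pooling layer along one dimension."""
    if padding == 'valid':
        return (size - kernel) // stride + 1
    return -(-size // stride)   # ceil(size / stride) for same padding


size = 299
stem = [  # (kernel, stride, padding) for the layers before mixed0
    (3, 2, 'valid'), (3, 1, 'valid'), (3, 1, 'same'),
    (3, 2, 'valid'),                       # max pooling
    (1, 1, 'valid'), (3, 1, 'valid'),
    (3, 2, 'valid'),                       # max pooling
]
for kernel, stride, padding in stem:
    size = out_size(size, kernel, stride, padding)

grid_35 = size
grid_17 = out_size(grid_35, 3, 2, 'valid')   # stride-2 branches in mixed3
grid_8 = out_size(grid_17, 3, 2, 'valid')    # stride-2 branches in mixed8
print(grid_35, grid_17, grid_8)              # 35 17 8

# Channel counts are the sums of the concatenated branch widths.
mixed0 = 64 + 64 + 96 + 32           # 1x1, 5x5, double 3x3, pool branches
mixed3 = 384 + 96 + mixed0           # the max-pool branch keeps its input channels
mixed4 = 192 * 4
mixed8 = 320 + 192 + mixed4          # the pool branch keeps its input channels
mixed9 = 320 + 2 * 384 + 2 * 384 + 192
print(mixed0, mixed3, mixed4, mixed8, mixed9)   # 256 736 768 1280 2048
```

With the branch widths exactly as listed in the function, mixed3 concatenates 384 + 96 + 256 = 736 channels; the 17 x 17 stage reaches 768 channels from mixed4 onward.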