Title:Domain Adaptive Object Detection for Autonomous Driving under FoggyWeather

雾天环境下自动驾驶领域自适应目标检测

Abstract:Most object detection methods for autonomous drivingusually assume a consistent feature distribution betweentraining and testing data,which is not always the case whenweathers differ significantly.The object detection modeltrained under clear weather might be not effective enoughon the foggy weather because of the domain gap.This paperproposes a novel domain adaptive object detection frame-work for autonomous driving under foggy weather.Ourmethod leverages both image-level and object-level adap-tation to diminish the domain discrepancy in image styleand object appearance.To further enhance the model’scapabilities under challenging samples,we also come upwith a new adversarial gradient reversal layer to performadversarial mining for the hard examples together with do-main adaptation.Moreover,we propose to generate anauxiliary domain by data augmentation to enforce a newdomain-level metric regularization.Experimental resultson public benchmarks show the effectiveness and accu-racy of the proposed method.The code is available athttps://github.com/jinlong17/DA-Detect.

摘要:大多数用于自动驾驶的目标检测方法通常假设训练和测试数据之间具有一致的特征分布,当天气差异较大时,情况并不总是如此。在晴朗天气下训练的目标检测模型在雾天天气下可能会因为域间隙而不够有效。本文提出了一种新的雾天环境下自动驾驶领域自适应目标检测框架。我们的方法同时利用图像级和对象级自适应来减少图像风格和对象外观的领域差异。为了进一步增强模型在挑战样本下的能力,我们还提出了一个新的对抗梯度反转层,结合域适应对困难样本进行对抗挖掘。此外,我们提出通过数据增强来生成辅助域,以执行新的域级度量正则化。在公共基准测试集上的实验结果表明了所提方法的有效性和准确性。代码可在https://github.com/jinlong17/DA- Detect获取。

1.Introduction:

Autonomous driving has wide applications for intelli-gent transportation systems,such as improving the efficiencyin the automatic 24/7 working manner,reducing the laborcosts,enhancing the comfortableness of customers,and soon[23,51].With the computer vision and artificial intel-ligence techniques,object detection plays a critical role inautonomous driving to understand the surrounding drivingscenarios[54,59].In some cases,the autonomous vehiclemight work in the complex residential and industry areas.The diverse weather conditions might make the object de-tection in these environments more difficult.For example,the usages of heating,gas,coal,and vehicle emissions inresidential and industry areas might be possible to generatemore frequent foggy or hazy weather,leading to a significant challenge to the object detection system installed on theautonomous vehicle.

1介绍:

无人驾驶在智能交通系统中有着广泛的应用,如提高自动24 / 7工作方式的效率、降低人工成本、增强客户的舒适性等。随着计算机视觉和人工智能技术的发展,目标检测在自动驾驶中发挥着至关重要的作用,以了解周围的驾驶场景。在某些情况下,自动驾驶汽车可能工作在复杂的居民区和工业区。多样的天气状况可能使得这些环境中的目标检测更加困难。例如,在居民区和工业区使用暖气、煤气、煤和车辆排放可能会产生更频繁的雾天或雾霾天气,这对安装在自动驾驶车辆上的目标检测系统提出了重大挑战。

Many deep learning models such as Faster R-CNN[37],YOLO[36]have demonstrated great success in autonomousdriving.However,most of these well-known methods as-sume that the feature distributions of training and testing dataare homogeneous.Such an assumption may fail when takingthe real-world diverse weather conditions into account[40].For example,as shown in Fig.1,the Faster R-CNN modeltrained on the clear-weather data(source domain)is capableof detecting objects accurately under good weather,but itsperformance drops significantly when it comes to the foggyweather(target domain).This degradation is caused by thefeature domain gap between divergent weather conditions,as the model is unfamiliar with the feature distribution onthe target domain,while the detection performance could beimproved under the foggy weather with domain adaptation.

许多深度学习模型如Faster R- CNN [ 37 ]、YOLO [ 36 ]等在自动驾驶领域取得了巨大的成功。然而,这些著名的方法大多假设训练和测试数据的特征分布是均匀的。当考虑到现实世界中不同的天气条件时,这种假设可能会失败[ 40 ]。例如,如图1所示,在晴朗天气数据(源域)上训练的Faster R - CNN模型能够在好天气下准确检测目标,但在有雾天气(目标域)上其性能明显下降。这种退化是由不同天气条件下的特征域差距造成的,因为模型不熟悉目标域上的特征分布,而在有雾天气下通过域自适应可以提高检测性能。

Domain adaptation,as a technique of transfer learning,isto reduce the domain shift between various weathers.Thispaper proposes a novel domain adaptation framework toachieve robust object detection performance in autonomousdriving under foggy weather.As manually annotating im-ages under adverse weathers is usually time-consuming,our design follows an unsupervised fashion same as thatin[5,26,43],where clear-weather images(source domain)are well labeled and foggy weather images(target domain)have no annotations.Inspired by[5,15],our method lever-ages both image-level and object-level adaptation to diminishthe domain discrepancy in image style and object appear-ance jointly,which is realized by involving image-level andobject-level domain classifiers to enable our convolutionalneural networks generating domain-invariant latent featurerepresentations.Specifically,the domain classifiers aim tomaximize the probability of distinguishing the features pro-duced by different domains,whereas the detection modelexpects to generate the domain-invariant features to confusethe classifiers.

域适应作为迁移学习的一种技术,是为了减少不同天气之间的域偏移。本文提出了一种新颖的域自适应框架,以实现雾天环境下自动驾驶的鲁棒目标检测性能。由于在恶劣天气下人工标注图像通常很耗时,我们的设计遵循了与[ 5、26、43]相同的无监督方式,其中晴朗天气图像(源域)有很好的标注,有雾天气图像(目标域)没有标注。受[ 5、15]的启发,我们的方法同时利用图像级和对象级的自适应来共同减少图像风格和对象外观的域差异,这是由图像级和对象级域分类器使我们的卷积神经网络生成域不变的潜在特征表示来实现的。具体来说,领域分类器旨在最大化区分不同领域产生的特征的概率,而检测模型则期望生成领域不变的特征来混淆分类器。

This paper also addresses two critical insights that areignored by previous domain adaptation methods[5,9,15,26,61]:1)Different training samples might have different challenging levels to be fully harnessed during the transferlearning,while existing works usually omit such diversity;2)Previous domain adaptation methods only consider thesource domain and target domain for transfer learning,whilethe domain-level feature metric distance to the third relateddomain might be neglected.However,embedding the min-ing for hard examples and involving an extra related domainmight potentially further enhance the model’s robust learningcapabilities,which has not been carefully explored before.To emphasize these two insights,we propose a new Ad-versarial Gradient Reversal Layer(AdvGRL)and generatean auxiliary domain by data augmentation.The AdvGRLperforms adversarial mining for the hard examples to en-hance the model learning on the challenging scenarios,andthe auxiliary domain enforces a new domain-level metricregularization during the transfer learning.Experimentalresults on the public benchmarks Cityscapes[7]and FoggyCityscapes[40]show the effectiveness of each proposedcomponent and the superior object detection performanceover the baseline and comparison methods.Overall,thecontributions of this paper are summarized as follows:

本文还提出了两个被先前的领域自适应方法[ 5、9、15、26、61]忽略的关键见解:1 )不同的训练样本在迁移学习过程中可能有不同的挑战水平需要充分利用,而现有的工作通常忽略了这种多样性;2 )以往的域适应方法仅考虑源域和目标域进行迁移学习,而域级特征度量到第三个相关域的距离可能被忽略。然而,嵌入对困难示例的挖掘和涉及额外的相关域可能会进一步增强模型的鲁棒学习能力,而这在以前还没有仔细研究过。为了强调这两个见解,我们提出了一个新的对抗梯度反转层( Ad-versarial Gradient Reversal Layer,AdvGRL ),并通过数据增强生成一个辅助域。AdvGRL对困难样本进行对抗挖掘以增强模型在挑战性场景下的学习,辅助域在迁移学习过程中执行新的域级度量正则化。在公共基准测试集Cityscapes [ 7 ]和FoggyCityscapes [ 40 ]上的实验结果表明了本文提出的每个组件的有效性以及优于基准和对比方法的目标检测性能。总体而言,本文的贡献总结如下:

•We propose a novel deep transfer learning baseddomain adaptive object detection framework for au-tonomous driving under foggy weather,including theimage-level and object-level adaptations,which istrained with labeled clear-weather data and unlabeledfoggy-weather data to enhance the generalization abilityof the deep learning based object detection model.

•We propose a new Adversarial Gradient Reversal Layer(AdvGRL)to perform adversarial mining for the hardexamples together with the domain adaptation to furtherenhance the model’s transfer learning capabilities underchallenging samples.

•We propose a new domain-level metric regularizationduring the transfer learning.By generating an auxiliarydomain with data augmentation,the domain-level met-ric constraint between source domain,auxiliary domain,and target domain is ensured as regularization duringthe transfer learning.

• 我们提出了一种新的基于深度迁移学习的雾天自动驾驶领域自适应目标检测框架,包括图像级和目标级自适应,分别使用有标记的晴空数据和无标记的雾天数据进行训练,以增强基于深度学习的目标检测模型的泛化能力。

• 我们提出了一种新的对抗梯度翻转层( Adversarial Gradient Reversal Layer,AdvGRL )对困难样本进行对抗挖掘,并结合领域自适应进一步提升模型在挑战样本下的迁移学习能力。

• 我们在迁移学习过程中提出了一种新的领域级度量正则化。通过生成带有数据增强的辅助域,在迁移学习过程中保证源域、辅助域和目标域之间的域级度量约束为正则化。

2.Related Work

2.1.Object detection for autonomous driving

Recent advancement in deep learning has brought out-standing progress in autonomous driving[6,25,33,53],andobject detection has been one of the most active topic underthis field[8,41,45,59].Regarding the network architecture,current object detection algorithms can be roughly split intotwo categories:two-stage methods and single-stage methods.Two-stage object detection algorithms typically composeof two processes:1)region proposal,2)object classifica-tion and localization refinement.R-CNN[14]is the firstwork for this kind of methods,it applies selective search forregional proposals and independent CNNs for each objectprediction.Fast R-CNN[13]improves R-CNN by obtainingobject features from the shared feature map learned by oneCNN.Faster R-CNN[37]further enhances the frameworkby proposing Region Proposal Network(RPN)to replacethe selective search stage.Single-stage object detectionalgorithms predict object bounding boxes and classes si-multaneously in one same stage.These methods usuallyleverage pre-defined anchors to classify objects and regressbounding boxes,they are less time-consuming but less ac-curate compared to two-stage algorithms.Milestones forthis category include SSD-series[29],YOLO-series[36]and RetinaNet[28].Despite their success in clear-weathervisual scenes,these object detection methods might not beemployed in autonomous driving directly due to the complexreal-world weather conditions.

2. 相关工作

2.1 面向自动驾驶的目标检测

近年来,深度学习的发展为自动驾驶[ 6、25、33、53]带来了突破性的进展,目标检测也成为[ 8、41、45、59]领域最活跃的研究课题之一。关于网络架构,目前的目标检测算法大致可以分为两类:两阶段方法和单阶段方法。两阶段目标检测算法通常由两个过程组成:1 )区域建议;2 )目标分类和定位精化。R-CNN [ 14 ]是这类方法的第一个工作,它对每个目标预测应用选择性搜索区域建议和独立的CNN。Fast R- CNN [ 13 ]通过从一个CNN学习到的共享特征图中获取目标特征来改进R - CNN。Faster R- CNN [ 37 ]通过提出区域建议网络( RPN )来代替选择性搜索阶段,进一步增强了框架。单阶段目标检测算法在同一阶段同时预测目标框和类。这些方法通常利用预定义的锚点来分类对象和回归边界框,与两阶段算法相比,它们的耗时较少,但精度较低。该类别的里程碑包括SSD系列[ 29 ]、YOLO系列[ 36 ]和Retina Net [ 28 ]。尽管这些目标检测方法在晴朗的视觉场景中取得了成功,但由于复杂的实际天气条件,这些目标检测方法可能无法直接用于自动驾驶。

2.2.Object detection for autonomous driving underdifferent weather

In order to address the diverse weather conditions en-countered in autonomous driving,many datasets have beengenerated[31,32,34,40]and many methods have been pro-posed[2,17,18,22,35,42,44]in recent years.For example,Foggy Cityscape[40]is a synthetic dataset that applies fogsimulation to Cityscape for scene understanding in foggyweather.TJU-DHD[32]is a diverse dataset for object de-tection in real-world scenarios which contains variances interms of illumination,scene,weather and season.In this paper,we focus on the object detection problem in foggyweather.Huang et al.[22]propose a DSNet(Dual-SubnetNetwork)that involves a detection subnet and a restorationsubnet.This network can be trained with multi-task learningby combining visibility enhancement task and object detec-tion task,thus outperforms pure object detectors.Hahneret al.[17]develop a fog simulation approach to enhanceexisting real lidar dataset,and show this approach can beleveraged to improve current object detection methods infoggy weather.Qian et al.[35]propose a MVDNet(Multi-modal Vehicle Detection Network)that takes advantage oflidar and radar signals to obtain proposals.Then the region-wise features from these two sensors are fused together toget final detection results.Bijelic et al.[2]develop a networkthat takes the data from four sensors as input:lidar,RGBcamera,gated camera,and radar.This architecture usesentropy-steered adaptive deep fusion to get fused featuremaps for prediction.These methods typically rely on inputdata from other sensors rather than RGB camera itself,whichis not the general case for many autonomous driving cars.Thus we aim to develop an object detection architecture thatonly takes RGB camera data as input in this work.

2.2 不同天气下自动驾驶的目标检测

为了应对自动驾驶中遇到的多样天气条件,近年来产生了许多数据集[ 31、32、34、40]和提出了许多方法[ 2、17、18、22、35、42、44]。例如,Foggy市景[ 40 ]是将雾模拟应用于市景的合成数据集,用于雾天场景理解。TJU-DHD [ 32 ]是一个用于现实场景中目标检测的多样化数据集,包含光照、场景、天气和季节等变化。在这篇 论文中,我们重点研究了雾天的目标检测问题。Huang等[ 22 ]提出了一种包含检测子网和恢复子网的DSNet ( Dual-SubnetNetwork )。该网络可以通过结合可见性增强任务和目标检测任务进行多任务学习训练,从而优于纯目标检测器。Hahner等[ 17 ]开发了一种雾模拟方法来增强现有的真实激光雷达数据集,并表明这种方法可以被用来改进当前的雾天目标检测方法。Qian等人[ 35 ]提出了一种MVDNet(多模态车辆检测网络),利用激光雷达和雷达信号来获取建议。然后将这两个传感器的区域特征融合在一起,得到最终的检测结果。Bijelic等人[ 2 ]开发了一个网络,将来自四个传感器的数据作为输入:激光雷达、RGB相机、门控相机和雷达。该架构使用熵引导的自适应深度融合得到融合特征图进行预测。这些方法通常依赖于其他传感器的输入数据而不是RGB相机本身,这对于许多自动驾驶汽车来说并不是一般情况。因此,本文旨在开发一种仅以RGB相机数据作为输入的目标检测架构。

2.3.Domain adaptation for object detection

Domain adaptation reduces the discrepancy between dif-ferent domains,thus allows the model trained on sourcedomain to be applicable on unlabeled target domain.Pre-vious domain adaptation works mainly focus on the taskof image classification[46–48,56],while more and moremethods have been proposed to solve domain adaptation forobject detection in recent years[5,15,24,39,49,50,55,58,60].Domain adaptive detectors could be obtained if the featuresfrom different domains are aligned[5,15,18,39,49,52].Fromthis perspective,Chen et al.[5]introduce a Domain AdaptiveFaster R-CNN framework to reduce domain gap from imagelevel and instance level,and the image-and-instance consis-tency is subsequently employed to improve cross-domainrobustness.He et al.[18]propose a MAF(multi-adversarialFaster R-CNN)framework to minimize the domain distri-bution disparity by aligning domain features and proposalfeatures hierarchically.On the other hand,some works tryto solve domain adaptation through image style transfermethods[21,24,41].Shan et al.[41]first convert imagesfrom source domain to target domain with image transla-tion module,then train the object detector with adversarialtraining on target domain.Hsu et al.[21]choose to translateimages progressively,and add a weighted task loss duringadversarial training stage for tackling the problem of imagequality difference.Many previous methods[4,27,38,62]design complex architectures.[62]used multi-scale back-bone Feature Pyramid Networks and considered pixel-leveland category-level adaptation.[27]used the complex GraphConvolution Network and graph matching algorithms.[38]used the similarity-based clustering and grouping.[4]usesthe uncertainty-guided self-training mechanism(Probabilis-tic Teacher and Focal Loss)to capture the uncertainty ofunlabeled target data from a gradually evolving teacher andguides student learning.Differently,our method does notbring extra learnable parameters to original Faster R-CNNmodel because our AdvGRL is based on adversarial training(gradient reversal)and Domain-level Metric Regularizationis based on triplet loss.Previous domain adaptation meth-ods usually treat training samples at the same challenginglevel,while we employ advGRL for adversarial hard ex-ample mining to improve transfer learning.Moreover,wegenerate an auxiliary domain and apply domain-level metricregularization to avoid overfitting.

2.3. 用于目标检测的领域自适应

域自适应减少了不同域之间的差异,从而允许在源域上训练的模型适用于未标记的目标域。以往的领域自适应工作主要集中在图像分类任务[ 46 ~ 48、56 ],而近年来提出了越来越多的方法来解决目标检测领域自适应问题[ 5、15、24、39、49、50、55、58、60]。如果来自不同域的特征对齐,则可以得到域自适应检测器[ 5、15、18、39、49、52]。从这个角度出发,Chen等[ 5 ]提出了一种域自适应Faster R - CNN框架,从图像级和实例级减少域差距,并利用图像和实例的一致性来提高跨域鲁棒性。He等[ 18 ]提出了MAF( multi-adversarial Faster R-CNN)框架,通过分层对齐领域特征和推荐特征来最小化领域分布差异。另一方面,一些工作尝试通过图像风格迁移方法[ 21、24、41]来解决域适应问题。Shan等[ 41 ]首先通过图像翻译模块将源域图像转换到目标域,然后在目标域上对目标检测器进行对抗训练。Hsu等[ 21 ]针对图像质量差异问题,选择渐进地翻译图像,并在对抗训练阶段添加加权任务损失。以前的很多方法[ 4、27、38、62]都设计了复杂的体系结构。[ 62 ]使用多尺度骨干特征金字塔网络,并考虑像素级和类别级的自适应。文献[ 27 ]使用了复杂的图卷积网络和图匹配算法。[ 38 ]使用了基于相似性的聚类和分组。[ 4 ]使用不确定性引导的自训练机制(概率性教师与焦点损失)来捕获来自一个逐渐发展的教师的未标记目标数据的不确定性并指导学生学习。不同的是,我们的方法不会给原始的Faster R - CNN模型带来额外的可学习参数,因为我们的AdvGRL是基于对抗训练(梯度反转)和基于三元组损失的领域级度量正则化。以往的领域自适应方法通常将训练样本放在相同的挑战性水平上,而我们使用advGRL进行对抗难例挖掘以改进迁移学习。此外,我们生成一个辅助域,并应用域级度量正则化来避免过拟合。

3.Proposed Method

In this section,we will first introduce the overall networkarchitecture,then describe the image-level and object-leveladaptation method,and finally,reveal the details of AdvGRLand domain-level metric regularization.

3 .提出方法

在这一部分,我们将首先介绍网络的整体架构,然后描述图像级和对象级的自适应方法,最后揭示AdvGRLand域级度量正则化的细节。

3.1.Network Architecture

As illustrated in Fig.2,our proposed model adopts thepipeline in Faster R-CNN for object detection.The Con-volutional Neural Network(CNN)backbone extracts theimage-level features from the RGB images and send them toRegion Proposal Network(RPN)to generate object propos-als.Afterwards,the ROI pooling accepts both image-levelfeatures and object proposals as the input to retrieve theobject-level features.Eventually,a detection head is appliedon the object-level features to produce the final predictions.Based on the Faster R-CNN framework,we integrate twomore components:image-level domain adaptation module,and object-level domain adaptation module.For both mod-ules,we deploy a new Adversarial Gradient Reversal Layer(AdvGRL)together with the domain classifier to extractdomain-invariant features and perform adversarial hard ex-ample mining.Moreover,we involve an auxiliary domain toimpose a new domain-level metric regularization to enforcethe feature metric distance between different domains.Allthree domains,i.e.,source,target,and auxiliary domains,will be employed simultaneously during the training.

3.1.网络架构

如图2所示,我们提出的模型采用Faster R - CNN中的流水线进行目标检测。卷积神经网络( Con-volutional Neural Network,CNN )主干从RGB图像中提取图像级特征,并将其发送给区域建议网络( Region提案网络,RPN )生成目标建议。之后,ROI池化同时接受图像级特征和目标提议作为输入来检索目标级特征。最后,在对象级特征上应用一个检测头来产生最终的预测。在Faster R - CNN框架的基础上,我们增加了两个组件:图像级域适应模块和对象级域适应模块。对于这两个模型,我们部署了一个新的对抗梯度逆转层( Adversarial Gradient Reversal Layer,AdvGRL )与领域分类器一起提取领域不变特征并进行对抗难例挖掘。此外,我们引入一个辅助域来施加一个新的域级度量正则化,以加强不同域之间的特征度量距离。在训练过程中,源域、目标域和辅助域这三个域将被同时使用。

3.2.Image-level Adaptation

The image-level domain representation is obtained fromthe backbone feature extraction and contains rich globalinformation such as style,scale and illumination,which canpotentially pose significant impacts on the detection task[5].Therefore,a domain classifier is introduced to classify thedomains of the upcoming image-level features to enhancethe image-level global alignment.The domain classifier isjust a simple CNN with two convolutional layers and it willoutput a prediction to identify the feature domain.We use the binary cross entropy loss for the domain classifier asfollows:

where i∈{1,...,N}represents the N training images,Gi∈{1,0}is the ground truth of the domain label in thei-th training image(1 and 0 stand for source and target do-mains respectively),and Pi is the prediction of the domainclassifier.

图像级域表示由主干特征提取得到,包含丰富的全局信息,如风格、尺度和光照等,对检测任务有重要影响[ 5 ]。因此,引入领域分类器对即将到来的图像级特征进行领域分类,以增强图像级全局对齐。该域分类器只是一个简单的CNN,具有两个卷积层,它将输出一个预测来识别特征域。我们使用域分类器的二进制交叉熵损失如下:

式中:i∈{ 1,..,N }表示N个训练图像,Gi∈{ 1,0 }为第i个训练图像( 1和0分别代表源域和目标域)中领域标签的真实值,Pi为领域分类器的预测。

3.3.Object-level Adaptation

Besides the image-level global difference in different ·domains,the objects in different domains might be alsodissimilar in the appearance,size,color,etc.In this paper,we define each region proposal after the ROI Pooling layer inFaster R-CNN as a potential object.Similar with image-leveladaptation module,after retrieving the object-level domainrepresentation by ROI pooling,we implement a object-leveldomain classifier to identify the feature derivation from localinformation.A well-trained object-level classifier,a neuralnetwork with 3 fully-connected layers,will help align theobject-level feature distribution.We also use the binary cross entropy loss for this domain classifier:

where j∈{1,...,M}is the j-th detected object(regionproposal)in the i-th image,Pi,j is the prediction of theobject-level domain classifier for the j-th region proposal inthe i-th image,and Gi,j is the corresponding binary ground-truth label for source and target domains respectively.

3.3 .对象级自适应

除了不同域中图像级的全局差异外,不同域中的对象在外观、大小、颜色等方面也可能不同。本文将Faster R - CNN中ROI Pooling层后的每个区域建议定义为潜在对象。与图像级自适应模块类似,在通过ROI池化检索出目标域表示后,我们实现了一个目标域分类器来识别局部信息中的特征派生。一个训练有素的对象级分类器,一个具有3个全连接层的神经网络,将有助于对齐对象级特征分布。我们还将二进制交叉熵损失用于这个域分类器:

该域分类器同样采用二值交叉熵损失:其中,j∈{ 1,..,M }为第i幅图像中第j个检测对象( regionproposal ),Pi,j为对象级域分类器对第i幅图像中第j个区域提议的预测,Gi,j分别为源域和目标域对应的二值真值标签。

3.4.Adversarial Gradient Reversal Layer

In this section,we first review the original Gradient Rever-sal Layer(GRL)[10],then we make a detailed description of the proposed Adversarial Gradient Reversal Layer(Adv-GRL)for our domain adaptive object detection framework.

在这一部分中,我们首先回顾了原始的梯度反向层( GRL ) [ 10 ],然后详细介绍了我们提出的域自适应目标检测框架Adv - GRL的 。

The original GRL is used for unsupervised domain adap-tation of the image classification task[10].Specifically,itleaves the input unchanged during forward propagation andreverses the gradient by multiplying it by a negative scalarwhen back-propagating to the base network ahead duringtraining.A domain classifier is trained to maximize theprobability of identifying the domain while the base networkahead is optimized to confuse the domain classifier.In thisway,the domain-invariant features are obtained to realizethe domain adaptation.The forward propagation of GRL isdefined as:

原始GRL用于图像分类任务的无监督域适应[ 10 ]。具体来说,它在前向传播过程中保持输入不变,在训练过程中反向传播到前方的基础网络时,将梯度乘以一个负的标量来反向传播。训练一个域分类器以最大化识别域的概率,同时优化前向基网络以混淆域分类器。通过这种方式,获得领域不变特征,实现领域自适应。GRL的前向传播定义为:

where v is an input feature vector,and Rλdenotes theforwarding function that GRL performs,and the back-propagation of GRL is defined as:

其中v是输入特征向量,R λ表示GRL执行的转发函数,GRL的反向传播定义为:

where I is an identity matrix and−λis a negative scalar.

式中:I为单位矩阵,- λ为负标量。

The original GRL sets either a constant or a changing−λbased on the training iterations[10].However,thissetting ignores an insight that different training samplesmight have different challenging levels during the transferlearning.Therefore,this paper proposes a novel AdvGRL toperform adversarial mining for the hard examples togetherwith the domain adaptation to further enhance the model’stransfer learning capabilities under challenging examples.This can be done by simply replacingλby a newλadvin Eq.(4)of GRL,which forms the proposed AdvGRL.Particularly,λadv is calculated as:

原始GRL根据训练迭代次数设置常数或变化的- λ [ 10 ]。然而,这种设定忽略了一个洞见,即不同的训练样本在迁移学习过程中可能具有不同的挑战性。因此,本文提出一种新颖的AdvGRL对困难样本进行对抗挖掘,并结合领域自适应,进一步增强模型在挑战样本下的迁移学习能力。这可以简单地用一个新的λ advin方程代替λ。( 4 )的GRL,构成了AdvGRL。特别地,λ adv的计算公式为:

where Lc is the loss of the domain classifier,αis a hardnessthreshold to judge whether the training sample is challenging,βis the overflow threshold to avoid generating excessivegradients in the back-propagation,andλ0=1 is set as afixed parameter in our experiment.In other words,if thedomain classifier’s loss Lc is smaller,the domain of the training sample can be more easily identified,whose featureis not the desired domain-invariant feature,so this kind oftraining sample is a harder example for domain adaptation.The relation ofλadv and Lc is shown in Fig.3.

其中Lc是域分类器的损失,α是判断训练样本是否具有挑战性的硬度阈值,β是防止反向传播过程中产生过度梯度的溢出阈值,λ0 = 1是实验中固定的参数。换句话说,如果域分类器的损失Lc越小, 训练样本的域就越容易被识别,其特征并不是期望的域不变特征,因此这类训练样本是域自适应的一个比较困难的例子。λ adv与Lc的关系如图3所示。

On summary,the proposed AdvGRL has two effects:1)AdvGRL could use negative gradients during back-propagation to confuse the domain classifier so as to generatedomain-invariant features;2)AdvGRL could perform ad-versarial mining for the hard examples to further enhancethe model generalization under challenging examples.Theproposed AdvGRL is applied to both image-level and object-level domain adaptation in our domain adaptive object de-tection framework,as shown in Fig.2.

综上所述,本文提出的AdvGRL有两个效果:1 ) AdvGRL可以利用反向传播过程中的负梯度混淆领域分类器,从而生成领域不变特征;2 ) AdvGRL能够对困难样本进行对抗挖掘,进一步增强模型在具有挑战性样本下的泛化能力。在我们的域自适应目标检测框架中,AdvGRL被应用于图像级和目标级的域自适应,如图2所示。

3.5.Domain-level Metric Regularization

Previous existing domain adaptation methods mainly fo-cus on the transfer learning from source domain S to targetdomain T,which neglects the potential benefits of the thirdrelated domain can bring.To address this and thus addition-ally involve the feature metric constraint between differentdomains,we introduce an auxiliary domain for a domain-level metric regularization during the transfer learning.

3.5.域级度量正则化

现有的域适应方法主要关注源域S到目标域T的迁移学习,忽略了第三个相关域能够带来的潜在好处。为了解决这个问题,从而额外涉及不同域之间的特征度量约束,我们在迁移学习过程中引入一个辅助域用于域级别的度量正则化。

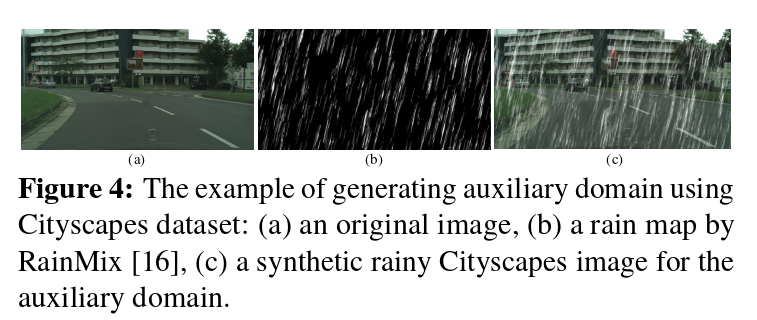

Based on the source domain S,we can apply some ad-vanced data augmentation methods to generate an auxiliarydomain A.For the autonomous driving scenario,the trainingdata in different weather conditions can be synthesized fromthe clear-weather data,then the three input images of our ar-chitecture(as shown in Fig.2)could be aligned images.Forexample,we generate an auxiliary domain with the advanceddata augmentation method RainMix[16,20].Specifically,we randomly sample a rain map from the public dataset ofreal rain streaks[11],then perform random transformationsusing the RainMix technique on the rain map,where theserandom transformations(i.e.,rotate,zoom,translate,shear)are sampled and combined.Finally,these transformed rainmaps can be blended with the source domain images,whichcan simulate the diverse rain patterns in the real world.Theexample of generating auxiliary domain is shown in Fig.4.Different with other methods including data augmentation tothe source/target domain,by generating an auxiliary domainwith data augmentation,the domain-level metric constraintbetween source,auxiliary,and target domains is ensured.

在源域S的基础上,我们可以应用一些先进的数据增强方法来生成辅助域A。对于自动驾驶场景,不同天气条件下的训练数据可以从晴朗天气的数据中合成,那么我们的架构(如图2所示)的三个输入图像可以是对齐图像。例如,我们使用改进的数据增强方法RainMix[ 16、20]生成一个辅助域。具体来说,我们从真实雨线的公共数据集中随机采样一个雨图[ 11 ],然后使用Rain Mix技术对雨图进行随机变换,其中这些随机变换(即旋转、缩放、平移、剪切)被采样和组合。最后,这些变换后的雨图可以与源域图像进行融合,模拟现实世界中多样化的雨型。生成辅助域的实例如图4所示。与其他包括数据增强到源/目标域的方法不同,通过数据增强生成辅助域,保证了源域、辅助域和目标域之间的域级度量约束。

Let us define the i-th training image’s global image-levelfeatures of S,A and T as FSi,FAi,and FTirespectively.Weexpect to ensure the feature metric distance between the FSiand FTicloser than the feature metric distance between FSiand FAiafter reducing the domain gap between S and T,which is defined as:

定义S、A和T的第i张训练图像的全局图像级特征分别为FSi、FAi和FTi。期望在缩小S和T之间的域间隙后,保证FSi和FTi之间的特征度量距离比FSi和FAi之间的特征度量距离更近,定义为:

where d(,)denotes the metric distance of the correspondingfeatures.This constraint can be implemented by a tripletstructure,where the FSi,FTi,FAican be treated as anchor,positive and negative in the triplet structure.Therefore,asthe domain-level metric regularization on image features,the above image-level constraint in Eq.(6)is equivalent tominimize the following image-level triplet loss:

式中:d(、。)表示对应特征的度量距离。这个约束可以通过一个三元组结构来实现,其中FSi,FTi,FAican在三元组结构中被处理为锚,正和负。因此,作为图像特征上的域级度量正则化,上式中的图像级约束。式( 6 )等价于最小化如下图像级三元组损失:

3.6.Loss Function

The final training loss of the proposed network is a sum-mation of each individual part,which can be written as:

3 . 6 .损失函数

所提网络的最终训练损失是每个单独部分的总和,可以写为:

where Lcls and Lreg are the loss of classification and theloss of regression in the original Faster R-CNN respectively,and w is a weight to balance the Faster R-CNN loss andthe domain adaptation loss for training.We set w=0.1in our experiments.In the training,the proposed domainadaptive object detection framework can be trained in anend-to-end manner using a standard Stochastic GradientDescent algorithm.During the testing,the original Faster R-CNN architecture with trained adapted weights can be usedfor object detection,after removing the domain adaptationcomponents.

其中Lcls和Lreg分别为原始Faster R - CNN中的分类损失和回归损失,w是平衡Faster R - CNN损失和域适应损失进行训练的权重。我们在实验中设定w = 0.1。在训练中,所提出的域自适应目标检测框架可以使用标准的随机梯度下降算法以端到端的方式进行训练。在测试过程中,移除领域自适应成分后,可以使用原Faster R - CNN架构训练得到的自适应权重进行目标检测。

3.7.General Domain Adaptive Object Detection

Our model has the capability to be adapted to generaldomain adaptive object detection.For the scenarios thatthe images from target domain are synthesized from thesource domain with pixel-to-pixel correspondence(e.g.,Cityscapes−→Foggy Cityscapes),our method can be di-rectly applied without modification.For the scenarios wheretarget and source domains do not have strict correspondence(e.g.,Cityscapes−→KITTI),our method can be applied bysimply removing the LRobjloss to eliminate the dependenceon the object alignment in the model training.

3 . 7 .通用域自适应目标检测

我们的模型具有适应通用域自适应目标检测的能力。对于目标域图像由源域合成且像素对应关系为(例如, Cityscapes-→Foggy Cityscapes)的场景,我们的方法可以直接应用,无需修改。对于目标域和源域不具有严格对应关系的场景( e.g. , Cityscapes-→KITTI),我们的方法可以通过简单地去除LRobjloss来消除模型训练中对目标对齐的依赖。

4.Experiments

4.1.Benchmark

Our experiments are based on the public object detectionbenchmarks Cityscapes[7]and Foggy Cityscapes[40]forautonomous driving.Cityscapes[7]is a widely used au-tonomous driving dataset,which is a collection of imageswith city street scenarios in clear weather conditions from27 cities.In Cityscapes dataset,there are 2,975 training im-ages and 500 validation images with instance segmentationannotations which can be transformed into bounding-box an-notations with 8 categories.All images are 3-channel RGBimages and captured by a car-mounted video camera withthe same resolution of 1024×2048.Foggy Cityscapes[40]is established by simulating the fog of different intensity lev-els on the Cityscapes images,which generates the simulatedthree levels of fog based on the depth map and a physicalmodel[40].Its image number,resolution,training/validationsplit,and annotations are same as those of Cityscapes.Fol-lowing the previous methods[5,15,49],the images with thefog of highest intensity level are utilized as the target domainfor transfer learning in our experiments.

4 .实验

4.1.基准

我们的实验基于自主驾驶的公共目标检测基准Cityscapes [ 7 ]和Foggy Cityscapes [ 40 ]。Cityscapes [ 7 ]是一个广泛使用的自动驾驶数据集,它是来自27个城市的在晴朗天气条件下具有城市街道场景的图像集合。在Cityscapes数据集中,有2,975张训练图像和500张验证图像带有实例分割标注,这些标注可以转化为8类边界框标注。所有图像均为3通道RGB图像,由分辨率为1024 × 2048的车载摄像机采集。通过在Cityscapes图像上模拟不同强度等级的雾,生成基于深度图和物理模型的模拟三级雾[ 40 ]。其图像数量、分辨率、训练/验证分割和注释与Cityscapes相同。沿用之前的方法[ 5、15、49],在我们的实验中使用雾气强度最高的图像作为目标域进行迁移学习。

4.2.Experimental Setting

Dataset setting:We set the labeled training set ofCityscapes[7]as source domain and the unlabeled train-ing set of Foggy Cityscapes[40]as target domain during thetraining.Then,the trained model is tested on the validationset of Foggy Cityscapes to report the evaluation result.Wedenote this setting as the Cityscapes−→Foggy Cityscapesexperiment in this paper.

4 . 2 .实验设置

数据集设置:训练时将Cityscapes [ 7 ]的有标签训练集作为源域,将Foggy Cityscapes [ 40 ]的无标签训练集作为目标域。然后,将训练好的模型在Foggy Cityscapes的验证集上进行测试,报告评估结果。本文将这一设定称为Cityscapes -→Foggy Cityscapes实验。

Training and parameter setting:In the experiments,weadopt ResNet-50 as the backbone for the Faster R-CNN[37]detection network,which is pre-trained on ImageNet.Duringtraining,following setting in[5,37],the back-propagationand stochastic gradient descent(SGD)are used to optimizeall the networks.The whole network is trained with aninitial learning rate 0.01 for 50k iterations and then reducedto 0.001 for another 20k iterations.For all experiments,aweight decay of 0.0005 and a momentum of 0.9 are used,and each batch includes an image of source domain,animage of target domain and an image of auxiliary domain.For comparison,theλin the original GRL(Eq.(4))is setas 1.The hardness thresholdαin the AdvGRL(Eq.(5))isset as 0.63 by averaging the values of Eq.(1)when Pi=0.7,Gi=1 and Pi=0.3,Gi=0.Our code is implementedwith PyTorch and Mask R-CNN Benchmark Toolbox[30],and all models are trained using a GeForce RTX3090 GPUcard with 24GB memory.

训练和参数设置:在实验中,我们采用ResNet - 50作为Faster R- CNN [ 37 ]检测网络的主干,该网络是在ImageNet上预训练的。在训练过程中,按照[ 5、37]中的设置,使用反向传播和随机梯度下降( SGD )来优化所有网络。整个网络以0.01的初始学习率进行50k次迭代训练,再减少到0.001进行20k次迭代。所有实验均采用0.0005的权重衰减和0.9的动量,每个批次包括源域图像、目标域图像和辅助域图像。为便于比较,将原始GRL ( Eq . ( 4 ) )设为1 . Adv GRL中的硬度阈值α (式( 1 ) )。( 5 ) )通过平均式( 5 )的值设为0.63。( 1 )当Pi = 0.7,Gi = 1和Pi = 0.3,Gi = 0时。我们的代码使用PyTorch和Mask R- CNN Benchmark Toolbox实现[ 30 ],所有模型都使用24GB内存的精视RTX3090 GPU卡进行训练。

Evaluation metrics and comparison methods:We set theIntersection over Union(IoU)threshold as 0.5 to computethe Average Precision(AP)of each category and mean Av-erage Precision(mAP)of all categories.Then we compareour proposed method with some recent domain adaptationcomparison methods in our experiments,such as SCDA[64],DM[24],MAF[18],MCAR[60],SWDA[39],PDA[21],RPN-PR[58],MTOR[3],DA-Faster[5],GPA[49],andUaDAN[15].

评价指标和比较方法:我们将Intersection over Union ( IoU )阈值设为0.5,计算每个类别的平均精度( Average Precision,AP )和所有类别的平均精度( Mean Average Precision,mAP )。在实验中,我们将我们提出的方法与最近的域适应比较方法进行了比较,如SCDA [ 64 ],DM [ 24 ],MAF [ 18 ],MCAR [ 60 ],SWDA [ 39 ],PDA [ 21 ],RPN-PR [ 58 ],MTOR [ 3 ],DA-Faster [ 5 ],GPA [ 49 ],UaDAN [ 15 ]。

4.3.Clear to Foggy Adaptation

The results of weather adaptation from clear weather tofoggy weather are represented in Table 1.Compared withother domain adaptation methods,we can see that our pro-posed method achieves the best detection performance with amAP of 42.3%,which is higher than the second best methodUaDAN[15]with a mAP improvement of 1.2%.For eachcategory,we can see that the proposed method is able to al-leviate the domain gap over most of the categories in FoggyCityscapes,e.g.,bus got 51.2%,bicycle got 39.1%,persongot 36.5%,rider got 46.7%,and train got 48.7%as the bestperformance in AP,which is highlight in Table 1.The pro-posed method can reach the 48.7%AP for the train detectionin Foggy Cityscapes,compared to the 45.4%AP by the sec-ond best method DA-Faster,where the proposed method is3.3%better than DA-Faster.While PDA got 54.4%in car,GPA got 32.4%in motorcycle,DA-faster got 30.9%in truckas the best performance in some categories,the proposedmethod is comparable across these three categories with aminor difference.Obviously,compared to these recent do-main adaptation methods,the proposed method achieves thebest performance in overall mAP performance and morethan half categories of Foggy Cityscapes.

4 . 3 .晴转雾适应

晴转雾天气适应结果如表1所示。与其他域适应方法相比,我们提出的方法取得了最好的检测性能,amAP为42.3 %,高于排名第二的方法UaDAN [ 15 ],mAP提高了1.2 %。对于每个类别,我们可以看到本文提出的方法能够缓解Foggy Cityscapes中大部分类别的领域差距,例如,公交占51.2 %,自行车占39.1 %,行人占36.5 %,骑车人占46.7 %,火车占48.7 %,这是表1中的亮点。本文方法对雾天城市场景下的列车检测可达到48.7 %的AP,而次优方法DA - Faster可达到45.4 %的AP,本文方法比DA - Faster提高了3.3 %。而PDA在轿车中获得了54.4 %,GPA在摩托车中获得了32.4 %,DA - faster在卡车中获得了30.9 %,在某些类别中表现最好,本文提出的方法在这3个类别中具有可比性,但存在一定的差异。显然,与这些最新的领域自适应方法相比,本文方法在总体m AP性能和超过一半的雾天城市景观类别上取得了最好的性能。

4.4.Cross-Camera Adaptation

To fully evaluate the proposed method,we conduct anexperiment to perform the cross-camera adaptation betweenreal-world autonomous driving datasets with different cam-era settings.To apply our method to the unaligned datasetsin the real-world,we simply remove LRobj(Eq.8)to applyour method from Cityscapes(source)to KITTI[12](target)datasets for cross-camera adaptation.Same as[5],we useKITTI training set(7,481 images of resolution 1250×375)as target domain in both adaptation and evaluation,and APof Car on target domain is evaluated.The result is in Table 3,where the proposed method achieved outstanding perfor-mance compared with recent SOTA methods.

4 . 4 .跨相机自适应

为了充分评估所提出的方法,我们进行了一个实验,在不同相机设置的真实自动驾驶数据集之间进行跨相机自适应。为了将我们的方法应用于现实世界中未对齐的数据集,我们简单地去除LRobj (式8 ),将我们的方法从Cityscapes (源)应用于KITTI [ 12 ] (目标)数据集,用于跨相机自适应。与文献[ 5 ]相同,我们使用KITTI训练集(分辨率为1250 × 375的影像7 481张)作为目标域进行适配和评估,并对Car在目标域上的AP进行评估。结果如表3所示,与最近的SOTA方法相比,所提出的方法取得了优异的性能。

4.5.Ablation Study on Components

The effect of each individual proposed component forthe domain adaptation detection method is investigatedin this section.All experiments are conducted with thesame RestNet-50 backbone on the Cityscapes−→FoggyCityscapes experiment.The results are presented in Ta-ble 2.In the first row,‘img’and‘obj’stand for the image-level adaptation module and object-level adaptation modulerespectively,while‘AdvGRL’and‘Reg’denote the pro-posed Adversarial Gradient Reversal Layer and domain-level metric Regularization respectively.‘img+obj+GRL’stands for the baseline model in our experiment.We denotethat‘img+obj+AdvGRL’(Ours w/o Auxiliary Domain)and‘img+obj+AdvGRL+Reg’use the AdvGRL to replace theoriginal GRL.The‘Source only’indicates the Faster R-CNNmodel without domain adaptation trained only with labeledsource domain images.The ablation study in Table 2 clearly justifies the positive effect of each proposed component ofthe domain adaptive object detection.

4 . 5 .成分消融研究

本节研究了每个单独提出的组件对于域适应检测方法的影响。所有实验都是在Cityscapes -→FoggyCityscapes实验上用相同的RestNet - 50主干进行的。结果见表2。在第一行中,' img '和' obj '分别表示图像级自适应模块和对象级自适应模块,' AdvGRL '和' Reg '分别表示提出的对抗梯度翻转层和域级度量正则化。' img + obj + GRL '表示实验中的基线模型。我们称' img + obj + AdvGRL ' (我们的w / o辅助域)和' img + obj + AdvGRL + Reg '使用AdvGRL替换原始GRL。"仅源域"表示仅使用已标记源域图像训练的无域自适应Faster R - CNN模型。表2中的消融研究清楚地证明了域自适应目标检测的每个建议组件的积极作用。

4.6.Ablation Study on Parameters

The study on different hyper-parameters of Eq.9 andEq.5 are conducted.We use the Cityscapes−→FoggyCityscapes as the study case.First,the loss balance weightw in Eq.9 is set as 0.1,0.01,0.001 separately for train-ing,and the corresponding detection mA P(s)are 42.34,41.30,41.19,respectively.Second,in the AdvGRL(Eq.5),the overflow thresholdβand hardness thresholdαare setas(1)β=30,α=0.63,(2)β=10,α=0.63,(3)β=30,α=0.54,and(4)β=10,α=0.54,whereα=0.54 is computed by averaging the values of Eq.1when Pi=0.9,Gi=1 and Pi=0.1,Gi=0.The detec-tion m AP(s)of these settings are(1)42.34,(2)38.83,(3)39.38,(4)40.47,respectively.

4.6 .消融参数研究

对式( 9 )和式( 5 )的不同超参数进行研究。我们以Cityscapes -→FoggyCityscapes为研究案例。首先将式( 9 )中的损失平衡权重w分别设为0.1,0.01,0.001进行训练,对应的检测mA P ( s )分别为42.34,41.30,41.19。其次,在AdvGRL (式( 5 ) )中,设定溢出阈值β和硬度阈值α分别为( 1 ) β = 30,α = 0.63,( 2 ) β = 10,α = 0.63,( 3 ) β = 30,α = 0.54,( 4 ) β = 10,α = 0.54,其中α = 0.54由式( 1 )在Pi = 0.9,Gi = 1和Pi = 0.1,Gi = 0时取平均值得到。这些设置的检测m AP ( s )分别为( 1 ) 42.34,( 2 ) 38.83,( 3 ) 39.38,( 4 ) 40.47。

4.7.Discussion on Visualized Hard Examples

Using λadv of the proposed AdvGRL,we could find somehard examples,as shown in Fig.5.We compute the L1distance of features FSiand FTiafter the CNN backboneof Fig.2 as the approximated hardness ah,where smallerah means harder for transfer learning.Intuitively,if the fogcovers more objects as shown in bounding-box regions ofFig.5,it will be more difficult.

4.7.可视化硬示例讨论

利用本文提出的AdvGRL的λ adv,可以发现一些硬示例,如图5所示。我们计算了特征FSi和FTi在图2的CNN主干之后的L1距离作为近似硬度ah,其中ah越小表示迁移学习越困难。直觉上,如果雾覆盖更多的对象,如图5中的边界框区域所示,则会更加困难。

4.8.Discussion on Domain Randomization,Pre-trained Models,and Qualitative Results

Domain Randomization:Domain randomization might beused to reduce the domain shift between source domain andtarget domain.We use two ways as the domain randomiza-tion in the Cityscapes−→Foggy Cityscapes experiment,i.e.,regular data argumentation and CycleGAN[63]based imagestyle transfer.1)We construct the auxiliary domain by regu-lar data argumentation(color change+blur+salt&peppernoises),where our method’s detection mAP is 38.7,com-pared to our 42.3 by the auxiliary domain with rain synthesis.2)We train a CycleGAN to transfer the image style betweenthe training sets of Cityscapes and Foggy Cityscapes.Usingthe generated fake foggy-style image of Cityscapes by thetrained CycleGAN model to train a Faster R-CNN model,itachieves detection mAP as 32.8.These experiments showthat commonly used domain randomization could not wellsolve the domain adaptation problem.

4.8.关于域随机化、预训练模型和定性结果的讨论

域随机化:域随机化可以用来减少源域和目标域之间的域转移。在Cityscapes -→Foggy Cityscapes实验中,我们采用了两种方式作为域随机化,即常规数据论证和基于CycleGAN [ 63 ]的图像风格迁移。1 )通过常规数据论证(颜色变化+模糊+椒盐噪声)构建辅助域,本文方法的检测m AP为38.7,而通过雨水合成辅助域构建的m AP为42.3。2 )训练一个CycleGAN来在Cityscapes和Foggy Cityscapes的训练集之间传递图像风格。利用训练好的CycleGAN模型生成的城市风貌伪雾图像训练Faster R - CNN模型,检测mAP为32.8。这些实验表明,常用的域随机化不能很好地解决域适应问题。

Pre-trained Models:We use the pre-trained Faster R-CNNmodel in[5]to initialize our method,then our methodgets the detection m AP as 41.3 in the Cityscapes−→FoggyCityscapes experiment,compared to 42.3 by our methodwithout pre-trained detection model.

预训练模型:我们使用文献[ 5 ]中预训练的Faster R - CNN模型来初始化我们的方法,然后我们的方法在Cityscapes -→FoggyCityscapes实验中得到检测m AP为41.3,而没有预训练检测模型的方法为42.3。

Qualitative Results:We visualize some detection results onthe Foggy Cityscapes dataset in Fig.6,which shows that theproposed domain adaptive method improves the detectionperformance in foggy weather significantly.

定性结果:我们在Foggy Cityscapes数据集上可视化了部分检测结果,如图6所示,表明本文提出的域自适应方法显著提高了雾天的检测性能。

5.Conclusions

In this paper,we propose a novel domain adaptive objectdetection framework for autonomous driving.The image-level and object-level adaptations are designed to reducethe domain shift on the global image style and local objectappearance.A new adversarial gradient reversal layer isproposed to perform adversarial mining for hard examplestogether with domain adaptation.Considering the featuremetric distance between the source domain,target domain,and auxiliary domain by data augmentation,we propose anew domain-level metric regularization.Furthermore,ourmethod could be applied to solve the general domain adap-tive object detection problem.We conduct the transfer learn-ing experiments from Cityscapes to Foggy Cityscapes andfrom Cityscapes to KITTI,and experimental results show that the proposed method is quite effective.

5 .结论

在本文中,我们提出了一种新的用于自动驾驶的域自适应目标检测框架。图像级和对象级自适应旨在减少域偏移对全局图像风格和局部对象外观的影响。提出了一种新的对抗梯度反转层,结合域适应对困难样本进行对抗挖掘。通过数据增强考虑源域、目标域和辅助域之间的特征度量距离,我们提出了一种新的域级度量正则化。此外,我们的方法可以应用于解决一般的域自适应目标检测问题。我们进行了从Cityscapes到Foggy Cityscapes和从Cityscapes到KITTI的迁移学习实验,实验结果表明本文提出的方法是非常有效的。