
[scikit-learn](https://scikit-learn.org/stable/index.html) is an open-source, commercially usable machine learning toolkit. It contains implementations of many of the algorithms you will use in this course.

Goal of this article:

Implement linear regression with gradient descent using scikit-learn.


1. Import libraries

import numpy as np

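# Set the print precision for array values

np.set_printoptions(precision=2)

# Import the linear models LinearRegression and SGDRegressor (gradient descent)

from sklearn.linear_model import LinearRegression, SGDRegressor

# Import the preprocessing module for StandardScaler (z-score normalization)

from sklearn.preprocessing import StandardScaler

# Import the dataset loader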

from lab_utils_multi import load_house_data

import matplotlib.pyplot as plt

dlblue = '#0096ff'; dlorange = '#FF9300'; dldarkred='#C00000'; dlmagenta='#FF40FF'; dlpurple='#7030A0';

plt.style.use('./deeplearning.mplstyle')

Scikit-learn provides a gradient descent regression model, [sklearn.linear_model.SGDRegressor](https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.SGDRegressor.html#examples-using-sklearn-linear-model-sgdregressor). Like the gradient descent you implemented earlier, this model performs best with normalized inputs. [sklearn.preprocessing.StandardScaler](https://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.StandardScaler.html#sklearn.preprocessing.StandardScaler) performs z-score normalization, which the scikit-learn documentation refers to as the "standard score".
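To make the z-score normalization concrete, here is a minimal NumPy sketch of the same computation done by hand. The feature matrix is made up for illustration and is not the course dataset; the sketch assumes the population standard deviation (`ddof=0`), which is the convention StandardScaler uses.

```python
import numpy as np

# Hypothetical feature matrix (rows = samples, columns = features); not the lab data
X = np.array([[952.0, 2.0], [1244.0, 3.0], [1947.0, 3.0], [1725.0, 4.0]])

mu = X.mean(axis=0)      # per-column mean
sigma = X.std(axis=0)    # per-column population standard deviation (ddof=0)
X_z = (X - mu) / sigma   # z-score: subtract the mean, divide by the std

# After normalization each column has mean ~0 and standard deviation ~1
print(X_z.mean(axis=0))
print(X_z.std(axis=0))
```

Each column ends up centered at zero with unit spread, which keeps features of very different magnitudes (square feet versus bedroom counts) on a comparable scale for gradient descent.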

2. Load the dataset

X_train, y_train = load_house_data()

X_features = ['size(sqft)','bedrooms','floors','age']

3. Scale/normalize the training data

scaler = StandardScaler()

X_norm = scaler.fit_transform(X_train)

print(f"Peak to Peak range by column in Raw X:{np.ptp(X_train,axis=0)}")

print(f"Peak to Peak range by column in Normalized X:{np.ptp(X_norm,axis=0)}")

# np.ptp(x, axis=0) returns the peak-to-peak range (maximum minus minimum) of each column
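As a quick illustration of what `np.ptp` computes, the following sketch applies it to a tiny made-up array and checks it against the explicit maximum-minus-minimum calculation. The array values are arbitrary.

```python
import numpy as np

# Small illustrative array: 3 samples, 2 features
x = np.array([[1, 10], [4, 30], [2, 20]])

# Peak-to-peak range per column: (4 - 1) and (30 - 10)
col_range = np.ptp(x, axis=0)

# Equivalent explicit computation
manual_range = x.max(axis=0) - x.min(axis=0)

print(col_range)
print(np.array_equal(col_range, manual_range))
```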


4. Create and fit the regression model

sgdr = SGDRegressor(max_iter=1000)

sgdr.fit(X_norm, y_train)

print(sgdr)

print(f"number of iterations completed: {sgdr.n_iter_}, number of weight updates: {sgdr.t_}")

5. Inspect the parameters

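Note that these parameters apply to the *normalized* input data. The fitted parameters are very close to those found for this data in the previous lab.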
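Because the parameters live in normalized feature space, they can be mapped back to the original units if needed. Substituting x_norm = (x - mu) / sigma into the model gives w_raw = w / sigma and b_raw = b - sum(w * mu / sigma). The sketch below checks this identity; the data and the parameter values are hypothetical and are not results from the lab.

```python
import numpy as np

# Hypothetical raw features (size in sqft, bedrooms); not the lab dataset
X_raw = np.array([[1000.0, 2.0], [1500.0, 3.0], [2000.0, 4.0], [1200.0, 2.0]])
mu = X_raw.mean(axis=0)
sigma = X_raw.std(axis=0)
X_scaled = (X_raw - mu) / sigma

# Hypothetical parameters expressed in normalized feature space
w_scaled = np.array([110.0, -20.0])
b_scaled = 360.0

# Map the parameters back to the original feature units
w_raw = w_scaled / sigma
b_raw = b_scaled - np.sum(w_scaled * mu / sigma)

# Both parameterizations give the same predictions
pred_scaled = X_scaled @ w_scaled + b_scaled
pred_raw = X_raw @ w_raw + b_raw
print(np.allclose(pred_scaled, pred_raw))
```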

b_norm = sgdr.intercept_

w_norm = sgdr.coef_

print(f"model parameters: w: {w_norm}, b:{b_norm}")

print(f"model parameters from previous lab: w: [110.56 -21.27 -32.71 -37.97], b: 363.16")

6. Make predictions

# make a prediction using sgdr.predict()

y_pred_sgd = sgdr.predict(X_norm)

# make a prediction using w,b.

y_pred = np.dot(X_norm, w_norm) + b_norm

print(f"prediction using np.dot() and sgdr.predict match: {(y_pred == y_pred_sgd).all()}")

print(f"Prediction on training set:\n{y_pred[:4]}" )

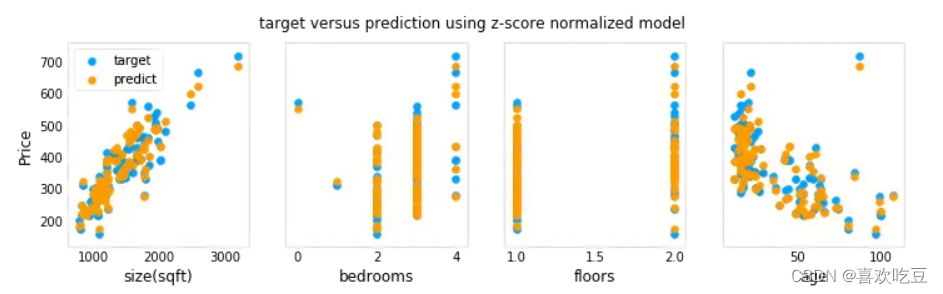

print(f"Target values \n{y_train[:4]}")

7. Visualization

# plot predictions and targets vs original features

fig,ax=plt.subplots(1,4,figsize=(12,3),sharey=True)

for i in range(len(ax)):

    ax[i].scatter(X_train[:,i],y_train, label = 'target')

    ax[i].set_xlabel(X_features[i])

    ax[i].scatter(X_train[:,i],y_pred,color=dlorange, label = 'predict')

ax[0].set_ylabel("Price"); ax[0].legend();

fig.suptitle("target versus prediction using z-score normalized model")

plt.show()
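Since `load_house_data` and the style file come from the course materials, the steps above can also be sketched end to end on synthetic data. This is a minimal sketch under stated assumptions: the data generator, the coefficients, and all names ending in `_demo` are made up for illustration, and `random_state=0` is set only to make the run repeatable.

```python
import numpy as np
from sklearn.linear_model import SGDRegressor
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Synthetic stand-in for the housing data (assumption: two features, linear target)
X_demo = np.column_stack([
    rng.uniform(800, 3000, size=200),   # size in sqft
    rng.integers(1, 6, size=200),       # number of bedrooms
]).astype(float)
y_demo = 0.2 * X_demo[:, 0] + 10.0 * X_demo[:, 1] + 50.0 + rng.normal(0, 5.0, size=200)

# Step 3: z-score normalize the training features
scaler_demo = StandardScaler()
X_demo_norm = scaler_demo.fit_transform(X_demo)

# Step 4: fit the gradient descent regressor on the normalized features
sgdr_demo = SGDRegressor(max_iter=1000, random_state=0)
sgdr_demo.fit(X_demo_norm, y_demo)

# Step 6: new samples must be transformed with the scaler fitted on the training data
x_new = np.array([[1200.0, 3.0]])
y_new = sgdr_demo.predict(scaler_demo.transform(x_new))

print(f"R^2 on training data: {sgdr_demo.score(X_demo_norm, y_demo):.3f}")
print(f"prediction for x_new: {y_new}")
```

The key design point is that `scaler_demo.transform` (not `fit_transform`) is applied to new inputs, so they are scaled with the training mean and standard deviation that the model's parameters were fitted against.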