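Hello everyone, I'm Hu Ge. I have been using the NVIDIA Jetson TX1 for quite a while now. Because this module is essentially discontinued, I was worried that many of the official demos would no longer run on it. So I spent some time digging further into getting the most out of its GPU and using its various hardware co-processors for acceleration. This weekend I started testing the C/C++ demos that ship with DeepStream, as a way to begin learning DeepStream. I will work through the five typical official examples in detail as an introduction. I'm sharing them with everyone, and they also serve as my own notes.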

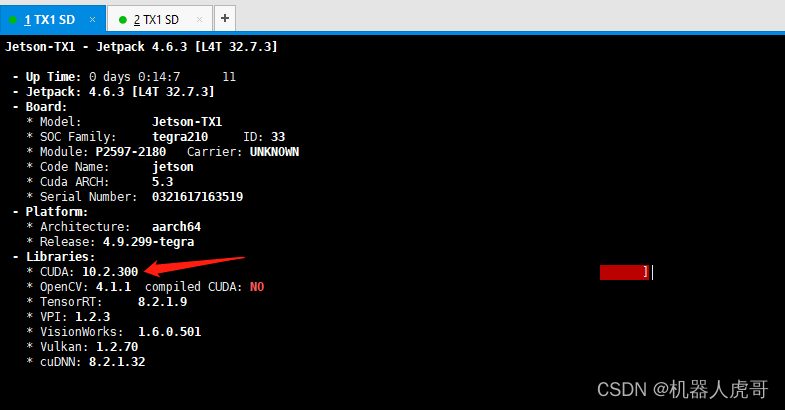

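First, my test environment. The hardware is a TX1 on an EHub_tx1_tx2_E100 carrier board. For details on the EHub_tx1_tx2_E100 carrier board, see my CSDN post "EdgeBox_EHub_tx1_tx2_E100 development board review" (Robot Hu Ge's blog). The system runs Ubuntu 18.04, with all of NVIDIA's accompanying CUDA packages and libraries installed.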


0. C/C++ Sample Apps Source Details

Official page: C/C++ Sample Apps Source Details (DeepStream 6.2 Release documentation)

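The DeepStream SDK includes an archive of plugins, libraries, applications, and source code. For Debian installations (on Jetson or dGPU) and SDK Manager installations, the sources directory is /opt/nvidia/deepstream/deepstream-6.2/sources. For tarball installations, the source files are in the extracted deepstream package. The DeepStream Python bindings and sample applications are provided as a separate package. For details, see GitHub - NVIDIA-AI-IOT/deepstream_python_apps: DeepStream SDK Python bindings and sample applications.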

DeepStream graphs created with Graph Composer are listed in the Reference graphs section. For details, see the Introduction to Graph Composer.

| Reference test application | Path inside sources directory | Description |
|---|---|---|
| Sample test application 1 | apps/sample_apps/deepstream-test1 | Sample of how to use DeepStream elements for a single H.264 stream: filesrc → decode → nvstreammux → nvinfer or nvinferserver (primary detector) → nvdsosd → renderer. This app uses resnet10.caffemodel for detection. |
| Sample test application 2 | apps/sample_apps/deepstream-test2 | Sample of how to use DeepStream elements for a single H.264 stream: filesrc → decode → nvstreammux → nvinfer or nvinferserver (primary detector) → nvtracker → nvinfer or nvinferserver (secondary classifier) → nvdsosd → renderer. This app uses resnet10.caffemodel for detection and 3 classifier models (i.e., Car Color, Make and Model). |
| Sample test application 3 | apps/sample_apps/deepstream-test3 | Builds on deepstream-test1 (simple test application 1) to demonstrate how to: use multiple sources in the pipeline; use a uridecodebin to accept any type of input (e.g. RTSP/File), any GStreamer supported container format, and any codec; configure Gst-nvstreammux to generate a batch of frames and infer on it for better resource utilization; extract the stream metadata, which contains useful information about the frames in the batched buffer. This app uses resnet10.caffemodel for detection. |
| Sample test application 4 | apps/sample_apps/deepstream-test4 | Builds on deepstream-test1 for a single H.264 stream: filesrc, decode, nvstreammux, nvinfer or nvinferserver, nvdsosd, renderer to demonstrate how to: use the Gst-nvmsgconv and Gst-nvmsgbroker plugins in the pipeline; create NVDS_META_EVENT_MSG type metadata and attach it to the buffer; use NVDS_META_EVENT_MSG for different types of objects, e.g. vehicle and person; implement “copy” and “free” functions for use if metadata is extended through the extMsg field. This app uses resnet10.caffemodel for detection. |
| Sample test application 5 | apps/sample_apps/deepstream-test5 | Builds on top of deepstream-app. Demonstrates: use of Gst-nvmsgconv and Gst-nvmsgbroker plugins in the pipeline for multistream; how to configure Gst-nvmsgbroker plugin from the config file as a sink plugin (for KAFKA, Azure, etc.); how to handle the RTCP sender reports from RTSP servers or cameras and translate the Gst Buffer PTS to a UTC timestamp. For more details refer to the RTCP Sender Report callback function test5_rtcp_sender_report_callback() registration and usage in deepstream_test5_app_main.c. GStreamer callback registration with rtpmanager element’s “handle-sync” signal is documented in apps-common/src/deepstream_source_bin.c. This app uses resnet10.caffemodel for detection. |

Next, we will work through these five sample programs systematically.

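- test1: DeepStream's Hello World. It shows how to build a GStreamer pipeline from several DeepStream plugins. The input is a video file, which is decoded, batched, and run through object detection, and the detection results are displayed on screen.
- test2: Builds on test1 by cascading a secondary network after the primary network. As the figure shows, an image classification stage follows the object detector.
- test3: Builds on test1 to show how to handle multiple sources, for example ingesting 4 video streams at once and running inference on all 4 simultaneously.
- test4: Builds on test1 to show how to use the message broker plugin to create IoT services.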

References

- DeepStream SDK developer guide: NVIDIA DeepStream SDK Developer Guide (DeepStream 6.2 Release documentation)
- DeepStream overview: https://docs.nvidia.com/metropolis/deepstream/dev-guide/text/DS_Overview.html
- DeepStream data structures (metadata): https://docs.nvidia.com/metropolis/deepstream/dev-guide/text/DS_plugin_metadata.html
- GStreamer study notes: https://www.cnblogs.com/phinecos/archive/2009/06/07/1498166.html
- Bilibili collection of DeepStream videos: "How to download DeepStream on a Jetson Nano" (Bilibili)

1. Sample test application 1

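DeepStream's Hello World. It shows how to build a GStreamer pipeline from several DeepStream plugins. The input is a video file, which is decoded, batched, and run through object detection, and the detection results are drawn on screen. The overall flow of test1 is as follows:

- The source element reads the video data from disk.
- The parser element parses it.
- The decoder element decodes it.
- The stream muxer element batches frames for optimal inference performance.
- The inference element runs accelerated inference.
- The converter element converts the data into a format the display output supports.
- The OSD (visualization) element draws bounding boxes, text, and other information onto the image.
- The renderer and sink elements output the result to the screen.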

This sample demonstrates how to use DeepStream elements on a single H.264 stream:

filesrc → decode → nvstreammux → nvinfer or nvinferserver (primary detector) → nvdsosd → renderer.

This application uses resnet10.caffemodel for detection. For background on Caffe model files (.caffemodel), please look them up yourself.

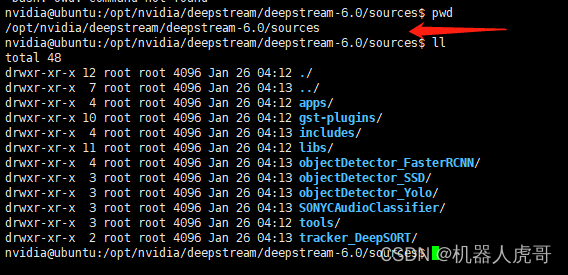
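For a quick experiment without compiling anything, the same element chain can in principle be written as a gst-launch-1.0 pipeline description. The C helper below is only a sketch that assembles such a string. The property values mirror the macros in deepstream_test1_app.c, and nvegltransform is included only for integrated (Jetson) GPUs, as in the sample. The function name test1_pipeline_desc is hypothetical. I have not verified this exact string on the TX1, so treat it as an assumption. Because the probe is absent, the "Person/Vehicle" text is not added, although nvdsosd should still draw the detector's boxes.

```c
#include <stdio.h>
#include <stddef.h>

/* Hypothetical helper (not part of the DeepStream sample): writes a
 * gst-launch-1.0 style description approximating test1's pipeline.
 * Returns the string length, or -1 if buf is too small. */
int test1_pipeline_desc(char *buf, size_t len, const char *h264_path,
                        const char *pgie_config, int integrated)
{
    int n = snprintf(buf, len,
                     "filesrc location=%s ! h264parse ! nvv4l2decoder ! m.sink_0 "
                     "nvstreammux name=m batch-size=1 width=1920 height=1080 "
                     "batched-push-timeout=40000 ! "
                     "nvinfer config-file-path=%s ! nvvideoconvert ! nvdsosd ! "
                     "%snveglglessink",
                     h264_path, pgie_config,
                     /* nvegltransform is only inserted on integrated GPUs */
                     integrated ? "nvegltransform ! " : "");
    if (n < 0 || (size_t)n >= len)
        return -1; /* encoding error or output truncated */
    return n;
}
```

On the TX1, the string produced for ("sample_720p.h264", "dstest1_pgie_config.txt", 1) could be pasted after gst-launch-1.0 from inside the deepstream-test1 directory, because the config path is relative. It could also be handed to gst_parse_launch() from C. Paths containing spaces would need quoting.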

1.1 Go to the directory and find the code:

cd /opt/nvidia/deepstream/deepstream-6.0/sources

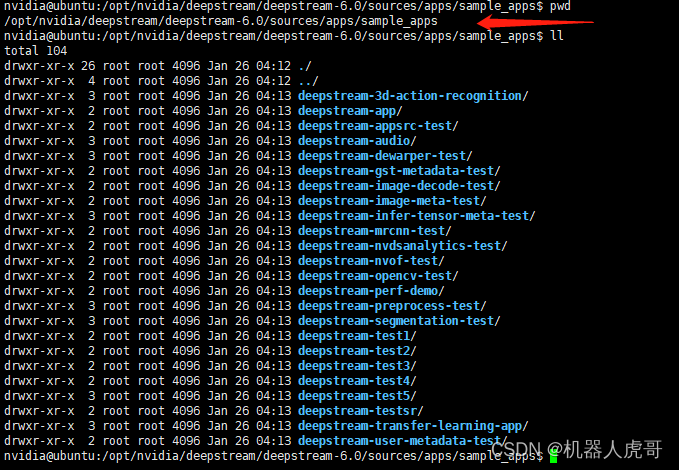

cd /opt/nvidia/deepstream/deepstream-6.0/sources/apps/sample_apps

Enter the deepstream-test1 directory.

Directory structure (output of ll in /opt/nvidia/deepstream/deepstream-6.0/sources/apps/sample_apps/deepstream-test1):

- deepstream_test1_app.c
- dstest1_pgie_config.txt
- Makefile
- README

1.2 按照指导编译文件

The README lists the build steps:

Compilation Steps:

$ Set CUDA_VER in the MakeFile as per platform.

For Jetson, CUDA_VER=10.2

For x86, CUDA_VER=11.4

$ sudo make

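Confirm the CUDA version on your system, for example with /usr/local/cuda/bin/nvcc --version. Mine is CUDA_VER=10.2.

Open the Makefile and set CUDA_VER to that version.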

Save, exit, and build:

sudo make

nvidia@ubuntu:/opt/nvidia/deepstream/deepstream-6.0/sources/apps/sample_apps/deepstream-test1$ sudo make

cc -c -o deepstream_test1_app.o -DPLATFORM_TEGRA -I../../../includes -I /usr/local/cuda-10.2/include -pthread -I/usr/include/gstreamer-1.0 -I/usr/include/glib-2.0 -I/usr/lib/aarch64-linux-gnu/glib-2.0/include deepstream_test1_app.c

cc -o deepstream-test1-app deepstream_test1_app.o -lgstreamer-1.0 -lgobject-2.0 -lglib-2.0 -L/usr/local/cuda-10.2/lib64/ -lcudart -L/opt/nvidia/deepstream/deepstream-6.0/lib/ -lnvdsgst_meta -lnvds_meta -lcuda -Wl,-rpath,/opt/nvidia/deepstream/deepstream-6.0/lib/

The build completes.

1.3 Run the test

The README explains how to run it:

To run:

$ ./deepstream-test1-app <h264_elementary_stream>

NOTE: To compile the sources, run make with "sudo" or root permission.

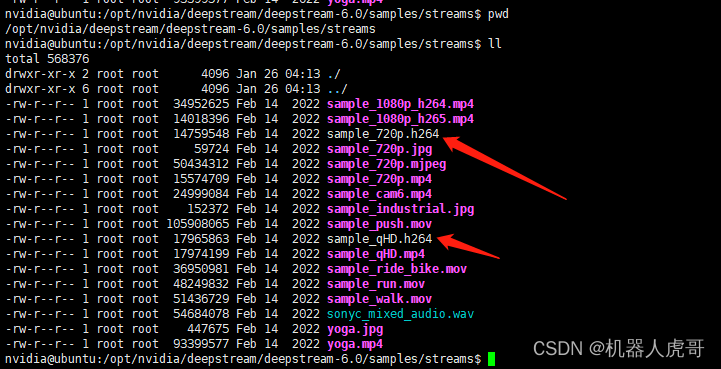

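The official samples include H.264 videos.

They are located in:

/opt/nvidia/deepstream/deepstream-6.0/samples/streams

Go back to the test directory:

cd /opt/nvidia/deepstream/deepstream-6.0/sources/apps/sample_apps/deepstream-test1

Run the test command from a NoMachine terminal. The sample renders to an on-screen window, so it needs a desktop session:

./deepstream-test1-app /opt/nvidia/deepstream/deepstream-6.0/samples/streams/sample_720p.h264

Note: after pressing Enter, it takes at least 15 seconds for the window to appear, so please be patient. I did not time it precisely, so it may take longer. Part of this delay is most likely nvinfer building a TensorRT engine from the Caffe model on the first run.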

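1.4 Try other video files to check the model in different scenes

After getting the official sample running, I wanted to switch to a different video scene and see how the provided inference model performs. That took a few detours. First, look at the earlier test command: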

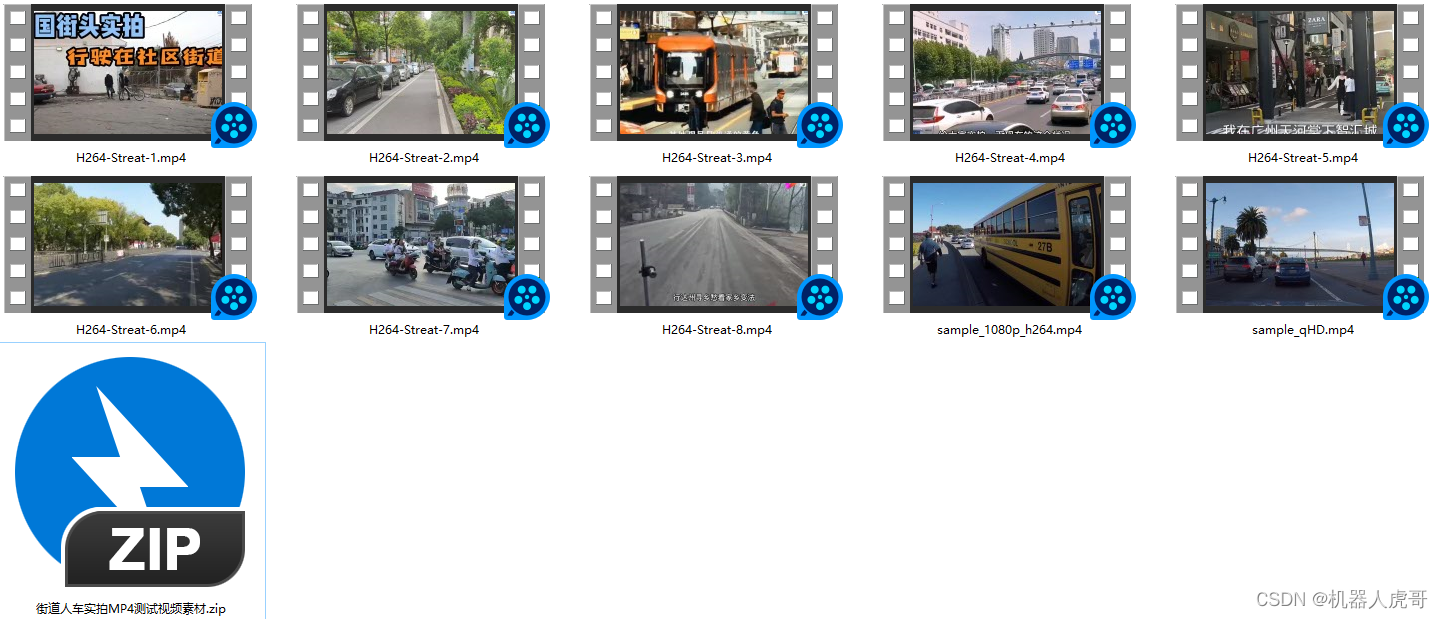

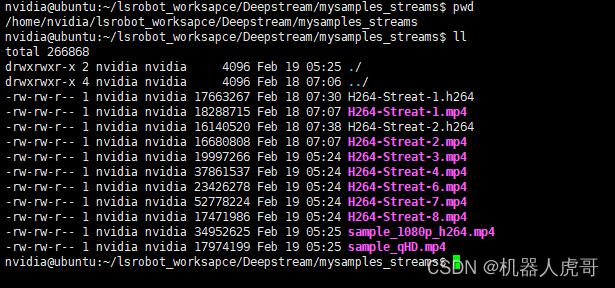

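./deepstream-test1-app /opt/nvidia/deepstream/deepstream-6.0/samples/streams/sample_720p.h264

The file name is sample_720p.h264. The .h264 extension puzzled me at first. I had spent some time with GStreamer before, though, so I guessed it was H.264-compressed video. I then searched online and collected a batch of real street-scene H.264 videos. I have uploaded these clips to my resources for direct download.

I opened one of them in the VLC player to check the codec: they are all H.264.

Copy these video files onto the EHub_tx1_tx2_E100.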

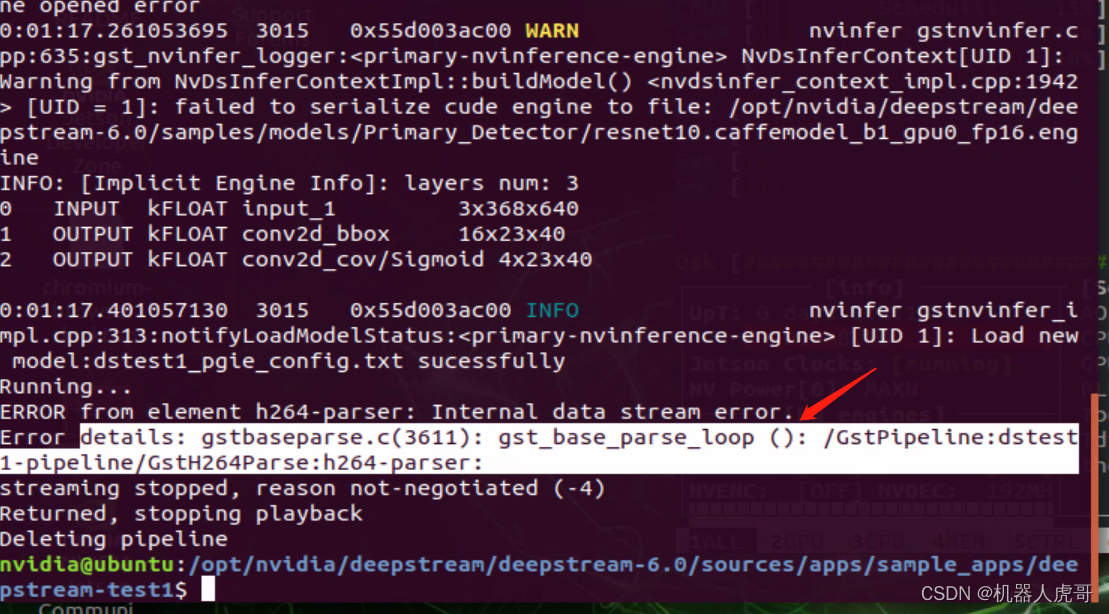

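First attempt, feeding an MP4 file in directly:

cd /opt/nvidia/deepstream/deepstream-6.0/sources/apps/sample_apps/deepstream-test1

./deepstream-test1-app /home/nvidia/lsrobot_worksapce/Deepstream/mysamples_streams/H264-Streat-1.mp4

After running it you have to wait quite a while (about 30 seconds, by my impression), so be patient.

It fails with this error:

ERROR from element h264-parser: Internal data stream error.

Error details: gstbaseparse.c(3611): gst_base_parse_loop (): /GstPipeline:dstest1-pipeline/GstH264Parse:h264-parser:

Recall the official test videos, i.e. the files in this directory:

/opt/nvidia/deepstream/deepstream-6.0/samples/streams

The same clips appear there under the same names with different extensions. After some guessing and checking, I concluded that the official .h264 files are pure H.264 elementary streams with nothing else mixed in. The MP4 files I downloaded, by contrast, are containers that also carry an audio stream. test1's filesrc hands the raw file bytes straight to h264parse, which expects an H.264 elementary stream, so it cannot make sense of MP4 container data. Once you know this, the fix is simple: process the downloaded video files first.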


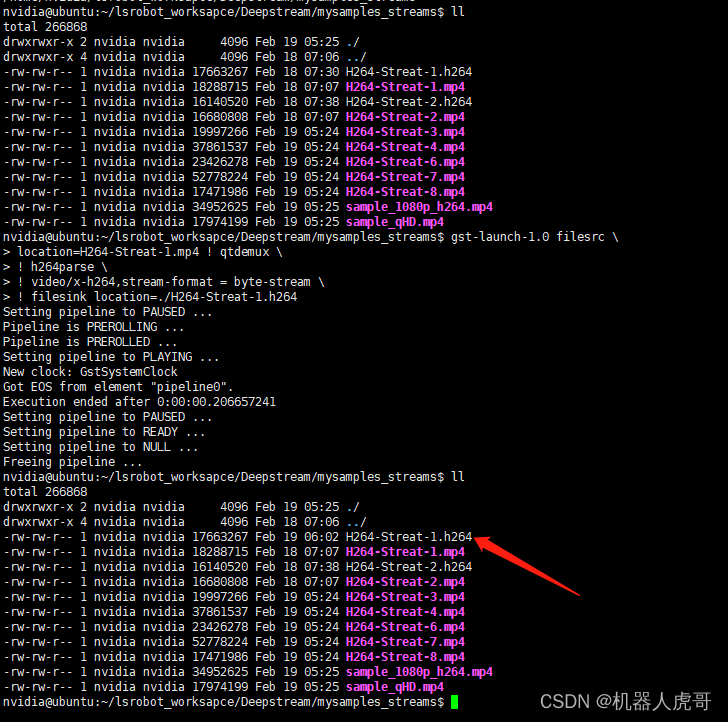

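Demux the MP4 file and save the H.264 video stream as a separate file

Not every .mp4 file contains an H.264 stream. MP4 is a container and can hold streams in other encodings, such as MPEG-4.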
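A quick way to tell the two kinds of file apart is to look at their first bytes. The sketch below assumes two common layouts. An Annex-B H.264 elementary stream starts with a 00 00 01 or 00 00 00 01 start code. An MP4 (ISO base media) file usually begins with an ftyp box, whose four-character type sits at byte offset 4. This is a heuristic, not a full parser, and classify_input is a hypothetical name.

```c
#include <stddef.h>
#include <string.h>

typedef enum { INPUT_UNKNOWN, INPUT_ANNEXB_H264, INPUT_MP4_CONTAINER } InputKind;

/* Hypothetical heuristic: classifies a file from its first bytes. */
InputKind classify_input(const unsigned char *head, size_t len)
{
    /* Annex-B byte stream: 3-byte start code 00 00 01 ... */
    if (len >= 3 && head[0] == 0 && head[1] == 0 && head[2] == 1)
        return INPUT_ANNEXB_H264;
    /* ... or the 4-byte form 00 00 00 01 */
    if (len >= 4 && head[0] == 0 && head[1] == 0 && head[2] == 0 && head[3] == 1)
        return INPUT_ANNEXB_H264;
    /* MP4: bytes 0..3 are the first box's size, bytes 4..7 its type ("ftyp") */
    if (len >= 8 && memcmp(head + 4, "ftyp", 4) == 0)
        return INPUT_MP4_CONTAINER;
    return INPUT_UNKNOWN;
}
```

For example, read the first 16 bytes of a file with fread() and pass them in. I would expect sample_720p.h264 to come back as INPUT_ANNEXB_H264 and the downloaded .mp4 files as INPUT_MP4_CONTAINER.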

Demux and display:

# matroskademux demuxes Matroska (.mkv) files; point location at an .mkv file, since it cannot parse MP4

gst-launch-1.0 filesrc \
location=H264-Streat-1.mp4 ! matroskademux \
! h264parse ! avdec_h264 \
! videoconvert ! ximagesink

# qtdemux demuxes MP4

gst-launch-1.0 filesrc \
location=H264-Streat-1.mp4 ! qtdemux \
! h264parse ! avdec_h264 \
! videoconvert ! ximagesink

Demux and save to a file:

# No display needed; this can run directly from an XSHELL (SSH) terminal

gst-launch-1.0 filesrc \
location=H264-Streat-1.mp4 ! qtdemux \
! h264parse \
! video/x-h264,stream-format=byte-stream \
! filesink location=./H264-Streat-1.h264

# Convert the second file

gst-launch-1.0 filesrc \
location=H264-Streat-2.mp4 ! qtdemux \
! h264parse \
! video/x-h264,stream-format=byte-stream \
! filesink location=./H264-Streat-2.h264

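This gives us standalone H.264 byte-stream files demuxed from the MP4s. MP4 stores H.264 in the length-prefixed avc format. The video/x-h264,stream-format=byte-stream caps filter makes h264parse convert it into the Annex-B byte stream that test1 expects. With H264-Streat-1.h264 in hand, let's test again: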

cd /opt/nvidia/deepstream/deepstream-6.0/sources/apps/sample_apps/deepstream-test1

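./deepstream-test1-app /home/nvidia/lsrobot_worksapce/Deepstream/mysamples_streams/H264-Streat-1.h264

Again, wait patiently for the result.

It produces results, which confirms the conversion was right. I'm sharing this little trick in the hope that it helps you enrich your own experiments. I have also converted all the corresponding files into H.264 byte-stream files and uploaded them to my resources, where they are free to download.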
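Before pointing test1 at a converted file, you could also sanity-check that it really is a byte stream. The sketch below uses a hypothetical helper, count_annexb_nals. It scans a buffer for 00 00 01 start codes, which also matches the tail of the 4-byte 00 00 00 01 form. For each start code it reads nal_unit_type from the low five bits of the following byte; type 7 is an SPS. H.264's emulation-prevention bytes keep 00 00 01 from appearing inside NAL payloads, so the count is meaningful for a real Annex-B stream. An MP4 file, which stores length-prefixed NAL units, should yield few or no matches.

```c
#include <stddef.h>

/* Hypothetical helper: counts NAL units in an Annex-B buffer and, optionally,
 * how many of them are SPS (nal_unit_type 7). */
size_t count_annexb_nals(const unsigned char *buf, size_t len, size_t *sps_count)
{
    size_t nals = 0, sps = 0;
    size_t i = 0;
    while (i + 3 < len) {              /* need a start code plus one header byte */
        if (buf[i] == 0x00 && buf[i + 1] == 0x00 && buf[i + 2] == 0x01) {
            unsigned nal_type = buf[i + 3] & 0x1F; /* low 5 bits of the NAL header */
            nals++;
            if (nal_type == 7)
                sps++;
            i += 3;                    /* continue after the start code */
        } else {
            i++;
        }
    }
    if (sps_count != NULL)
        *sps_count = sps;
    return nals;
}
```

In practice you would read a chunk of H264-Streat-1.h264 with fread() and check that the count is non-zero and that at least one SPS appears near the start.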


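1.5 Sample code walkthrough

The following is mainly taken from "[Nvidia DeepStream. 001] The DeepStream-test1 sample explained line by line: it turns out to be this simple" (Pugongying Cloud). I only copied it and tidied up the formatting.

1) Original code:

The test1 sample consists of a single source file, deepstream_test1_app.c, located in /opt/nvidia/deepstream/deepstream-6.0/sources/apps/sample_apps/deepstream-test1.

Full listing of deepstream_test1_app.c from /opt/nvidia/deepstream/deepstream-6.0/sources/apps/sample_apps/deepstream-test1: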

#include <gst/gst.h>

#include <glib.h>

#include <stdio.h>

#include <cuda_runtime_api.h>

#include "gstnvdsmeta.h"

#define MAX_DISPLAY_LEN 64

#define PGIE_CLASS_ID_VEHICLE 0

#define PGIE_CLASS_ID_PERSON 2

/* The muxer output resolution must be set if the input streams will be of

* different resolution. The muxer will scale all the input frames to this

* resolution. */

#define MUXER_OUTPUT_WIDTH 1920

#define MUXER_OUTPUT_HEIGHT 1080

/* Muxer batch formation timeout, for e.g. 40 millisec. Should ideally be set

* based on the fastest source's framerate. */

#define MUXER_BATCH_TIMEOUT_USEC 40000

gint frame_number = 0;

gchar pgie_classes_str[4][32] = { "Vehicle", "TwoWheeler", "Person",

"Roadsign"

};

static GstPadProbeReturn

osd_sink_pad_buffer_probe (GstPad * pad, GstPadProbeInfo * info,

gpointer u_data)

{

GstBuffer *buf = (GstBuffer *) info->data;

guint num_rects = 0;

NvDsObjectMeta *obj_meta = NULL;

guint vehicle_count = 0;

guint person_count = 0;

NvDsMetaList * l_frame = NULL;

NvDsMetaList * l_obj = NULL;

NvDsDisplayMeta *display_meta = NULL;

NvDsBatchMeta *batch_meta = gst_buffer_get_nvds_batch_meta (buf);

for (l_frame = batch_meta->frame_meta_list; l_frame != NULL;

l_frame = l_frame->next) {

NvDsFrameMeta *frame_meta = (NvDsFrameMeta *) (l_frame->data);

int offset = 0;

for (l_obj = frame_meta->obj_meta_list; l_obj != NULL;

l_obj = l_obj->next) {

obj_meta = (NvDsObjectMeta *) (l_obj->data);

if (obj_meta->class_id == PGIE_CLASS_ID_VEHICLE) {

vehicle_count++;

num_rects++;

}

if (obj_meta->class_id == PGIE_CLASS_ID_PERSON) {

person_count++;

num_rects++;

}

}

display_meta = nvds_acquire_display_meta_from_pool(batch_meta);

NvOSD_TextParams *txt_params = &display_meta->text_params[0];

display_meta->num_labels = 1;

txt_params->display_text = g_malloc0 (MAX_DISPLAY_LEN);

offset = snprintf(txt_params->display_text, MAX_DISPLAY_LEN, "Person = %d ", person_count);

offset += snprintf(txt_params->display_text + offset, MAX_DISPLAY_LEN - offset, "Vehicle = %d ", vehicle_count);

/* Now set the offsets where the string should appear */

txt_params->x_offset = 10;

txt_params->y_offset = 12;

/* Font , font-color and font-size */

txt_params->font_params.font_name = "Serif";

txt_params->font_params.font_size = 10;

txt_params->font_params.font_color.red = 1.0;

txt_params->font_params.font_color.green = 1.0;

txt_params->font_params.font_color.blue = 1.0;

txt_params->font_params.font_color.alpha = 1.0;

/* Text background color */

txt_params->set_bg_clr = 1;

txt_params->text_bg_clr.red = 0.0;

txt_params->text_bg_clr.green = 0.0;

txt_params->text_bg_clr.blue = 0.0;

txt_params->text_bg_clr.alpha = 1.0;

nvds_add_display_meta_to_frame(frame_meta, display_meta);

}

g_print ("Frame Number = %d Number of objects = %d "

"Vehicle Count = %d Person Count = %d\n",

frame_number, num_rects, vehicle_count, person_count);

frame_number++;

return GST_PAD_PROBE_OK;

}

static gboolean

bus_call (GstBus * bus, GstMessage * msg, gpointer data)

{

GMainLoop *loop = (GMainLoop *) data;

switch (GST_MESSAGE_TYPE (msg)) {

case GST_MESSAGE_EOS:

g_print ("End of stream\n");

g_main_loop_quit (loop);

break;

case GST_MESSAGE_ERROR:{

gchar *debug;

GError *error;

gst_message_parse_error (msg, &error, &debug);

g_printerr ("ERROR from element %s: %s\n",

GST_OBJECT_NAME (msg->src), error->message);

if (debug)

g_printerr ("Error details: %s\n", debug);

g_free (debug);

g_error_free (error);

g_main_loop_quit (loop);

break;

}

default:

break;

}

return TRUE;

}

int

main (int argc, char *argv[])

{

GMainLoop *loop = NULL;

GstElement *pipeline = NULL, *source = NULL, *h264parser = NULL,

*decoder = NULL, *streammux = NULL, *sink = NULL, *pgie = NULL, *nvvidconv = NULL,

*nvosd = NULL;

GstElement *transform = NULL;

GstBus *bus = NULL;

guint bus_watch_id;

GstPad *osd_sink_pad = NULL;

int current_device = -1;

cudaGetDevice(&current_device);

struct cudaDeviceProp prop;

cudaGetDeviceProperties(&prop, current_device);

/* Check input arguments */

if (argc != 2) {

g_printerr ("Usage: %s <H264 filename>\n", argv[0]);

return -1;

}

/* Standard GStreamer initialization */

gst_init (&argc, &argv);

loop = g_main_loop_new (NULL, FALSE);

/* Create gstreamer elements */

/* Create Pipeline element that will form a connection of other elements */

pipeline = gst_pipeline_new ("dstest1-pipeline");

/* Source element for reading from the file */

source = gst_element_factory_make ("filesrc", "file-source");

/* Since the data format in the input file is elementary h264 stream,

* we need a h264parser */

h264parser = gst_element_factory_make ("h264parse", "h264-parser");

/* Use nvdec_h264 for hardware accelerated decode on GPU */

decoder = gst_element_factory_make ("nvv4l2decoder", "nvv4l2-decoder");

/* Create nvstreammux instance to form batches from one or more sources. */

streammux = gst_element_factory_make ("nvstreammux", "stream-muxer");

if (!pipeline || !streammux) {

g_printerr ("One element could not be created. Exiting.\n");

return -1;

}

/* Use nvinfer to run inferencing on decoder's output,

* behaviour of inferencing is set through config file */

pgie = gst_element_factory_make ("nvinfer", "primary-nvinference-engine");

/* Use convertor to convert from NV12 to RGBA as required by nvosd */

nvvidconv = gst_element_factory_make ("nvvideoconvert", "nvvideo-converter");

/* Create OSD to draw on the converted RGBA buffer */

nvosd = gst_element_factory_make ("nvdsosd", "nv-onscreendisplay");

/* Finally render the osd output */

if(prop.integrated) {

transform = gst_element_factory_make ("nvegltransform", "nvegl-transform");

}

sink = gst_element_factory_make ("nveglglessink", "nvvideo-renderer");

if (!source || !h264parser || !decoder || !pgie

|| !nvvidconv || !nvosd || !sink) {

g_printerr ("One element could not be created. Exiting.\n");

return -1;

}

if(!transform && prop.integrated) {

g_printerr ("One tegra element could not be created. Exiting.\n");

return -1;

}

/* we set the input filename to the source element */

g_object_set (G_OBJECT (source), "location", argv[1], NULL);

g_object_set (G_OBJECT (streammux), "batch-size", 1, NULL);

g_object_set (G_OBJECT (streammux), "width", MUXER_OUTPUT_WIDTH, "height",

MUXER_OUTPUT_HEIGHT,

"batched-push-timeout", MUXER_BATCH_TIMEOUT_USEC, NULL);

/* Set all the necessary properties of the nvinfer element,

* the necessary ones are : */

g_object_set (G_OBJECT (pgie),

"config-file-path", "dstest1_pgie_config.txt", NULL);

/* we add a message handler */

bus = gst_pipeline_get_bus (GST_PIPELINE (pipeline));

bus_watch_id = gst_bus_add_watch (bus, bus_call, loop);

gst_object_unref (bus);

/* Set up the pipeline */

/* we add all elements into the pipeline */

if(prop.integrated) {

gst_bin_add_many (GST_BIN (pipeline),

source, h264parser, decoder, streammux, pgie,

nvvidconv, nvosd, transform, sink, NULL);

}

else {

gst_bin_add_many (GST_BIN (pipeline),

source, h264parser, decoder, streammux, pgie,

nvvidconv, nvosd, sink, NULL);

}

GstPad *sinkpad, *srcpad;

gchar pad_name_sink[16] = "sink_0";

gchar pad_name_src[16] = "src";

sinkpad = gst_element_get_request_pad (streammux, pad_name_sink);

if (!sinkpad) {

g_printerr ("Streammux request sink pad failed. Exiting.\n");

return -1;

}

srcpad = gst_element_get_static_pad (decoder, pad_name_src);

if (!srcpad) {

g_printerr ("Decoder request src pad failed. Exiting.\n");

return -1;

}

if (gst_pad_link (srcpad, sinkpad) != GST_PAD_LINK_OK) {

g_printerr ("Failed to link decoder to stream muxer. Exiting.\n");

return -1;

}

gst_object_unref (sinkpad);

gst_object_unref (srcpad);

/* we link the elements together */

/* file-source -> h264-parser -> nvh264-decoder ->

* nvinfer -> nvvidconv -> nvosd -> video-renderer */

if (!gst_element_link_many (source, h264parser, decoder, NULL)) {

g_printerr ("Elements could not be linked: 1. Exiting.\n");

return -1;

}

if(prop.integrated) {

if (!gst_element_link_many (streammux, pgie,

nvvidconv, nvosd, transform, sink, NULL)) {

g_printerr ("Elements could not be linked: 2. Exiting.\n");

return -1;

}

}

else {

if (!gst_element_link_many (streammux, pgie,

nvvidconv, nvosd, sink, NULL)) {

g_printerr ("Elements could not be linked: 2. Exiting.\n");

return -1;

}

}

/* Lets add probe to get informed of the meta data generated, we add probe to

* the sink pad of the osd element, since by that time, the buffer would have

* had got all the metadata. */

osd_sink_pad = gst_element_get_static_pad (nvosd, "sink");

if (!osd_sink_pad)

g_print ("Unable to get sink pad\n");

else

gst_pad_add_probe (osd_sink_pad, GST_PAD_PROBE_TYPE_BUFFER,

osd_sink_pad_buffer_probe, NULL, NULL);

gst_object_unref (osd_sink_pad);

/* Set the pipeline to "playing" state */

g_print ("Now playing: %s\n", argv[1]);

gst_element_set_state (pipeline, GST_STATE_PLAYING);

/* Wait till pipeline encounters an error or EOS */

g_print ("Running...\n");

g_main_loop_run (loop);

/* Out of the main loop, clean up nicely */

g_print ("Returned, stopping playback\n");

gst_element_set_state (pipeline, GST_STATE_NULL);

g_print ("Deleting pipeline\n");

gst_object_unref (GST_OBJECT (pipeline));

g_source_remove (bus_watch_id);

g_main_loop_unref (loop);

return 0;

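}

2) main function

First, all the variables the function needs are declared. Every GStreamer element derives from the same base class, GstElement, so all elements can be declared as GstElement pointers. A GstBus variable is declared as well; the bus carries messages from the pipeline to the application. Note that the snippets in this walkthrough come from an older version of the sample. That version selects the Tegra-only nvegltransform element at compile time with the PLATFORM_TEGRA macro (the -DPLATFORM_TEGRA flag in the build output above). The 6.0 listing above makes the same choice at runtime by checking prop.integrated.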

GMainLoop *loop = NULL;

// All GStreamer elements share the same base class, GstElement

GstElement *pipeline = NULL, *source = NULL, *h264parser = NULL,

*decoder = NULL, *streammux = NULL, *sink = NULL, *pgie = NULL, *nvvidconv = NULL,

*nvosd = NULL;

// On a TEGRA platform, also declare the transform variable

#ifdef PLATFORM_TEGRA

GstElement *transform = NULL;

#endif

// Message bus variables

GstBus *bus = NULL;

guint bus_watch_id;

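GstPad *osd_sink_pad = NULL;

Next, main calls gst_init() to initialize GStreamer and pass the command-line arguments on to the GStreamer library.

// gst_init() performs the required initialization

gst_init (&argc, &argv);

In GStreamer, a pipeline is the container that holds and manages elements. The following creates a pipeline named dstest1-pipeline:

// A pipeline holds and manages elements

pipeline = gst_pipeline_new ("dstest1-pipeline");

Create all the elements the pipeline needs, then check that each one was created successfully.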

// Create a GStreamer element of type filesrc named file-source.

source = gst_element_factory_make ("filesrc", "file-source");

// Create a GStreamer element of type h264parse named h264-parser.

// The input file holds an elementary H.264 stream, so we need an H.264 parser

h264parser = gst_element_factory_make ("h264parse", "h264-parser");

// Create a GStreamer element of type nvv4l2decoder named nvv4l2-decoder.

// Decode the H.264 stream with hardware acceleration

decoder = gst_element_factory_make ("nvv4l2decoder", "nvv4l2-decoder");

// Create a GStreamer element of type nvstreammux named stream-muxer.

// Forms batches from one or more sources

streammux = gst_element_factory_make ("nvstreammux", "stream-muxer");

// Report an error if the pipeline or the stream muxer could not be created

if (!pipeline || !streammux) {

g_printerr ("One element could not be created. Exiting.\n");

return -1;

}

// Create a GStreamer element of type nvinfer named primary-nvinference-engine.

// nvinfer runs inference on the decoder's output; the inference settings come from the config file

pgie = gst_element_factory_make ("nvinfer", "primary-nvinference-engine");

// Create a GStreamer element of type nvvideoconvert named nvvideo-converter.

// The converter plugin converts NV12 into the RGBA format that nvosd requires

nvvidconv = gst_element_factory_make ("nvvideoconvert", "nvvideo-converter");

// Create a GStreamer element of type nvdsosd named nv-onscreendisplay.

// The OSD draws on the converted RGBA buffer

nvosd = gst_element_factory_make ("nvdsosd", "nv-onscreendisplay");

// On a TEGRA platform, create the transform element needed to render the OSD output.

// This macro is defined in the Makefile.

#ifdef PLATFORM_TEGRA

transform = gst_element_factory_make ("nvegltransform", "nvegl-transform");

#endif

// Sink (renderer) element

sink = gst_element_factory_make ("nveglglessink", "nvvideo-renderer");

// Make sure every element was created

if (!source || !h264parser || !decoder || !pgie

|| !nvvidconv || !nvosd || !sink) {

g_printerr ("One element could not be created. Exiting.\n");

return -1;

}

#ifdef PLATFORM_TEGRA

if(!transform) {

g_printerr ("One tegra element could not be created. Exiting.\n");

return -1;

}

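#endif

The source element reads the video data from a file on disk. It has a property named location that specifies where the file is on disk. Element properties are set through the standard GObject property mechanism:

// Set the source element's location property to argv[1], the local video path given on the command line

g_object_set (G_OBJECT (source), "location", argv[1], NULL);

Likewise, set the properties of the stream muxer element.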

// Set streammux's batch-size property to 1, because there is only one source

g_object_set (G_OBJECT (streammux), "batch-size", 1, NULL);

// 为streammux 元件中的width、height、batched-push-timeout属性赋值

g_object_set (G_OBJECT (streammux), "width", MUXER_OUTPUT_WIDTH, "height",

MUXER_OUTPUT_HEIGHT,

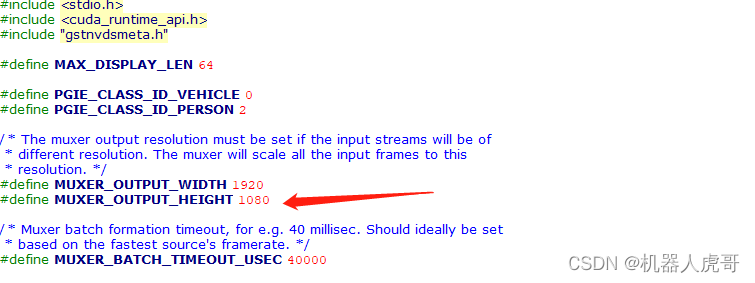

"batched-push-timeout", MUXER_BATCH_TIMEOUT_USEC, NULL);相应的宏定义自文件开始位置:
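The comment in the listing says the batch-formation timeout should ideally be based on the fastest source's frame rate. The sample's 40000 microseconds corresponds to one frame interval at 25 fps. Below is a minimal sketch of that rule of thumb, assuming one frame interval of the fastest source is what you want. batch_timeout_usec is a hypothetical helper, not a DeepStream API.

```c
#include <stddef.h>

/* Hypothetical helper: suggests a batched-push-timeout (microseconds) equal to
 * one frame interval of the fastest source. Returns -1 if no positive fps. */
long batch_timeout_usec(const double *fps, size_t n)
{
    double max_fps = 0.0;
    for (size_t i = 0; i < n; i++)   /* find the fastest source */
        if (fps[i] > max_fps)
            max_fps = fps[i];
    if (max_fps <= 0.0)
        return -1;
    return (long)(1000000.0 / max_fps); /* truncated to whole microseconds */
}
```

With a single 25 fps source this reproduces the sample's 40000. Adding a 30 fps camera drops it to 33333, which would then be passed to the batched-push-timeout property in place of MUXER_BATCH_TIMEOUT_USEC.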

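Likewise, set the inference element's config-file-path property. The inference settings can be changed in the dstest1_pgie_config.txt configuration file.

// Set nvinfer's config-file-path property; all of nvinfer's necessary properties are then set through the config file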

g_object_set (G_OBJECT (pgie),

"config-file-path", "dstest1_pgie_config.txt", NULL);

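Get the pipeline's message bus.

bus = gst_pipeline_get_bus (GST_PIPELINE (pipeline));

Add a bus watch. bus_call is the message handler; it is described in section 4) below.

bus_watch_id = gst_bus_add_watch (bus, bus_call, loop);

gst_object_unref (bus);

At this point the pipeline and all elements have been created and configured. Next, all of the created elements need to be added to the pipeline.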

// Add all elements to the pipeline; the PLATFORM_TEGRA macro decides whether transform is included

#ifdef PLATFORM_TEGRA

gst_bin_add_many (GST_BIN (pipeline),

source, h264parser, decoder, streammux, pgie,

nvvidconv, nvosd, transform, sink, NULL);

#else

gst_bin_add_many (GST_BIN (pipeline),

source, h264parser, decoder, streammux, pgie,

nvvidconv, nvosd, sink, NULL);

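#endif

Now the elements need to be connected through pads. A pad is an element's input/output interface and comes in two kinds: src pads (producers) and sink pads (consumers). Once elements are linked through their pads, data flows from the upstream element's src pad to the downstream element's sink pad for processing. nvstreammux's sink pads are request pads (sink_0, sink_1, ...), created on demand with gst_element_get_request_pad(). The decoder's src pad is a static pad, obtained with gst_element_get_static_pad().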
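nvstreammux exposes its inputs through the request-pad template sink_%u, which is why the sample asks for "sink_0". With several sources, as in deepstream-test3, each decoder needs its own pad name. Here is a small sketch of building those names; mux_sink_pad_name is a hypothetical helper.

```c
#include <stdio.h>
#include <stddef.h>

/* Hypothetical helper: writes the nvstreammux request-pad name for source
 * `index` ("sink_0", "sink_1", ...). Returns 1 on success, 0 if out is too
 * small to hold the whole name. */
int mux_sink_pad_name(char *out, size_t out_len, unsigned index)
{
    int n = snprintf(out, out_len, "sink_%u", index);
    return n >= 0 && (size_t)n < out_len;
}
```

Each name would then be passed to gst_element_get_request_pad (streammux, name) in a loop over the sources. A 16-byte buffer, like pad_name_sink in the sample, is plenty.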

GstPad *sinkpad, *srcpad;

gchar pad_name_sink[16] = "sink_0";

gchar pad_name_src[16] = "src";

sinkpad = gst_element_get_request_pad (streammux, pad_name_sink); // consumer (sink) pad

if (!sinkpad) {

g_printerr ("Streammux request sink pad failed. Exiting.\n");

return -1;

}

srcpad = gst_element_get_static_pad (decoder, pad_name_src); // producer (src) pad

if (!srcpad) {

g_printerr ("Decoder request src pad failed. Exiting.\n");

return -1;

}

// Link the elements in order: decoder -> streammux

if (gst_pad_link (srcpad, sinkpad) != GST_PAD_LINK_OK) {

g_printerr ("Failed to link decoder to stream muxer. Exiting.\n");

return -1;

}

gst_object_unref (sinkpad);

gst_object_unref (srcpad);

// Link the elements in order: source -> h264parser -> decoder

if (!gst_element_link_many (source, h264parser, decoder, NULL)) {

g_printerr ("Elements could not be linked: 1. Exiting.\n");

return -1;

}

// Link the elements in order: streammux -> pgie -> nvvidconv -> nvosd -> (transform on TEGRA) -> sink

#ifdef PLATFORM_TEGRA

if (!gst_element_link_many (streammux, pgie,

nvvidconv, nvosd, transform, sink, NULL)) {

g_printerr ("Elements could not be linked: 2. Exiting.\n");

return -1;

}

#else

if (!gst_element_link_many (streammux, pgie,

nvvidconv, nvosd, sink, NULL)) {

g_printerr ("Elements could not be linked: 2. Exiting.\n");

return -1;

}

#endif

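// filesrc → decode → nvstreammux → nvinfer or nvinferserver (primary detector) → nvdsosd → renderer.

Next, add a probe to get at the generated metadata. The probe is attached to the sink pad of the OSD element, because by the time a buffer arrives there it already carries all of the metadata. osd_sink_pad_buffer_probe is covered below. For now it is enough to know that it reads all of the metadata and, based on it, adds the on-screen text and prints per-frame statistics.

osd_sink_pad = gst_element_get_static_pad (nvosd, "sink"); // the OSD's sink (consumer) pad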

if (!osd_sink_pad)

g_print ("Unable to get sink pad\n");

else

gst_pad_add_probe (osd_sink_pad, GST_PAD_PROBE_TYPE_BUFFER,

osd_sink_pad_buffer_probe, NULL, NULL); // attach the probe callback

gst_object_unref (osd_sink_pad);

With all the preparation done, switch the pipeline to the PLAYING state to start processing data:

g_print ("Now playing: %s\n", argv[1]);

gst_element_set_state (pipeline, GST_STATE_PLAYING);

Enter the main loop and wait until the pipeline hits an error or EOS:

g_print ("Running...\n");

g_main_loop_run (loop);

After the loop exits, stop the pipeline and release resources:

g_print ("Returned, stopping playback\n");

gst_element_set_state (pipeline, GST_STATE_NULL);

g_print ("Deleting pipeline\n");

gst_object_unref (GST_OBJECT (pipeline));

g_source_remove (bus_watch_id);

g_main_loop_unref (loop);

3) osd_sink_pad_buffer_probe function

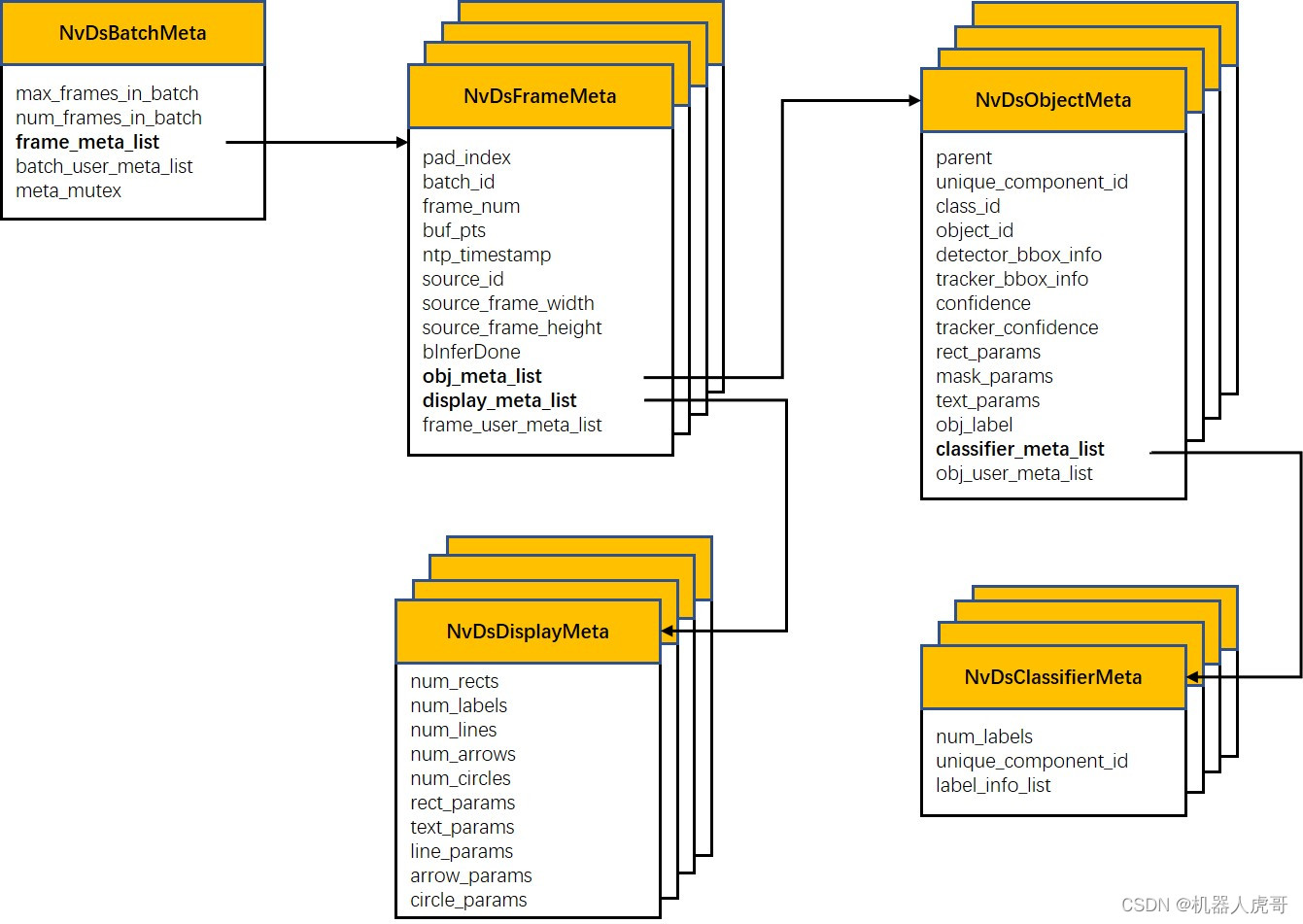

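This function extracts the metadata that arrives at the OSD sink pad and updates the parameters for drawing rectangles, object information, and so on. To follow it you need to understand DeepStream's metadata structures; see the official documentation (DeepStream data structures in the references). While reading the docs, however, I found that their data-structure diagram is somewhat outdated and does not fully match the source code. So I redrew it myself, as shown in the figure.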
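To make the probe's nested loops easier to follow, here is a self-contained model of the same traversal. The structs are deliberately simplified stand-ins, not DeepStream's headers. In DeepStream, NvDsMetaList is a GList, NvDsBatchMeta holds frame_meta_list, and each NvDsFrameMeta holds an obj_meta_list of NvDsObjectMeta entries with a class_id. As in the sample, the counters accumulate over the whole batch; this equals per-frame counts when batch-size is 1. count_batch_objects is a hypothetical name.

```c
#include <stddef.h>

/* Simplified stand-ins for DeepStream metadata (NOT the real headers). */
typedef struct MetaList { void *data; struct MetaList *next; } MetaList;
typedef struct { int class_id; } ObjMeta;
typedef struct { MetaList *obj_meta_list; } FrameMeta;
typedef struct { MetaList *frame_meta_list; } BatchMeta;

/* Walks batch -> frames -> objects like osd_sink_pad_buffer_probe and counts
 * two classes over the whole batch. Returns the number of counted objects. */
unsigned count_batch_objects(const BatchMeta *batch, int vehicle_id, int person_id,
                             unsigned *vehicles, unsigned *persons)
{
    unsigned v = 0, p = 0;
    for (const MetaList *lf = batch->frame_meta_list; lf != NULL; lf = lf->next) {
        const FrameMeta *frame = (const FrameMeta *) lf->data;
        for (const MetaList *lo = frame->obj_meta_list; lo != NULL; lo = lo->next) {
            const ObjMeta *obj = (const ObjMeta *) lo->data;
            if (obj->class_id == vehicle_id)
                v++;
            else if (obj->class_id == person_id)
                p++;                   /* other classes are ignored, as in the sample */
        }
    }
    *vehicles = v;
    *persons = p;
    return v + p;
}
```

Calling it with vehicle_id 0 and person_id 2 mirrors PGIE_CLASS_ID_VEHICLE and PGIE_CLASS_ID_PERSON in the sample.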

static GstPadProbeReturn

osd_sink_pad_buffer_probe (GstPad * pad, GstPadProbeInfo * info,

gpointer u_data)

{

GstBuffer *buf = (GstBuffer *) info->data;

guint num_rects = 0;

NvDsObjectMeta *obj_meta = NULL; // object-detection metadata

guint vehicle_count = 0; // number of vehicles

guint person_count = 0; // number of persons

NvDsMetaList * l_frame = NULL;

NvDsMetaList * l_obj = NULL;

NvDsDisplayMeta *display_meta = NULL; // display metadata

NvDsBatchMeta *batch_meta = gst_buffer_get_nvds_batch_meta (buf); // gst_buffer_get_nvds_batch_meta() extracts the NvDsBatchMeta from the GstBuffer

// Iterate over batch_meta's frame_meta_list; the list has num_frames_in_batch entries

for (l_frame = batch_meta->frame_meta_list; l_frame != NULL;

l_frame = l_frame->next) {

NvDsFrameMeta *frame_meta = (NvDsFrameMeta *) (l_frame->data);

int offset = 0;

// Iterate over this frame's obj_meta_list

for (l_obj = frame_meta->obj_meta_list; l_obj != NULL;

l_obj = l_obj->next) {

obj_meta = (NvDsObjectMeta *) (l_obj->data);

// If the box's class is 0 (the vehicle class label), increment the vehicle count and the box count.

if (obj_meta->class_id == PGIE_CLASS_ID_VEHICLE) {

vehicle_count++;

num_rects++;

}

// If the box's class is 2 (the person class label), increment the person count and the box count.

if (obj_meta->class_id == PGIE_CLASS_ID_PERSON) {

person_count++;

num_rects++;

}

}

display_meta = nvds_acquire_display_meta_from_pool(batch_meta);

// Set up display_meta's text_params

NvOSD_TextParams *txt_params = &display_meta->text_params[0];

display_meta->num_labels = 1;

txt_params->display_text = g_malloc0 (MAX_DISPLAY_LEN);

offset = snprintf(txt_params->display_text, MAX_DISPLAY_LEN, "Person = %d ", person_count);

offset += snprintf(txt_params->display_text + offset, MAX_DISPLAY_LEN - offset, "Vehicle = %d ", vehicle_count);

// Set the text's x, y position (offsets from the frame's origin)

txt_params->x_offset = 10;

txt_params->y_offset = 12;

// Set the font name, font size, and font color

txt_params->font_params.font_name = "Serif";

txt_params->font_params.font_size = 10;

txt_params->font_params.font_color.red = 1.0;

txt_params->font_params.font_color.green = 1.0;

txt_params->font_params.font_color.blue = 1.0;

txt_params->font_params.font_color.alpha = 1.0;

// Set the text background color

txt_params->set_bg_clr = 1;

txt_params->text_bg_clr.red = 0.0;

txt_params->text_bg_clr.green = 0.0;

txt_params->text_bg_clr.blue = 0.0;

txt_params->text_bg_clr.alpha = 1.0;

// Attach display_meta to frame_meta

nvds_add_display_meta_to_frame(frame_meta, display_meta);

}

g_print ("Frame Number = %d Number of objects = %d "

"Vehicle Count = %d Person Count = %d\n",

frame_number, num_rects, vehicle_count, person_count);

frame_number++;

return GST_PAD_PROBE_OK;

}
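One detail in the probe is worth pointing out. In the upstream NVIDIA sample, the second snprintf() call writes at display_text + offset but still passes the full MAX_DISPLAY_LEN. That promises more space than is actually left. The strings are short enough that the 64-byte buffer does not overflow in practice, but the safe pattern is to pass the remaining size. Here is a sketch of that pattern in plain C; format_counts is a hypothetical helper.

```c
#include <stdio.h>
#include <stddef.h>

/* Hypothetical helper: writes "Person = N Vehicle = M " into buf without
 * overrunning it. Returns the characters written (excluding the NUL),
 * or -1 if the text had to be truncated. */
int format_counts(char *buf, size_t len, unsigned persons, unsigned vehicles)
{
    if (len == 0)
        return -1;
    int offset = snprintf(buf, len, "Person = %u ", persons);
    if (offset < 0 || (size_t)offset >= len)
        return -1;                       /* first part already truncated */
    /* Pass only the space that is left after the first part. */
    int n = snprintf(buf + offset, len - (size_t)offset, "Vehicle = %u ", vehicles);
    if (n < 0 || (size_t)n >= len - (size_t)offset)
        return -1;                       /* second part truncated */
    return offset + n;
}
```

With a 64-byte buffer the full text fits. With a 16-byte buffer the output is cut off cleanly and NUL-terminated instead of running past the end.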

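4) bus_call function

bus_call is a message handler that watches the messages posted on the bus. I won't go into it in detail here. There is probably no need to dig deeper for now, and it can be studied later when needed.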

static gboolean

bus_call (GstBus * bus, GstMessage * msg, gpointer data)

{

GMainLoop *loop = (GMainLoop *) data;

switch (GST_MESSAGE_TYPE (msg)) {

case GST_MESSAGE_EOS:

g_print ("End of stream\n");

g_main_loop_quit (loop);

break;

case GST_MESSAGE_ERROR:{

gchar *debug;

GError *error;

gst_message_parse_error (msg, &error, &debug);

g_printerr ("ERROR from element %s: %s\n",

GST_OBJECT_NAME (msg->src), error->message);

if (debug)

g_printerr ("Error details: %s\n", debug);

g_free (debug);

g_error_free (error);

g_main_loop_quit (loop);

break;

}

default:

break;

}

return TRUE;

}

That's all I have to share today. For corrections, questions, or discussion: [email protected]