Series index

lab1 link: lab1

lab2 link: lab2

lab3 link: lab3

lab4 link: lab4

lab5 link: lab5

lab6 link: lab6


Preface

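Recently, after getting home from work, I have been reading 《MySQL是怎样运行的》 ("How MySQL Runs"). As the saying goes, though, what you learn from books alone always feels shallow. So one weekend I got an early start on a lab I had long wanted to do: 6.830, the database course.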

1. 6.830/Lab1 Start

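The course uses Java, so I used IntelliJ IDEA as my IDE for the labs. It makes breakpoint debugging and similar tasks convenient.

Lab 1 is essentially about implementing the core modules that a simple database uses to access data stored on disk.

In the lab assignments in 6.5830/6.5831 you will write a basic database management system called SimpleDB. For this lab, you will focus on implementing the core modules required to access stored data on disk;

Concretely, the exercises mainly ask you to implement Fields, Tuple, TupleDesc, Catalog, BufferPool, HeapFile and related concepts. Each is explained further below.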

2. Exercise

2.1 Exercise 1: Fields and Tuples

Tuples in SimpleDB are quite basic. They consist of a collection of Field objects, one per field in the Tuple. Field is an interface that different data types (e.g., integer, string) implement. Tuple objects are created by the underlying access methods (e.g., heap files, or B-trees), as described in the next section. Tuples also have a type (or schema), called a tuple descriptor, represented by a TupleDesc object. This object consists of a collection of Type objects, one per field in the tuple, each of which describes the type of the corresponding field.

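Put simply, a Tuple can be thought of as one record (row) in a table. Its Fields are the values of that record's individual columns. The TupleDesc is the schema that describes the type (and name) of each field.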
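The following minimal, self-contained sketch makes the relationship concrete. `MiniType`, `MiniTupleDesc` and `MiniTuple` are hypothetical stand-ins, not SimpleDB's classes. The byte widths (4 for an int, 132 for a string) are assumptions chosen for illustration. The schema fixes the column types, so every row of that schema has the same byte size. A row prints as tab-separated values, which is the format the lab's `Tuple.toString()` asks for.

```java
import java.util.StringJoiner;

// Hypothetical, simplified stand-ins for SimpleDB's Type / TupleDesc / Tuple.
enum MiniType {
    INT(4),        // assumed width: 4-byte integer
    STRING(132);   // assumed width: fixed-size string slot

    final int len;
    MiniType(int len) { this.len = len; }
}

// Schema: one (type, name) pair per column
final class MiniTupleDesc {
    final MiniType[] types;
    final String[] names;

    MiniTupleDesc(MiniType[] types, String[] names) {
        this.types = types;
        this.names = names;
    }

    // Every tuple of this schema has the same fixed size in bytes
    int getSize() {
        int size = 0;
        for (MiniType t : types) size += t.len;
        return size;
    }
}

// Row: one value per column, described by a schema
final class MiniTuple {
    final MiniTupleDesc td;
    final Object[] fields;

    MiniTuple(MiniTupleDesc td) {
        this.td = td;
        this.fields = new Object[td.types.length]; // unset fields stay null
    }

    void setField(int i, Object v) { fields[i] = v; }

    // Tab-separated values: column1\tcolumn2\t...\tcolumnN
    @Override
    public String toString() {
        StringJoiner sj = new StringJoiner("\t");
        for (Object f : fields) sj.add(String.valueOf(f));
        return sj.toString();
    }
}

class MiniTupleDemo {
    public static void main(String[] args) {
        MiniTupleDesc td = new MiniTupleDesc(
                new MiniType[]{MiniType.INT, MiniType.STRING},
                new String[]{"id", "name"});
        MiniTuple row = new MiniTuple(td);
        row.setField(0, 1);
        row.setField(1, "alice");
        System.out.println(td.getSize()); // 136
        System.out.println(row);          // 1<TAB>alice
    }
}
```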

- Tuple Class:

```java
import java.io.Serializable;
import java.util.Iterator;
import java.util.Objects;
import java.util.StringJoiner;
import java.util.concurrent.CopyOnWriteArrayList;

/**
 * Tuple maintains information about the contents of a tuple. Tuples have a
 * specified schema specified by a TupleDesc object and contain Field objects
 * with the data for each field.
 */
public class Tuple implements Serializable {

    private static final long serialVersionUID = 1L;

    /** The schema of this tuple */
    TupleDesc td;

    /** The location of this tuple on disk (may be null) */
    RecordId rid;

    /** All field values of this record, one slot per schema field */
    CopyOnWriteArrayList<Field> fields;

    /**
     * Create a new tuple with the specified schema (type).
     *
     * @param td the schema of this tuple. It must be a valid TupleDesc
     *           instance with at least one field.
     */
    public Tuple(TupleDesc td) {
        this.td = td;
        // Pre-size to the schema width (all null) so setField(i, f) works for any valid i, in any order
        this.fields = new CopyOnWriteArrayList<>(new Field[td.numFields()]);
    }

    /**
     * @return The TupleDesc representing the schema of this tuple.
     */
    public TupleDesc getTupleDesc() {
        return td;
    }

    /**
     * @return The RecordId representing the location of this tuple on disk. May
     *         be null.
     */
    public RecordId getRecordId() {
        return rid;
    }

    /**
     * Set the RecordId information for this tuple.
     *
     * @param rid the new RecordId for this tuple.
     */
    public void setRecordId(RecordId rid) {
        this.rid = rid;
    }

    /**
     * Change the value of the ith field of this tuple.
     *
     * @param i index of the field to change. It must be a valid index.
     * @param f new value for the field.
     */
    public void setField(int i, Field f) {
        if (i < 0 || i >= fields.size()) {
            throw new IndexOutOfBoundsException("invalid field index: " + i);
        }
        fields.set(i, f);
    }

    /**
     * @param i field index to return. Must be a valid index.
     * @return the value of the ith field, or null if it has not been set.
     */
    public Field getField(int i) {
        if (i < 0 || i >= fields.size()) {
            return null;
        }
        return fields.get(i);
    }

    /**
     * Returns the contents of this Tuple as a string. Note that to pass the
     * system tests, the format needs to be as follows:
     * <p>
     * column1\tcolumn2\tcolumn3\t...\tcolumnN
     * <p>
     * where \t is any whitespace (except a newline)
     */
    public String toString() {
        StringJoiner joiner = new StringJoiner("\t");
        for (Field field : fields) {
            joiner.add(String.valueOf(field)); // unset fields print as "null"
        }
        return joiner.toString();
    }

    /**
     * @return An iterator which iterates over all the fields of this tuple
     */
    public Iterator<Field> fields() {
        return fields.iterator();
    }

    /**
     * reset the TupleDesc of this tuple (only affecting the TupleDesc)
     */
    public void resetTupleDesc(TupleDesc td) {
        this.td = td;
    }

    @Override
    public boolean equals(Object obj) {
        if (this == obj) {
            return true;
        }
        if (!(obj instanceof Tuple)) {
            return false;
        }
        Tuple other = (Tuple) obj;
        // rid is null for tuples not yet stored on disk, so compare null-safely
        return Objects.equals(rid, other.rid)
                && Objects.equals(td, other.td)
                && fields.equals(other.fields);
    }

    @Override
    public int hashCode() {
        // must agree with equals(): equal tuples produce equal hash codes
        return Objects.hash(rid, td, fields);
    }
}
```

- TupleDesc Class:

```java
import java.io.Serializable;
import java.util.Iterator;
import java.util.NoSuchElementException;
import java.util.StringJoiner;
import java.util.concurrent.CopyOnWriteArrayList;

public class TupleDesc implements Serializable, Cloneable {

    private static final long serialVersionUID = 1L;

    CopyOnWriteArrayList<TDItem> tdItems;

    public CopyOnWriteArrayList<TDItem> getTdItems() {
        return tdItems;
    }

    @Override
    public TupleDesc clone() {
        try {
            TupleDesc copy = (TupleDesc) super.clone();
            // super.clone() is shallow: give the copy its own list so adding items to one
            // does not affect the other (TDItem is immutable, so sharing the items is fine)
            copy.tdItems = new CopyOnWriteArrayList<>(tdItems);
            return copy;
        } catch (CloneNotSupportedException e) {
            throw new AssertionError(e);
        }
    }

    /**
     * A help class to facilitate organizing the information of each field
     */
    public static class TDItem implements Serializable {

        private static final long serialVersionUID = 1L;

        /** The type of the field */
        public final Type fieldType;

        /** The name of the field */
        public final String fieldName;

        public TDItem(Type t, String n) {
            this.fieldName = n;
            this.fieldType = t;
        }

        public String toString() {
            return fieldName + "(" + fieldType + ")";
        }
    }

    /**
     * @return An iterator which iterates over all the field TDItems
     *         that are included in this TupleDesc
     */
    public Iterator<TDItem> iterator() {
        return tdItems.iterator();
    }

    /**
     * Create a new TupleDesc with typeAr.length fields with fields of the
     * specified types, with associated named fields.
     *
     * @param typeAr  array specifying the number of and types of fields in this
     *                TupleDesc. It must contain at least one entry.
     * @param fieldAr array specifying the names of the fields. Note that names may
     *                be null.
     */
    public TupleDesc(Type[] typeAr, String[] fieldAr) {
        tdItems = new CopyOnWriteArrayList<>();
        for (int i = 0; i < typeAr.length; i++) {
            tdItems.add(new TDItem(typeAr[i], fieldAr[i]));
        }
    }

    /**
     * Constructor. Create a new tuple desc with typeAr.length fields with
     * fields of the specified types, with anonymous (unnamed) fields.
     *
     * @param typeAr array specifying the number of and types of fields in this
     *               TupleDesc. It must contain at least one entry.
     */
    public TupleDesc(Type[] typeAr) {
        tdItems = new CopyOnWriteArrayList<>();
        for (Type type : typeAr) {
            tdItems.add(new TDItem(type, null));
        }
    }

    /** Empty descriptor, used internally by merge() */
    public TupleDesc() {
        tdItems = new CopyOnWriteArrayList<>();
    }

    /**
     * @return the number of fields in this TupleDesc
     */
    public int numFields() {
        return tdItems.size();
    }

    /**
     * Gets the (possibly null) field name of the ith field of this TupleDesc.
     *
     * @param i index of the field name to return. It must be a valid index.
     * @return the name of the ith field
     * @throws NoSuchElementException if i is not a valid field reference.
     */
    public String getFieldName(int i) throws NoSuchElementException {
        checkIndex(i);
        return tdItems.get(i).fieldName;
    }

    /**
     * Gets the type of the ith field of this TupleDesc.
     *
     * @param i The index of the field to get the type of. It must be a valid
     *          index.
     * @return the type of the ith field
     * @throws NoSuchElementException if i is not a valid field reference.
     */
    public Type getFieldType(int i) throws NoSuchElementException {
        checkIndex(i);
        return tdItems.get(i).fieldType;
    }

    /** Throws NoSuchElementException (as the javadoc requires) instead of IndexOutOfBoundsException */
    private void checkIndex(int i) {
        if (i < 0 || i >= tdItems.size()) {
            throw new NoSuchElementException("i is not a valid field reference: " + i);
        }
    }

    /**
     * Find the index of the field with a given name.
     *
     * @param name name of the field.
     * @return the index of the field that is first to have the given name.
     * @throws NoSuchElementException if no field with a matching name is found.
     */
    public int indexForFieldName(String name) throws NoSuchElementException {
        if (name == null) {
            throw new NoSuchElementException("no field with a matching name is found.");
        }
        // A merged TupleDesc may not carry the table alias, so also try the part
        // after the last '.', e.g. "t.id" -> "id"
        String altName = name.substring(name.lastIndexOf('.') + 1);
        for (int i = 0; i < tdItems.size(); i++) {
            String fieldName = tdItems.get(i).fieldName;
            if (name.equals(fieldName) || altName.equals(fieldName)) {
                return i;
            }
        }
        throw new NoSuchElementException("no field with a matching name is found.");
    }

    /**
     * @return The size (in bytes) of tuples corresponding to this TupleDesc.
     *         Note that tuples from a given TupleDesc are of a fixed size.
     */
    public int getSize() {
        int size = 0;
        for (TDItem item : tdItems) {
            size += item.fieldType.getLen();
        }
        return size;
    }

    /**
     * Merge two TupleDescs into one, with td1.numFields + td2.numFields fields,
     * with the first td1.numFields coming from td1 and the remaining from td2.
     *
     * @param td1 The TupleDesc with the first fields of the new TupleDesc
     * @param td2 The TupleDesc with the last fields of the TupleDesc
     * @return the new TupleDesc
     */
    public static TupleDesc merge(TupleDesc td1, TupleDesc td2) {
        if (td1 == null) {
            return td2;
        }
        if (td2 == null) {
            return td1;
        }
        TupleDesc tupleDesc = new TupleDesc();
        tupleDesc.tdItems.addAll(td1.tdItems);
        tupleDesc.tdItems.addAll(td2.tdItems);
        return tupleDesc;
    }

    /**
     * Compares the specified object with this TupleDesc for equality. Two
     * TupleDescs are considered equal if they have the same number of items
     * and if the i-th type in this TupleDesc is equal to the i-th type in o
     * for every i.
     *
     * @param o the Object to be compared for equality with this TupleDesc.
     * @return true if the object is equal to this TupleDesc.
     */
    @Override
    public boolean equals(Object o) {
        if (!(o instanceof TupleDesc)) {
            return false;
        }
        TupleDesc other = (TupleDesc) o;
        if (other.numFields() != this.numFields()) {
            return false;
        }
        for (int i = 0; i < this.numFields(); i++) {
            if (!this.getFieldType(i).equals(other.getFieldType(i))) {
                return false;
            }
        }
        return true;
    }

    @Override
    public int hashCode() {
        // equals() compares only field types, so hash only the types:
        // equal TupleDescs must have equal hash codes even if field names differ
        int result = 1;
        for (TDItem item : tdItems) {
            result = 31 * result + item.fieldType.hashCode();
        }
        return result;
    }

    /**
     * Returns a String describing this descriptor. It should be of the form
     * "fieldType[0](fieldName[0]), ..., fieldType[M](fieldName[M])", although
     * the exact format does not matter.
     *
     * @return String describing this descriptor.
     */
    public String toString() {
        // StringJoiner also handles an empty descriptor safely
        StringJoiner joiner = new StringJoiner(", ");
        for (TDItem tdItem : tdItems) {
            joiner.add(tdItem.fieldType + "(" + tdItem.fieldName + ")");
        }
        return joiner.toString();
    }
}
```

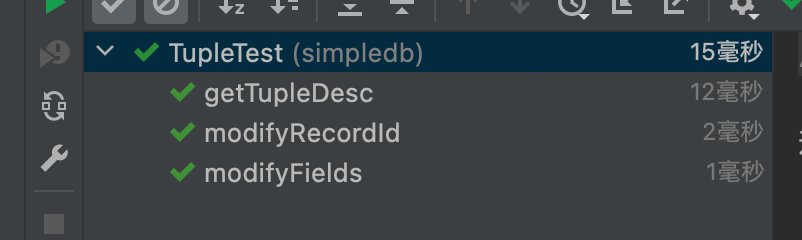

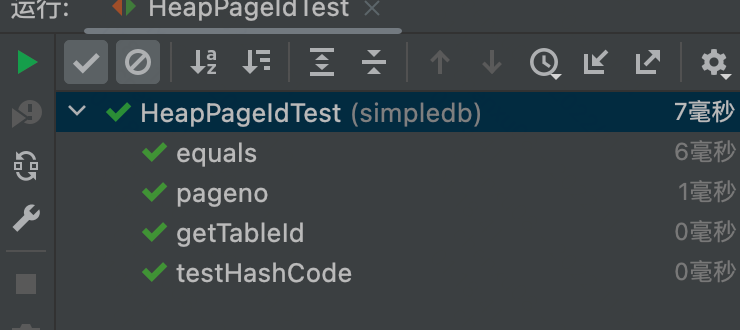

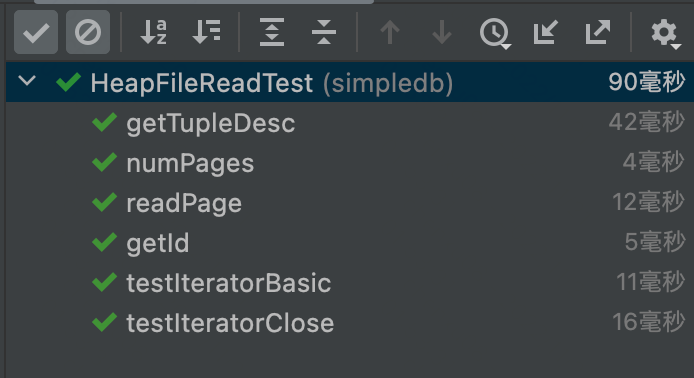

- 测试结果:

2.2 Exercise 2: Catalog

The catalog (class Catalog in SimpleDB) consists of a list of the tables and schemas of the tables that are currently in the database. You will need to support the ability to add a new table, as well as getting information about a particular table. Associated with each table is a TupleDesc object that allows operators to determine the types and number of fields in a table.

The global catalog is a single instance of Catalog that is allocated for the entire SimpleDB process. The global catalog can be retrieved via the method Database.getCatalog(), and the same goes for the global buffer pool ( using Database.getBufferPool()).

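The Catalog is the database's entry point for managing tables. It is a little like the notion of a "database," but mostly it stores the mapping from each table's id or name to the table itself, so that these files (tables) can be accessed easily. That is why it is called a catalog.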
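Here is a minimal sketch of the two lookups a catalog needs. It assumes a table is identified by an int id and a name. `MiniCatalog` and `TableInfo` are hypothetical; the real Catalog stores a `DbFile` per table. The sketch applies the rules from the `addTable` javadoc: on a name conflict the last table added wins, and re-adding an id under a new name drops that id's old name.

```java
import java.util.Map;
import java.util.NoSuchElementException;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical minimal catalog: id -> table info, plus name -> id for lookups by name.
final class MiniCatalog {
    // Stand-in for the real per-table data (DbFile, primary key, ...)
    static final class TableInfo {
        final int id;
        final String name;
        TableInfo(int id, String name) { this.id = id; this.name = name; }
    }

    private final Map<Integer, TableInfo> byId = new ConcurrentHashMap<>();
    private final Map<String, Integer> byName = new ConcurrentHashMap<>();

    // synchronized so both maps are updated together
    synchronized void addTable(int id, String name) {
        TableInfo old = byId.put(id, new TableInfo(id, name));
        if (old != null) byName.remove(old.name, id); // same id re-added: forget its old name
        byName.put(name, id);                         // same name re-added: last one wins
    }

    int getTableId(String name) {
        Integer id = (name == null) ? null : byName.get(name);
        if (id == null) throw new NoSuchElementException("no table named " + name);
        return id;
    }

    String getTableName(int id) {
        TableInfo t = byId.get(id);
        if (t == null) throw new NoSuchElementException("no table with id " + id);
        return t.name;
    }
}
```

Keeping the maps in sync on every `addTable` call is why `clear()` in the real Catalog must also reset both maps.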

- Catalog Class:

```java
import java.io.BufferedReader;
import java.io.File;
import java.io.FileReader;
import java.io.IOException;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.NoSuchElementException;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

/**
 * The Catalog keeps track of all available tables in the database and their
 * associated schemas.
 * For now, this is a stub catalog that must be populated with tables by a
 * user program before it can be used -- eventually, this should be converted
 * to a catalog that reads a catalog table from disk.
 *
 * @Threadsafe
 */
public class Catalog {

    /** table id -> table */
    ConcurrentHashMap<Integer, Table> tableIdMap;

    /** table name -> table id */
    ConcurrentHashMap<String, Integer> tableNameMap;

    /**
     * Constructor.
     * Creates a new, empty catalog.
     */
    public Catalog() {
        tableIdMap = new ConcurrentHashMap<>();
        tableNameMap = new ConcurrentHashMap<>();
    }

    /**
     * Add a new table to the catalog.
     * This table's contents are stored in the specified DbFile.
     *
     * @param file      the contents of the table to add; file.getId() is the identifier of
     *                  this file/tupledesc param for the calls getTupleDesc and getFile
     * @param name      the name of the table -- may be an empty string. May not be null. If a name
     *                  conflict exists, use the last table to be added as the table for a given name.
     * @param pkeyField the name of the primary key field
     */
    public synchronized void addTable(DbFile file, String name, String pkeyField) {
        if (name == null) {
            throw new IllegalArgumentException("table name may not be null");
        }
        int id = file.getId();
        Table old = tableIdMap.put(id, new Table(file, name, pkeyField));
        // Same id added again under another name: drop the stale name -> id mapping
        if (old != null) {
            tableNameMap.remove(old.getName(), id);
        }
        // Same name added again: the last table added wins
        tableNameMap.put(name, id);
    }

    public void addTable(DbFile file, String name) {
        addTable(file, name, "");
    }

    /**
     * Add a new table to the catalog.
     * This table has tuples formatted using the specified TupleDesc and its
     * contents are stored in the specified DbFile.
     *
     * @param file the contents of the table to add; file.getId() is the identifier of
     *             this file/tupledesc param for the calls getTupleDesc and getFile
     */
    public void addTable(DbFile file) {
        addTable(file, (UUID.randomUUID()).toString());
    }

    /**
     * Return the id of the table with a specified name,
     *
     * @throws NoSuchElementException if the table doesn't exist
     */
    public int getTableId(String name) throws NoSuchElementException {
        // ConcurrentHashMap rejects null keys, so check the name first
        Integer id = (name == null) ? null : tableNameMap.get(name);
        if (id == null) {
            throw new NoSuchElementException("table doesn't exist: " + name);
        }
        return id;
    }

    /**
     * Returns the tuple descriptor (schema) of the specified table
     *
     * @param tableid The id of the table, as specified by the DbFile.getId()
     *                function passed to addTable
     * @throws NoSuchElementException if the table doesn't exist
     */
    public TupleDesc getTupleDesc(int tableid) throws NoSuchElementException {
        return getTable(tableid).getFile().getTupleDesc();
    }

    /**
     * Returns the DbFile that can be used to read the contents of the
     * specified table.
     *
     * @param tableid The id of the table, as specified by the DbFile.getId()
     *                function passed to addTable
     */
    public DbFile getDatabaseFile(int tableid) throws NoSuchElementException {
        return getTable(tableid).getFile();
    }

    public String getPrimaryKey(int tableid) {
        return getTable(tableid).getPkeyField();
    }

    public Iterator<Integer> tableIdIterator() {
        return tableIdMap.keySet().iterator();
    }

    public String getTableName(int id) {
        return getTable(id).getName();
    }

    /** Looks up a table by id, throwing NoSuchElementException if it is not registered */
    private Table getTable(int tableid) {
        Table table = tableIdMap.get(tableid);
        if (table == null) {
            throw new NoSuchElementException("table doesn't exist: " + tableid);
        }
        return table;
    }

    /**
     * Delete all tables from the catalog
     */
    public synchronized void clear() {
        // Clear both maps; otherwise old names would still resolve to removed ids
        tableIdMap.clear();
        tableNameMap.clear();
    }

    /**
     * Reads the schema from a file and creates the appropriate tables in the database.
     *
     * @param catalogFile
     */
    public void loadSchema(String catalogFile) {
        String line = "";
        String baseFolder = new File(new File(catalogFile).getAbsolutePath()).getParent();
        // try-with-resources closes the reader even when parsing fails
        try (BufferedReader br = new BufferedReader(new FileReader(catalogFile))) {
            while ((line = br.readLine()) != null) {
                //assume line is of the format name (field type, field type, ...)
                String name = line.substring(0, line.indexOf("(")).trim();
                //System.out.println("TABLE NAME: " + name);
                String fields = line.substring(line.indexOf("(") + 1, line.indexOf(")")).trim();
                String[] els = fields.split(",");
                List<String> names = new ArrayList<>();
                List<Type> types = new ArrayList<>();
                String primaryKey = "";
                for (String e : els) {
                    String[] els2 = e.trim().split(" ");
                    names.add(els2[0].trim());
                    if (els2[1].trim().equalsIgnoreCase("int"))
                        types.add(Type.INT_TYPE);
                    else if (els2[1].trim().equalsIgnoreCase("string"))
                        types.add(Type.STRING_TYPE);
                    else {
                        System.out.println("Unknown type " + els2[1]);
                        System.exit(0);
                    }
                    if (els2.length == 3) {
                        if (els2[2].trim().equals("pk"))
                            primaryKey = els2[0].trim();
                        else {
                            System.out.println("Unknown annotation " + els2[2]);
                            System.exit(0);
                        }
                    }
                }
                Type[] typeAr = types.toArray(new Type[0]);
                String[] namesAr = names.toArray(new String[0]);
                TupleDesc t = new TupleDesc(typeAr, namesAr);
                HeapFile tabHf = new HeapFile(new File(baseFolder + "/" + name + ".dat"), t);
                addTable(tabHf, name, primaryKey);
                System.out.println("Added table : " + name + " with schema " + t);
            }
        } catch (IOException e) {
            e.printStackTrace();
            System.exit(0);
        } catch (IndexOutOfBoundsException e) {
            System.out.println("Invalid catalog entry : " + line);
            System.exit(0);
        }
    }
}
```

Here I defined a Table class of my own. It uses Lombok's `@Data` to generate the getters that Catalog calls:

```java
import lombok.Data;

@Data
public class Table {

    /**
     * the contents of the table to add; file.getId() is the identifier of
     * this file/tupledesc param for the calls getTupleDesc and getFile
     */
    private DbFile file;

    /**
     * the name of the table -- may be an empty string. May not be null. If a name
     * conflict exists, use the last table to be added as the table for a given name.
     */
    private String name;

    /**
     * the name of the primary key field
     */
    private String pkeyField;

    public Table(DbFile file, String name, String pkeyField) {
        this.file = file;
        this.name = name;
        this.pkeyField = pkeyField;
    }

    public Table(DbFile file, String name) {
        // Delegate with this(...): writing "new Table(...)" here would build a
        // throwaway object and leave this instance's fields null
        this(file, name, "");
    }
}
```

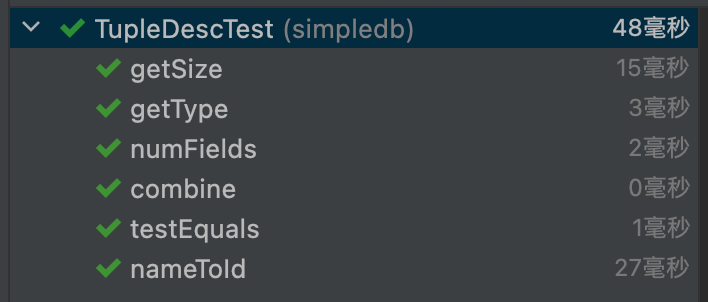

- 测试结果:

2.3 Exercise 3: BufferPool

The buffer pool (class BufferPool in SimpleDB) is responsible for caching pages in memory that have been recently read from disk. All operators read and write pages from various files on disk through the buffer pool. It consists of a fixed number of pages, defined by the numPages parameter to the BufferPool constructor. In later labs, you will implement an eviction policy. For this lab, you only need to implement the constructor and the BufferPool.getPage() method used by the SeqScan operator. The BufferPool should store up to numPages pages. For this lab, if more than numPages requests are made for different pages, then instead of implementing an eviction policy, you may throw a DbException. In future labs you will be required to implement an eviction policy.

The Database class provides a static method, Database.getBufferPool(), that returns a reference to the single BufferPool instance for the entire SimpleDB process.

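Although the data is stored on disk, reading it from disk on every access would perform terribly. To speed up queries, data read from disk is therefore cached in memory, so the next query for the same data can read and process it directly from memory. The data is divided into pages, and a page is the basic unit of transfer between disk and memory. (InnoDB works the same way; its default page size is 16KB, while SimpleDB's default is 4096 bytes.) Pages are also the subject of the next exercise: heap pages.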
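The caching idea, together with Lab 1's "throw instead of evict" rule from the quote above, can be sketched as a small generic cache. This is an illustration under assumptions, not SimpleDB code. `MiniBufferPool` is hypothetical, a `Function` stands in for `DbFile.readPage`, and `IllegalStateException` stands in for `DbException` so the sketch compiles on its own.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical sketch of Lab 1 buffer-pool semantics: cache pages by id,
// read from "disk" on a miss, and refuse (instead of evicting) when full.
final class MiniBufferPool<K, P> {
    private final int numPages;
    private final Map<K, P> pages = new HashMap<>(); // guarded by synchronized methods
    private final Function<K, P> disk;               // stand-in for DbFile.readPage
    private int diskReads = 0;

    MiniBufferPool(int numPages, Function<K, P> disk) {
        this.numPages = numPages;
        this.disk = disk;
    }

    synchronized P getPage(K pid) {
        P page = pages.get(pid);
        if (page != null) return page;   // hit: served from memory
        if (pages.size() >= numPages) {
            // Lab 1 allows failing here; later labs evict a page instead
            throw new IllegalStateException("buffer pool full (" + numPages + " pages)");
        }
        page = disk.apply(pid);          // miss: read from "disk"
        diskReads++;
        pages.put(pid, page);
        return page;
    }

    synchronized int diskReads() { return diskReads; }
}
```

For example, with `new MiniBufferPool<Integer, String>(2, id -> "page-" + id)`, a second `getPage(1)` is served from memory, so only one simulated disk read happens. Once two distinct pages are cached, a request for a third fails, and that is the spot where later labs plug in an eviction policy.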

- BufferPool Class:

```java
import java.io.IOException;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/**
 * BufferPool manages the reading and writing of pages into memory from
 * disk. Access methods call into it to retrieve pages, and it fetches
 * pages from the appropriate location.
 * <p>
 * The BufferPool is also responsible for locking; when a transaction fetches
 * a page, BufferPool checks that the transaction has the appropriate
 * locks to read/write the page.
 *
 * @Threadsafe, all fields are final
 */
public class BufferPool {
    /**
     * Bytes per page, including header.
     */
    private static final int DEFAULT_PAGE_SIZE = 4096;

    private static int pageSize = DEFAULT_PAGE_SIZE;

    /**
     * Default number of pages passed to the constructor. This is used by
     * other classes. BufferPool should use the numPages argument to the
     * constructor instead.
     */
    public static final int DEFAULT_PAGES = 50;

    /** Maximum number of pages this pool may hold */
    private final int numPages;

    /** Cached pages, keyed by page id */
    private final Map<PageId, Page> bufferPool = new ConcurrentHashMap<>();

    /**
     * Creates a BufferPool that caches up to numPages pages.
     *
     * @param numPages maximum number of pages in this buffer pool.
     */
    public BufferPool(int numPages) {
        this.numPages = numPages;
    }

    public static int getPageSize() {
        return pageSize;
    }

    // THIS FUNCTION SHOULD ONLY BE USED FOR TESTING!!
    public static void setPageSize(int pageSize) {
        BufferPool.pageSize = pageSize;
    }

    // THIS FUNCTION SHOULD ONLY BE USED FOR TESTING!!
    public static void resetPageSize() {
        BufferPool.pageSize = DEFAULT_PAGE_SIZE;
    }

    /**
     * Retrieve the specified page with the associated permissions.
     * Will acquire a lock and may block if that lock is held by another
     * transaction.
     * <p>
     * The retrieved page should be looked up in the buffer pool. If it
     * is present, it should be returned. If it is not present, it should
     * be added to the buffer pool and returned. If there is insufficient
     * space in the buffer pool, a page should be evicted and the new page
     * should be added in its place.
     *
     * @param tid  the ID of the transaction requesting the page
     * @param pid  the ID of the requested page
     * @param perm the requested permissions on the page
     */
    public Page getPage(TransactionId tid, PageId pid, Permissions perm)
            throws TransactionAbortedException, DbException {
        // TODO: transactions / locking (later labs)
        // TODO: the buffer pool should ideally live in direct (off-heap) memory
        Page page = bufferPool.get(pid);
        if (page == null) {
            if (bufferPool.size() >= numPages) {
                // Lab 1 has no eviction policy yet; the lab allows throwing a DbException instead
                throw new DbException("BufferPool is full: at most " + numPages + " pages");
            }
            // Cache miss: read the page from its table's file, then cache it
            DbFile file = Database.getCatalog().getDatabaseFile(pid.getTableId());
            page = file.readPage(pid);
            bufferPool.put(pid, page);
        }
        return page;
    }

    /**
     * Releases the lock on a page.
     * Calling this is very risky, and may result in wrong behavior. Think hard
     * about who needs to call this and why, and why they can run the risk of
     * calling it.
     *
     * @param tid the ID of the transaction requesting the unlock
     * @param pid the ID of the page to unlock
     */
    public void unsafeReleasePage(TransactionId tid, PageId pid) {
        // TODO: some code goes here
        // not necessary for lab1|lab2
    }

    /**
     * Release all locks associated with a given transaction.
     *
     * @param tid the ID of the transaction requesting the unlock
     */
    public void transactionComplete(TransactionId tid) {
        // TODO: some code goes here
        // not necessary for lab1|lab2
    }

    /**
     * Return true if the specified transaction has a lock on the specified page
     */
    public boolean holdsLock(TransactionId tid, PageId p) {
        // TODO: some code goes here
        // not necessary for lab1|lab2
        return false;
    }

    /**
     * Commit or abort a given transaction; release all locks associated to
     * the transaction.
     *
     * @param tid    the ID of the transaction requesting the unlock
     * @param commit a flag indicating whether we should commit or abort
     */
    public void transactionComplete(TransactionId tid, boolean commit) {
        // TODO: some code goes here
        // not necessary for lab1|lab2
    }

    /**
     * Add a tuple to the specified table on behalf of transaction tid. Will
     * acquire a write lock on the page the tuple is added to and any other
     * pages that are updated (Lock acquisition is not needed for lab2).
     * May block if the lock(s) cannot be acquired.
     * <p>
     * Marks any pages that were dirtied by the operation as dirty by calling
     * their markDirty bit, and adds versions of any pages that have
     * been dirtied to the cache (replacing any existing versions of those pages) so
     * that future requests see up-to-date pages.
     *
     * @param tid     the transaction adding the tuple
     * @param tableId the table to add the tuple to
     * @param t       the tuple to add
     */
    public void insertTuple(TransactionId tid, int tableId, Tuple t)
            throws DbException, IOException, TransactionAbortedException {
        // TODO: some code goes here
        // not necessary for lab1
    }

    /**
     * Remove the specified tuple from the buffer pool.
     * Will acquire a write lock on the page the tuple is removed from and any
     * other pages that are updated. May block if the lock(s) cannot be acquired.
     * <p>
     * Marks any pages that were dirtied by the operation as dirty by calling
     * their markDirty bit, and adds versions of any pages that have
     * been dirtied to the cache (replacing any existing versions of those pages) so
     * that future requests see up-to-date pages.
     *
     * @param tid the transaction deleting the tuple.
     * @param t   the tuple to delete
     */
    public void deleteTuple(TransactionId tid, Tuple t)
            throws DbException, IOException, TransactionAbortedException {
        // TODO: some code goes here
        // not necessary for lab1
    }

    /**
     * Flush all dirty pages to disk.
     * NB: Be careful using this routine -- it writes dirty data to disk so will
     * break simpledb if running in NO STEAL mode.
     */
    public synchronized void flushAllPages() throws IOException {
        // TODO: some code goes here
        // not necessary for lab1
    }

    /**
     * Remove the specific page id from the buffer pool.
     * Needed by the recovery manager to ensure that the
     * buffer pool doesn't keep a rolled back page in its
     * cache.
     * <p>
     * Also used by B+ tree files to ensure that deleted pages
     * are removed from the cache so they can be reused safely
     */
    public synchronized void removePage(PageId pid) {
        // TODO: some code goes here
        // not necessary for lab1
    }

    /**
     * Flushes a certain page to disk
     *
     * @param pid an ID indicating the page to flush
     */
    private synchronized void flushPage(PageId pid) throws IOException {
        // TODO: some code goes here
        // not necessary for lab1
    }

    /**
     * Write all pages of the specified transaction to disk.
     */
    public synchronized void flushPages(TransactionId tid) throws IOException {
        // TODO: some code goes here
        // not necessary for lab1|lab2
    }

    /**
     * Discards a page from the buffer pool.
     * Flushes the page to disk to ensure dirty pages are updated on disk.
     */
    private synchronized void evictPage() throws DbException {
        // TODO: some code goes here
        // not necessary for lab1
    }
}
```

2.4 Exercise 4: HeapFile access method

Access methods provide a way to read or write data from disk that is arranged in a specific way. Common access methods include heap files (unsorted files of tuples) and B-trees; for this assignment, you will only implement a heap file access method, and we have written some of the code for you.

A HeapFile object is arranged into a set of pages, each of which consists of a fixed number of bytes for storing tuples, (defined by the constant BufferPool.DEFAULT_PAGE_SIZE), including a header. In SimpleDB, there is one HeapFile object for each table in the database. Each page in a HeapFile is arranged as a set of slots, each of which can hold one tuple (tuples for a given table in SimpleDB are all of the same size). In addition to these slots, each page has a header that consists of a bitmap with one bit per tuple slot. If the bit corresponding to a particular tuple is 1, it indicates that the tuple is valid; if it is 0, the tuple is invalid (e.g., has been deleted or was never initialized.) Pages of HeapFile objects are of type HeapPage which implements the Page interface. Pages are stored in the buffer pool but are read and written by the HeapFile class.

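- In short, a heap page contains a number of slots, and each slot can store one tuple. The page also has a header bitmap in which each bit records whether the corresponding slot currently holds a tuple. As the title "HeapFile access method" suggests, heap pages hold the actual data of the heap file that the next exercise implements.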

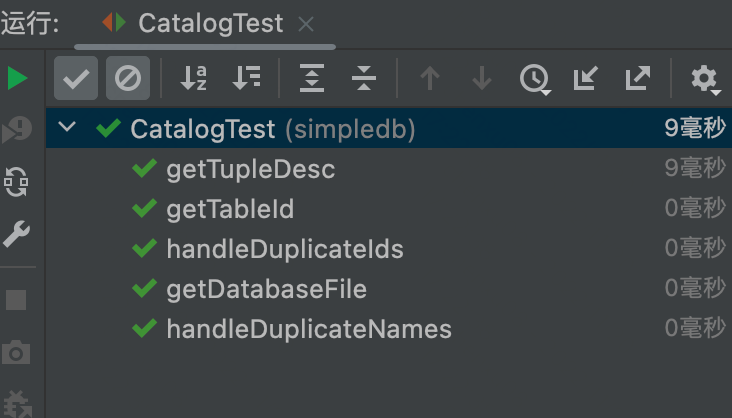
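The header bitmap can be sketched as follows. The sketch assumes the bit order stated in the `HeapPage` source comment: the lowest bit of the first byte is slot 0, the next bit is slot 1, and so on. `SlotBitmap` and its methods are hypothetical helpers, not the lab's API.

```java
// Hypothetical helpers for a HeapPage-style header: bit i says whether slot i holds a tuple.
// Assumed bit order: slot i lives in header[i / 8], at bit (i % 8) counted from the lowest bit.
final class SlotBitmap {
    static boolean isSlotUsed(byte[] header, int i) {
        // sign extension of the byte only affects bits above bit 7, so bit (i % 8) is read correctly
        return ((header[i / 8] >> (i % 8)) & 1) == 1;
    }

    static void markSlotUsed(byte[] header, int i, boolean used) {
        int mask = 1 << (i % 8);
        if (used) {
            header[i / 8] |= (byte) mask;  // set the bit
        } else {
            header[i / 8] &= (byte) ~mask; // clear the bit
        }
    }

    // Number of empty slots among the first numSlots slots
    static int getNumEmptySlots(byte[] header, int numSlots) {
        int empty = 0;
        for (int i = 0; i < numSlots; i++) {
            if (!isSlotUsed(header, i)) empty++;
        }
        return empty;
    }
}
```

Marking slots 0 and 9 in a 2-byte header, for instance, sets `header[0]` to 1 and `header[1]` to 2, leaving 10 of the first 12 slots empty.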

Back to the lab: the main thing to watch in HeapPage is how the number of tuples per page and the header size are calculated. The introduction gives the formulas:

- tuples per page = floor((page size * 8) / (tuple size * 8 + 1))

- header bytes = ceiling(tuples per page / 8)

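As long as you remember that these calculations convert between bits and bytes, not much can go wrong. Each tuple costs its size in bytes times 8 bits, plus one header bit.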
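Here is a worked example of the two formulas. It assumes the default 4096-byte page and a hypothetical schema of two 4-byte ints, giving a tuple size of 8. `HeapPageMath` is an illustrative helper, not part of the lab.

```java
// Worked example of the HeapPage sizing formulas (illustrative helper).
final class HeapPageMath {
    // floor((pageSize * 8) / (tupleSize * 8 + 1)): each tuple needs tupleSize*8 bits + 1 header bit
    static int tuplesPerPage(int pageSize, int tupleSize) {
        return (pageSize * 8) / (tupleSize * 8 + 1); // integer division == floor for positive ints
    }

    // ceiling(tuplesPerPage / 8): one header bit per slot, rounded up to whole bytes
    static int headerBytes(int tuplesPerPage) {
        return (tuplesPerPage + 7) / 8;
    }

    public static void main(String[] args) {
        int pageSize = 4096; // default page size
        int tupleSize = 8;   // assumed schema: two 4-byte INT fields
        int n = tuplesPerPage(pageSize, tupleSize);
        int h = headerBytes(n);
        System.out.println(n + " tuples, " + h + " header bytes, "
                + (h + n * tupleSize) + " of " + pageSize + " bytes used");
        // prints: 504 tuples, 63 header bytes, 4095 of 4096 bytes used
    }
}
```

`(n + 7) / 8` is the usual integer form of the ceiling and gives the same result as `Math.ceil((double) n / 8)`. The 504 slots plus the 63-byte header fit inside the page with one byte to spare.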

- HeapPageId Class:

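This class mainly records the mapping between a HeapFile and its HeapPages. Each tableId has multiple page numbers (pgNo), that is, multiple pages, so the relationship is one-to-many.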
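The BufferPool looks pages up by id in a hash map, so a page id must behave as a value: ids with equal `(tableId, pgNo)` pairs must be `equals` and return the same `hashCode`. The minimal sketch below uses a hypothetical immutable `PageKey` class, not the lab's `HeapPageId`.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

// Hypothetical immutable page id: (tableId, pgNo). equals/hashCode cover both
// fields, so two ids naming the same page hit the same HashMap entry.
final class PageKey {
    private final int tableId;
    private final int pgNo;

    PageKey(int tableId, int pgNo) {
        this.tableId = tableId;
        this.pgNo = pgNo;
    }

    int tableId() { return tableId; }
    int pgNo() { return pgNo; }

    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof PageKey)) return false;
        PageKey other = (PageKey) o;
        return tableId == other.tableId && pgNo == other.pgNo;
    }

    @Override
    public int hashCode() {
        return Objects.hash(tableId, pgNo);
    }
}

class PageKeyDemo {
    public static void main(String[] args) {
        Map<PageKey, String> cache = new HashMap<>();
        cache.put(new PageKey(7, 0), "table 7, page 0");
        cache.put(new PageKey(7, 1), "table 7, page 1"); // one table, many pages
        // a freshly constructed but equal key finds the cached entry
        System.out.println(cache.get(new PageKey(7, 1)));
    }
}
```

Making the fields `final` is a deliberate design choice. If a key's fields change after it has been put into a `HashMap`, that entry can no longer be found.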

```java
import java.util.Objects;

/**
 * Unique identifier for HeapPage objects.
 */
public class HeapPageId implements PageId {

    /**
     * The table that is being referenced
     */
    private int tableId;

    /**
     * The page number in that table.
     */
    private int pgNo;

    /**
     * Constructor. Create a page id structure for a specific page of a
     * specific table.
     * Think of it as mapping a table id to one of its pages,
     * e.g. table 1 / page 1, table 1 / page 2, ...
     *
     * @param tableId The table that is being referenced
     * @param pgNo    The page number in that table.
     */
    public HeapPageId(int tableId, int pgNo) {
        this.tableId = tableId;
        this.pgNo = pgNo;
    }

    /**
     * @return the table associated with this PageId
     */
    public int getTableId() {
        return tableId;
    }

    /**
     * @return the page number in the table getTableId() associated with
     *         this PageId
     */
    public int getPageNumber() {
        return pgNo;
    }

    // Caution: changing an id after it has been used as a HashMap key
    // (e.g. in BufferPool) makes that cache entry unreachable
    public void setTableId(int tableId) {
        this.tableId = tableId;
    }

    public void setPgNo(int pgNo) {
        this.pgNo = pgNo;
    }

    /**
     * @return a hash code for this page, represented by a combination of
     *         the table number and the page number (needed if a PageId is used as a
     *         key in a hash table in the BufferPool, for example.)
     * @see BufferPool
     */
    @Override
    public int hashCode() {
        // Combine both fields; pgNo * 1000 + tableId collides easily,
        // e.g. (tableId=1000, pgNo=0) vs (tableId=0, pgNo=1)
        return Objects.hash(tableId, pgNo);
    }

    /**
     * Compares one PageId to another.
     *
     * @param o The object to compare against (must be a PageId)
     * @return true if the objects are equal (e.g., page numbers and table
     *         ids are the same)
     */
    @Override
    public boolean equals(Object o) {
        if (!(o instanceof HeapPageId)) {
            return false;
        }
        HeapPageId other = (HeapPageId) o;
        return this.getPageNumber() == other.getPageNumber()
                && this.getTableId() == other.getTableId();
    }

    /**
     * Return a representation of this object as an array of
     * integers, for writing to disk. Size of returned array must contain
     * number of integers that corresponds to number of args to one of the
     * constructors.
     */
    public int[] serialize() {
        int[] data = new int[2];
        data[0] = getTableId();
        data[1] = getPageNumber();
        return data;
    }
}
```

- HeapPage Class:

/**

* Each instance of HeapPage stores data for one page of HeapFiles and

* implements the Page interface that is used by BufferPool.

*

* @see HeapFile

* @see BufferPool

*/

public class HeapPage implements Page {

final HeapPageId pid;

final TupleDesc td;

/**

* the lowest bit of the first byte represents whether or not the first slot in the page is in use.

* The second lowest bit of the first byte represents whether or not the second slot in the page is in use

*/

final byte[] header;

final Tuple[] tuples;

/**

* Each page in a HeapFile is arranged as a set of slots, each of which can hold one tuple (tuples for a given table

* in SimpleDB are all of the same size)

*/

final int numSlots;

byte[] oldData;

private final Byte oldDataLock = (byte) 0;

/**

* Create a HeapPage from a set of bytes of data read from disk.

* The format of a HeapPage is a set of header bytes indicating

* the slots of the page that are in use, some number of tuple slots.

* Specifically, the number of tuples is equal to: <p>

* floor((BufferPool.getPageSize()*8) / (tuple size * 8 + 1))

* <p> where tuple size is the size of tuples in this

* database table, which can be determined via {@link Catalog#getTupleDesc}.

* The number of 8-bit header words is equal to:

* <p>

* ceiling(no. tuple slots / 8)

* <p>

*

* @see Database#getCatalog

* @see Catalog#getTupleDesc

* @see BufferPool#getPageSize()

*/

public HeapPage(HeapPageId id, byte[] data) throws IOException {

this.pid = id;

this.td = Database.getCatalog().getTupleDesc(id.getTableId());

this.numSlots = getNumTuples();

DataInputStream dis = new DataInputStream(new ByteArrayInputStream(data));

// allocate and read the header slots of this page

header = new byte[getHeaderSize()];

for (int i = 0; i < header.length; i++)

header[i] = dis.readByte();

tuples = new Tuple[numSlots];

try {

// allocate and read the actual records of this page

for (int i = 0; i < tuples.length; i++)

tuples[i] = readNextTuple(dis, i);

} catch (NoSuchElementException e) {

e.printStackTrace();

}

dis.close();

setBeforeImage();

}

/**

* Retrieve the number of tuples on this page.

*

* @return the number of tuples on this page

*/

private int getNumTuples() {

// some code goes here

return (BufferPool.getPageSize() * 8) / (td.getSize() * 8 +1);

}

/**

* Computes the number of bytes in the header of a page in a HeapFile with each tuple occupying tupleSize bytes

*

* @return the number of bytes in the header of a page in a HeapFile with each tuple occupying tupleSize bytes

*/

private int getHeaderSize() {

// some code goes here

return (int) Math.ceil((double) getNumTuples() / 8);

}

/**

* Return a view of this page before it was modified

* -- used by recovery

*/

public HeapPage getBeforeImage() {

try {

byte[] oldDataRef = null;

synchronized (oldDataLock) {

oldDataRef = oldData;

}

return new HeapPage(pid, oldDataRef);

} catch (IOException e) {

e.printStackTrace();

//should never happen -- we parsed it OK before!

System.exit(1);

}

return null;

}

public void setBeforeImage() {

synchronized (oldDataLock) {

oldData = getPageData().clone();

}

}

/**

* @return the PageId associated with this page.

*/

public HeapPageId getId() {

// some code goes here

return this.pid;

}

/**

* Suck up tuples from the source file.

*/

private Tuple readNextTuple(DataInputStream dis, int slotId) throws NoSuchElementException {

// if associated bit is not set, read forward to the next tuple, and

// return null.

if (!isSlotUsed(slotId)) {

for (int i = 0; i < td.getSize(); i++) {

try {

dis.readByte();

} catch (IOException e) {


throw new NoSuchElementException("error reading empty tuple");

}

}

return null;

}

// read fields in the tuple

Tuple t = new Tuple(td);

RecordId rid = new RecordId(pid, slotId);

t.setRecordId(rid);

try {

for (int j = 0; j < td.numFields(); j++) {

Field f = td.getFieldType(j).parse(dis);

t.setField(j, f);

}

} catch (java.text.ParseException e) {

e.printStackTrace();

throw new NoSuchElementException("parsing error!");

}

return t;

}

/**

* Generates a byte array representing the contents of this page.

* Used to serialize this page to disk.

* <p>

* The invariant here is that it should be possible to pass the byte

* array generated by getPageData to the HeapPage constructor and

* have it produce an identical HeapPage object.

*

* @return A byte array correspond to the bytes of this page.

* @see #HeapPage

*/

public byte[] getPageData() {

int len = BufferPool.getPageSize();

ByteArrayOutputStream baos = new ByteArrayOutputStream(len);

DataOutputStream dos = new DataOutputStream(baos);

// create the header of the page

for (byte b : header) {

try {

dos.writeByte(b);

} catch (IOException e) {

// this really shouldn't happen

e.printStackTrace();

}

}

// create the tuples

for (int i = 0; i < tuples.length; i++) {

// empty slot

if (!isSlotUsed(i)) {

for (int j = 0; j < td.getSize(); j++) {

try {

dos.writeByte(0);

} catch (IOException e) {

e.printStackTrace();

}

}

continue;

}

// non-empty slot

for (int j = 0; j < td.numFields(); j++) {

Field f = tuples[i].getField(j);

try {

f.serialize(dos);

} catch (IOException e) {

e.printStackTrace();

}

}

}

// padding

int zerolen = BufferPool.getPageSize() - (header.length + td.getSize() * tuples.length); //- numSlots * td.getSize();

byte[] zeroes = new byte[zerolen];

try {

dos.write(zeroes, 0, zerolen);

} catch (IOException e) {

e.printStackTrace();

}

try {

dos.flush();

} catch (IOException e) {

e.printStackTrace();

}

return baos.toByteArray();

}

/**

* Static method to generate a byte array corresponding to an empty

* HeapPage.

* Used to add new, empty pages to the file. Passing the results of

* this method to the HeapPage constructor will create a HeapPage with

* no valid tuples in it.

*

* @return The returned ByteArray.

*/

public static byte[] createEmptyPageData() {

int len = BufferPool.getPageSize();

return new byte[len]; //all 0

}

/**

* Delete the specified tuple from the page; the corresponding header bit should be updated to reflect

* that it is no longer stored on any page.

*

* @param t The tuple to delete

* @throws DbException if this tuple is not on this page, or tuple slot is

* already empty.

*/

public void deleteTuple(Tuple t) throws DbException {

// TODO: some code goes here

// not necessary for lab1

}

/**

* Adds the specified tuple to the page; the tuple should be updated to reflect

* that it is now stored on this page.

*

* @param t The tuple to add.

* @throws DbException if the page is full (no empty slots) or tupledesc

* is mismatch.

*/

public void insertTuple(Tuple t) throws DbException {

// TODO: some code goes here

// not necessary for lab1

}

/**

* Marks this page as dirty/not dirty and record that transaction

* that did the dirtying

*/

public void markDirty(boolean dirty, TransactionId tid) {

// TODO: some code goes here

// not necessary for lab1

}

/**

* Returns the tid of the transaction that last dirtied this page, or null if the page is not dirty

*/

public TransactionId isDirty() {

// TODO: some code goes here

// Not necessary for lab1

return null;

}

/**

* Returns the number of unused (i.e., empty) slots on this page.

* Computed by counting the slots whose bit in the header bitmap is 0.

*/

public int getNumUnusedSlots() {

// some code goes here

int cnt = 0;

for(int i=0;i<numSlots;++i){

if(!isSlotUsed(i)){

++cnt;

}

}

return cnt;

}

/**

* Returns true if associated slot on this page is filled.

*/

public boolean isSlotUsed(int i) {

// some code goes here

// index of the header byte that holds slot i

int iTh = i / 8;

// position of slot i's bit within that byte (least significant bit first)

int bitTh = i % 8;

int onBit = (header[iTh] >> bitTh) & 1;

return onBit == 1;

}

/**

* Abstraction to fill or clear a slot on this page.

*/

private void markSlotUsed(int i, boolean value) {

// TODO: some code goes here

// not necessary for lab1

}

/**

* @return an iterator over all tuples on this page (calling remove on this iterator throws an UnsupportedOperationException)

* (note that this iterator shouldn't return tuples in empty slots!)

*/

public Iterator<Tuple> iterator() {

// some code goes here

List<Tuple> tupleList = new ArrayList<>();

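// collect only tuples in used slots; empty slots are skipped

for(int i = 0; i < numSlots ;i++){

if(isSlotUsed(i)) tupleList.add(tuples[i]);

}

// wrap the list so that calling remove() on the iterator throws UnsupportedOperationException, as the javadoc requires

return java.util.Collections.unmodifiableList(tupleList).iterator();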

}

}
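The header arithmetic in the HeapPage javadoc is easy to get off by one, so here is a small standalone sketch of it. SlotBitmap is a hypothetical helper, not a SimpleDB class. Its markSlotUsed only anticipates what the lab1 TODO of the same name will eventually have to do, assuming the same least-significant-bit-first layout that isSlotUsed reads.

```java
// Hypothetical helper mirroring the HeapPage header arithmetic; not a SimpleDB class.
final class SlotBitmap {

    // floor(pageSize*8 / (tupleSize*8 + 1)): each tuple costs tupleSize bytes plus one header bit
    static int numSlots(int pageSize, int tupleSize) {
        return (pageSize * 8) / (tupleSize * 8 + 1);
    }

    // ceil(numSlots / 8) computed with integer arithmetic
    static int headerBytes(int numSlots) {
        return (numSlots + 7) / 8;
    }

    // Slot i is bit (i % 8) of header byte (i / 8), least significant bit first
    static boolean isSlotUsed(byte[] header, int i) {
        return ((header[i / 8] >> (i % 8)) & 1) == 1;
    }

    // Sets or clears the bit for slot i
    static void markSlotUsed(byte[] header, int i, boolean used) {
        int mask = 1 << (i % 8);
        if (used) {
            header[i / 8] |= mask;
        } else {
            header[i / 8] &= ~mask;
        }
    }

    // Counts slots whose header bit is 0
    static int countUnused(byte[] header, int numSlots) {
        int cnt = 0;
        for (int i = 0; i < numSlots; i++) {
            if (!isSlotUsed(header, i)) {
                cnt++;
            }
        }
        return cnt;
    }
}
```

With the default 4096-byte page and a table of three INT fields (12 bytes per tuple), this gives 337 slots and a 43-byte header. Right-shifting a negative byte sign-extends it, but & 1 only looks at bit 0, so a slot stored in the high bit of a byte is still read correctly.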

- RecordId Class:

/**

* A RecordId is a reference to a specific tuple on a specific page of a

* specific table.

*/

public class RecordId implements Serializable {

private static final long serialVersionUID = 1L;

/**

* the pageid of the page on which the tuple resides

*/

private PageId pid;

/**

* the tuple number within the page.

*/

private int tupleNo ;

/**

* Creates a new RecordId referring to the specified PageId and tuple

* number.

*

* @param pid the pageid of the page on which the tuple resides

* @param tupleno the tuple number within the page.

*/

public RecordId(PageId pid, int tupleno) {

// TODO: some code goes here

this.pid = pid;

this.tupleNo = tupleno;

}

/**

* @return the tuple number this RecordId references.

*/

public int getTupleNumber() {

// TODO: some code goes here

return tupleNo;

}

/**

* @return the page id this RecordId references.

*/

public PageId getPageId() {

// TODO: some code goes here

return pid;

}

/**

* Two RecordId objects are considered equal if they represent the same

* tuple.

*

* @return True if this and o represent the same tuple

*/

@Override

public boolean equals(Object o) {

// TODO: some code goes here

//throw new UnsupportedOperationException("implement this");

if (!(o instanceof RecordId))

{

return false;

}

RecordId other = (RecordId) o;

if (this.pid.equals(other.getPageId()) && this.getTupleNumber() == other.getTupleNumber()) {

return true;

} else {

return false;

}

}

/**

* You should implement the hashCode() so that two equal RecordId instances

* (with respect to equals()) have the same hashCode().

*

* @return An int that is the same for equal RecordId objects.

*/

@Override

public int hashCode() {

// TODO: some code goes here

// hash every component that equals() compares, so equal RecordIds always share a hash code

return java.util.Objects.hash(pid.getTableId(), pid.getPageNumber(), tupleNo);

}

}

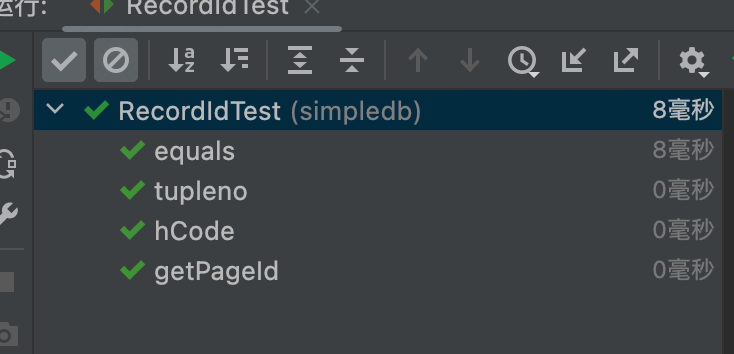

- 测试结果:
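RecordId objects are compared with equals() and, per the javadoc, must hash consistently, which matters as soon as they are used as keys in a HashMap or HashSet. The sketch below uses a hypothetical Rid class that flattens the PageId into (tableId, pageNo) to show the contract with java.util.Objects.hash, which mixes all fields instead of relying on a hand-rolled formula such as a*100 + b*10 + c (that kind of formula collides as soon as a component reaches 10).

```java
import java.util.Objects;

// Hypothetical stand-in for RecordId: (tableId, pageNo) plays the role of the PageId.
final class Rid {
    private final int tableId;
    private final int pageNo;
    private final int tupleNo;

    Rid(int tableId, int pageNo, int tupleNo) {
        this.tableId = tableId;
        this.pageNo = pageNo;
        this.tupleNo = tupleNo;
    }

    @Override
    public boolean equals(Object o) {
        if (this == o) {
            return true;
        }
        if (!(o instanceof Rid)) {
            return false;
        }
        Rid other = (Rid) o;
        return tableId == other.tableId && pageNo == other.pageNo && tupleNo == other.tupleNo;
    }

    @Override
    public int hashCode() {
        // Hash exactly the fields that equals() compares, so equal objects get equal hash codes
        return Objects.hash(tableId, pageNo, tupleNo);
    }
}
```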

2.5、Exercise5:HeapFile

To read a page from disk, you will first need to calculate the correct offset in the file. Hint: you will need random access to the file in order to read and write pages at arbitrary offsets. You should not call BufferPool instance methods when reading a page from disk.

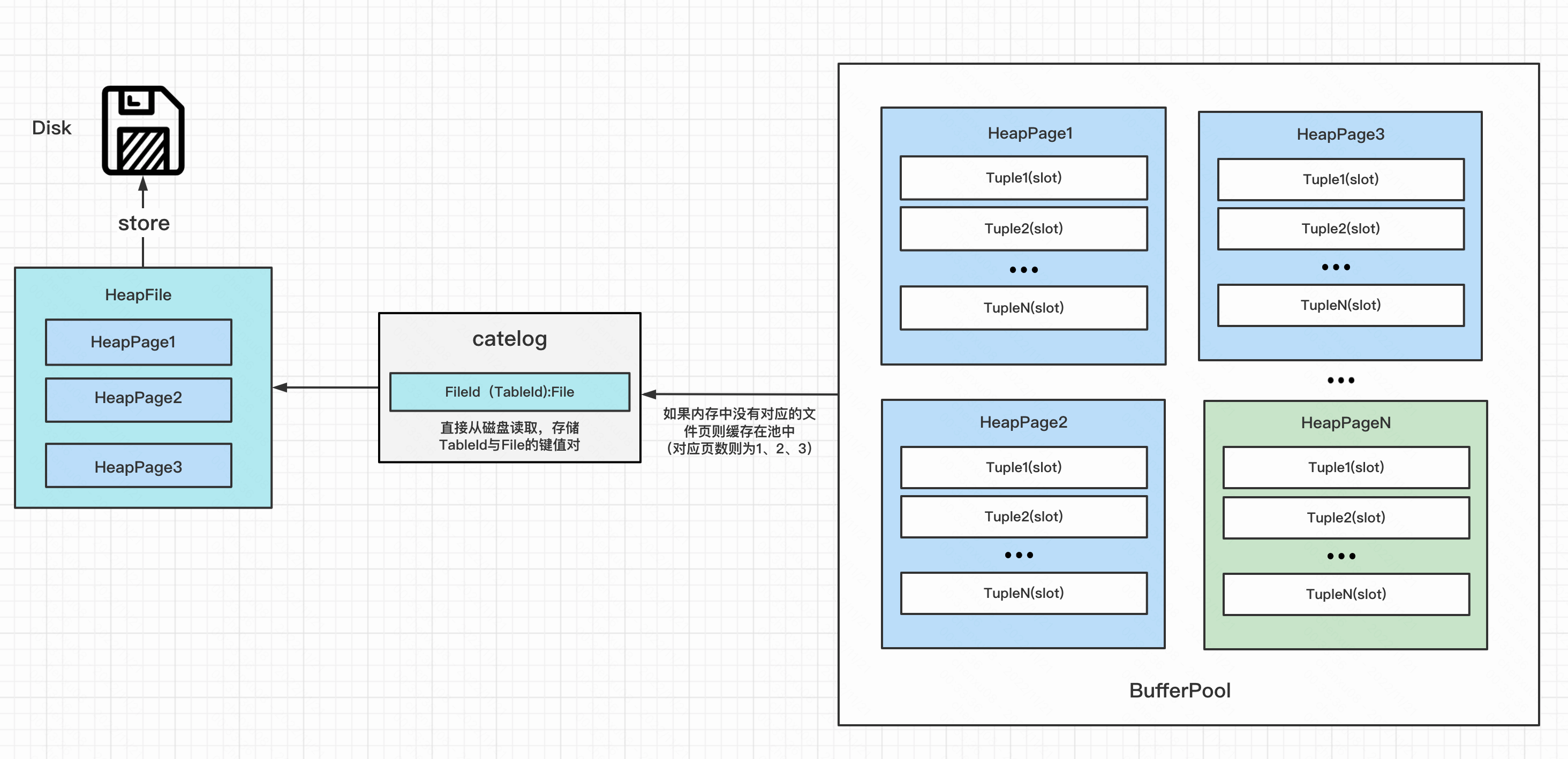

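- In short, a heap file is one implementation of DbFile. It lives on disk and supports random access (any page can be read at an arbitrary offset), so when it is read into the BufferPool its contents end up spread over multiple BufferPool pages.

- Another point: each DbFile corresponds to exactly one table, and the table id kept in the Catalog described earlier is simply this file's id (see the DbFile javadoc). That is also why every file carries a TupleDesc describing the table's schema.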

Here is a diagram I drew to summarize how these pieces relate:

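Back in the lab: because pages are accessed randomly, readPage has to compute the offset of the requested page within the file, i.e. page number × page size.

- One detail to keep in mind is that the BufferPool caches complete pages, so readPage must always read exactly one full page. InnoDB works the same way: it reads and writes data in whole pages (16 KB by default), so even fetching a single small row loads the entire page that contains it.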
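The offset arithmetic can be checked in isolation. The sketch below uses a hypothetical PageIO helper (not part of SimpleDB) that reads one page with RandomAccessFile; it assumes pages are laid out back to back starting at byte 0, as in HeapFile.

```java
import java.io.File;
import java.io.IOException;
import java.io.RandomAccessFile;

// Hypothetical helper illustrating the HeapFile page arithmetic; not part of SimpleDB.
final class PageIO {

    // Page pgNo starts at byte pgNo * pageSize; widen to long before multiplying to avoid int overflow
    static long offsetOf(int pgNo, int pageSize) {
        return (long) pgNo * pageSize;
    }

    // Only complete pages count: integer division drops a trailing partial page
    static int numPages(long fileLength, int pageSize) {
        return (int) (fileLength / pageSize);
    }

    // Reads exactly one page, rejecting page numbers that lie (even partly) past the end of the file
    static byte[] readPage(File f, int pgNo, int pageSize) throws IOException {
        try (RandomAccessFile raf = new RandomAccessFile(f, "r")) {
            long offset = offsetOf(pgNo, pageSize);
            if (pgNo < 0 || offset + pageSize > raf.length()) {
                throw new IllegalArgumentException("page " + pgNo + " is out of range");
            }
            byte[] buf = new byte[pageSize];
            raf.seek(offset);
            raf.readFully(buf); // keeps reading until buf is full, or throws EOFException
            return buf;
        }
    }
}
```

readFully is used instead of read because a single read call is allowed to return fewer bytes than requested.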

/**

* HeapFile is an implementation of a DbFile that stores a collection of tuples

* in no particular order. Tuples are stored on pages, each of which is a fixed

* size, and the file is simply a collection of those pages. HeapFile works

* closely with HeapPage. The format of HeapPages is described in the HeapPage

* constructor.

*

* @author Sam Madden

* @see HeapPage#HeapPage

*/

public class HeapFile implements DbFile {

/**

* f the file that stores the on-disk backing store for this heap file.

*/

private final File f;

/**

* Schema of the records stored in this file

*/

private final TupleDesc td;

/**

* Implemented as an inner class because DbFileIterator is the iterator interface that all SimpleDB DbFiles should implement

*/

private static final class HeapFileIterator implements DbFileIterator {

private final HeapFile heapFile;

private final TransactionId tid;

/**

* Tuple iterator of the page currently being scanned

*/

private Iterator<Tuple> tupleIterator;

private int index;

public HeapFileIterator(HeapFile file,TransactionId tid){

this.heapFile = file;

this.tid = tid;

}

@Override

public void open() throws DbException, TransactionAbortedException {

index = 0;

tupleIterator = getTupleIterator(index);

}

private Iterator<Tuple> getTupleIterator(int pageNumber) throws TransactionAbortedException, DbException{

if(pageNumber >= 0 && pageNumber < heapFile.numPages()){

HeapPageId pid = new HeapPageId(heapFile.getId(),pageNumber);

HeapPage page = (HeapPage)Database.getBufferPool().getPage(tid, pid, Permissions.READ_ONLY);

return page.iterator();

}else{

throw new DbException(String.format("page %d does not exist in heapFile %d!", pageNumber, heapFile.getId()));

}

}

@Override

public boolean hasNext() throws DbException, TransactionAbortedException {

if(tupleIterator == null){

return false;

}

// loop rather than a single if: several pages in a row may hold no tuples, and all of them must be skipped

while(!tupleIterator.hasNext() && index < heapFile.numPages() - 1){

index++;

tupleIterator = getTupleIterator(index);

}

return tupleIterator.hasNext();

}

@Override

public Tuple next() throws DbException, TransactionAbortedException, NoSuchElementException {

// delegate to hasNext() so that next() also advances to the following page once the current one is exhausted

if(!hasNext()){

throw new NoSuchElementException();

}

return tupleIterator.next();

}

@Override

public void rewind() throws DbException, TransactionAbortedException {

close();

open();

}

@Override

public void close() {

tupleIterator = null;

}

}

/**

* Constructs a heap file backed by the specified file.

*

* @param f the file that stores the on-disk backing store for this heap

* file.

*/

public HeapFile(File f, TupleDesc td) {

// some code goes here

this.f = f;

this.td = td;

}

/**

* Returns the File backing this HeapFile on disk.

*

* @return the File backing this HeapFile on disk.

*/

public File getFile() {

// some code goes here

return f;

}

/**

* Returns an ID uniquely identifying this HeapFile. Implementation note:

* you will need to generate this tableid somewhere to ensure that each

* HeapFile has a "unique id," and that you always return the same value for

* a particular HeapFile. We suggest hashing the absolute file name of the

* file underlying the heapfile, i.e. f.getAbsoluteFile().hashCode().

*

* @return an ID uniquely identifying this HeapFile.

*/

public int getId() {

// some code goes here

return f.getAbsoluteFile().hashCode();

}

/**

* Returns the TupleDesc of the table stored in this DbFile.

*

* @return TupleDesc of this DbFile.

*/

public TupleDesc getTupleDesc() {

// some code goes here

return this.td;

}

// see DbFile.java for javadocs

public Page readPage(PageId pid) {

// some code goes here

int tableId = pid.getTableId();

int pgNo = pid.getPageNumber();

int pageSize = BufferPool.getPageSize();

// page pgNo starts at byte pgNo * pageSize; compute in long to avoid int overflow on large files

long offset = (long) pgNo * pageSize;

// try-with-resources closes the file on every path, including the exceptions thrown below

try (RandomAccessFile randomAccessFile = new RandomAccessFile(f, "r")) {

// the file must contain the whole page, i.e. at least (pgNo + 1) * pageSize bytes

if (pgNo < 0 || offset + pageSize > randomAccessFile.length()) {

throw new IllegalArgumentException(String.format("table %d page %d is invalid", tableId, pgNo));

}

// read only this one page: loading the whole table at once could exhaust memory for very large tables

byte[] bytes = new byte[pageSize];

// move the file pointer to the start of the page, then read exactly pageSize bytes

randomAccessFile.seek(offset);

randomAccessFile.readFully(bytes);

return new HeapPage(new HeapPageId(tableId, pgNo), bytes);

} catch (IOException e) {

// covers both read failures (e.g. EOFException from readFully) and HeapPage parse errors

throw new IllegalArgumentException(String.format("table %d page %d could not be read", tableId, pgNo), e);

}

}

// see DbFile.java for javadocs

public void writePage(Page page) throws IOException {

// TODO: some code goes here

// not necessary for lab1

}

/**

* Returns the number of pages in this HeapFile.

*/

public int numPages() {

// some code goes here

// number of complete pages in the file (integer division drops a trailing partial page)

return (int) (getFile().length() / BufferPool.getPageSize());

}

// see DbFile.java for javadocs

public List<Page> insertTuple(TransactionId tid, Tuple t)

throws DbException, IOException, TransactionAbortedException {

// TODO: some code goes here

return null;

// not necessary for lab1

}

// see DbFile.java for javadocs

public List<Page> deleteTuple(TransactionId tid, Tuple t) throws DbException,

TransactionAbortedException {

// TODO: some code goes here

return null;

// not necessary for lab1

}

// see DbFile.java for javadocs

/**

* A HeapFile maps one-to-one to a table, so iterating over it means walking the tuple iterators of its pages, each page fetched through the BufferPool

*/

public DbFileIterator iterator(TransactionId tid) {

//some code goes here

return new HeapFileIterator(this,tid);

}

}

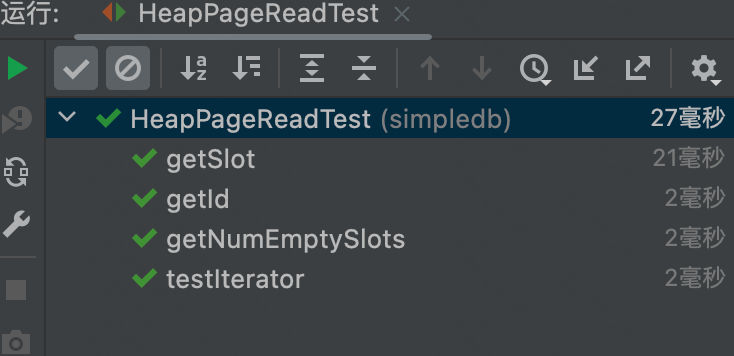

- 测试结果:
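One subtle point of the HeapFile iterator is that a page can contain no tuples at all, so advancing to the next page once is not always enough. Below is a generic sketch of a cross-page iterator that keeps advancing until it finds a non-empty page. The pageLoader function is a hypothetical stand-in for fetching page i through Database.getBufferPool().getPage(...) and calling iterator() on it.

```java
import java.util.Collections;
import java.util.Iterator;
import java.util.NoSuchElementException;
import java.util.function.IntFunction;

// Hypothetical cross-page iterator; pageLoader stands in for "fetch page i via the BufferPool and call iterator()".
final class PagedIterator<T> implements Iterator<T> {
    private final int numPages;
    private final IntFunction<Iterator<T>> pageLoader;
    private int pageNo = 0;
    private Iterator<T> current;

    PagedIterator(int numPages, IntFunction<Iterator<T>> pageLoader) {
        this.numPages = numPages;
        this.pageLoader = pageLoader;
        // An empty file has no page 0, so start from an empty iterator instead of failing
        this.current = numPages > 0 ? pageLoader.apply(0) : Collections.<T>emptyIterator();
    }

    @Override
    public boolean hasNext() {
        // A loop rather than a single if: several empty pages in a row must all be skipped
        while (!current.hasNext() && pageNo < numPages - 1) {
            pageNo++;
            current = pageLoader.apply(pageNo);
        }
        return current.hasNext();
    }

    @Override
    public T next() {
        if (!hasNext()) {
            throw new NoSuchElementException();
        }
        return current.next();
    }
}
```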

2.6、Exercise6:Operators

Operators are responsible for the actual execution of the query plan. They implement the operations of the relational algebra. In SimpleDB, operators are iterator based; each operator implements the DbIterator interface.

Operators are connected together into a plan by passing lower-level operators into the constructors of higher-level operators, i.e., by “chaining them together.” Special access method operators at the leaves of the plan are responsible for reading data from the disk (and hence do not have any operators below them).

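Operators describe how the back end actually executes a query, i.e. the query plan. What this exercise implements (SeqScan) is essentially an entry point for scanning data: one more iterator layer exposed on top of the DbFileIterator.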
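To make the "chaining" idea concrete, here is a minimal pull-based (iterator, or Volcano-style) sketch. The Op interface, ListScan and FilterOp are hypothetical simplifications of OpIterator, SeqScan and a filter operator: the leaf produces rows, and the parent receives its child through the constructor and pulls rows from it on demand.

```java
import java.util.Iterator;
import java.util.List;
import java.util.NoSuchElementException;
import java.util.function.Predicate;

// Hypothetical, simplified version of the OpIterator idea: open / hasNext / next / close.
interface Op<T> {
    void open();
    boolean hasNext();
    T next();
    void close();
}

// Leaf operator: reads rows from an in-memory list (playing the role of SeqScan over a table).
final class ListScan<T> implements Op<T> {
    private final List<T> rows;
    private Iterator<T> it;

    ListScan(List<T> rows) {
        this.rows = rows;
    }

    public void open() { it = rows.iterator(); }

    public boolean hasNext() { return it != null && it.hasNext(); }

    public T next() {
        if (!hasNext()) {
            throw new NoSuchElementException();
        }
        return it.next();
    }

    public void close() { it = null; }
}

// Parent operator: gets its child through the constructor and pulls rows from it on demand.
final class FilterOp<T> implements Op<T> {
    private final Op<T> child;
    private final Predicate<T> pred;
    private T lookahead; // next matching row already pulled from the child (rows are assumed non-null)

    FilterOp(Op<T> child, Predicate<T> pred) {
        this.child = child;
        this.pred = pred;
    }

    public void open() {
        child.open();
        lookahead = null;
    }

    public boolean hasNext() {
        while (lookahead == null && child.hasNext()) {
            T row = child.next();
            if (pred.test(row)) {
                lookahead = row;
            }
        }
        return lookahead != null;
    }

    public T next() {
        if (!hasNext()) {
            throw new NoSuchElementException();
        }
        T row = lookahead;
        lookahead = null;
        return row;
    }

    public void close() {
        child.close();
        lookahead = null;
    }
}
```

A plan is built bottom-up, e.g. new FilterOp<Integer>(new ListScan<Integer>(rows), x -> x % 2 == 0); calling open() on the root opens the whole chain.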

- SeqScan Class:

/**

* SeqScan is an implementation of a sequential scan access method that reads

* each tuple of a table in no particular order (e.g., as they are laid out on

* disk).

*/

public class SeqScan implements OpIterator {

private static final long serialVersionUID = 1L;

/**

* The transaction this scan runs as part of

*/

private final TransactionId tid;

/**

* id of the table being scanned

*/

private int tableId;

/**

* alias of the table, used as the field-name prefix

*/

private String tableAlias;

/**

* underlying iterator that actually reads the tuples

*/

private DbFileIterator dbFileIterator;

/**

* Creates a sequential scan over the specified table as a part of the

* specified transaction.

*

* @param tid The transaction this scan is running as a part of.

* @param tableid the table to scan.

* @param tableAlias the alias of this table (needed by the parser); the returned

* tupleDesc should have fields with name tableAlias.fieldName

* (note: this class is not responsible for handling a case where

* tableAlias or fieldName are null. It shouldn't crash if they

* are, but the resulting name can be null.fieldName,

* tableAlias.null, or null.null).

*/

public SeqScan(TransactionId tid, int tableid, String tableAlias) {

// some code goes here

this.tid = tid;

this.tableId = tableid;

this.tableAlias = tableAlias;

}

/**

* @return return the table name of the table the operator scans. This should

* be the actual name of the table in the catalog of the database

*/

public String getTableName() {

return Database.getCatalog().getTableName(tableId);

}

/**

* @return Return the alias of the table this operator scans.

*/

public String getAlias() {

// some code goes here

return this.tableAlias;

}

/**

* Reset the tableid, and tableAlias of this operator.

*

* @param tableid the table to scan.

* @param tableAlias the alias of this table (needed by the parser); the returned

* tupleDesc should have fields with name tableAlias.fieldName

* (note: this class is not responsible for handling a case where

* tableAlias or fieldName are null. It shouldn't crash if they

* are, but the resulting name can be null.fieldName,

* tableAlias.null, or null.null).

*/

public void reset(int tableid, String tableAlias) {

// some code goes here

this.tableId = tableid;

this.tableAlias = tableAlias;

}

public SeqScan(TransactionId tid, int tableId) {

this(tid, tableId, Database.getCatalog().getTableName(tableId));

}

public void open() throws DbException, TransactionAbortedException {

// some code goes here

dbFileIterator = Database.getCatalog().getDatabaseFile(tableId).iterator(tid);

dbFileIterator.open();

}

/**

* Returns the TupleDesc with field names from the underlying HeapFile,

* prefixed with the tableAlias string from the constructor. This prefix

* becomes useful when joining tables containing a field(s) with the same

* name. The alias and name should be separated with a "." character

* (e.g., "alias.fieldName").

*

* @return the TupleDesc with field names from the underlying HeapFile,

* prefixed with the tableAlias string from the constructor.

*/

public TupleDesc getTupleDesc() {

// some code goes here

TupleDesc oldDesc = Database.getCatalog().getTupleDesc(tableId);

String[] names = new String[oldDesc.numFields()];

Type[] types = new Type[oldDesc.numFields()];

for(int i = 0; i < oldDesc.numFields(); i++) {

names[i] = this.getAlias() + "." + oldDesc.getFieldName(i);

types[i] = oldDesc.getFieldType(i);

}

return new TupleDesc(types, names);

}

public boolean hasNext() throws TransactionAbortedException, DbException {

// some code goes here

if(dbFileIterator == null){

return false;

}

return dbFileIterator.hasNext();

}

public Tuple next() throws NoSuchElementException,

TransactionAbortedException, DbException {

// some code goes here

if (dbFileIterator == null){

throw new NoSuchElementException("The dbFileIterator is null");

}

Tuple t = dbFileIterator.next();

if(t == null){

throw new NoSuchElementException("The next tuple is null");

}

return t;

}

public void close() {

// some code goes here

dbFileIterator = null;

}

public void rewind() throws DbException, NoSuchElementException,

TransactionAbortedException {

// some code goes here

if (dbFileIterator == null) {

throw new DbException("rewind() called before open()");

}

dbFileIterator.rewind();

}

}

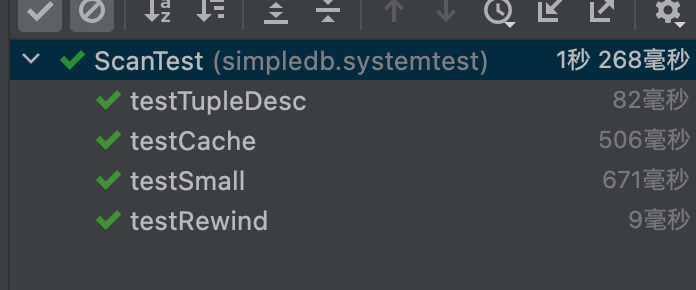

- Test结果:
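SeqScan.getTupleDesc only renames fields; the alias prefix is what keeps two columns with the same name apart, for example in a self-join where the same table is scanned twice under aliases a and b. A hypothetical helper showing the naming rule, including the null cases the javadoc mentions:

```java
// Hypothetical helper reproducing the "alias.fieldName" rule from SeqScan.getTupleDesc.
final class AliasNames {

    // String concatenation turns a null alias or field name into the text "null" instead of throwing
    static String[] prefixed(String alias, String[] fieldNames) {
        String[] out = new String[fieldNames.length];
        for (int i = 0; i < fieldNames.length; i++) {
            out[i] = alias + "." + fieldNames[i];
        }
        return out;
    }
}
```

This is why the javadoc's "null.fieldName" and "tableAlias.null" cases need no special handling: plain concatenation already produces them without a NullPointerException.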

2.7、A simple query

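The "A simple query" exercise ties the previous components together. First create a text file named some_data_file.txt, then use the convert command in SimpleDb.class to turn it into a binary .dat file. Finally, run the code provided by the lab to walk through the whole flow and read the .dat file back.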
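For reference, the binary .dat file is just a sequence of HeapPage-formatted pages. The sketch below writes all-INT rows in the layout that the HeapPage constructor parses (header bitmap, then fixed-size tuple slots, then zero padding). HeapFileWriterSketch is a hypothetical class, not SimpleDB's converter, and it assumes INT fields are serialized as 4-byte big-endian integers via DataOutputStream.writeInt.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.util.List;

// Hypothetical sketch of the page layout for a table whose fields are all INTs; not SimpleDB's converter.
final class HeapFileWriterSketch {

    // Each row must contain exactly numFields ints; returns the bytes of all pages back to back
    static byte[] toPages(List<int[]> rows, int numFields, int pageSize) throws IOException {
        int tupleSize = numFields * 4;                    // assumption: an INT field takes 4 bytes
        int slots = (pageSize * 8) / (tupleSize * 8 + 1); // tuples per page
        int headerBytes = (slots + 7) / 8;                // ceil(slots / 8)
        ByteArrayOutputStream baos = new ByteArrayOutputStream();
        DataOutputStream dos = new DataOutputStream(baos);
        for (int start = 0; start < rows.size(); start += slots) {
            int end = Math.min(start + slots, rows.size());
            int used = end - start;
            // Header bitmap: slot i is bit (i % 8) of byte (i / 8), least significant bit first
            byte[] header = new byte[headerBytes];
            for (int i = 0; i < used; i++) {
                header[i / 8] |= 1 << (i % 8);
            }
            dos.write(header);
            // Used slots come first, each field written as a big-endian int
            for (int r = start; r < end; r++) {
                for (int v : rows.get(r)) {
                    dos.writeInt(v);
                }
            }
            // Zeroes for the empty slots plus the trailing padding fill the page exactly
            dos.write(new byte[pageSize - headerBytes - used * tupleSize]);
        }
        dos.flush();
        return baos.toByteArray();
    }
}
```

In this sketch, with 4096-byte pages and three INT columns, each page holds up to 337 tuples behind a 43-byte header, so 400 rows occupy two pages.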

package simpledb;

import java.io.*;

public class test {

public static void main(String[] argv) {

// construct a 3-column table schema

Type types[] = new Type[]{

Type.INT_TYPE, Type.INT_TYPE, Type.INT_TYPE };

String names[] = new String[]{

"field0", "field1", "field2" };

TupleDesc descriptor = new TupleDesc(types, names);

// create the table, associate it with some_data_file.dat

// and tell the catalog about the schema of this table.

HeapFile table1 = new HeapFile(new File("some_data_file.dat"), descriptor);

Database.getCatalog().addTable(table1, "test");

// construct the query: we use a simple SeqScan, which spoonfeeds

// tuples via its iterator.

TransactionId tid = new TransactionId();

SeqScan f = new SeqScan(tid, table1.getId());

try {

// and run it

f.open();

while (f.hasNext()) {

Tuple tup = f.next();

System.out.println(tup);

}

f.close();

Database.getBufferPool().transactionComplete(tid);

} catch (Exception e) {

System.out.println ("Exception : " + e);

}

}

}

- Test results:

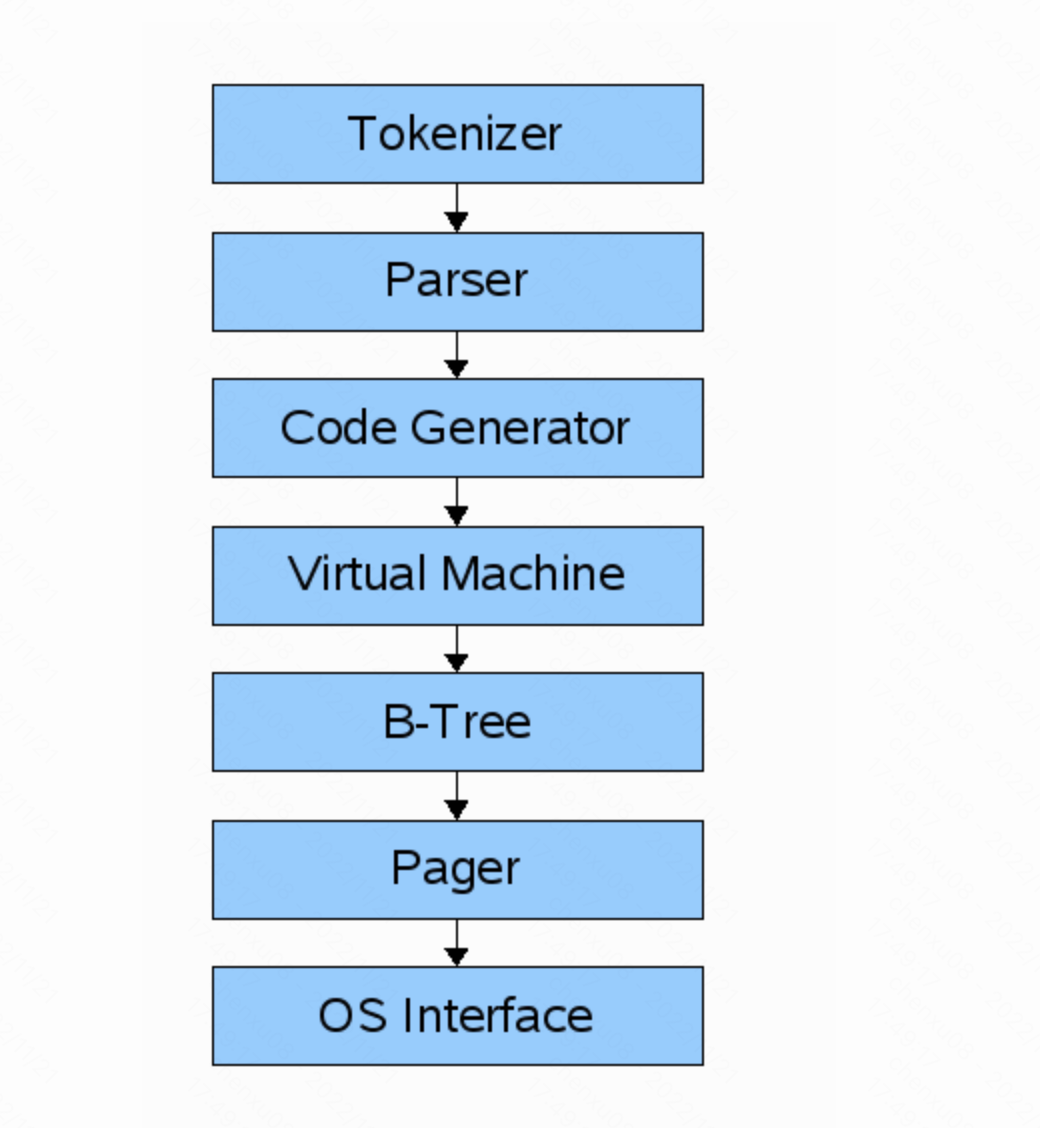

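As an aside, here is how a query normally flows through SQLite:

- As shown in the figure, the Tokenizer, Parser, and Code Generator make up the front end: together they turn an SQL query into bytecode that SQLite's virtual machine executes (essentially a compiled program that runs against the database). Lab 1 skips the front end as far as possible and concentrates on back-end storage.

The back-end components are briefly described below (quoted from SQLite Database System: Design and Implementation):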

- The virtual machine takes bytecode generated by the front-end as instructions. It can then perform operations on one or more tables or indexes, each of which is stored in a data structure called a B-tree. The VM is essentially a big switch statement on the type of bytecode instruction.

- Each B-tree consists of many nodes. Each node is one page in length. The B-tree can retrieve a page from disk or save it back to disk by issuing commands to the pager.

- The pager receives commands to read or write pages of data. It is responsible for reading/writing at appropriate offsets in the database file. It also keeps a cache of recently-accessed pages in memory, and determines when those pages need to be written back to disk.

- The os interface is the layer that differs depending on which operating system sqlite was compiled for.
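The pager's "cache of recently-accessed pages" does conceptually the same job as SimpleDB's BufferPool. Here is a minimal LRU sketch built on java.util.LinkedHashMap in access order. It is an illustration only (SQLite's actual pager and SimpleDB's eviction policy may differ), and it omits writing dirty pages back to disk before eviction.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical LRU page cache for illustration; it does not write dirty pages back before evicting them.
final class LruPageCache<K, V> extends LinkedHashMap<K, V> {
    private final int capacity;

    LruPageCache(int capacity) {
        super(16, 0.75f, true); // accessOrder = true: get() and put() move the entry to the most-recent end
        this.capacity = capacity;
    }

    // Invoked by put(): returning true evicts the least recently accessed entry
    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > capacity;
    }
}
```

Access order is the key design choice: with the default insertion order, this would become a FIFO cache that evicts hot pages simply because they were loaded early.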

三、总结

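Lab 1 felt relatively easy, probably because I'm more familiar with Java. For most of the tricky points, rereading the javadoc comments and the lab introduction, or digging straight into the test sources, is enough to get the tests passing. I won't go through the specific bugs I ran into (honestly, I wrote this on and off over a week and had forgotten most of them by the time I put this summary together, orz...). I'll keep working on the later labs in my spare time during my internship.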