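1. Introduction

Current 3D point cloud object detection falls into two main families: voxel-based and point-based. Many high-performance 3D detectors are point-based, on the view that point features retain more of an object's structural information and so yield more accurate box predictions. However, the irregular data structure of point-based methods also makes them computationally expensive. Voxel-based methods, by contrast, rasterize the data into a regular grid, which is better suited to feature extraction.

The authors of this paper take a different view: precise raw point information is not essential for a high-performance 3D point cloud detector, and coarse voxel granularity can also deliver good detection accuracy.

Based on this view, the authors designed Voxel-RCNN, a simple yet effective high-performance voxel-based 3D detector. To make full use of the voxel features, they designed voxel ROI pooling, which extracts features from the voxels and passes them to the second-stage refinement network. An overview of the network structure is shown in the figure below:

Note: the overall structure can be seen as SECOND plus the second-stage refinement that I marked with a red box, and it is also very similar to PV-RCNN. This post does not re-implement every module and function of the network from scratch. If you are not familiar with PV-RCNN or SECOND, I suggest reading up on them first, or see my earlier blog posts.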

Voxel-RCNN paper: https://arxiv.org/pdf/2012.15712

Voxel-RCNN official code: GitHub - djiajunustc/Voxel-R-CNN

OpenPCDet repository: GitHub - open-mmlab/OpenPCDet: OpenPCDet Toolbox for LiDAR-based 3D Object Detection.

My annotated code repository:

GitHub - Nathansong/OpenPCDdet-annotated: annotated walkthrough of the OpenPCDet model code

Class flow diagram of Voxel-RCNN (Towards High Performance Voxel-based 3D Object Detection) in OpenPCDet:

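The 6 modules of Voxel-RCNN

1. MeanVFE

2. VoxelBackBone8x

3. HeightCompression

4. BaseBEVBackbone

5. AnchorHeadSingle

6. VoxelRCNNHead

Note 1: the parts shown in black are identical to SECOND.

Note 2: item 6 is Voxel-RCNN's second-stage refinement. The code walkthrough starts directly from there, since everything before it is the same as in SECOND.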

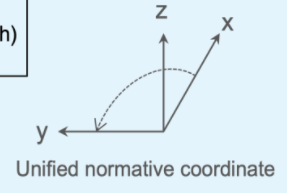

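Unified canonical coordinate system in PCDet: (X forward, Y left, Z up)

2. Design considerations of Voxel-RCNN

Voxel-RCNN was designed to match the accuracy of point-based methods while running as fast as voxel-based ones. The authors therefore stay with a voxel-based approach and try to modify it to raise its accuracy. To that end, the paper analyzes two classic networks, PV-RCNN and SECOND.

Note: PV-RCNN also uses the SECOND architecture for first-stage feature extraction, but it additionally encodes the scene with keypoints that aggregate 3D convolution features at different scales. In the refinement stage, the corresponding keypoint features are fused into the proposals, which improves the network's performance.

Comparing the results in the figure above, directly adding a second-stage box refinement to SECOND yields only a very limited accuracy gain, which indicates that the expressive power of BEV features is limited. The main reason is that existing voxel-based methods stack the 3D-convolved voxel features along the Z axis and then detect in the BEV view, without restoring the original 3D structure of the features anywhere along the way.

Following this view, the second-stage refinement fuses 3D structural information from the 3D convolution layers. The paper implements this mainly with voxel ROI pooling, which extracts the features of neighbouring voxels within each proposal, and designs a local feature aggregation module to further speed up computation. This is motivated by the authors' measurement of the runtime of each PV-RCNN module, with the results below:

As can be seen, encoding keypoints from voxels at different scales is very time-consuming.

To summarize:

1. 3D structural information is very important for a 3D detector; BEV features alone are of limited use for precise 3D bounding box localization.

2. Generating encoded features directly in the point-voxel manner is very time-consuming and hurts the detector's efficiency.

3. Voxel ROI pooling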

3.1 Voxel Volumes as Points

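To aggregate spatial information directly from the 3D feature volumes produced by the 3D convolutions, each 3D feature layer is treated as a set of non-empty voxel centre points $\{v_i = (x_i, y_i, z_i)\}_{i=1}^{N}$ together with the corresponding feature vectors $\{\phi_i\}_{i=1}^{N}$. The 3D centre coordinate of each voxel is computed from the 3D convolution indices, the voxel size (KITTI: [0.05, 0.05, 0.1], Waymo: [0.1, 0.1, 0.15]), and the selected point cloud range.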
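The centre computation described above can be sketched in a few lines of plain Python. This is only an illustration: `voxel_center` is a hypothetical helper (OpenPCDet's own tensor version is `common_utils.get_voxel_centers`), the index is assumed to arrive in (z, y, x) order, and the KITTI point cloud range used in the example is an assumed typical value, not something stated in this post.

```python
def voxel_center(index_zyx, voxel_size, pc_range, stride=1):
    """Return the (x, y, z) centre, in metres, of a single voxel.

    index_zyx : integer voxel index in (z, y, x) order (assumed layout)
    voxel_size: input voxel size (dx, dy, dz) in metres
    pc_range  : (x_min, y_min, z_min, x_max, y_max, z_max)
    stride    : downsampling factor of the 3D conv layer the index belongs to
    """
    z, y, x = index_zyx
    index_xyz = (x, y, z)
    # A voxel at this layer covers `stride` input voxels along each axis,
    # and its centre sits half a (downsampled) voxel past its lower corner.
    return tuple(
        (i + 0.5) * size * stride + lo
        for i, size, lo in zip(index_xyz, voxel_size, pc_range[:3])
    )


KITTI_VOXEL_SIZE = (0.05, 0.05, 0.1)
KITTI_RANGE = (0.0, -40.0, -3.0, 70.4, 40.0, 1.0)  # assumed range

# First voxel of the input grid, and voxel x=1 of a stride-8 layer.
first_voxel = voxel_center((0, 0, 0), KITTI_VOXEL_SIZE, KITTI_RANGE)
coarse_voxel = voxel_center((0, 0, 1), KITTI_VOXEL_SIZE, KITTI_RANGE, stride=8)
```

The same index therefore maps to a different metric position at each scale, which is why the pooling code passes the stride of each feature layer alongside its indices.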

3.2 Voxel query

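Once the coordinates and features of the non-empty voxels are available, voxel query is used to find the surrounding feature information.

The original ball query operates on unorganized data, whereas voxels are regularly arranged. The authors therefore use the Manhattan distance to determine which voxels neighbour a query point.

The Manhattan distance between two voxels $\alpha = (i_\alpha, j_\alpha, k_\alpha)$ and $\beta = (i_\beta, j_\beta, k_\beta)$ is:

$$D_m(\alpha, \beta) = |i_\alpha - i_\beta| + |j_\alpha - j_\beta| + |k_\alpha - k_\beta|$$

Each voxel query samples at most K voxels, with K = 16. The time complexity of voxel query is O(K) (K is the number of neighbouring voxels), whereas that of ball query is O(N) (N is the number of non-empty voxels).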
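A minimal sketch of the idea, assuming the non-empty voxels are stored in a dictionary keyed by integer voxel index. The function names and the `max_dist` threshold are hypothetical, and the sketch follows the description above rather than the CUDA kernel in OpenPCDet. Because only a fixed neighbourhood around the query voxel is inspected, the cost does not grow with the total number of non-empty voxels.

```python
from itertools import product


def manhattan(a, b):
    """Manhattan distance between two integer voxel indices."""
    return sum(abs(x - y) for x, y in zip(a, b))


def voxel_query(query, occupied, max_dist=2, k=16):
    """Collect up to k non-empty voxels within max_dist of the query voxel.

    query   : integer index (i, j, k) of the voxel containing the query point
    occupied: dict mapping non-empty voxel index -> row id of its feature
    """
    neighbours = []
    offsets = range(-max_dist, max_dist + 1)
    for delta in product(offsets, repeat=3):
        # Skip corners of the cube that lie outside the Manhattan ball.
        if sum(abs(c) for c in delta) > max_dist:
            continue
        candidate = tuple(q + c for q, c in zip(query, delta))
        row = occupied.get(candidate)  # constant-time lookup, None if empty
        if row is not None:
            neighbours.append(row)
            if len(neighbours) == k:
                break
    return neighbours


occupied = {(5, 5, 5): 0, (5, 5, 6): 1, (7, 5, 5): 2, (6, 6, 6): 3, (9, 9, 9): 4}
found = voxel_query((5, 5, 5), occupied, max_dist=2)
```

In this toy example the voxel at (6, 6, 6) is excluded because its Manhattan distance to the query is 3, even though it lies inside the surrounding cube.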

3.3 Voxel ROI Pooling

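Each ROI is evenly divided into 6x6x6 sub-blocks, and the centre of each sub-block is taken as a grid point. For each grid point, the voxel that contains it is found and used as the query point for a voxel query, which yields the set of neighbouring voxel features for that grid point. A simple PointNet module then aggregates the features of the neighbouring voxels:

$$\eta_i = \max_{k=1,2,\dots,K} \left\{ \Psi\left(\left[v_i^k - g_i;\ \phi_i^k\right]\right) \right\}$$

Here $v_i^k - g_i$ is the relative coordinate of the neighbouring voxel with respect to the grid point $g_i$, $\phi_i^k$ is the voxel feature of $v_i^k$, and $\Psi(\cdot)$ denotes an MLP. $\max(\cdot)$ is taken along the channel dimension and gives the aggregated feature $\eta_i$ of the grid point.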

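Voxel ROI pooling aggregates features from the last three 3D convolution stages, which in PCDet are ['x_conv2', 'x_conv3', 'x_conv4'], with downsampling factors of 2, 4 and 8 respectively.

Note: if you already know PV-RCNN's Keypoint-to-grid RoI Feature Abstraction, the operation here simply changes where each grid point gathers its information: instead of the encoded keypoints, it groups the non-empty voxels within a range directly from each 3D convolution layer. The only other difference is that the group operation switches from ball query to voxel query (from radius-based grouping to Manhattan-distance-based grouping).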
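The aggregation for a single grid point can be sketched as follows. This is a toy version under stated assumptions: the MLP is reduced to one bias-free linear layer followed by ReLU, and `linear` and `aggregate_grid_point` are hypothetical names, not OpenPCDet functions.

```python
def linear(weights, vector):
    """Bias-free linear layer; `weights` is a list of output rows."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def aggregate_grid_point(grid_xyz, neighbour_xyz, neighbour_feats, weights):
    """Channel-wise max over neighbours of ReLU(W [v - g ; phi])."""
    transformed = []
    for v, phi in zip(neighbour_xyz, neighbour_feats):
        # Relative position of the neighbouring voxel w.r.t. the grid point.
        rel = [vc - gc for vc, gc in zip(v, grid_xyz)]
        hidden = linear(weights, rel + list(phi))
        transformed.append([max(0.0, h) for h in hidden])
    # For every output channel keep the largest response among neighbours.
    return [max(channel) for channel in zip(*transformed)]


# Two neighbours with 1-channel features; W maps (3 + 1) inputs to 2 outputs.
weights = [
    [1.0, 0.0, 0.0, 0.0],  # output 0 copies the relative x offset
    [0.0, 0.0, 0.0, 1.0],  # output 1 copies the voxel feature
]
eta = aggregate_grid_point(
    grid_xyz=(0.0, 0.0, 0.0),
    neighbour_xyz=[(1.0, 0.0, 0.0), (0.0, 2.0, 0.0)],
    neighbour_feats=[[1.0], [3.0]],
    weights=weights,
)
```

Each output channel of `eta` may come from a different neighbour, which is what makes the max pooling order-invariant over the grouped voxels.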

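3.4 Accelerated Local Aggregation

In the original PointNet, the point features and point coordinates are concatenated and then passed through an FC layer. The accelerated implementation instead applies separate FC layers to the coordinates and to the features, adds the two resulting feature sets element-wise, and then takes the max along the channel dimension to obtain the aggregated feature of the grid point.

The procedure is shown in the figure below:

Here M is the number of grid points, M = r * G^3, where r is the number of ROIs and G is the grid size of each ROI along each of the three axes.

K is the number of voxels sampled for each grid point, called nsample in the code.

N is the number of non-empty voxels, C is the number of voxel feature channels, and C' is the number of output channels; C+3 denotes the voxel feature plus the relative offsets along the 3 axes.

The cost of the original operation is O(M × K × (C+3) × C').

The cost of the optimized operation is O(N × C × C' + M × K × 3 × C').

Because M × K is an order of magnitude larger than N, the accelerated version is faster and more efficient.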
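The split works because an FC layer is linear: applying it to the concatenation [relative xyz; feature] gives the same result as applying its position columns and its feature columns separately and adding the outputs. The sketch below checks that on a toy example and evaluates both cost formulas. All helper names are hypothetical, and N = 20000 is an assumed, illustrative number of non-empty voxels; normalization layers are left out.

```python
def matvec(weights, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def split_weights(weights, n_pos=3):
    """Split W of shape C' x (3 + C) into its position and feature parts."""
    w_pos = [row[:n_pos] for row in weights]
    w_feat = [row[n_pos:] for row in weights]
    return w_pos, w_feat


def flops_original(m, k, c, c_out):
    """FC applied to every grouped (C + 3)-dimensional vector."""
    return m * k * (c + 3) * c_out


def flops_accelerated(n, m, k, c, c_out):
    """Feature FC once per voxel, position FC per grouped voxel."""
    return n * c * c_out + m * k * 3 * c_out


# Linearity check with C = 2 feature channels and C' = 2 output channels.
weights = [
    [1.0, 2.0, 3.0, 4.0, 5.0],
    [0.5, -1.0, 0.0, 2.0, 1.0],
]
rel_xyz = [0.1, -0.2, 0.3]
feat = [1.0, 2.0]
w_pos, w_feat = split_weights(weights)
joint = matvec(weights, rel_xyz + feat)
separate = [p + f for p, f in zip(matvec(w_pos, rel_xyz), matvec(w_feat, feat))]

# Illustrative sizes: 128 ROIs with a 6x6x6 grid, K = 16, C = C' = 32.
M, K, C, C_OUT = 128 * 6 ** 3, 16, 32, 32
N = 20000  # assumed number of non-empty voxels
cost_original = flops_original(M, K, C, C_OUT)
cost_accelerated = flops_accelerated(N, M, K, C, C_OUT)
```

With these sizes the feature transform runs on 20000 voxels instead of 442368 grouped voxels, so only the cheap 3-channel position transform remains inside the M × K loop.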

Code: pcdet/models/roi_heads/voxelrcnn_head.py

import torch
import torch.nn as nn
from ...ops.pointnet2.pointnet2_stack import voxel_pool_modules as voxelpool_stack_modules
from ...utils import common_utils
from .roi_head_template import RoIHeadTemplate


class VoxelRCNNHead(RoIHeadTemplate):
    def __init__(self, backbone_channels, model_cfg, point_cloud_range, voxel_size, num_class=1, **kwargs):
        super().__init__(num_class=num_class, model_cfg=model_cfg)
        self.model_cfg = model_cfg
        self.pool_cfg = model_cfg.ROI_GRID_POOL
        LAYER_cfg = self.pool_cfg.POOL_LAYERS
        self.point_cloud_range = point_cloud_range
        self.voxel_size = voxel_size

        # one voxel pooling layer per 3D feature source (x_conv2, x_conv3, x_conv4)
        c_out = 0
        self.roi_grid_pool_layers = nn.ModuleList()
        for src_name in self.pool_cfg.FEATURES_SOURCE:
            mlps = LAYER_cfg[src_name].MLPS
            for k in range(len(mlps)):
                # prepend the input channel count of this feature source
                mlps[k] = [backbone_channels[src_name]] + mlps[k]
            pool_layer = voxelpool_stack_modules.NeighborVoxelSAModuleMSG(
                query_ranges=LAYER_cfg[src_name].QUERY_RANGES,
                nsamples=LAYER_cfg[src_name].NSAMPLE,
                radii=LAYER_cfg[src_name].POOL_RADIUS,
                mlps=mlps,
                pool_method=LAYER_cfg[src_name].POOL_METHOD,
            )

            self.roi_grid_pool_layers.append(pool_layer)

            # accumulate the output channels of all pooling layers
            c_out += sum([x[-1] for x in mlps])

        GRID_SIZE = self.model_cfg.ROI_GRID_POOL.GRID_SIZE
        # c_out = sum([x[-1] for x in mlps])
        # flattened feature size of one RoI: G^3 grid points x c_out channels
        pre_channel = GRID_SIZE * GRID_SIZE * GRID_SIZE * c_out

        # shared FC layers
        shared_fc_list = []
        for k in range(0, self.model_cfg.SHARED_FC.__len__()):
            shared_fc_list.extend([
                nn.Linear(pre_channel, self.model_cfg.SHARED_FC[k], bias=False),
                nn.BatchNorm1d(self.model_cfg.SHARED_FC[k]),
                nn.ReLU(inplace=True)
            ])
            pre_channel = self.model_cfg.SHARED_FC[k]

            if k != self.model_cfg.SHARED_FC.__len__() - 1 and self.model_cfg.DP_RATIO > 0:
                shared_fc_list.append(nn.Dropout(self.model_cfg.DP_RATIO))
        self.shared_fc_layer = nn.Sequential(*shared_fc_list)

        # classification (confidence) branch
        cls_fc_list = []
        for k in range(0, self.model_cfg.CLS_FC.__len__()):
            cls_fc_list.extend([
                nn.Linear(pre_channel, self.model_cfg.CLS_FC[k], bias=False),
                nn.BatchNorm1d(self.model_cfg.CLS_FC[k]),
                nn.ReLU()
            ])
            pre_channel = self.model_cfg.CLS_FC[k]

            if k != self.model_cfg.CLS_FC.__len__() - 1 and self.model_cfg.DP_RATIO > 0:
                cls_fc_list.append(nn.Dropout(self.model_cfg.DP_RATIO))
        self.cls_fc_layers = nn.Sequential(*cls_fc_list)
        self.cls_pred_layer = nn.Linear(pre_channel, self.num_class, bias=True)

        # box regression branch
        reg_fc_list = []
        for k in range(0, self.model_cfg.REG_FC.__len__()):
            reg_fc_list.extend([
                nn.Linear(pre_channel, self.model_cfg.REG_FC[k], bias=False),
                nn.BatchNorm1d(self.model_cfg.REG_FC[k]),
                nn.ReLU()
            ])
            pre_channel = self.model_cfg.REG_FC[k]

            if k != self.model_cfg.REG_FC.__len__() - 1 and self.model_cfg.DP_RATIO > 0:
                reg_fc_list.append(nn.Dropout(self.model_cfg.DP_RATIO))
        self.reg_fc_layers = nn.Sequential(*reg_fc_list)
        self.reg_pred_layer = nn.Linear(pre_channel, self.box_coder.code_size * self.num_class, bias=True)

        self.init_weights()

    def init_weights(self):
        init_func = nn.init.xavier_normal_
        for module_list in [self.shared_fc_layer, self.cls_fc_layers, self.reg_fc_layers]:
            for m in module_list.modules():
                if isinstance(m, nn.Linear):
                    init_func(m.weight)
                    if m.bias is not None:
                        nn.init.constant_(m.bias, 0)

        nn.init.normal_(self.cls_pred_layer.weight, 0, 0.01)
        nn.init.constant_(self.cls_pred_layer.bias, 0)
        nn.init.normal_(self.reg_pred_layer.weight, mean=0, std=0.001)
        nn.init.constant_(self.reg_pred_layer.bias, 0)

    # def _init_weights(self):
    #     init_func = nn.init.xavier_normal_
    #     for m in self.modules():
    #         if isinstance(m, nn.Conv2d) or isinstance(m, nn.Conv1d) or isinstance(m, nn.Linear):
    #             init_func(m.weight)
    #             if m.bias is not None:
    #                 nn.init.constant_(m.bias, 0)
    #     nn.init.normal_(self.reg_layers[-1].weight, mean=0, std=0.001)

    def roi_grid_pool(self, batch_dict):
        """
        Args:
            batch_dict:
                batch_size:
                rois: (B, num_rois, 7 + C)
                point_coords: (num_points, 4)  [bs_idx, x, y, z]
                point_features: (num_points, C)
                point_cls_scores: (N1 + N2 + N3 + ..., 1)
                point_part_offset: (N1 + N2 + N3 + ..., 3)
        Returns:

        """
        # shape (batch, 128, 7)
        rois = batch_dict['rois']
        batch_size = batch_dict['batch_size']
        with_vf_transform = batch_dict.get('with_voxel_feature_transform', False)  # False

        # roi_grid_xyz: grid points in the LiDAR frame, (batch * num_rois, 6*6*6, 3);
        # `_`: the same grid points in the canonical coordinate system (CCS) centred on each proposal
        roi_grid_xyz, _ = self.get_global_grid_points_of_roi(
            rois, grid_size=self.pool_cfg.GRID_SIZE
        )  # (BxN, 6x6x6, 3)
        # roi_grid_xyz: (B, Nx6x6x6, 3)
        roi_grid_xyz = roi_grid_xyz.view(batch_size, -1, 3)

        # compute the voxel coordinates of grid points:
        # find the voxel each grid point falls into and take that voxel's coordinates
        roi_grid_coords_x = (roi_grid_xyz[:, :, 0:1] - self.point_cloud_range[0]) // self.voxel_size[0]
        roi_grid_coords_y = (roi_grid_xyz[:, :, 1:2] - self.point_cloud_range[1]) // self.voxel_size[1]
        roi_grid_coords_z = (roi_grid_xyz[:, :, 2:3] - self.point_cloud_range[2]) // self.voxel_size[2]
        # roi_grid_coords: (B, Nx6x6x6, 3), xyz coordinates of the voxel containing each grid point
        roi_grid_coords = torch.cat([roi_grid_coords_x, roi_grid_coords_y, roi_grid_coords_z], dim=-1)

        # build the batch index of every grid point
        batch_idx = rois.new_zeros(batch_size, roi_grid_coords.shape[1], 1)
        for bs_idx in range(batch_size):
            batch_idx[bs_idx, :, 0] = bs_idx
        # roi_grid_coords: (B, Nx6x6x6, 4)
        # roi_grid_coords = torch.cat([batch_idx, roi_grid_coords], dim=-1)
        # roi_grid_coords = roi_grid_coords.int()
        # number of grid points over all proposals in each sample, e.g. [128*6*6*6, 128*6*6*6]
        roi_grid_batch_cnt = rois.new_zeros(batch_size).int().fill_(
            roi_grid_coords.shape[1])

        """
        Pooling runs at three scales; only the pool layer of the first one (x_conv2) is printed here.
        ModuleList(
          (0): NeighborVoxelSAModuleMSG(
            (groupers): ModuleList(
              (0): VoxelQueryAndGrouping()
            )
            (mlps_in): ModuleList(
              (0): Sequential(
                (0): Conv1d(32, 32, kernel_size=(1,), stride=(1,), bias=False)
                (1): BatchNorm1d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
              )
            )
            (mlps_pos): ModuleList(
              (0): Sequential(
                (0): Conv2d(3, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)
                (1): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
              )
            )
            (mlps_out): ModuleList(
              (0): Sequential(
                (0): Conv1d(32, 32, kernel_size=(1,), stride=(1,), bias=False)
                (1): BatchNorm1d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
                (2): ReLU()
              )
            )
            (relu): ReLU()
          )
        """
        # self.pool_cfg.FEATURES_SOURCE : ['x_conv2', 'x_conv3', 'x_conv4']
        pooled_features_list = []
        for k, src_name in enumerate(self.pool_cfg.FEATURES_SOURCE):
            pool_layer = self.roi_grid_pool_layers[k]
            cur_stride = batch_dict['multi_scale_3d_strides'][src_name]
            cur_sp_tensors = batch_dict['multi_scale_3d_features'][src_name]

            if with_vf_transform:
                cur_sp_tensors = batch_dict['multi_scale_3d_features_post'][src_name]
            else:
                cur_sp_tensors = batch_dict['multi_scale_3d_features'][src_name]

            # compute voxel center xyz and batch_cnt
            # indices of the non-empty voxels in this 3D feature layer
            cur_coords = cur_sp_tensors.indices
            # (num_of_voxel, 3): centres of all non-empty voxels in this layer, computed from
            # the point cloud range, the voxel size and the layer's downsampling factor
            cur_voxel_xyz = common_utils.get_voxel_centers(
                cur_coords[:, 1:4],
                downsample_times=cur_stride,
                voxel_size=self.voxel_size,
                point_cloud_range=self.point_cloud_range
            )
            cur_voxel_xyz_batch_cnt = cur_voxel_xyz.new_zeros(batch_size).int()
            for bs_idx in range(batch_size):
                # number of voxels per sample at this scale: [num_of_voxel_1, num_of_voxel_2]
                cur_voxel_xyz_batch_cnt[bs_idx] = (cur_coords[:, 0] == bs_idx).sum()
            # get voxel2point tensor: build a dense 3D tensor at this scale in which every
            # non-empty voxel position stores that voxel's index within the batch and every
            # empty position stores -1; shape (batch, z, y, x)
            v2p_ind_tensor = common_utils.generate_voxel2pinds(cur_sp_tensors)
            # compute the grid coordinates in this scale, in [batch_idx, x y z] order
            # voxel coordinates of each proposal grid point after this layer's downsampling
            cur_roi_grid_coords = roi_grid_coords // cur_stride
            # prepend batch_idx to cur_roi_grid_coords
            cur_roi_grid_coords = torch.cat([batch_idx, cur_roi_grid_coords], dim=-1)
            cur_roi_grid_coords = cur_roi_grid_coords.int()
            # voxel neighbor aggregation
            pooled_features = pool_layer(
                xyz=cur_voxel_xyz.contiguous(),  # centres of the non-empty voxels in this layer (num_of_voxel, 3)
                xyz_batch_cnt=cur_voxel_xyz_batch_cnt,  # number of voxels per sample at this scale
                new_xyz=roi_grid_xyz.contiguous().view(-1, 3),  # RoI grid points in the LiDAR frame, in metres
                new_xyz_batch_cnt=roi_grid_batch_cnt,  # number of RoI grid points per sample
                new_coords=cur_roi_grid_coords.contiguous().view(-1, 4),  # voxel coordinates of the grid points at this scale
                features=cur_sp_tensors.features.contiguous(),  # 3D conv features of this layer
                voxel2point_indices=v2p_ind_tensor  # dense 3D index tensor
            )

            pooled_features = pooled_features.view(
                -1, self.pool_cfg.GRID_SIZE ** 3,
                pooled_features.shape[-1]
            )  # (BxN, 6x6x6, C)
            pooled_features_list.append(pooled_features)
        # (batch*128, 6x6x6, 32*3): each grid point concatenates the features
        # pooled from ['x_conv2', 'x_conv3', 'x_conv4']
        ms_pooled_features = torch.cat(pooled_features_list, dim=-1)

        return ms_pooled_features

    def get_global_grid_points_of_roi(self, rois, grid_size):
        rois = rois.view(-1, rois.shape[-1])  # (batch*128, 7)
        batch_size_rcnn = rois.shape[0]  # batch*128
        # coordinates of the 6*6*6 evenly spaced grid points of each box, in the canonical
        # coordinate system (CCS) centred on the proposal; shape (batch * 128, 216, 3)
        local_roi_grid_points = self.get_dense_grid_points(rois, batch_size_rcnn, grid_size)  # (B, 6x6x6, 3)
        # rotate the grid points by the heading angle of their RoI; shape (batch * 128, 216, 3)
        global_roi_grid_points = common_utils.rotate_points_along_z(
            local_roi_grid_points.clone(), rois[:, 6]
        ).squeeze(dim=1)
        # translate the CCS xyz into the LiDAR frame
        global_center = rois[:, 0:3].clone()
        # shape : (batch * 128, 216, 3)
        global_roi_grid_points += global_center.unsqueeze(dim=1)
        # global_roi_grid_points: grid points in the LiDAR frame;
        # local_roi_grid_points: grid points in the CCS frame
        return global_roi_grid_points, local_roi_grid_points

    @staticmethod
    def get_dense_grid_points(rois, batch_size_rcnn, grid_size):
        faked_features = rois.new_ones((grid_size, grid_size, grid_size))  # (6, 6, 6)
        dense_idx = faked_features.nonzero()  # (6*6*6, 3) [x_idx, y_idx, z_idx]
        dense_idx = dense_idx.repeat(batch_size_rcnn, 1, 1).float()  # (B, 6x6x6, 3)
        # (batch * 128, 3) (l, w, h)
        local_roi_size = rois.view(batch_size_rcnn, -1)[:, 3:6]
        # (B, 6x6x6, 3)
        # (dense_idx + 0.5) / grid_size                shape (256, 216, 3)
        # local_roi_size.unsqueeze(dim=1)              shape (256, 1, 3)
        # (dense_idx + 0.5) / grid_size * local_roi_size.unsqueeze(dim=1) gives the position of each grid point
        # - (local_roi_size.unsqueeze(dim=1) / 2) moves the origin to the box centre (CCS frame)
        roi_grid_points = (dense_idx + 0.5) / grid_size * local_roi_size.unsqueeze(dim=1) \
                          - (local_roi_size.unsqueeze(dim=1) / 2)  # (B, 6x6x6, 3)
        return roi_grid_points

    def forward(self, batch_dict):
        """
        :param input_data: input dict
        :return:
        """
        # generate proposals from all first-stage predictions, rois: (B, num_rois, 7+C)
        # roi_scores: (B, num_rois)  roi_labels: (B, num_rois)
        # 512 RoIs are generated in training, 100 in inference
        targets_dict = self.proposal_layer(
            batch_dict, nms_config=self.model_cfg.NMS_CONFIG['TRAIN' if self.training else 'TEST']
        )
        # in training mode, run target assignment on the selected RoIs and
        # transform the GT box matched to each RoI into the CCS frame
        if self.training:
            targets_dict = self.assign_targets(batch_dict)
            batch_dict['rois'] = targets_dict['rois']
            batch_dict['roi_labels'] = targets_dict['roi_labels']

        # RoI aware pooling, pooled_features shape (batch * 128, 6x6x6, 96)
        pooled_features = self.roi_grid_pool(batch_dict)  # (BxN, 6x6x6, C)

        # Box Refinement (batch * 128, 6x6x6, 96) --> (batch * 128, 6x6x6x96)
        pooled_features = pooled_features.view(pooled_features.size(0), -1)
        """
        A two-layer MLP produces the final shared feature
        Sequential(
          (0): Linear(in_features=20736, out_features=256, bias=False)
          (1): BatchNorm1d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (2): ReLU(inplace=True)
          (3): Dropout(p=0.3, inplace=False)
          (4): Linear(in_features=256, out_features=256, bias=False)
          (5): BatchNorm1d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (6): ReLU(inplace=True)
        )
        """
        shared_features = self.shared_fc_layer(pooled_features)
        # (256, 1)
        rcnn_cls = self.cls_pred_layer(self.cls_fc_layers(shared_features))
        # (256, 7)
        rcnn_reg = self.reg_pred_layer(self.reg_fc_layers(shared_features))

        # grid_size = self.model_cfg.ROI_GRID_POOL.GRID_SIZE
        # batch_size_rcnn = pooled_features.shape[0]
        # pooled_features = pooled_features.permute(0, 2, 1).\
        #     contiguous().view(batch_size_rcnn, -1, grid_size, grid_size, grid_size)  # (BxN, C, 6, 6, 6)

        # shared_features = self.shared_fc_layer(pooled_features.view(batch_size_rcnn, -1, 1))
        # rcnn_cls = self.cls_layers(shared_features).transpose(1, 2).contiguous().squeeze(dim=1)  # (B, 1 or 2)
        # rcnn_reg = self.reg_layers(shared_features).transpose(1, 2).contiguous().squeeze(dim=1)  # (B, C)

        if not self.training:
            batch_cls_preds, batch_box_preds = self.generate_predicted_boxes(
                batch_size=batch_dict['batch_size'], rois=batch_dict['rois'], cls_preds=rcnn_cls, box_preds=rcnn_reg
            )
            batch_dict['batch_cls_preds'] = batch_cls_preds
            batch_dict['batch_box_preds'] = batch_box_preds
            batch_dict['cls_preds_normalized'] = False
        else:
            targets_dict['rcnn_cls'] = rcnn_cls
            targets_dict['rcnn_reg'] = rcnn_reg

            self.forward_ret_dict = targets_dict

        return batch_dict
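The grid-point geometry used by `get_dense_grid_points`, `get_global_grid_points_of_roi` and the first lines of `roi_grid_pool` can be restated for a single box without tensors. The sketch below is only an illustration under stated assumptions: both function names are hypothetical, the rotation is written as a standard counter-clockwise rotation about z, and the KITTI range is an assumed typical value.

```python
import math


def dense_grid_points(center, size, yaw, grid_size=6):
    """Grid-point centres of one box, expressed in the LiDAR frame.

    center: (x, y, z) of the box centre
    size  : (l, w, h) extents along the box's own x, y, z axes
    yaw   : heading angle in radians, rotation about the z axis
    """
    cx, cy, cz = center
    cos_a, sin_a = math.cos(yaw), math.sin(yaw)
    points = []
    for ix in range(grid_size):
        for iy in range(grid_size):
            for iz in range(grid_size):
                # Cell centre in the box-local frame, origin at the box centre.
                lx, ly, lz = (
                    (i + 0.5) / grid_size * s - s / 2
                    for i, s in zip((ix, iy, iz), size)
                )
                # Rotate about z by the heading, then translate to the box centre.
                gx = lx * cos_a - ly * sin_a + cx
                gy = lx * sin_a + ly * cos_a + cy
                points.append((gx, gy, lz + cz))
    return points


def grid_to_voxel_coord(point, voxel_size, pc_range, stride=1):
    """Integer (x, y, z) index of the voxel containing `point` at a given stride."""
    return tuple(
        int((p - lo) // size) // stride
        for p, size, lo in zip(point, voxel_size, pc_range[:3])
    )


KITTI_VOXEL_SIZE = (0.05, 0.05, 0.1)
KITTI_RANGE = (0.0, -40.0, -3.0, 70.4, 40.0, 1.0)  # assumed range

grid = dense_grid_points(center=(10.0, 0.0, -1.0), size=(3.6, 1.8, 1.6), yaw=0.0)
coord_full = grid_to_voxel_coord((10.02, 0.01, -0.95), KITTI_VOXEL_SIZE, KITTI_RANGE)
coord_s8 = grid_to_voxel_coord((10.02, 0.01, -0.95), KITTI_VOXEL_SIZE, KITTI_RANGE, stride=8)
```

One box yields 6 × 6 × 6 = 216 grid points, and with 96 pooled channels per grid point the flattened RoI feature has 216 × 96 = 20736 values, which matches the `in_features=20736` of the first shared FC layer printed above.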

Voxel pool operation

Code: pcdet/ops/pointnet2/pointnet2_stack/voxel_pool_modules.py

    # Excerpt: forward() of NeighborVoxelSAModuleMSG. The code relies on the module-level
    # imports `import torch` and `import torch.nn.functional as F`.
    def forward(self, xyz, xyz_batch_cnt, new_xyz, new_xyz_batch_cnt, \
                new_coords, features, voxel2point_indices):
        """
        :param xyz: (N1 + N2 ..., 3) tensor of the xyz coordinates of the features
        :param xyz_batch_cnt: (batch_size), [N1, N2, ...]
        :param new_xyz: (M1 + M2 ..., 3)
        :param new_xyz_batch_cnt: (batch_size), [M1, M2, ...]
        :param new_coords: (M1 + M2 ..., 4) voxel coordinates of new_xyz, [batch_idx, x, y, z]
        :param features: (N1 + N2 ..., C) tensor of the descriptors of the features
        :param voxel2point_indices: (B, Z, Y, X) tensor of point indices
        :return:
            new_features: (M1 + M2 ..., \sum_k(mlps[k][-1])) tensor of the new_features descriptors
        """
        # change the order to [batch_idx, z, y, x]
        new_coords = new_coords[:, [0, 3, 2, 1]].contiguous()
        new_features_list = []  # pooled features, one entry per grouper
        for k in range(len(self.groupers)):
            # features_in: (1, C, N1+N2), features of the non-empty voxels (num_of_voxel, C) --> (1, C, num_of_voxel)
            features_in = features.permute(1, 0).unsqueeze(0)
            features_in = self.mlps_in[k](features_in)  # one FC layer on the voxel features
            # features_in: (1, N1+N2, C)
            features_in = features_in.permute(0, 2, 1).contiguous()  # (1, C, num_of_voxel) --> (1, num_of_voxel, C)
            # features_in: (N1+N2, C)
            features_in = features_in.view(-1, features_in.shape[-1])  # (1, num_of_voxel, C) --> (num_of_voxel, C)
            # grouped_features: (M1+M2, C, nsample)
            # grouped_xyz: (M1+M2, 3, nsample)
            # run the voxel query and group all selected voxels together
            grouped_features, grouped_xyz, empty_ball_mask = self.groupers[k](
                new_coords, xyz, xyz_batch_cnt, new_xyz, new_xyz_batch_cnt, features_in, voxel2point_indices
            )
            grouped_features[empty_ball_mask] = 0

            # grouped_features: (1, C, M1+M2, nsample)
            grouped_features = grouped_features.permute(1, 0, 2).unsqueeze(dim=0)
            # grouped_xyz: (M1+M2, 3, nsample), relative to the grid point
            grouped_xyz = grouped_xyz - new_xyz.unsqueeze(-1)
            grouped_xyz[empty_ball_mask] = 0
            # grouped_xyz: (1, 3, M1+M2, nsample)
            grouped_xyz = grouped_xyz.permute(1, 0, 2).unsqueeze(0)
            # position_features: (1, C, M1+M2, nsample), FC layer on the relative coordinates
            position_features = self.mlps_pos[k](grouped_xyz)
            # add the position features to the transformed 3D conv features
            new_features = grouped_features + position_features
            new_features = self.relu(new_features)

            if self.pool_method == 'max_pool':
                new_features = F.max_pool2d(
                    new_features, kernel_size=[1, new_features.size(3)]
                ).squeeze(dim=-1)  # (1, C, M1 + M2 ...)
            elif self.pool_method == 'avg_pool':
                new_features = F.avg_pool2d(
                    new_features, kernel_size=[1, new_features.size(3)]
                ).squeeze(dim=-1)  # (1, C, M1 + M2 ...)
            else:
                raise NotImplementedError

            new_features = self.mlps_out[k](new_features)
            new_features = new_features.squeeze(dim=0).permute(1, 0)  # (M1 + M2 ..., C)
            new_features_list.append(new_features)

        # (M1 + M2 ..., C)
        new_features = torch.cat(new_features_list, dim=1)

        return new_features

The rest of the implementation is the same as in PV-RCNN, so I won't pad this post out any further. Let's go straight to the ablation study.

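4. Ablation study

The figure below shows the effect of each module in Voxel RCNN.

D.H. stands for the second-stage detection head, used for box refinement.

V.Q. stands for the voxel query operation.

A.P. stands for the accelerated PointNet implementation.

1. Method (a) is the single-stage baseline that detects on BEV features. It runs at 40.8 FPS, but its AP is unsatisfactory, which shows that the BEV representation alone is not enough to detect objects precisely in 3D space.

2. Method (b) extends (a) with a detection head for box refinement and improves detection accuracy. This also shows that the spatial context from 3D voxels provides sufficient information for precise object detection. However, it uses ball query to extract features from the intermediate convolution layers.

3. Method (c) builds on (b) and replaces its ball query with voxel query, which speeds up detection.

4. Method (d) uses the accelerated PointNet module, further raising the FPS from 17.4 to 21.4.

5. Method (e) is the Voxel RCNN proposed in the paper; its results are shown in the figure above.

That wraps up Voxel-RCNN. Most of the models in PCDet have now been covered. Next, we'll take a look at CaDDN, the only monocular 3D detection model in the library.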

References and further reading

1. Distance vectors (Euclidean distance, Manhattan distance, etc.), 王涛涛's blog, CSDN

2. https://arxiv.org/pdf/2012.15712.pdf

3. GitHub - open-mmlab/OpenPCDet: OpenPCDet Toolbox for LiDAR-based 3D Object Detection.