本文声明:本文只本人在学习过程中的练习,如有错误之处,欢迎大家不吝赐教

在学习过程中,对标准的打印字符构建模型,并进行训练,字符样本如下图所示:

模型中共用了 3 层卷积和2 个全连接层,其中,第1层卷积核大小为 3*3,输入图像为单通道灰度图像,输出特征为 64 个特征,第2层卷积核大小为5*5,输出特征为32个特征,第3层卷积核大小为5*5,输出特征为 16 个特征,将 16 个特征在第一层全连接层,映射为 128 个特征,第2层全连接层,将上一层的128个特征,映射为10个分类;

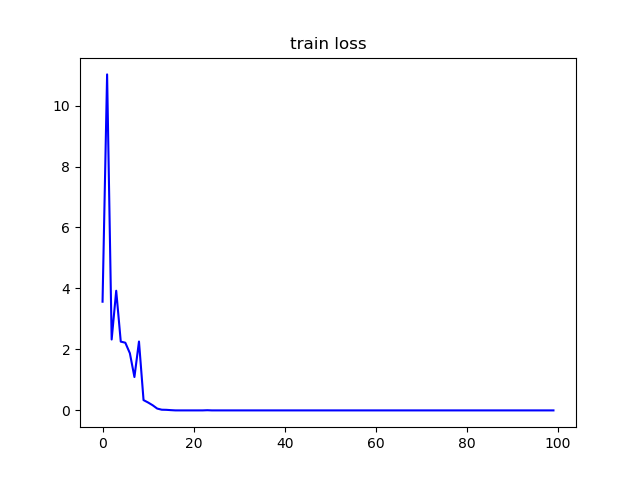

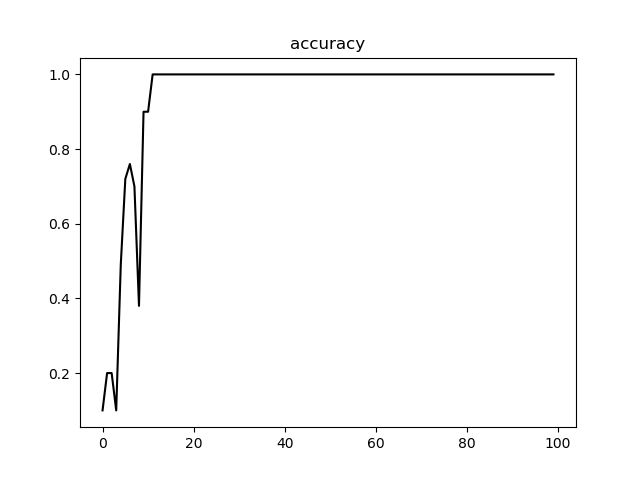

其中,每个字符选取了10个样本,并将样本进行归一化大小为128*256大小的图像,进行训练,迭代次数为100;训练过程中,损失值与准确率如下图所示:

从上图可以看书,在训练到20次左右,Loss值趋向于 0,说明是收敛的,而且准确率,也逐步达了100%,因为样本全部为标准字符,所以,识别的准确率达到100%,但也可能存在过拟合;

实现代码如下所示:

# 该程序使用 TensorFlow 对 CNN 进行实现

# 图像预处理部分使用 OpenCV

import os

import cv2

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2' # 防止报出 TensorFlow 中的警告

# 以下代码用于实现卷积网络

def weight_init(shape, name):

return tf.get_variable(name, shape, initializer=tf.random_normal_initializer(mean=0.0, stddev=0.1))

def bias_init(shape, name):

return tf.get_variable(name, shape, initializer=tf.constant_initializer(0.0))

def conv2d(input_data, conv_w):

return tf.nn.conv2d(input_data, conv_w, strides=[1, 1, 1, 1], padding='VALID')

def max_pool(input_data, size):

return tf.nn.max_pool(input_data, ksize=[1, size, size, 1], strides=[1, size, size, 1], padding='VALID')

def conv_net(input_data):

with tf.name_scope('conv1'):

w_conv1 = weight_init([3, 3, 1, 64], 'conv1_w') # 卷积核大小是 3*3 输入是 1 通道,输出为 64 通道

b_conv1 = bias_init([64], 'conv1_b')

h_conv1 = tf.nn.relu(tf.nn.bias_add(conv2d(input_data, w_conv1), b_conv1))

h_pool1 = max_pool(h_conv1, 2)

with tf.name_scope('conv2'):

w_conv2 = weight_init([5, 5, 64, 32], 'conv2_w') # 卷积核大小是 5*5 输入是64,输出为 32

b_conv2 = bias_init([32], 'conv2_b')

h_conv2 = tf.nn.relu(conv2d(h_pool1, w_conv2) + b_conv2)

h_pool2 = max_pool(h_conv2, 2)

with tf.name_scope('conv3'):

w_conv3 = weight_init([5, 5, 32, 16], 'conv3_w') # 卷积核大小是 5*5 输入是32,输出为 16

b_conv3 = bias_init([16], 'conv3_b')

h_conv3 = tf.nn.relu(conv2d(h_pool2, w_conv3) + b_conv3)

h_pool3 = max_pool(h_conv3, 2)

# print(h_pool3)

with tf.name_scope('fc1'):

w_fc1 = weight_init([28 * 12 * 16, 128], 'fc1_w') # 三层卷积后得到的图像大小为 28 * 12

b_fc1 = bias_init([128], 'fc1_b')

h_fc1 = tf.nn.relu(tf.matmul(tf.reshape(h_pool3, [-1, 28*12*16]), w_fc1) + b_fc1)

with tf.name_scope('fc2'):

w_fc2 = weight_init([128, 10], 'fc2_w')

b_fc2 = bias_init([10], 'fc2_b')

h_fc2 = tf.matmul(h_fc1, w_fc2) + b_fc2

return h_fc2

"""

# 利用 os 模块中的 walk() 函数遍历文件

def load_images(path):

image_list = [] # 图像列表,用于保存加载到内存中的图像文件

for dirPath, _, fileNames in os.walk(path):

for fileName in fileNames:

if fileName == '001.jpg':

image_name = os.path.join(dirPath, fileName)

image = cv2.imread(image_name, cv2.IMREAD_GRAYSCALE) # 加载为灰度图像

image_list.append(image)

return image_list

"""

def load_images():

img_list = []

for i in range(10): # 文件夹

path = 'F:\Python WorkSpace\OcrByCNN\Characters\%d\\' % i

for j in range(10): # 文件名

file_names = '%03d.jpg' % (j+1)

image = cv2.imread(path + file_names, cv2.IMREAD_GRAYSCALE)

img_list.append(image)

return img_list

def get_accuracy(logits, targets):

batch_prediction = np.argmax(logits, axis=1)

num_correct = np.sum(np.equal(batch_prediction, targets))

return 100.* num_correct / batch_prediction.shape[0]

def train_data():

image_list = load_images()

batch_size = 100

np_train_datas = np.empty(shape=(batch_size, 256, 128, 1), dtype='float32')

for i in range(100):

np_train_datas[i] = image_list[i][ :, :, np.newaxis]/256 # 归一化

# train_datas = tf.convert_to_tensor(np_train_datas)

train_labels_data = np.zeros(shape=(100,), dtype=int)

for i in range(10):

train_labels_data[i*10: 10*(i + 1)] = i

train_labels = tf.one_hot(train_labels_data, 10, on_value=1.0, off_value=0.0)

"""

sess = tf.Session()

init = tf.global_variables_initializer()

sess.run(init)

print(sess.run(train_labels))

"""

x_data = tf.placeholder(tf.float32, [batch_size, 256, 128, 1])

y_target = tf.placeholder(tf.float32, [batch_size, 10])

model_output = conv_net(x_data)

# print(predict)

loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(labels=y_target, logits=model_output))

# loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=model_output, logits=y_target), name='loss')

optimizer = tf.train.AdamOptimizer(1e-2).minimize(loss)

# prediction = tf.nn.softmax(model_output)

# 计算训练集准确率

train_correct_prediction = tf.equal(tf.argmax(model_output, 1), tf.argmax(train_labels, 1))

train_accuracy_1 = tf.reduce_mean(tf.cast(train_correct_prediction, tf.float32))

init = tf.global_variables_initializer()

session = tf.Session()

session.run(init)

train_loss = []

train_accuracy = []

for i in range(100): # 训练 100 次

[_loss, _, accuracy] = session.run([loss, optimizer, train_accuracy_1], feed_dict={x_data: np_train_datas, y_target: train_labels.eval(session=session)})

# accuracy = get_accuracy(_prediction, y_target)

train_loss.append(_loss)

train_accuracy.append(accuracy)

print('第 %d 次迭代:' % i)

print('loss: %0.10f' % _loss)

print('perdiction: %0.2f' % accuracy)

# print('prediction: %f' % _prediction)

session.close()

plt.title('train loss')

plt.plot(range(0, 100), train_loss, 'b-')

plt.show()

plt.title('accuracy')

plt.plot(range(0, 100), train_accuracy, 'k-')

plt.show()

def main():

train_data()

if __name__ == "__main__":

main()