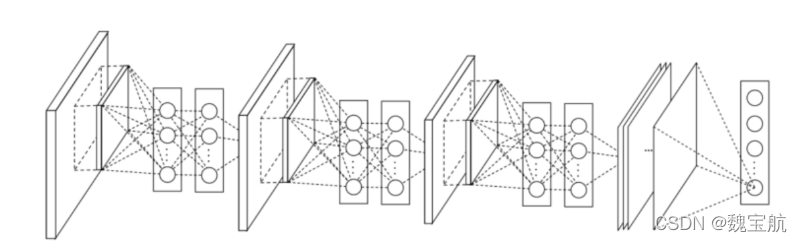

NIN network architecture

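Note: for simplicity, the code here only approximates the NIN architecture. It uses three mlpconv layers followed by a global average pooling output layer, and each mlpconv layer contains three 1×1 convolution layers.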

mlpconv layer

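A 1×1 convolution (with stride 1) only changes the number of channels and leaves the feature-map size unchanged. In effect, it acts as a fully connected layer across the channels at every spatial position.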
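To make the "fully connected across channels" claim concrete, here is a minimal NumPy sketch (NumPy and the helper names `conv1x1_loop` / `conv1x1_as_dense` are assumptions for illustration, not part of the original code). It computes a stride-1 1×1 convolution pixel by pixel and compares it with a single matrix multiply over the channel axis; both give the same result and the 28×28 spatial size is preserved.

```python
import numpy as np


def conv1x1_loop(x, w, b):
    """Naive 1x1 convolution: x is (H, W, C_in), w is (C_in, C_out), b is (C_out,)."""
    h, wd, _ = x.shape
    out = np.zeros((h, wd, w.shape[1]))
    for i in range(h):
        for j in range(wd):
            # Each output pixel only sees the C_in values at the same (i, j) position
            out[i, j] = x[i, j] @ w + b
    return out


def conv1x1_as_dense(x, w, b):
    """The same computation as one fully connected layer applied to every pixel."""
    h, wd, c_in = x.shape
    flat = x.reshape(h * wd, c_in)        # every pixel becomes one row
    return (flat @ w + b).reshape(h, wd, -1)


rng = np.random.default_rng(0)
x = rng.standard_normal((28, 28, 1))      # a single-channel 28x28 input
w = rng.standard_normal((1, 3))           # 1 input channel -> 3 filters
b = rng.standard_normal(3)

y = conv1x1_as_dense(x, w, b)
print(y.shape)                                 # (28, 28, 3): spatial size unchanged
print(np.allclose(y, conv1x1_loop(x, w, b)))   # True
```

Because the weights `w` are shared across all positions, a stack of such layers behaves like a small MLP slid over the image, which is where the name mlpconv comes from.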

```python
self.mlpconv1 = Sequential([
    Conv2D(filters=3, kernel_size=1),
    ReLU(),
    Conv2D(filters=3, kernel_size=1),
    ReLU(),
    Conv2D(filters=3, kernel_size=1)
])
```
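Since every layer in an mlpconv block is a 1×1 convolution, its size is easy to work out by hand: a Conv2D with a k×k kernel, C_in input channels and C_out filters has k·k·C_in·C_out weights plus C_out biases. A small plain-Python sketch (the helpers `conv_params` and `mlpconv_params` are hypothetical names) tallies the three blocks used in this post, assuming a 1-channel MNIST input and `output_dim=10`; ReLU and pooling layers add no weights.

```python
def conv_params(c_in, c_out, k=1, bias=True):
    """Trainable weights in a Conv2D layer with a k x k kernel."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def mlpconv_params(c_in, widths):
    """Total parameters of a stack of 1x1 convolutions with the given filter counts."""
    total = 0
    for c_out in widths:
        total += conv_params(c_in, c_out)
        c_in = c_out  # the next layer sees this layer's output channels
    return total


# Filter counts from this post's NIN: 1 input channel, output_dim=10
block1 = mlpconv_params(1, [3, 3, 3])
block2 = mlpconv_params(3, [3, 3, 3])
block3 = mlpconv_params(3, [3, 3, 10])
print(block1, block2, block3, block1 + block2 + block3)  # 30 36 64 130
```

With only 3 filters per layer the model is tiny; widening the filters grows the count roughly quadratically because of the C_in·C_out term.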

Global average pooling layer

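GlobalAveragePooling averages all the values in each feature map and uses that single number as the feature value of the corresponding channel. NIN replaces the MLP (fully connected) layers traditionally used at the output with global average pooling; because this layer has no trainable parameters, it helps reduce overfitting. If the task has 1000 classes, the last layer also outputs 1000 feature maps, one per class. Global average pooling turns them into a 1×1×1000 tensor, i.e. a 1000-dimensional feature vector, which is then passed through softmax.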
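The pooling-then-softmax step described above can be sketched in NumPy as follows. This mirrors what Keras `GlobalAveragePooling2D` followed by `Softmax` compute for channels-last input, but it is an illustrative re-implementation, not the Keras code; the batch size of 2 and the 7×7 map size are arbitrary assumptions.

```python
import numpy as np


def global_average_pool(feature_maps):
    """(N, H, W, C) -> (N, C): average every feature map down to one number."""
    return feature_maps.mean(axis=(1, 2))


def softmax(z):
    """Row-wise softmax; subtracting the row max keeps exp() from overflowing."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


num_classes = 1000
rng = np.random.default_rng(0)
maps = rng.standard_normal((2, 7, 7, num_classes))  # last layer: one map per class

scores = global_average_pool(maps)  # (2, 1000): one score per class, no weights involved
probs = softmax(scores)
print(scores.shape, probs.shape)              # (2, 1000) (2, 1000)
print(np.allclose(probs.sum(axis=1), 1.0))    # True
```

Note that the pooling itself has nothing to learn: all class-specific parameters live in the preceding 1×1 convolution.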

```python
self.global_average_pool = GlobalAveragePooling2D()
```

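```python
"""
* Created with PyCharm
* Author: 阿光
* Date: 2021/1/12
* Time: 22:35
* Description: In the original paper the handwritten-digit images are 32*32, while MNIST
  images are 28*28, so the image size was changed before training.
  Another option is to use padding='same' in the first convolution layer, which keeps
  its output at 28*28 so that the rest of the network works unchanged.
"""
import tensorflow as tf
from tensorflow.keras import Sequential
from tensorflow.keras.layers import Conv2D, GlobalAveragePooling2D, ReLU, Softmax


class NIN(tf.keras.Model):
    def __init__(self, output_dim=10):
        super(NIN, self).__init__()
        # mlpconv block 1: three stacked 1x1 convolutions act as a small per-pixel MLP
        self.mlpconv1 = Sequential([
            Conv2D(filters=3, kernel_size=1),
            ReLU(),
            Conv2D(filters=3, kernel_size=1),
            ReLU(),
            Conv2D(filters=3, kernel_size=1)
        ])
        # mlpconv block 2: same structure
        self.mlpconv2 = Sequential([
            Conv2D(filters=3, kernel_size=1),
            ReLU(),
            Conv2D(filters=3, kernel_size=1),
            ReLU(),
            Conv2D(filters=3, kernel_size=1)
        ])
        # mlpconv block 3: the last 1x1 convolution outputs one feature map per class
        self.mlpconv3 = Sequential([
            Conv2D(filters=3, kernel_size=1),
            ReLU(),
            Conv2D(filters=3, kernel_size=1),
            ReLU(),
            Conv2D(filters=output_dim, kernel_size=1)
        ])
        # Average each class map to one score: (batch, H, W, output_dim) -> (batch, output_dim)
        self.global_average_pool = GlobalAveragePooling2D()
        # Create the softmax layer once instead of on every forward pass
        self.softmax = Softmax()

    def call(self, inputs):
        x = self.mlpconv1(inputs)
        x = self.mlpconv2(x)
        x = self.mlpconv3(x)
        x = self.global_average_pool(x)
        # Return class probabilities
        return self.softmax(x)
```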
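The note in the docstring about 32*32 versus 28*28 inputs comes down to the convolution output-size rule. A small sketch (the helper `conv_output_size` is a hypothetical name) following the Keras conventions for `padding='valid'` and `padding='same'`. The 5×5 kernel is an assumed LeNet-5-style first layer used only for illustration; the `NIN` class above uses 1×1 kernels exclusively, which already preserve a 28×28 input under the default `'valid'` padding.

```python
import math


def conv_output_size(n, k, stride=1, padding="valid"):
    """Spatial output size of a Conv2D layer along one axis, Keras-style padding."""
    if padding == "same":
        return math.ceil(n / stride)
    if padding == "valid":
        return math.ceil((n - k + 1) / stride)
    raise ValueError(f"unknown padding: {padding}")


# Assumed 5x5 first convolution (LeNet-5 style, not part of the NIN above)
print(conv_output_size(32, 5))                  # 28: a 32x32 input shrinks to 28x28
print(conv_output_size(28, 5))                  # 24: raw 28x28 MNIST would shrink to 24x24
print(conv_output_size(28, 5, padding="same"))  # 28: padding='same' keeps 28x28
# The 1x1 convolutions used in this NIN never change the spatial size
print(conv_output_size(28, 1))                  # 28
```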

Calling the model to train on the MNIST dataset

```python
"""
* Created with PyCharm
* Author: 阿光
* Date: 2021/1/12
* Time: 22:20
* Description:
"""
import tensorflow as tf
from tensorflow.keras import Input

import model  # the model file above (model.py), which defines the NIN class

# step1: load the dataset
(train_images, train_labels), (test_images, test_labels) = tf.keras.datasets.mnist.load_data()

# step2: normalize pixel values to [0, 1]
train_images, test_images = train_images / 255.0, test_images / 255.0

# step3: add a channel axis so the shape becomes (60000, 28, 28, 1)
train_images = tf.expand_dims(train_images, axis=3)
test_images = tf.expand_dims(test_images, axis=3)

# step4: create the model
net = model.NIN(10)

# Tell the model the shape of its input data
net.build(input_shape=(1, 28, 28, 1))

# Work around summary() showing "multiple" in the Output Shape column
net.call(Input(shape=(28, 28, 1)))

net.summary()

# step5: compile the model; NIN.call already applies softmax,
# so the loss receives probabilities rather than logits
net.compile(optimizer='adam',
            loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
            metrics=['accuracy'])

# Path where the weights are saved
checkpoint_path = "./weight/cp.ckpt"

# Callback that saves the weights with the lowest validation loss
save_callback = tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_path,
                                                   save_best_only=True,
                                                   save_weights_only=True,
                                                   monitor='val_loss',
                                                   verbose=1)

# step6: train the model
history = net.fit(train_images,
                  train_labels,
                  epochs=10,
                  batch_size=32,
                  validation_data=(test_images, test_labels),
                  callbacks=[save_callback])
```
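`SparseCategoricalCrossentropy` interprets the model output according to `from_logits`: with `True` it applies softmax itself, with `False` it expects probabilities. The NumPy re-implementation below (the function `sparse_ce` is hypothetical and is not the Keras source) shows why this flag has to match the model's last layer: passing softmax outputs to a loss configured with `from_logits=True` applies softmax twice and changes the loss value.

```python
import numpy as np


def sparse_ce(outputs, labels, from_logits):
    """Mean sparse categorical cross-entropy with a from_logits switch."""
    if from_logits:
        # Treat outputs as raw scores: apply a numerically stable log-softmax first
        z = outputs - outputs.max(axis=1, keepdims=True)
        log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    else:
        # Treat outputs as probabilities that already sum to 1
        log_probs = np.log(outputs)
    # Pick the log-probability of the true class for each sample
    return -log_probs[np.arange(len(labels)), labels].mean()


logits = np.array([[4.0, 1.0, 0.0]])
labels = np.array([0])
probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)

print(sparse_ce(logits, labels, from_logits=True))   # logits in, from_logits=True
print(sparse_ce(probs, labels, from_logits=False))   # probabilities in, from_logits=False (same value)
print(sparse_ce(probs, labels, from_logits=True))    # mismatch: softmax applied twice, larger loss
```

The first two calls agree; the third flattens the distribution a second time, so the loss stays high even for a confident, correct prediction and training receives a weaker gradient signal.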