import requests

import re

def getHTMLText(url):  # Fetch a page and return its text
    try:
        headers = {
            "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.149 Safari/537.36",
            "cookie": "miid=1428930817865580362; cna=EarZFfUm1S0CARsR+220O8hH; t=8bc94e7bc688eb7af5533f1976650fde; _m_h5_tk=65dbeb4e38f534aacf4025c8d4e81bce_1586794235712; _m_h5_tk_enc=e96d92ee16e958b4890caa9fc2fa6db4; thw=cn; cookie2=1554e5bbbfe6457cf1c1c9aa63c058df; v=0; _tb_token_=5a85e0188653; _samesite_flag_=true; sgcookie=EpId%2FVCz%2BPjBPFKeidqdS; unb=2683761081; uc3=lg2=WqG3DMC9VAQiUQ%3D%3D&id2=UU6p%2BQEJ8tSc4g%3D%3D&vt3=F8dBxdGLa3BXsASlX%2Bw%3D&nk2=BcLP06d1nZPt5PbdCo24Cnoi; csg=1e8e7f0a; lgc=freezing2856803123; cookie17=UU6p%2BQEJ8tSc4g%3D%3D; dnk=freezing2856803123; skt=6a084e57cf10b6e6; existShop=MTU4NzE5NDg1OA%3D%3D; uc4=id4=0%40U2xkY0WHChRFrR6VhQm75gIGMATD&nk4=0%40B044YAqLRKUazEZ7eWhSvUymCOjtR%2FkE1PO2nJ8%3D; tracknick=freezing2856803123; _cc_=U%2BGCWk%2F7og%3D%3D; _l_g_=Ug%3D%3D; sg=317; _nk_=freezing2856803123; cookie1=B0BXi%2BrAh%2BCsG%2B9LmOzVV9j8dAB5xdFbcF%2BmnvpYvzA%3D; tfstk=chgGBuae-cr6eLnsN1asMerwb79daT74EquI8V-uS4f_xE3z_sIoYL5pOSEkdp1..; mt=ci=97_1; enc=0gxF3t55dTUIEQOzUSrgF7p2gdf9xdcdC6xm317h5dXRn7D21KYrLJkRJFp6vcy6l7Z2CrAPewgEdMBB0j7yHg%3D%3D; alitrackid=www.taobao.com; lastalitrackid=www.taobao.com; hng=CN%7Czh-CN%7CCNY%7C156; uc1=cookie16=UtASsssmPlP%2Ff1IHDsDaPRu%2BPw%3D%3D&cookie21=U%2BGCWk%2F7p4mBoUyS4plD&cookie15=URm48syIIVrSKA%3D%3D&existShop=false&pas=0&cookie14=UoTUPc3lioQ%2F3A%3D%3D; JSESSIONID=B3B7C7381542916C591F2634FDE31A52; l=eBSbgB4VqimFn0mBBOfwdA7-hk7OSBdYYu8NeR-MiT5PON1p5CxAWZXZX0L9C3GVhsZXR3Szm2rQBeYBqS24n5U62j-la_kmn; isg=BGJi2OxgSCf6jlezYGKTe0FGvejEs2bNw3JHu6z7jlWAfwL5lEO23eh9r7uD9N5l",
        }
        r = requests.get(url, timeout=30, headers=headers)
        r.raise_for_status()
        r.encoding = r.apparent_encoding
        return r.text
    except requests.RequestException:
        # Any network or HTTP error yields an empty page
        return ""

def parsePage(ilt, html):  # Extract [price, title] pairs from the page into ilt
    # Capture groups return the values without the surrounding quotes
    plt = re.findall(r'"view_price":"([\d.]*)"', html)  # prices
    tlt = re.findall(r'"raw_title":"(.*?)"', html)  # titles
    for price, title in zip(plt, tlt):
        ilt.append([price, title])

def printGoodsList(ilt):  # Print the collected Taobao listings
    # chr(12288) is the full-width space, used as fill so Chinese titles align
    tplt = "{0:^4}\t{1:<8}\t{2:{3}<16}"
    print(tplt.format("序号", "价格", "商品名称", chr(12288)))  # No., price, product name
    for count, g in enumerate(ilt, start=1):
        print(tplt.format(count, g[0], g[1], chr(12288)))

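def main():
    goods = '书包'  # Search term ("schoolbag")
    depth = 2  # Number of result pages to crawl
    start_url = 'https://s.taobao.com/search?q=' + goods
    infoList = []  # Accumulates the results
    for i in range(depth):
        try:
            url = start_url + '&s=' + str(44 * i)  # s is the item offset, advancing by 44 per page
            html = getHTMLText(url)
            parsePage(infoList, html)
        except Exception:
            continue
    printGoodsList(infoList)

if __name__ == "__main__":
    main()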
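The extraction step can be tried offline without contacting Taobao. The following is a minimal sketch, assuming the page embeds `"raw_title"` and `"view_price"` fields as the regular expressions in the script suggest. `SAMPLE_HTML` and `extract_items` are hypothetical names introduced only for this illustration, and the sample data is made up. The first title contains a colon on purpose, a case where splitting the match on `":"` would cut the title short.

```python
import re

# Hypothetical fragment imitating the JSON embedded in a search results page.
SAMPLE_HTML = (
    '{"raw_title":"双肩包: 学生书包","view_price":"129.00"},'
    '{"raw_title":"Canvas backpack","view_price":"59.90"}'
)

# The capture group returns only the value between the quotes.
PRICE_RE = re.compile(r'"view_price":"([\d.]*)"')
TITLE_RE = re.compile(r'"raw_title":"(.*?)"')


def extract_items(html):
    """Return a list of [price, title] pairs found in html."""
    prices = PRICE_RE.findall(html)
    titles = TITLE_RE.findall(html)
    # zip stops at the shorter list, so a missing field cannot raise IndexError.
    return [[price, title] for price, title in zip(prices, titles)]


items = extract_items(SAMPLE_HTML)
for price, title in items:
    print(price, title)
```

Prices stay as strings here, as in the script; convert them with `float(price)` if numeric sorting is needed.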

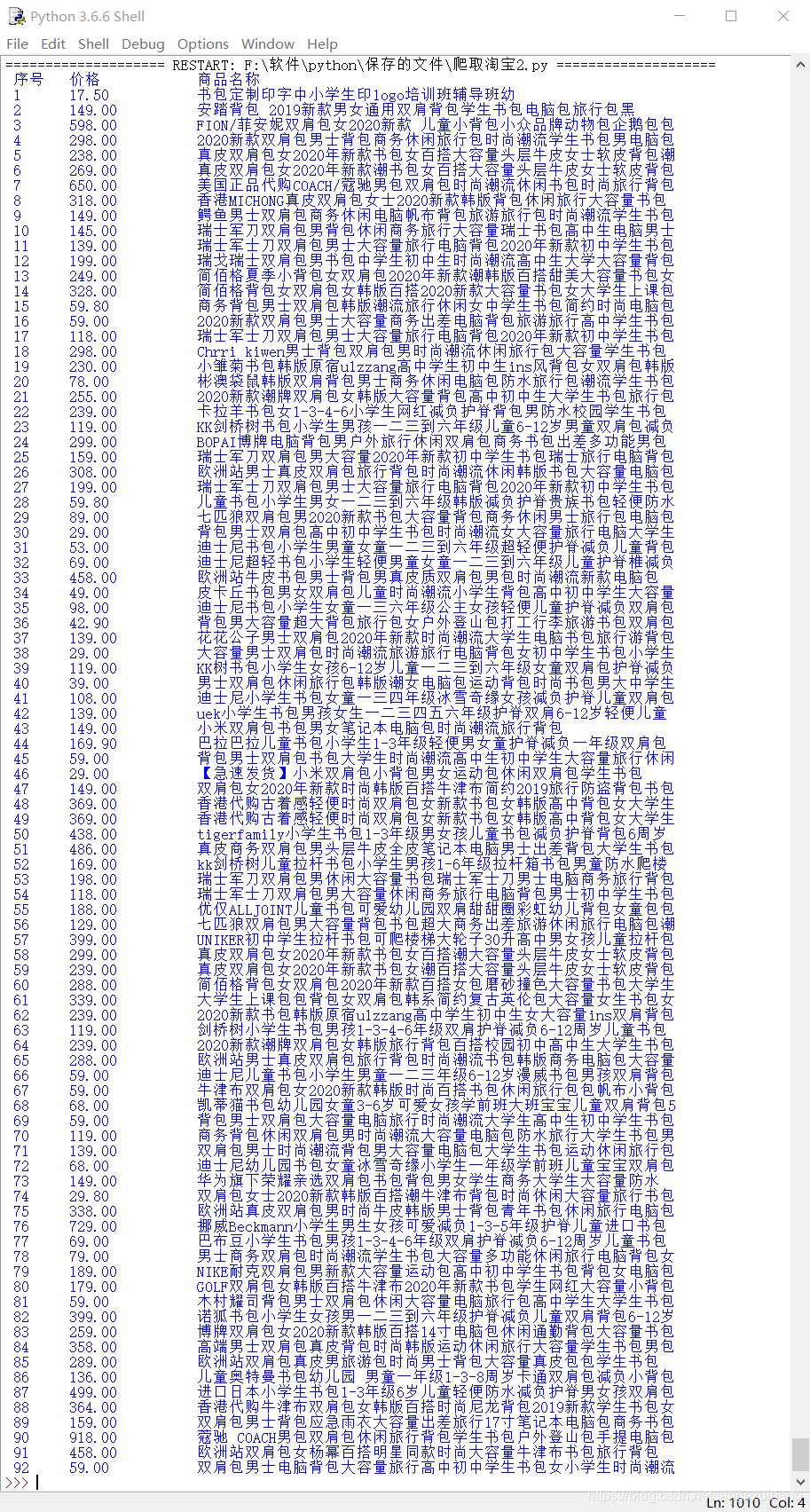

Sample output: