Citation

LaTeX (BibTeX)

@ARTICLE{6381531,

author={B. Xue and M. Zhang and W. N. Browne},

journal={IEEE Transactions on Cybernetics},

title={Particle Swarm Optimization for Feature Selection in Classification: A Multi-Objective Approach},

year={2013},

volume={43},

number={6},

pages={1656-1671},

keywords={Pareto optimisation;evolutionary computation;feature extraction;particle swarm optimisation;pattern classification;sorting;PSO-based multiobjective feature selection algorithms;Pareto front;classification performance;classification problems;crowding;dominance;evolutionary multiobjective algorithms;feature subsets;multiobjective particle swarm optimization;mutation;nondominated solutions;nondominated sorting;single objective feature selection method;two-stage feature selection algorithm;Error analysis;Heuristic algorithms;Optimization;Search problems;Standards;Support vector machines;Training;Feature selection;multi-objective optimization;particle swarm optimization (PSO)},

doi={10.1109/TSMCB.2012.2227469},

ISSN={2168-2267},

month={Dec},}

Plain text

B. Xue, M. Zhang and W. N. Browne, “Particle Swarm Optimization for Feature Selection in Classification: A Multi-Objective Approach,” in IEEE Transactions on Cybernetics, vol. 43, no. 6, pp. 1656-1671, Dec. 2013.

doi: 10.1109/TSMCB.2012.2227469

keywords: {Pareto optimisation;evolutionary computation;feature extraction;particle swarm optimisation;pattern classification;sorting;PSO-based multiobjective feature selection algorithms;Pareto front;classification performance;classification problems;crowding;dominance;evolutionary multiobjective algorithms;feature subsets;multiobjective particle swarm optimization;mutation;nondominated solutions;nondominated sorting;single objective feature selection method;two-stage feature selection algorithm;Error analysis;Heuristic algorithms;Optimization;Search problems;Standards;Support vector machines;Training;Feature selection;multi-objective optimization;particle swarm optimization (PSO)},

URL: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=6381531&isnumber=6670128

Abstract

Irrelevant and redundant features can reduce classification performance.

Feature selection aims to choose a small number of relevant features. It has two conflicting objectives:

- maximizing the classification performance

- minimizing the number of features
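The two objectives above can be made concrete by treating each candidate feature subset as a point to be minimized in both coordinates: (number of selected features, error rate). The following is a minimal sketch under that assumption; `error_rate` is a hypothetical callback standing in for whatever classifier evaluation is used, and the toy error function is purely illustrative.

```python
from typing import Callable, Sequence, Tuple

# A candidate solution is a binary mask over the original features:
# mask[i] == 1 means feature i is selected.
Mask = Sequence[int]


def objectives(mask: Mask, error_rate: Callable[[Mask], float]) -> Tuple[int, float]:
    """Return the two objectives to MINIMIZE: (number of features, error rate).

    `error_rate` is a hypothetical callback that evaluates a classifier on the
    selected features and returns its error rate.
    """
    return sum(mask), error_rate(mask)


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """Pareto dominance for minimization: `a` is no worse than `b` in every
    objective and strictly better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


if __name__ == "__main__":
    # Toy error function (illustrative only): features 0 and 2 help, feature 1 hurts.
    def toy_error(mask: Mask) -> float:
        return 0.30 - 0.10 * mask[0] - 0.10 * mask[2] + 0.02 * mask[1]

    a = objectives([1, 0, 1, 0], toy_error)  # 2 features, error about 0.10
    b = objectives([1, 1, 1, 1], toy_error)  # 4 features, error about 0.12
    print(a, b, dominates(a, b))
```

Here the smaller subset is both shorter and more accurate, so it dominates the full set; when one subset is shorter but less accurate, neither dominates and both can sit on the Pareto front.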

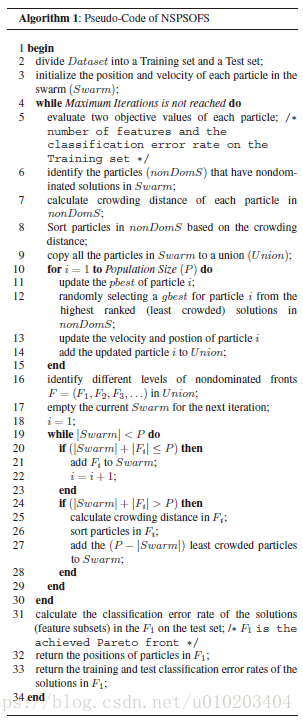

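This is the first study on multi-objective particle swarm optimization (PSO) for feature selection; the task is to generate a Pareto front of nondominated solutions (feature subsets). Two PSO-based multi-objective feature selection algorithms are investigated:

- the first introduces nondominated sorting into PSO

- the second applies crowding, mutation, and dominance to PSO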
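Nondominated sorting and crowding distance are standard building blocks of evolutionary multi-objective optimization (popularized by NSGA-II). As a rough illustration, and not the paper's exact procedure, the sketch below splits a set of objective vectors (all minimized) into successive fronts and computes a crowding distance within one front; larger distances mark solutions in less crowded regions, which helps keep the front diverse.

```python
from typing import Dict, List, Sequence, Tuple

Objectives = Tuple[float, ...]  # every objective is minimized


def dominates(a: Objectives, b: Objectives) -> bool:
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def nondominated_sort(points: Sequence[Objectives]) -> List[List[int]]:
    """Split point indices into fronts F1, F2, ...; F1 is the Pareto front."""
    n = len(points)
    beaten: List[List[int]] = [[] for _ in range(n)]  # indices that p dominates
    counts = [0] * n                                  # how many points dominate p
    fronts: List[List[int]] = [[]]
    for p in range(n):
        for q in range(n):
            if dominates(points[p], points[q]):
                beaten[p].append(q)
            elif dominates(points[q], points[p]):
                counts[p] += 1
        if counts[p] == 0:
            fronts[0].append(p)
    i = 0
    while fronts[i]:
        nxt: List[int] = []
        for p in fronts[i]:
            for q in beaten[p]:
                counts[q] -= 1
                if counts[q] == 0:  # q is only dominated by earlier fronts
                    nxt.append(q)
        i += 1
        fronts.append(nxt)
    return fronts[:-1]  # drop the trailing empty front


def crowding_distance(points: Sequence[Objectives], front: Sequence[int]) -> Dict[int, float]:
    """Crowding distance per index in `front`; boundary points get infinity."""
    if len(front) <= 2:
        return {i: float("inf") for i in front}
    dist = {i: 0.0 for i in front}
    for k in range(len(points[front[0]])):
        ordered = sorted(front, key=lambda i: points[i][k])
        lo, hi = points[ordered[0]][k], points[ordered[-1]][k]
        dist[ordered[0]] = dist[ordered[-1]] = float("inf")
        if hi == lo:
            continue  # all equal in this objective: no spread to measure
        for j in range(1, len(ordered) - 1):
            gap = points[ordered[j + 1]][k] - points[ordered[j - 1]][k]
            dist[ordered[j]] += gap / (hi - lo)
    return dist


if __name__ == "__main__":
    # (number of features, error rate) for five hypothetical feature subsets
    pts = [(1, 0.30), (2, 0.20), (3, 0.10), (3, 0.25), (4, 0.15)]
    fronts = nondominated_sort(pts)
    print(fronts)                              # [[0, 1, 2], [3, 4]]
    print(crowding_distance(pts, fronts[0]))   # {0: inf, 1: 2.0, 2: inf}
```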

The two proposed algorithms are compared with:

- two conventional feature selection methods

- a single objective feature selection method

- a two-stage feature selection algorithm

- three well-known evolutionary multi-objective algorithms

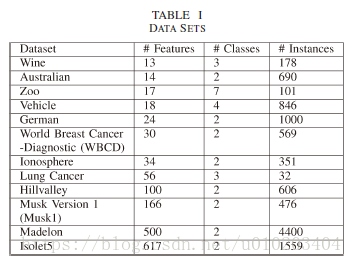

Experiments are conducted on 12 benchmark data sets.

Main content

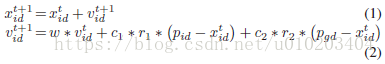

PSO
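The notes do not spell out the PSO update itself. Below is a minimal sketch of the standard inertia-weight PSO update, assuming a continuous encoding in which each particle holds one position value per feature and a feature counts as selected when that value exceeds a threshold. The constants `W`, `C1`, `C2`, `V_MAX` and `THETA` are assumptions chosen for illustration, not values taken from these notes.

```python
import random
from typing import List

# Assumed constants (illustrative only):
W, C1, C2 = 0.7298, 1.49618, 1.49618  # inertia weight, acceleration coefficients
V_MAX = 6.0                           # velocity clamp
THETA = 0.6                           # selection threshold on position values


def update_particle(x: List[float], v: List[float],
                    pbest: List[float], gbest: List[float],
                    rng: random.Random) -> None:
    """In-place velocity/position update of one particle (one value per feature)."""
    for d in range(len(x)):
        r1, r2 = rng.random(), rng.random()
        v[d] = W * v[d] + C1 * r1 * (pbest[d] - x[d]) + C2 * r2 * (gbest[d] - x[d])
        v[d] = max(-V_MAX, min(V_MAX, v[d]))  # keep velocity within [-V_MAX, V_MAX]
        x[d] += v[d]


def decode(x: List[float], theta: float = THETA) -> List[int]:
    """Feature d is selected (1) when its position component exceeds theta."""
    return [1 if xd > theta else 0 for xd in x]


if __name__ == "__main__":
    rng = random.Random(0)
    x, v = [0.2, 0.9, 0.5], [0.0, 0.0, 0.0]
    update_particle(x, v, pbest=[0.8, 0.9, 0.1], gbest=[0.9, 0.7, 0.0], rng=rng)
    print(decode(x))  # resulting feature mask for this particle
```

In a multi-objective variant there is no single global best, so `gbest` would be a leader drawn from the current set of nondominated solutions; this sketch leaves that choice to the caller.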

Feature selection (FS)

Traditional methods:

- sequential forward selection (SFS)

- sequential backward selection (SBS)

- the “plus-l-take away-r” method

- sequential forward floating selection (SFFS)

- sequential backward floating selection (SBFS)

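- the Relief algorithm: assigns a weight to each feature to indicate its relevance to the class

- decision trees (DTs): the features used in the learned tree form the selected subset

- the FOCUS algorithm: exhaustively examines all feature subsets and selects the smallest one that is sufficient to determine the class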
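SFS and SBS are greedy wrapper searches. A minimal sketch of both follows, assuming a hypothetical `score` callback that returns a quality measure (higher is better, e.g. validation accuracy) for a feature subset. This sketch stops as soon as no single addition or removal improves the score; that is one common stopping rule, and others fix the subset size in advance.

```python
from typing import Callable, FrozenSet, List

# Hypothetical evaluator: higher is better (e.g. accuracy of a classifier).
Score = Callable[[FrozenSet[int]], float]


def sfs(n_features: int, score: Score) -> List[int]:
    """Sequential forward selection: start empty and greedily add the feature
    that improves the score most; stop when no addition helps."""
    selected: FrozenSet[int] = frozenset()
    best = score(selected)
    while len(selected) < n_features:
        candidates = [selected | {f} for f in range(n_features) if f not in selected]
        cand = max(candidates, key=score)
        cand_score = score(cand)
        if cand_score <= best:
            break
        selected, best = cand, cand_score
    return sorted(selected)


def sbs(n_features: int, score: Score) -> List[int]:
    """Sequential backward selection: start with all features and greedily remove
    the feature whose removal improves the score most; stop when no removal helps."""
    selected: FrozenSet[int] = frozenset(range(n_features))
    best = score(selected)
    while len(selected) > 1:
        candidates = [selected - {f} for f in selected]
        cand = max(candidates, key=score)
        cand_score = score(cand)
        if cand_score <= best:
            break
        selected, best = cand, cand_score
    return sorted(selected)


if __name__ == "__main__":
    useful = {0, 2}  # toy setting: features 0 and 2 are relevant, the rest are noise

    def toy_score(s: FrozenSet[int]) -> float:
        return len(s & useful) - 0.1 * len(s - useful)

    print(sfs(4, toy_score), sbs(4, toy_score))  # [0, 2] [0, 2]
```

Because a feature added by SFS (or removed by SBS) is never reconsidered, both can get stuck in poor subsets; the "plus-l-take away-r" method and the floating variants SFFS and SBFS address this by allowing backtracking steps.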

Algorithm

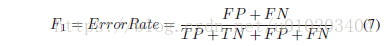

TP, TN, FP, FN: true positives, true negatives, false positives, and false negatives
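Assuming image 4 shows the usual error rate built from these four counts, ErrorRate = (FP + FN) / (TP + TN + FP + FN), it can be computed from true and predicted labels as in this minimal sketch (binary labels, with 1 as the positive class). For multi-class data the same quantity is simply the fraction of misclassified instances.

```python
from typing import Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (TP, TN, FP, FN) for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def error_rate(tp: int, tn: int, fp: int, fn: int) -> float:
    """ErrorRate = (FP + FN) / (TP + TN + FP + FN)."""
    return (fp + fn) / (tp + tn + fp + fn)


if __name__ == "__main__":
    counts = confusion_counts([1, 0, 1, 1, 0], [1, 0, 0, 1, 1])
    print(counts, error_rate(*counts))  # (2, 1, 1, 1) 0.4
```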

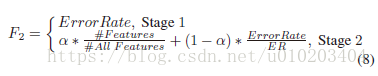

ER: the error rate obtained on the training set when all available features are used for classification
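ER serves to normalize the error term of a weighted single-objective fitness. Assuming image 5 shows a fitness of the form Fitness = α · (#selected features / #all features) + (1 − α) · (ErrorRate / ER), to be minimized, a minimal sketch is given below; both this form and the α values used in the example are assumptions for illustration.

```python
def weighted_fitness(n_selected: int, n_all: int, error_rate: float,
                     er_all: float, alpha: float) -> float:
    """Assumed form (minimized):
    alpha * n_selected / n_all + (1 - alpha) * error_rate / er_all,
    where er_all is the training error rate using all features (ER)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if n_all <= 0 or er_all <= 0.0:
        raise ValueError("n_all and er_all must be positive")
    return alpha * n_selected / n_all + (1.0 - alpha) * error_rate / er_all


if __name__ == "__main__":
    # 5 of 20 features, half the full-feature error rate: roughly 0.45
    print(weighted_fitness(5, 20, 0.10, 0.20, alpha=0.2))
```

Dividing by ER puts the error term on the same scale as the feature ratio: a subset that exactly matches the full-feature error contributes 1 to that term, and values below 1 mean it beats using all features.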