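I've recently been learning Python and got hooked on Python crawling frameworks, so I studied a lot of code written by experienced developers online. After repeated testing and tweaking, I finally got it working!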

Original article: https://blog.csdn.net/ljm_9615/article/details/76694188

My environment: Windows 10, Python 3.6, and PyCharm as the IDE.

1. Create the project

In cmd, change into the directory where you want to create the project and run `scrapy startproject doubanmovie`.

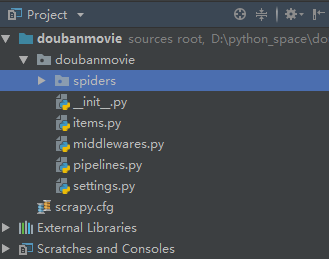

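Open the project in PyCharm. The parts you will work with:

- `spiders` folder: write your own spider here
- `items.py`: define containers that hold the scraped data
- `pipelines.py`: process the data in various ways
- `settings.py`: configure the project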

2. Write the code

In `items.py`, define a data object so the scraped data is easy to manage. The code:

```python
# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class MovieItem(scrapy.Item):
    # Movie title
    name = scrapy.Field()
    # Movie info
    info = scrapy.Field()
    # Rating
    rating = scrapy.Field()
    # Number of ratings
    num = scrapy.Field()
    # Tagline (classic quote)
    quote = scrapy.Field()
    # Poster image
    img_url = scrapy.Field()
    # Rank number
    id_num = scrapy.Field()
```
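Declaring fields pays off in a concrete way. `scrapy.Item` accepts only keys declared as `Field()`s, so a typo such as `item['rate']` fails immediately with a `KeyError` instead of quietly creating a new key. The stdlib-only sketch below imitates that behaviour. `DeclaredFieldsDict` and `MovieRecord` are hypothetical names used for illustration, not Scrapy's implementation.

```python
class DeclaredFieldsDict(dict):
    """Hypothetical illustration, not Scrapy code: a dict that only accepts
    keys listed in `fields`, imitating how scrapy.Item rejects undeclared keys.
    Only item assignment (d[key] = value) is checked in this sketch."""

    fields = ()  # subclasses list their allowed keys here

    def __setitem__(self, key, value):
        if key not in self.fields:
            raise KeyError(f"{type(self).__name__} does not support field: {key}")
        super().__setitem__(key, value)


class MovieRecord(DeclaredFieldsDict):
    # Same field names as MovieItem above
    fields = ("name", "info", "rating", "num", "quote", "img_url", "id_num")


record = MovieRecord()
record["name"] = "Example Movie"
record["rating"] = "9.0"
try:
    record["rate"] = "9.0"  # typo: "rate" is not a declared field
except KeyError as exc:
    print("rejected:", exc)
print(dict(record))
```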

Create `my_spider.py` under the `spiders` folder. The complete code:

```python
import scrapy

from doubanmovie.items import MovieItem


class DoubanMovie(scrapy.Spider):
    # Unique name of the spider (used by `scrapy crawl doubanMovie`)
    name = 'doubanMovie'
    # Domains the spider may crawl (the attribute name is plural)
    allowed_domains = ['movie.douban.com']
    # Page to start crawling from
    start_urls = ['https://movie.douban.com/top250']

    # While debugging, you can first print(response.body) in parse() to inspect the page

    def parse(self, response):
        # Each movie on the page sits in a <div class="item">
        movies = response.xpath('//div[@class="item"]')
        for movie in movies:
            # Create a fresh item per movie so yielded items don't share state
            item = MovieItem()
            # Titles in several languages; join the Chinese and foreign titles into one string
            titles = movie.xpath('.//span[@class="title"]/text()').extract()
            item['name'] = ''.join(title.strip() for title in titles)
            # Movie info text nodes, joined into one string
            infos = movie.xpath('.//div[@class="bd"]/p/text()').extract()
            item['info'] = ''.join(info.strip() for info in infos)
            # Rating
            item['rating'] = movie.xpath('.//span[@class="rating_num"]/text()').extract_first().strip()
            # Number of ratings: [:-3] drops the trailing 3-character suffix "人评价"
            item['num'] = movie.xpath('.//div[@class="star"]/span[last()]/text()').extract_first().strip()[:-3]
            # Tagline; some movies have none
            quote = movie.xpath('.//span[@class="inq"]/text()').extract_first()
            item['quote'] = quote.strip() if quote else 'null'
            # Poster image URL
            item['img_url'] = movie.xpath('.//img/@src').extract_first()
            # Rank number
            item['id_num'] = movie.xpath('.//em/text()').extract_first()
            yield item

        # Follow the "next page" link; its href is relative, so resolve it against the current URL
        next_page = response.xpath('//span[@class="next"]/a/@href').extract_first()
        if next_page:
            yield scrapy.Request(response.urljoin(next_page), callback=self.parse)
```
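The string handling in `parse()` is easy to get subtly wrong. One option is to pull it into small pure functions and check them without touching the network. The sketch below is a hypothetical refactoring, not part of the original project. `next_page_url` uses `urllib.parse.urljoin` to resolve a query-only href against the current page URL, much as Scrapy's `response.urljoin` does. The href format `?start=25&filter=` is an assumption about what Douban's "next" link looked like.

```python
from urllib.parse import urljoin


def join_stripped(parts):
    """Strip each text node and concatenate them (the name/info handling in parse())."""
    return "".join(part.strip() for part in parts)


def clean_rating_count(text, suffix="人评价"):
    """Turn e.g. '123456人评价' into '123456'.

    Unlike the fixed [:-3] slice, this only removes the suffix when it is present."""
    text = text.strip()
    return text[: -len(suffix)] if text.endswith(suffix) else text


def first_or_null(values):
    """First value stripped, or 'null' when the list is empty (missing tagline)."""
    return values[0].strip() if values else "null"


def next_page_url(current_url, href):
    """Resolve a relative 'next page' href against the current page URL."""
    return urljoin(current_url, href)


print(join_stripped(["  Title A ", "/ Title B  "]))  # Title A/ Title B
print(clean_rating_count(" 123456人评价\n"))  # 123456
print(first_or_null([]))  # null
print(next_page_url("https://movie.douban.com/top250", "?start=25&filter="))
# https://movie.douban.com/top250?start=25&filter=
```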

Process the data in `pipelines.py`.

Write the scraped movie information to a JSON file:

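```python
import json


class DoubanmoviePipeline(object):
    # Called when the spider is opened: open the output file
    def open_spider(self, spider):
        self.file = open('data.json', 'w', encoding='utf-8')

    # Called for every item
    def process_item(self, item, spider):
        # Serialize the item; ensure_ascii=False keeps Chinese text readable.
        # The file ends up with one JSON object per line, not a single JSON array.
        line = json.dumps(dict(item), ensure_ascii=False) + "\n"
        # Write it to the file
        self.file.write(line)
        # Return the item so later pipelines still receive it
        return item

    # Called when the spider is closed: close the file
    def close_spider(self, spider):
        self.file.close()
```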
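The pipeline writes one `json.dumps(...)` result per line. That makes `data.json` a JSON Lines file rather than a single JSON array, so it should be read back line by line. The minimal stdlib sketch below uses made-up sample records and a temporary file in place of `data.json`. `write_json_lines` and `read_json_lines` are hypothetical helper names.

```python
import json
import os
import tempfile


def write_json_lines(path, records):
    """Write one JSON object per line, as DoubanmoviePipeline does."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            # ensure_ascii=False keeps Chinese characters instead of \uXXXX escapes
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


def read_json_lines(path):
    """Parse the file back: each non-empty line is a separate JSON document."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Made-up sample records using a subset of MovieItem's keys
sample = [
    {"id_num": "1", "name": "电影一", "rating": "9.0"},
    {"id_num": "2", "name": "电影二", "rating": "8.5"},
]
path = os.path.join(tempfile.mkdtemp(), "data.json")
write_json_lines(path, sample)
loaded = read_json_lines(path)
print(len(loaded), loaded[0]["name"])
```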

Download the poster images and save them locally (`ImagesPipeline` requires the Pillow package):

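```python
import scrapy
from scrapy.exceptions import DropItem
from scrapy.pipelines.images import ImagesPipeline


class ImagePipeline(ImagesPipeline):
    # Schedule a download for each item's poster URL
    def get_media_requests(self, item, info):
        yield scrapy.Request(item['img_url'])

    # results is a list of (success, info) tuples, one per request above
    def item_completed(self, results, item, info):
        image_paths = [x['path'] for ok, x in results if ok]
        if not image_paths:
            raise DropItem("Item contains no images")
        # Replace the URL with the stored file path(s), relative to IMAGES_STORE
        item['img_url'] = image_paths
        return item
```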
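`item_completed` receives `results` as a list of `(success, info)` tuples. For a successful download, `info` is a dict with keys such as `url`, `path` and `checksum`, and `path` is relative to `IMAGES_STORE`. By default Scrapy names each stored image after the SHA1 hash of its URL, inside a `full/` subfolder. The stdlib sketch below imitates both behaviours with made-up data. `default_image_path` and `successful_paths` are hypothetical helpers reproducing that naming and filtering, not Scrapy APIs.

```python
import hashlib


def default_image_path(url):
    """Hypothetical helper mirroring ImagesPipeline's default file naming:
    full/<sha1 of the URL>.jpg, relative to IMAGES_STORE."""
    return "full/%s.jpg" % hashlib.sha1(url.encode("utf-8")).hexdigest()


def successful_paths(results):
    """Same filtering as item_completed: keep the paths of successful downloads."""
    return [info["path"] for ok, info in results if ok]


# Made-up results: one successful download and one failure
url = "https://img.example.com/poster.jpg"  # hypothetical URL
results = [
    (True, {"url": url, "path": default_image_path(url), "checksum": "not-a-real-checksum"}),
    (False, Exception("download failed")),
]
paths = successful_paths(results)
print(paths)
```

With `IMAGES_STORE = 'E:\\img\\'` in settings.py, the posters would therefore land in `E:\img\full\`.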

Write the scraped data to a database. I used MySQL for testing:

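```python
import pymysql


class DBPipeline(object):
    def __init__(self, host, dbname, user, passwd):
        # Connect to the database
        self.connect = pymysql.connect(
            host=host,
            port=3306,
            database=dbname,
            user=user,
            password=passwd,
            charset='utf8',
            use_unicode=True)
        # Inserts, queries, updates and deletes go through the cursor
        self.cursor = self.connect.cursor()

    @classmethod
    def from_crawler(cls, crawler):
        # Read the MYSQL_* values defined in settings.py
        s = crawler.settings
        return cls(s.get('MYSQL_HOST'), s.get('MYSQL_DBNAME'),
                   s.get('MYSQL_USER'), s.get('MYSQL_PASSWD'))

    def process_item(self, item, spider):
        try:
            self.cursor.execute(
                """insert into doubanmovie(name, info, rating, num, quote, img_url, id_num)
                   values (%s, %s, %s, %s, %s, %s, %s)""",
                (item['name'], item['info'], item['rating'], item['num'],
                 item['quote'], item['img_url'], item['id_num']))
            # Commit the SQL statement
            self.connect.commit()
        except Exception as error:
            # On error, log it and undo the failed transaction
            spider.logger.error('Insert failed: %s', error)
            self.connect.rollback()
        return item

    def close_spider(self, spider):
        self.cursor.close()
        self.connect.close()
```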
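The post does not show the `doubanmovie` table itself. Below is one possible schema, tried out against Python's built-in `sqlite3` in memory so it runs without a MySQL server. The column names come from the `INSERT` in `DBPipeline`, but the column types are an assumption. MySQL/PyMySQL differs in two ways: the placeholder is `%s` instead of `?`, and you would run the `CREATE TABLE` inside the `douban` database.

```python
import sqlite3

# Assumed schema: columns taken from DBPipeline's INSERT, types are a guess
# (every value the spider extracts is a string).
CREATE_SQL = """
CREATE TABLE IF NOT EXISTS doubanmovie (
    name    TEXT,
    info    TEXT,
    rating  TEXT,
    num     TEXT,
    quote   TEXT,
    img_url TEXT,
    id_num  TEXT
)
"""

COLUMNS = ("name", "info", "rating", "num", "quote", "img_url", "id_num")
# sqlite3 uses ? placeholders; PyMySQL uses %s
INSERT_SQL = "INSERT INTO doubanmovie (%s) VALUES (%s)" % (
    ", ".join(COLUMNS), ", ".join("?" for _ in COLUMNS))

conn = sqlite3.connect(":memory:")
conn.execute(CREATE_SQL)

# Made-up item with the same keys as MovieItem
item = {"name": "Example Movie", "info": "Director: someone", "rating": "9.0",
        "num": "123456", "quote": "null",
        "img_url": "https://img.example.com/p.jpg", "id_num": "1"}
try:
    conn.execute(INSERT_SQL, tuple(item[c] for c in COLUMNS))
    conn.commit()  # like self.connect.commit() in DBPipeline
except sqlite3.Error:
    conn.rollback()
    raise

print(conn.execute("SELECT id_num, name, rating FROM doubanmovie").fetchall())
# [('1', 'Example Movie', '9.0')]
```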

Add the following to `settings.py`:

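```python
# Avoid being blocked (403 Forbidden) by sending a browser User-Agent
USER_AGENT = 'Mozilla/5.0 (Windows NT 6.3; WOW64; rv:45.0) Gecko/20100101 Firefox/45.0'

# If the crawl reports "Forbidden by robots.txt", change the existing
# ROBOTSTXT_OBEY line so Scrapy ignores robots.txt and the images download normally:
# ROBOTSTXT_OBEY = False

# The numbers set the order in which pipelines run: lower values run first
# (conventionally in the 0-1000 range)
ITEM_PIPELINES = {
    'doubanmovie.pipelines.DoubanmoviePipeline': 1,
    'doubanmovie.pipelines.ImagePipeline': 100,
    'doubanmovie.pipelines.DBPipeline': 10,
}

# Custom image storage path
IMAGES_STORE = 'E:\\img\\'

# Database settings
MYSQL_HOST = 'localhost'
MYSQL_DBNAME = 'douban'
MYSQL_USER = 'root'
MYSQL_PASSWD = '0000'
```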
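Scrapy runs the enabled pipelines in ascending order of these numbers, not in the order they appear in the dict. Sorting a copy of the same mapping shows the actual order. The JSON and MySQL pipelines both see the original poster URL, and only afterwards does `ImagePipeline` replace `img_url` with the local file path.

```python
# Same mapping as ITEM_PIPELINES in settings.py
ITEM_PIPELINES = {
    'doubanmovie.pipelines.DoubanmoviePipeline': 1,
    'doubanmovie.pipelines.ImagePipeline': 100,
    'doubanmovie.pipelines.DBPipeline': 10,
}

# Lower value = earlier in the chain; keep only the class name for display
run_order = [path.rsplit('.', 1)[1]
             for path, priority in sorted(ITEM_PIPELINES.items(), key=lambda kv: kv[1])]
print(' -> '.join(run_order))
# DoubanmoviePipeline -> DBPipeline -> ImagePipeline
```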

3. Troubleshooting

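Most of the errors I hit were caused by a missing package or file, and a quick web search turns up the fix.

Some errors came from combining code from different authors, which introduced logic errors. The error messages make these easy to track down.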
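Most of the errors come from missing modules, so it can help to first check which of the packages this project imports are actually installed. The small stdlib sketch below does that check. The module list is an assumption based on the code above: `PIL` is the import name of Pillow, which `ImagesPipeline` needs. `missing_modules` is a hypothetical helper name.

```python
import importlib.util

# Import names used by this project (Pillow is imported as PIL)
REQUIRED = ["scrapy", "pymysql", "PIL"]


def missing_modules(names):
    """Return the import names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_modules(REQUIRED)
if missing:
    print("Missing (install with pip; PIL comes from the Pillow package):", ", ".join(missing))
else:
    print("All required packages are installed")
```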

Full project: https://github.com/theSixthDay/doubanmovie.git