I've studied a lot of theory but haven't built up much hands-on practice yet, so now I'm working through one problem a day.

---------------------------Without small steps, there is no journey of a thousand miles---------------------------------------

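The Titanic data is split into train.csv and test.csv. Each row is one passenger and each column is one feature. In train.csv the Survived column holds the outcome (1 = survived, 0 = did not survive); test.csv has no Survived column. The plan is to train models on the train data, pick a good model and parameters, and then predict survival for every passenger in test. Since the true test labels are hidden, the predictions are checked by submitting them to Kaggle, which compares them with the real outcomes.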

1: Import the packages

#data processing and wrangling
import pandas as pd
import numpy as np
import random as rnd
#plotting
import matplotlib.pyplot as plt
import seaborn as sns
%matplotlib inline
#binary classification models; the random forest is the ensemble model
from sklearn.linear_model import LogisticRegression
from sklearn.svm import SVC, LinearSVC
from sklearn.naive_bayes import GaussianNB
from sklearn.linear_model import Perceptron
from sklearn.linear_model import SGDClassifier
from sklearn.ensemble import RandomForestClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

2: Load the data

train_df = pd.read_csv('train.csv')

test_df = pd.read_csv('test.csv')

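combine = [train_df,test_df] #a list can hold str, dict, int, float and also DataFrames; putting both frames in one list lets the same processing loop run over both datasets and keeps them consistent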
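Why looping over `combine` works: the list stores references to the two DataFrame objects, not copies, so assigning a new column through the loop variable changes the original frames. A minimal sketch with two tiny hypothetical frames (`toy_train` and `toy_test` are made-up stand-ins for `train_df` and `test_df`):

```python
import pandas as pd

# Hypothetical stand-ins for train_df and test_df
toy_train = pd.DataFrame({"Name": ["Braund, Mr. Owen Harris"], "Survived": [0]})
toy_test = pd.DataFrame({"Name": ["Kelly, Mr. James"]})

combine = [toy_train, toy_test]  # the list holds references, not copies
for dataset in combine:
    # Column assignment mutates the DataFrame object the list points to
    dataset["NameLength"] = dataset["Name"].str.len()

print("NameLength" in toy_train.columns, "NameLength" in toy_test.columns)
# True True
```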

3: Analyze the data

List the feature name of each column

print(train_df.columns.values) #alternatively list(train_df.columns.values) or list(train_df)

['PassengerId' 'Survived' 'Pclass' 'Name' 'Sex' 'Age' 'SibSp' 'Parch' 'Ticket' 'Fare' 'Cabin' 'Embarked']

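What kind of data does each column contain: numerical or categorical? Numerical data can be used in calculations; categorical data describes a quality, i.e. which group a passenger belongs to.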

train_df.head() #head is a method, so calling it needs parentheses; for the first ten rows use head(10)

So keep the following questions about the data in mind:

- Which features are available in the dataset?

- Which features are categorical?

- Which features are numerical?

- Which features are mixed data types?

- Which features may contain errors or typos?

- Which features contain blank, null or empty values?

- What are the data types for various features?

train_df.info()
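`info()` answers the dtype and null-value questions above in one call; `isnull().sum()` and `describe(include=['O'])` give a more compact view of missing values and of the string (object) columns. A small sketch on hypothetical rows shaped like train.csv (the values and the missing entries are made up):

```python
import numpy as np
import pandas as pd

# Hypothetical rows shaped like train.csv, with some missing values
df = pd.DataFrame({
    "Pclass": [3, 1, 3, 1],
    "Sex": ["male", "female", "female", "female"],
    "Age": [22.0, 38.0, np.nan, 35.0],
    "Cabin": [np.nan, "C85", np.nan, "C123"],
    "Embarked": ["S", "C", "S", None],
})

df.info()                          # dtype and non-null count of every column
missing = df.isnull().sum()        # number of missing values per column
print(missing)
print(df.describe(include=["O"]))  # count / unique / top / freq of the string columns
```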

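Look at how each column relates to Survived

Idea: filter-style feature selection, i.e. measure how much each feature on its own contributes to the outcome.

A simple way to do this: groupby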

train_df[['Pclass','Survived']].groupby(['Pclass'],as_index=False).mean().sort_values(by='Survived',ascending=False)

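This pairs the Pclass feature with the Survived outcome, groups by Pclass, and computes the survival rate of each class. One drawback: Pclass takes the values 1, 2, 3, which are really category labels whose contributions to θ·x should be on an equal footing, but as the numbers 1, 2, 3 they are given unequal magnitudes. They need one-hot encoding.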
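A minimal sketch of both steps on a hypothetical mini-sample (the real rates come from train.csv): the groupby chain computes the survival rate per class, and `pd.get_dummies` turns Pclass into one 0/1 column per class, so no class gets a larger numeric value than another.

```python
import pandas as pd

# Hypothetical mini-sample; not the real Titanic numbers
toy = pd.DataFrame({
    "Pclass":   [1, 1, 2, 2, 3, 3, 3, 3],
    "Survived": [1, 1, 1, 0, 0, 0, 1, 0],
})

# Survival rate per class, highest first
rates = (toy[["Pclass", "Survived"]]
         .groupby(["Pclass"], as_index=False)
         .mean()
         .sort_values(by="Survived", ascending=False))
print(rates)

# One-hot encode Pclass: one indicator column per class
dummies = pd.get_dummies(toy["Pclass"], prefix="Pclass")
print(dummies.columns.tolist())  # ['Pclass_1', 'Pclass_2', 'Pclass_3']
```

Each row of `dummies` has exactly one 1, so a linear model learns a separate weight per class instead of one weight multiplied by 1, 2 or 3.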

Look at how combinations of columns relate to Survived

grid = sns.FacetGrid(train_df, row='Embarked', col='Survived', height=2.2, aspect=1.6) #older seaborn versions called this parameter size
grid.map(sns.barplot, 'Sex', 'Fare', alpha=.5, ci=None)
grid.add_legend()
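Each bar in the grid is the mean Fare of one Sex inside one Embarked × Survived panel (barplot's default estimator is the mean). A hedged sketch of reading the same numbers without plotting, on hypothetical rows:

```python
import pandas as pd

# Hypothetical rows; the real values come from train_df
toy = pd.DataFrame({
    "Embarked": ["S", "S", "S", "C", "C", "C"],
    "Survived": [0, 1, 1, 0, 1, 1],
    "Sex":      ["male", "female", "male", "male", "female", "female"],
    "Fare":     [7.25, 53.1, 8.05, 30.0, 71.28, 79.2],
})

# One mean Fare per (Embarked, Survived, Sex) cell, i.e. one bar per cell
means = toy.groupby(["Embarked", "Survived", "Sex"])["Fare"].mean()
print(means)
```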

4: Data cleaning

Remove dirty data and features that don't contribute to the result.

train_df = train_df.drop(['Ticket','Cabin'],axis=1) #axis=1 drops columns
test_df = test_df.drop(['Ticket','Cabin'],axis = 1)
combine = [train_df,test_df] #drop returns new DataFrames, so drop from each frame separately, then rebuild combine
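Why the drop can't simply go inside the `for dataset in combine` loop: `drop` returns a new DataFrame, and rebinding the loop variable to it leaves the original objects unchanged. A small sketch with hypothetical frames (`train`, `test` stand in for `train_df`, `test_df`):

```python
import pandas as pd

# Hypothetical stand-ins for train_df / test_df
train = pd.DataFrame({"Ticket": ["A/5 21171"], "Cabin": [None], "Fare": [7.25]})
test = pd.DataFrame({"Ticket": ["330911"], "Cabin": [None], "Fare": [7.83]})
combine = [train, test]

# Rebinding the loop variable does not touch the frames stored in combine
for dataset in combine:
    dataset = dataset.drop(["Ticket", "Cabin"], axis=1)
print(train.columns.tolist())  # ['Ticket', 'Cabin', 'Fare']

# So drop from each frame explicitly, then rebuild the list
train = train.drop(["Ticket", "Cabin"], axis=1)
test = test.drop(["Ticket", "Cabin"], axis=1)
combine = [train, test]
print(train.columns.tolist())  # ['Fare']
```

Another option is `dataset.drop([...], axis=1, inplace=True)` inside the loop, which mutates each frame in place; rebuilding the list explicitly, as above, is easier to read.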

Create a new feature extracted from an existing one, i.e. re-engineer an old feature.

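We want to analyze whether the Name feature can be engineered to extract titles, and test the correlation between titles and survival, before dropping the Name and PassengerId features. This part is a bit of a dark art, but it teaches something useful: how to do regex work directly on a DataFrame. Names in the data look like "Braund, Mr. Owen Harris", so matching the word that ends in a period (here "Mr.") gives each passenger's title.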

for dataset in combine:
    dataset['Title'] = dataset.Name.str.extract(r'([A-Za-z]+)\.', expand=False) #dataset.ColumnName is equivalent to dataset['ColumnName']
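A quick check of the title regex on four names in the train.csv format. `str.extract` returns the first match of the capture group, which is the first run of letters immediately followed by a literal period. The character class should be `[A-Za-z]`: the range `A-z` would also match the ASCII characters between `Z` and `a`, such as `_` and `^`.

```python
import pandas as pd

names = pd.Series([
    "Braund, Mr. Owen Harris",
    "Cumings, Mrs. John Bradley (Florence Briggs Thayer)",
    "Heikkinen, Miss. Laina",
    "Palsson, Master. Gosta Leonard",
])

# Capture a run of letters immediately followed by "."
titles = names.str.extract(r"([A-Za-z]+)\.", expand=False)
print(titles.tolist())  # ['Mr', 'Mrs', 'Miss', 'Master']
```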

pd.crosstab(train_df['Title'],train_df['Sex']) #the first argument gives the index (rows), the second gives the columns

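Output (first rows; the columns are the values of Sex):

| Title | female | male |
|---|---|---|
| Capt | 0 | 1 |
| Col | 0 | 2 |
| Countess | 1 | 0 |
| Don | 0 | 1 |
| Dr | 1 | 6 |
| Jonkheer | 0 | 1 |
| Lady | 1 | 0 |
| Major | 0 | 2 |
| Master | 0 | 40 |

You can take this a step further: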

pd.crosstab(train_df['Title'], train_df['Sex'], values=train_df['Survived'], aggfunc='sum') #sum Survived within each cell, i.e. the number of survivors per Title/Sex
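Side by side on hypothetical rows: a plain crosstab counts passengers per cell, while `values=` plus `aggfunc='sum'` adds up Survived in each cell, giving the number of survivors. Cells with no passengers come out as NaN in the second table rather than 0.

```python
import pandas as pd

# Hypothetical rows; the real table comes from train_df
toy = pd.DataFrame({
    "Title":    ["Mr", "Mr", "Mrs", "Miss", "Miss", "Dr"],
    "Sex":      ["male", "male", "female", "female", "female", "female"],
    "Survived": [0, 1, 1, 1, 0, 1],
})

counts = pd.crosstab(toy["Title"], toy["Sex"])  # passengers per cell
survivors = pd.crosstab(toy["Title"], toy["Sex"],
                        values=toy["Survived"], aggfunc="sum")  # survivors per cell
print(counts)
print(survivors)
```

Dividing `survivors` by `counts` gives the survival rate per Title/Sex cell.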

Replace feature values:

for dataset in combine:
    dataset['Title'] = dataset['Title'].replace(['Lady', 'Countess', 'Capt', 'Col',
        'Don', 'Dr', 'Major', 'Rev', 'Sir', 'Jonkheer', 'Dona'], 'Rare') #replace(x, y) replaces x with y
    dataset['Title'] = dataset['Title'].replace('Mlle', 'Miss')
    dataset['Title'] = dataset['Title'].replace('Ms', 'Miss')
    dataset['Title'] = dataset['Title'].replace('Mme', 'Mrs')

train_df[['Title', 'Survived']].groupby(['Title'], as_index=False).mean()

title_mapping = {"Mr": 1, "Miss": 2, "Mrs": 3, "Master": 4, "Rare": 5}

for dataset in combine:
    dataset['Title'] = dataset['Title'].map(title_mapping)
    dataset['Title'] = dataset['Title'].fillna(0)
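The replace / map / fillna steps on a single hypothetical Series. `map` turns any title missing from the dict into NaN, which `fillna(0)` then sets to 0. "Herr" is a made-up unmapped value used only to show that path. The dict form of `replace` performs the three one-to-one replacements in a single call.

```python
import pandas as pd

# Hypothetical titles; "Herr" stands in for a title not covered by the mapping
titles = pd.Series(["Mr", "Mlle", "Countess", "Mme", "Ms", "Master", "Capt", "Herr"])

# Fold rare titles into one bucket, then normalise variant spellings
titles = titles.replace(["Lady", "Countess", "Capt", "Col", "Don", "Dr",
                         "Major", "Rev", "Sir", "Jonkheer", "Dona"], "Rare")
titles = titles.replace({"Mlle": "Miss", "Ms": "Miss", "Mme": "Mrs"})

# Map titles to integer codes; unmapped titles become NaN, then 0
title_mapping = {"Mr": 1, "Miss": 2, "Mrs": 3, "Master": 4, "Rare": 5}
codes = titles.map(title_mapping).fillna(0).astype(int)
print(codes.tolist())  # [1, 2, 5, 3, 2, 4, 5, 0]
```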

train_df.head()

###########################Processing the data further##############################

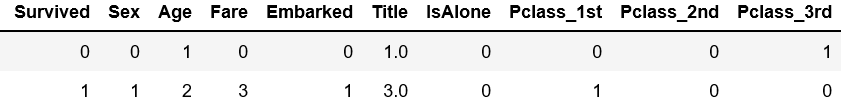

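Dummy-variable encoding (I added this second round of processing after running the modeling below and comparing the results; all the predictions below use the data without dummy encoding)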

dict_Pclass = {1:'1st',2:'2nd',3:'3rd'}

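#Treating Pclass as int (astype(int)) never felt right, because economy (3), member (2) and premium gold member (1) are categories on an equal footing. So I changed it back: strings replace the numbers 1, 2, 3, and get_dummies encodes them.

train_df["Pclass"] = train_df["Pclass"].map(dict_Pclass)

test_df["Pclass"] = test_df["Pclass"].map(dict_Pclass)

test_df = test_df.drop(['Age*Pclass'],axis = 1)

train_df = pd.get_dummies(train_df) #turns each string column into 0/1 indicator features: 1 if the sample has that value, 0 otherwise
test_df = pd.get_dummies(test_df) #pro: easier to compute with; con: one feature is spread thinly across several columns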
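One pitfall worth checking when `get_dummies` runs on train and test separately: if a category appears in only one of them, the two frames end up with different columns, and a model fitted on one cannot score the other. This may not happen with the real Titanic files; the sketch below uses hypothetical frames in which test has no 2nd-class passengers, and aligns test to the training columns with `reindex`.

```python
import pandas as pd

# Hypothetical frames: the test split contains no "2nd" passengers
train = pd.DataFrame({"Pclass": ["1st", "2nd", "3rd"], "Fare": [80.0, 13.0, 7.25]})
test = pd.DataFrame({"Pclass": ["1st", "3rd"], "Fare": [60.0, 7.75]})

train_dummies = pd.get_dummies(train)
test_dummies = pd.get_dummies(test)
print(train_dummies.columns.tolist())  # ['Fare', 'Pclass_1st', 'Pclass_2nd', 'Pclass_3rd']
print(test_dummies.columns.tolist())   # ['Fare', 'Pclass_1st', 'Pclass_3rd']

# Give test exactly the training columns; missing dummy columns are filled with 0
test_dummies = test_dummies.reindex(columns=train_dummies.columns, fill_value=0)
print(test_dummies.columns.tolist())  # ['Fare', 'Pclass_1st', 'Pclass_2nd', 'Pclass_3rd']
```

In the real pipeline the alignment target would be the feature columns of X_train rather than train_df itself, since train_df also carries Survived and test_df carries PassengerId.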

Accuracy on the training set after this processing, compared with the unprocessed data (%):

| Model | With dummy variables | Without (original) |
|---|---|---|
| LR | 80.92 | 81.37 |
| SVC | 83.28 | 83.95 |
| kNN | 84.51 | 84.96 |
| naive_bayes | 75.53 | 73.73 |
| perceptron | 81.14 | 74.06 |
| linear_SVC | 81.93 | 81.14 |
| Decision_Tree | 86.76 | 86.76 |
| Random_Forest | 86.64 | 86.64 |

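In principle, after the dummy-variable transformation the linear models should all become more accurate, so I don't know why LR, SVC and kNN went down. Is it because the sample is so small that the effect doesn't show? (Note that these are accuracies on the training set itself, so they measure how well each model fits data it has already seen, not how well it generalizes to new passengers.)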
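A fairer way to compare the two versions of the data is cross-validation: each fold is scored on rows the model did not see during fitting, whereas the accuracies compared above reward memorization (a fully grown decision tree can score close to 100% on its own training data). A hedged sketch on synthetic data from `make_classification`, not the Titanic features:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for X_train / y_train (891 rows, the size of train.csv)
X, y = make_classification(n_samples=891, n_features=8, random_state=0)

results = {}
for name, model in [("LR", LogisticRegression(max_iter=1000)),
                    ("Decision_Tree", DecisionTreeClassifier(random_state=0))]:
    train_acc = model.fit(X, y).score(X, y)             # accuracy on rows the model was fitted on
    cv_acc = cross_val_score(model, X, y, cv=5).mean()  # mean accuracy over 5 held-out folds
    results[name] = (train_acc, cv_acc)
    print(f"{name}: train={train_acc:.3f}  5-fold CV={cv_acc:.3f}")
```

Running the same loop once on the dummy-encoded X_train and once on the original one would show whether the encoding helps on held-out data.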

################In short, a first pass of data processing is done###################

X_train = train_df.drop(['Survived'],axis = 1)

y_train = train_df['Survived']

X_test = test_df.drop(['PassengerId'],axis=1).copy() #copy() makes an independent copy (pass by value)

#Logistic Regression

logist = LogisticRegression() #instantiate the class

logist.fit(X_train,y_train) #call the class's fit method to train on the data; the return value isn't needed

y_predict = logist.predict(X_test)

acc_log = round(logist.score(X_train, y_train) * 100, 2)

acc_log

#81.370000000000005

coeff_df = pd.DataFrame({'Feature': X_train.columns, 'Correlation': logist.coef_[0]}) #pair each feature name with its learned coefficient

coeff_df.sort_values(by='Correlation', ascending=False)

svc = SVC()

svc.fit(X_train,y_train)

svc_predict = svc.predict(X_test)

acc_svc = round(svc.score(X_train,y_train ) *100,2) #mean accuracy of the model on the given data (here, the training set)

print(acc_svc)

#83.95

kn = KNeighborsClassifier()

kn.fit(X_train,y_train)

y_test = kn.predict(X_test)

acc_knn = round(kn.score(X_train,y_train) * 100, 2)

acc_knn

#84.959999999999994

gaussian = GaussianNB()

gaussian.fit(X_train, y_train)

y_test = gaussian.predict(X_test)

acc_gaus = round(gaussian.score(X_train,y_train) * 100, 2)

acc_gaus

#73.739999999999995

percep = Perceptron()

percep.fit(X_train, y_train)

y_test = percep.predict(X_test)

acc_per = round(percep.score(X_train, y_train) * 100, 2)

acc_per

#74.069999999999993

l_svc = LinearSVC()

l_svc.fit(X_train,y_train)

y_test = l_svc.predict(X_test)

acc_lsvc = round(l_svc.score(X_train, y_train) * 100 , 2)

acc_lsvc

#81.140000000000001

deci_tre = DecisionTreeClassifier()

deci_tre.fit(X_train,y_train)

deci_tre_y_test = deci_tre.predict(X_test)

acc_deci = round(deci_tre.score(X_train, y_train) * 100 , 2)

acc_deci

#86.760000000000005

ran_fo = RandomForestClassifier()

ran_fo.fit(X_train, y_train )

y_test = ran_fo.predict(X_test)

acc_ran_fo = round(ran_fo.score(X_train, y_train) * 100, 2)

acc_ran_fo

#86.640000000000001
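The model blocks above repeat the same fit / score pattern. A hedged sketch of collecting the scores in one loop and ranking them in a table; it uses synthetic data from `make_classification` and a shortened model list, and keeps the same metric as above (training accuracy in percent):

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for X_train / y_train
X_train, y_train = make_classification(n_samples=300, n_features=6, random_state=1)

models = {
    "Logistic Regression": LogisticRegression(max_iter=1000),
    "KNN": KNeighborsClassifier(),
    "Decision Tree": DecisionTreeClassifier(random_state=1),
    "Random Forest": RandomForestClassifier(random_state=1),
}

rows = []
for name, model in models.items():
    model.fit(X_train, y_train)
    # Accuracy on the training data, in percent
    rows.append({"Model": name, "Score": round(model.score(X_train, y_train) * 100, 2)})

ranking = pd.DataFrame(rows).sort_values(by="Score", ascending=False)
print(ranking)
```

Adding a model then means adding one dict entry instead of copying another block.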

submission = pd.DataFrame({
    "PassengerId": test_df["PassengerId"],
    "Survived": y_test #y_test was last assigned the Random Forest predictions
})

submission.to_csv("Titanic_submission.csv",index=False)
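Kaggle expects exactly two columns, PassengerId and Survived, with one row per test passenger and 0/1 labels. A small sketch that builds the same frame from hypothetical predictions and writes it to an in-memory buffer instead of a file, to show the resulting CSV:

```python
import io

import numpy as np
import pandas as pd

# Hypothetical stand-ins: test PassengerIds and 0/1 predictions from a classifier
passenger_ids = pd.Series([892, 893, 894], name="PassengerId")
predictions = np.array([0, 1, 0])

submission = pd.DataFrame({"PassengerId": passenger_ids,
                           "Survived": predictions.astype(int)})

buffer = io.StringIO()  # in-memory "file" for this sketch
submission.to_csv(buffer, index=False)  # index=False: no extra index column
print(buffer.getvalue())
```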