前言

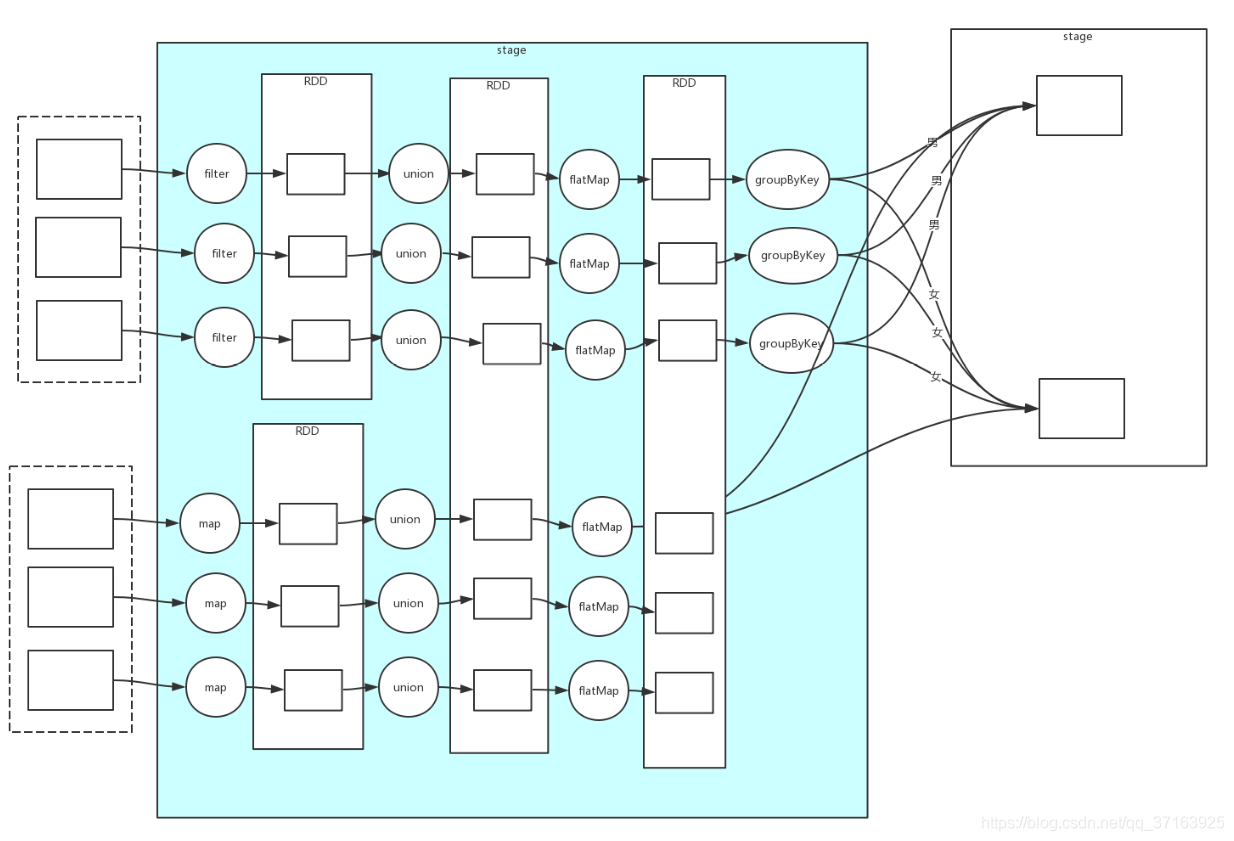

我们知道,Spark是惰性计算的,只有遇到Action算子时,才会发生计算过程,那么这个计算过程是如何发生的呢?首先,DAG Scheduler会通过shuffle操作来划分Stage,所以在一个Stage中的任务一定是窄依赖,也就是说,它们不需要依赖其他节点的计算就能完成自己的任务,即一个Stage里的任务可以并行计算。

注:本人使用的Spark源码版本为2.3.0,IDE为IDEA2019,对源码感兴趣的同学可以点击这里下载源码包,直接解压用IDEA打开即可。

那么DAG Scheduler是如何划分Stage的?我们以wordCount为例,程序运行到foreach操作的时候,调用SparkContext的Runjob方法。

正文

/**

* Applies a function f to all elements of this RDD.

*/

def foreach(f: T => Unit): Unit = withScope {

val cleanF = sc.clean(f)

sc.runJob(this, (iter: Iterator[T]) => iter.foreach(cleanF))

}

def runJob[T, U: ClassTag](

rdd: RDD[T],

func: (TaskContext, Iterator[T]) => U,

partitions: Seq[Int],

resultHandler: (Int, U) => Unit): Unit = {

if (stopped.get()) {

throw new IllegalStateException("SparkContext has been shutdown")

}

val callSite = getCallSite

val cleanedFunc = clean(func)

logInfo("Starting job: " + callSite.shortForm)

if (conf.getBoolean("spark.logLineage", false)) {

logInfo("RDD's recursive dependencies:\n" + rdd.toDebugString)

}

//注意,这里我们可以看到,由DAG Scheduler来对任务进行划分,进入这个方法

dagScheduler.runJob(rdd, cleanedFunc, partitions, callSite, resultHandler, localProperties.get)

progressBar.foreach(_.finishAll())

rdd.doCheckpoint()

}

def runJob[T, U](

rdd: RDD[T],

func: (TaskContext, Iterator[T]) => U,

partitions: Seq[Int],

callSite: CallSite,

resultHandler: (Int, U) => Unit,

properties: Properties): Unit = {

val start = System.nanoTime

//这里调用了submitJob并把RDD也传进去了,进入这个方法

val waiter = submitJob(rdd, func, partitions, callSite, resultHandler, properties)

ThreadUtils.awaitReady(waiter.completionFuture, Duration.Inf)

waiter.completionFuture.value.get match {

case scala.util.Success(_) =>

logInfo("Job %d finished: %s, took %f s".format

(waiter.jobId, callSite.shortForm, (System.nanoTime - start) / 1e9))

case scala.util.Failure(exception) =>

logInfo("Job %d failed: %s, took %f s".format

(waiter.jobId, callSite.shortForm, (System.nanoTime - start) / 1e9))

// SPARK-8644: Include user stack trace in exceptions coming from DAGScheduler.

val callerStackTrace = Thread.currentThread().getStackTrace.tail

exception.setStackTrace(exception.getStackTrace ++ callerStackTrace)

throw exception

}

}

def submitJob[T, U](

rdd: RDD[T],

func: (TaskContext, Iterator[T]) => U,

partitions: Seq[Int],

callSite: CallSite,

resultHandler: (Int, U) => Unit,

properties: Properties): JobWaiter[U] = {

// Check to make sure we are not launching a task on a partition that does not exist.

val maxPartitions = rdd.partitions.length

partitions.find(p => p >= maxPartitions || p < 0).foreach { p =>

throw new IllegalArgumentException(

"Attempting to access a non-existent partition: " + p + ". " +

"Total number of partitions: " + maxPartitions)

}

val jobId = nextJobId.getAndIncrement()

if (partitions.size == 0) {

// Return immediately if the job is running 0 tasks

return new JobWaiter[U](this, jobId, 0, resultHandler)

}

assert(partitions.size > 0)

val func2 = func.asInstanceOf[(TaskContext, Iterator[_]) => _]

val waiter = new JobWaiter(this, jobId, partitions.size, resultHandler)

//这里创建了一个waiter实例来等待job划分执行结束,并使用了一个线程池来处理job的划分工作,我们应该关注这个eventProcessLoop

//eventProcessLoop.post这里将JobSubmitted这个样例类推送到eventProcessLoop.eventQueue中去,然后由run方法来消费

eventProcessLoop.post(JobSubmitted(

jobId, rdd, func2, partitions.toArray, callSite, waiter,

SerializationUtils.clone(properties)))

waiter

}

private[spark] abstract class EventLoop[E](name: String) extends Logging {

private val eventQueue: BlockingQueue[E] = new LinkedBlockingDeque[E]()

private val stopped = new AtomicBoolean(false)

private val eventThread = new Thread(name) {

setDaemon(true)

override def run(): Unit = {

try {

while (!stopped.get) {

val event = eventQueue.take()

try {

onReceive(event)

} catch {

case NonFatal(e) =>

try {

onError(e)

} catch {

case NonFatal(e) => logError("Unexpected error in " + name, e)

}

}

}

} catch {

case ie: InterruptedException => // exit even if eventQueue is not empty

case NonFatal(e) => logError("Unexpected error in " + name, e)

}

}

}

这个EventLoop的start方法是异步调用的,通过Eventloop的onstart方法调用。这里用于代码太长就不贴了。回到DAG Scheduler.scala,我们已经知道它创建了一个eventProcessLoop对象,然后在DAG Scheduler的末尾调用了eventProcessLoop.start()方法。这个线程的run方法在这里被调用。一直等待消费eventQueue的消息。然后eventProcessLoop.post方法会往eventQueue里面投放数据。进程消费时会调用doOnreceive()方法并在这个方法里面进行样例类的匹配,这里会匹配到JobSubmitted样例类,跳回DAG Scheduler类,也就是说,它调用了EventLoop 类实例,最终又调回了自己的类方法。

private def doOnReceive(event: DAGSchedulerEvent): Unit = event match {

case JobSubmitted(jobId, rdd, func, partitions, callSite, listener, properties) =>

dagScheduler.handleJobSubmitted(jobId, rdd, func, partitions, callSite, listener, properties)

为什么要这么做呢?其实是为了让多线程在运行时有一个顺序,如果把调用EventLoop这一段操作去掉,它就变成线性的了,多线程运行时也无法保证它的顺序性。

进入handleJobSubmitted()方法,可以看到它将最后一个RDD(Final RDD)传入进去了,这里调用createResultStage()方法创建resultStage,resultStage是一个Job中最后一个Stage。然后继续看代码,下面执行了submitStage()方法,这个方法会递归地调用,从第一个stage开始,到最后一个Stage,直到所有的Stage都提交完毕,submitStage()又调用了getMissParentStage()方法,在这里划分Stage为ShuffleMapStage和ResultStage。除了最后一个Stage,其他Stage都被称为ShuffleMapStage。

private def getMissingParentStages(stage: Stage): List[Stage] = {

val missing = new HashSet[Stage]

val visited = new HashSet[RDD[_]]

// We are manually maintaining a stack here to prevent StackOverflowError

// caused by recursively visiting

val waitingForVisit = new Stack[RDD[_]]

def visit(rdd: RDD[_]) {

if (!visited(rdd)) {

visited += rdd

val rddHasUncachedPartitions = getCacheLocs(rdd).contains(Nil)

if (rddHasUncachedPartitions) {

for (dep <- rdd.dependencies) {

dep match {

case shufDep: ShuffleDependency[_, _, _] =>

val mapStage = getOrCreateShuffleMapStage(shufDep, stage.firstJobId)

if (!mapStage.isAvailable) {

missing += mapStage

}

case narrowDep: NarrowDependency[_] =>

waitingForVisit.push(narrowDep.rdd)

}

}

}

}

}

waitingForVisit.push(stage.rdd)

while (waitingForVisit.nonEmpty) {

visit(waitingForVisit.pop())

}

missing.toList

}

这里通过一个Stack来将RDD压栈,如果是窄依赖,就将递归地将RDD压入栈中,这个RDD是一个Stage的最后一个RDD。取出时又将RDD取出,分别调用了SubmitStage()方法。

总结

DAG Scheduler划分Stage的过程就是依靠RDD之间的依赖关系来决定的,Action算子触发DAG Scheduler,DAG Scheduler通过依赖关系将其划分为ShuffleMapStage和ResultStage。其中最重要的方法是getMissingParentStages。其底层依靠栈结构和递归来实现对RDD的遍历和Stage的划分。通过最后一个RDD,一直递归向前遍历,触底之后开始从前往后提交Stage。

文章结构比较混乱,一方面自己对Spark还没有很熟,另一方面源码还是不容易讲的。下一篇想要讲讲TaskScheduler分配任务的过程。