前言

本节学习PCA(主成分分析)

- 非监督机器学习

- 主要用于数据降维

内容包括

- 实现底层逻辑

- 使用scikit库

1、PCA原理与实现

PCA说白了就是在尽可能保留信息的情况下,将高维数据映射到低维

PCA说白了就是在尽可能保留信息的情况下,将高维数据映射到低维

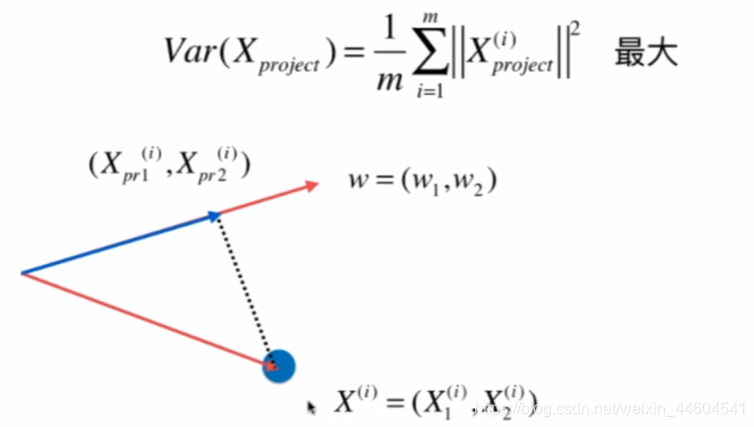

过程如下

- 样本均值归零(demean)

- 求一个轴的方向w

- 将所有样本映射到w后方差最大

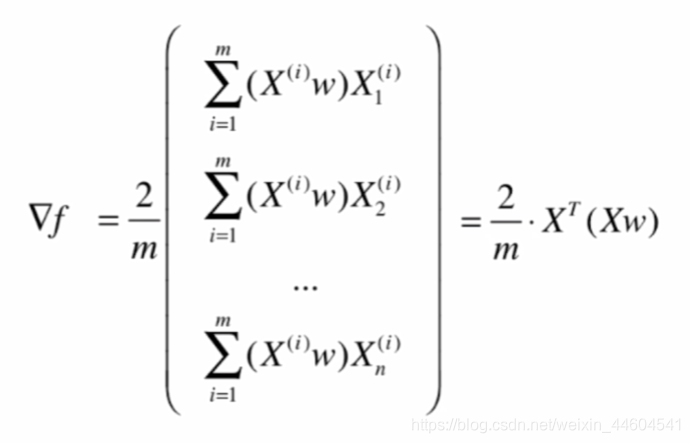

用梯度上升法实现

梯度是

实现如下

import numpy as np

import matplotlib.pyplot as plt

"""使用梯度上升法实现PCA"""

# 数据准备

X = np.empty((100, 2))

X[:,0] = np.random.uniform(0., 100., size=100)

X[:,1] = 0.75 * X[:,0] + 3. + np.random.normal(0, 10., size=100)

plt.scatter(X[:,0], X[:,1])

plt.show() #看一下我们的数据长啥样

# demean

def demean(X):

return X - np.mean(X, axis=0) #减去每个特征的均值,即每列的均值

X_demean = demean(X)

plt.scatter(X_demean[:,0], X_demean[:,1])

plt.show() #分布一致,坐标轴变换

# 梯度上升法

# 目标函数

def f(w, X):

return np.sum((X.dot(w) ** 2)) / len(X)

# 梯度

def df_math(w, X):

return X.T.dot(X.dot(w)) * 2. / len(X)

# 梯度调试

def df_debug(w, X, epsilon=0.0001):

res = np.empty(len(w))

for i in range(len(w)):

w_1 = w.copy()

w_1[i] += epsilon

w_2 = w.copy()

w_2[i] -= epsilon

res[i] = (f(w_1, X) - f(w_2, X)) / (2 * epsilon)

return res

# w是个方向,即单位向量

def direction(w):

return w / np.linalg.norm(w)

# 梯度上升

def gradient_ascent(df, X, initial_w, eta, n_iters=1e4, epsilon=1e-8):

w = direction(initial_w)

cur_iter = 0

while cur_iter < n_iters:

gradient = df(w, X)

last_w = w

w = w + eta * gradient

w = direction(w) # 注意:每次求一个单位方向

if (abs(f(w, X) - f(last_w, X)) < epsilon):

break

cur_iter += 1

return w

# 实施

initial_w = np.random.random(X.shape[1]) # 注意:初始值不能用0向量开始

eta = 0.001

# 注意:不能使用StandardScaler标准化数据,标准化会把方差干掉

PCA_debug = gradient_ascent(df_debug, X_demean, initial_w, eta)

print(PCA_debug)

PCA = gradient_ascent(df_math, X_demean, initial_w, eta)

print(PCA)

w = gradient_ascent(df_math, X_demean, initial_w, eta)

plt.scatter(X_demean[:,0], X_demean[:,1])

plt.plot([0, w[0]*30], [0, w[1]*30], color='r')

plt.show()

# 尝试使用极端数据

X2 = np.empty((100, 2))

X2[:,0] = np.random.uniform(0., 100., size=100)

X2[:,1] = 0.75 * X2[:,0] + 3.

plt.scatter(X2[:,0], X2[:,1])

plt.show()

X2_demean = demean(X2)

w2 = gradient_ascent(df_math, X2_demean, initial_w, eta)

print(w2)

plt.scatter(X2_demean[:,0], X2_demean[:,1])

plt.plot([0, w2[0]*30], [0, w2[1]*30], color='r')

plt.show()2、前n个主成分

上面实现了一个主成分

那我们处理高维数据时

最终降维到k个主成分

原理就是

去掉之前主成分的影响后

再求主成分

实现如下

import numpy as np

import matplotlib.pyplot as plt

"""获取前n个主成分"""

# 数据

X = np.empty((100, 2))

X[:,0] = np.random.uniform(0., 100., size=100)

X[:,1] = 0.75 * X[:,0] + 3. + np.random.normal(0, 10., size=100)

# demean

def demean(X):

return X - np.mean(X, axis=0)

X = demean(X)

# 目标函数

def f(w, X):

return np.sum((X.dot(w) ** 2)) / len(X)

# 梯度

def df(w, X):

return X.T.dot(X.dot(w)) * 2. / len(X)

# 方向的单位向量

def direction(w):

return w / np.linalg.norm(w)

# 梯度上升

def first_component(X, initial_w, eta, n_iters=1e4, epsilon=1e-8):

w = direction(initial_w)

cur_iter = 0

while cur_iter < n_iters:

gradient = df(w, X)

last_w = w

w = w + eta * gradient

w = direction(w)

if (abs(f(w, X) - f(last_w, X)) < epsilon):

break

cur_iter += 1

return w

# 第一主成分

initial_w = np.random.random(X.shape[1])

eta = 0.01

w1 = first_component(X, initial_w, eta)

print(w1)

# 第二主成分

"""

X2 = np.empty(X.shape)

for i in range(len(X)):

X2[i] = X[i] - X[i].dot(w) * w

"""

X2 = X - X.dot(w1).reshape(-1, 1) * w1 #向量化

plt.scatter(X2[:,0], X2[:,1])

plt.show()

w2 = first_component(X2, initial_w, eta)

print(w2)

print(w1.dot(w2)) #验证,应该为0封装成函数

def first_n_components(n, X, eta=0.01, n_iters=1e4, epsilon=1e-8):

X_pca = X.copy()

X_pca = demean(X_pca)

res = []

for i in range(n):

initial_w = np.random.random(X_pca.shape[1])

w = first_component(X_pca, initial_w, eta)

res.append(w)

X_pca = X_pca - X_pca.dot(w).reshape(-1, 1) * w

return res

w = first_n_components(2, X)

print(w)3、将PCA封装成函数

import numpy as np

"""PCA的函数封装"""

class PCA:

def __init__(self, n_components):

"""初始化PCA"""

assert n_components >= 1, "n_components must be valid"

self.n_components = n_components

self.components_ = None

def fit(self, X, eta=0.01, n_iters=1e4):

"""获得数据集X的前n个主成分"""

assert self.n_components <= X.shape[1], \

"n_components must not be greater than the feature number of X"

# demean

def demean(X):

return X - np.mean(X, axis=0)

# 目标函数

def f(w, X):

return np.sum((X.dot(w) ** 2)) / len(X)

# 梯度

def df(w, X):

return X.T.dot(X.dot(w)) * 2. / len(X)

# 方向

def direction(w):

return w / np.linalg.norm(w)

# 梯度上升法

def first_component(X, initial_w, eta=0.01, n_iters=1e4, epsilon=1e-8):

w = direction(initial_w)

cur_iter = 0

while cur_iter < n_iters:

gradient = df(w, X)

last_w = w

w = w + eta * gradient

w = direction(w)

if (abs(f(w, X) - f(last_w, X)) < epsilon):

break

cur_iter += 1

return w

X_pca = demean(X)

self.components_ = np.empty(shape=(self.n_components, X.shape[1]))

for i in range(self.n_components):

initial_w = np.random.random(X_pca.shape[1]) #初始方向

w = first_component(X_pca, initial_w, eta, n_iters) #得到主成分

self.components_[i,:] = w

X_pca = X_pca - X_pca.dot(w).reshape(-1, 1) * w #去掉得到的主成分

return self

def transform(self, X):

"""将给定的X,映射到各个主成分分量中"""

assert X.shape[1] == self.components_.shape[1]

return X.dot(self.components_.T)

def inverse_transform(self, X):

"""将给定的X,反向映射回原来的特征空间"""

assert X.shape[1] == self.components_.shape[0]

return X.dot(self.components_)

def __repr__(self):

return "PCA(n_components=%d)" % self.n_components4、使用scikit库

前面是自己实现底层逻辑

在scikit中有封装好的可用库

import numpy as np

import matplotlib.pyplot as plt

from sklearn.decomposition import PCA

"""使用scikit的库实现PCA"""

# 数据

X = np.empty((100, 2))

X[:,0] = np.random.uniform(0., 100., size=100)

X[:,1] = 0.75 * X[:,0] + 3. + np.random.normal(0, 10., size=100)

# pca

pca = PCA(n_components=1)

pca.fit(X)

print(pca.components_)

# 降维

X_reduction = pca.transform(X)

X_restore = pca.inverse_transform(X_reduction)

print(X_reduction.shape)

print(X_restore.shape)

plt.scatter(X[:,0], X[:,1], color='b', alpha=0.5)

plt.scatter(X_restore[:,0], X_restore[:,1], color='r', alpha=0.5)

plt.show()对真实数据集进行使用

import numpy as np

import matplotlib.pyplot as plt

from sklearn.decomposition import PCA

# 真实数据集,手写识别

from sklearn import datasets

digits = datasets.load_digits()

X = digits.data

y = digits.target

# 划分test和train

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=666)

print(X_train.shape)

# kNN

from sklearn.neighbors import KNeighborsClassifier

knn_clf = KNeighborsClassifier() #默认参数

knn_clf.fit(X_train, y_train)

print(knn_clf.score(X_test, y_test))

# PCA后

pca = PCA(n_components=2)

pca.fit(X_train)

X_train_reduction = pca.transform(X_train)

X_test_reduction = pca.transform(X_test)

knn_clf = KNeighborsClassifier()

knn_clf.fit(X_train_reduction, y_train)

print(knn_clf.score(X_test_reduction, y_test)) #降到2维后精度太低了

# 需要选取合适的特征数

pca = PCA(0.95) #可以解释95%的数据的特征数

pca.fit(X_train)

print(pca.n_components_) #查看特征数

X_train_reduction = pca.transform(X_train)

X_test_reduction = pca.transform(X_test)

knn_clf = KNeighborsClassifier()

knn_clf.fit(X_train_reduction, y_train)

print(knn_clf.score(X_test_reduction, y_test)) #时间快很多,精度稍微减小

# 可视化

pca = PCA(n_components=2) #可视化总是降到2维或3维

pca.fit(X)

X_reduction = pca.transform(X)

for i in range(10):

plt.scatter(X_reduction[y==i,0], X_reduction[y==i,1], alpha=0.8)

plt.show()5、用PCA降噪

PCA还可以用来降噪

import numpy as np

import matplotlib.pyplot as plt

from sklearn.decomposition import PCA

from sklearn import datasets

"""用PCA降噪"""

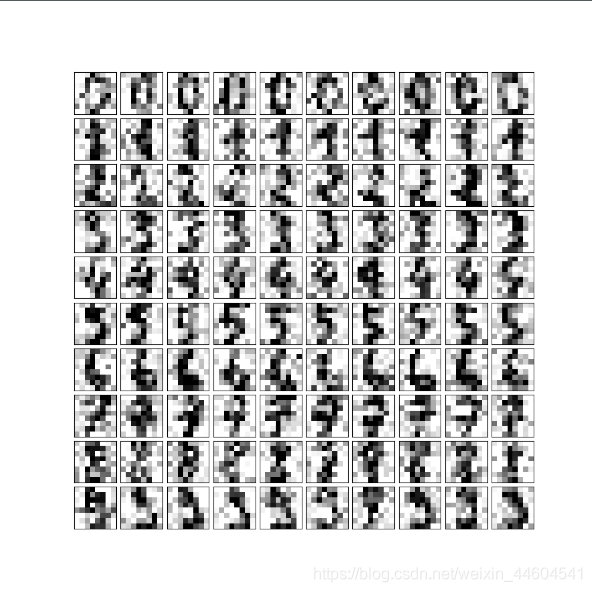

# 数据,手写识别

digits = datasets.load_digits()

X = digits.data

y = digits.target

noisy_digits = X + np.random.normal(0, 4, size=X.shape) #加噪音

example_digits = noisy_digits[y==0,:][:10]

for num in range(1,10):

example_digits = np.vstack([example_digits, noisy_digits[y==num,:][:10]])

print(example_digits.shape)

# 绘图

def plot_digits(data):

fig, axes = plt.subplots(10, 10, figsize=(10, 10),

subplot_kw={'xticks': [], 'yticks': []},

gridspec_kw=dict(hspace=0.1, wspace=0.1))

for i, ax in enumerate(axes.flat):

ax.imshow(data[i].reshape(8, 8),

cmap='binary', interpolation='nearest',

clim=(0, 16))

plt.show()

# 原图

plot_digits(example_digits)

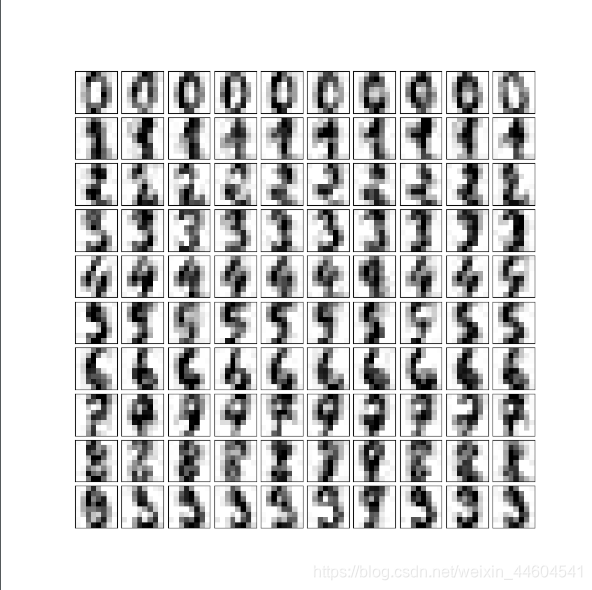

# PCA后

pca = PCA(0.5).fit(noisy_digits)

print(pca.n_components_) #特征数

components = pca.transform(example_digits) #降维

filtered_digits = pca.inverse_transform(components) #升维

plot_digits(filtered_digits) #噪音去除原图和降噪效果如下

结语

学习了PCA的原理和用法

下一节准备再MNIST上使用下试试