Original (3.2.0-dev): http://docs.opencv.org/master/d4/d18/tutorial_sfm_scene_reconstruction.html

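In this tutorial you will learn how to use the reconstruction API for sparse reconstruction:

- Load a file containing a list of image paths.

- Run the libmv reconstruction pipeline.

- Show the obtained results using Viz.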

1. Middlebury temple

Using the following image sequence [1] and the following camera parameters, we can compute the sparse 3D reconstruction:

./example_sfm_scene_reconstruction image_paths_file.txt 800 400 225

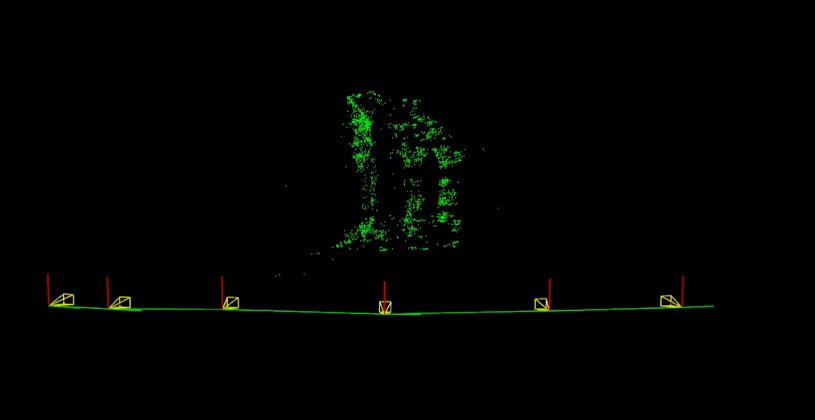

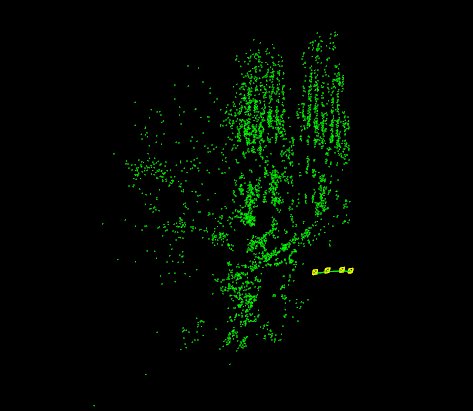

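The resulting picture shows the obtained camera motion together with the estimated sparse 3D reconstruction.

2. Sagrada Familia

Using the following image sequence [2] and the following camera parameters, we can compute the sparse 3D reconstruction:

./example_sfm_scene_reconstruction image_paths_file.txt 350 240 360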

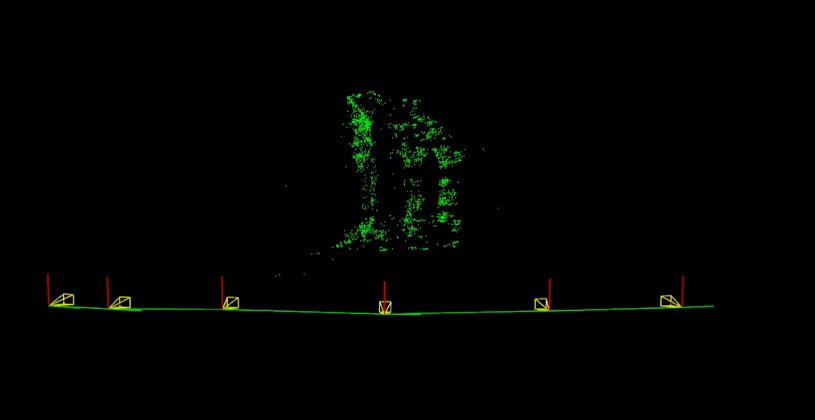

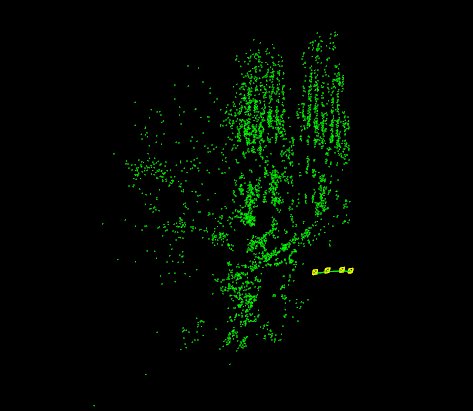

The resulting picture shows the obtained camera motion together with the estimated sparse 3D reconstruction.


Code

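// OpenCV headers and namespaces required by the calls below
// (they were missing from this copy of the listing).
#include <opencv2/sfm.hpp>
#include <opencv2/viz.hpp>
#include <opencv2/calib3d.hpp>
#include <opencv2/core.hpp>

#include <cstdlib>
#include <iostream>
#include <fstream>
#include <string>
#include <vector>

using namespace std;
using namespace cv;
using namespace cv::sfm;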

// Prints the usage message.
static void help() {
  cout
      << "\n------------------------------------------------------------------------------------\n"
      << " This program shows the multiview reconstruction capabilities in the \n"
      << " OpenCV Structure From Motion (SFM) module.\n"
      << " It reconstructs a scene from a set of 2D images \n"
      << " Usage:\n"
      << "        example_sfm_scene_reconstruction <path_to_file> <f> <cx> <cy>\n"
      << " where: path_to_file is the absolute path of the file on your system that contains\n"
      << "        the list of images to use for reconstruction. \n"
      << "        f  is the focal length in pixels. \n"
      << "        cx is the image principal point x coordinate in pixels. \n"
      << "        cy is the image principal point y coordinate in pixels. \n"
      << "------------------------------------------------------------------------------------\n\n"
      << endl;
}

// Reads the image list file and stores one path per line in `files`,
// prefixing each entry with the directory that contains the list file.
int getdir(const string _filename, vector<String> &files)
{
  ifstream myfile(_filename.c_str());
  if (!myfile.is_open()) {
    cout << "Unable to read file: " << _filename << endl;
    exit(0);
  } else {
    size_t found = _filename.find_last_of("/\\");
    string line_str, path_to_file = _filename.substr(0, found);
    while ( getline(myfile, line_str) )
      files.push_back(path_to_file+string("/")+line_str);
  }
  return 1;
}

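int main(int argc, char* argv[])
{
  // Read input parameters (argc is the number of command-line arguments)
  if ( argc != 5 )
  {
    help();
    exit(0);
  }

  // Parse the image paths
  vector<String> images_paths;
  getdir( argv[1], images_paths );

  // Build the intrinsics matrix from f, cx, cy
  float f  = atof(argv[2]),
        cx = atof(argv[3]), cy = atof(argv[4]);

  Matx33d K = Matx33d( f, 0, cx,
                       0, f, cy,
                       0, 0,  1);

  // Reconstruct the scene from the 2D images
  bool is_projective = true;
  vector<Mat> Rs_est, ts_est, points3d_estimated;
  reconstruct(images_paths, Rs_est, ts_est, K, points3d_estimated, is_projective);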

  // Print output
  cout << "\n----------------------------\n" << endl;
  cout << "Reconstruction: " << endl;
  cout << "============================" << endl;
  cout << "Estimated 3D points: " << points3d_estimated.size() << endl;
  cout << "Estimated cameras: " << Rs_est.size() << endl;
  cout << "Refined intrinsics: " << endl << K << endl << endl;
  cout << "3D Visualization: " << endl;
  cout << "============================" << endl;

  // Create the 3D visualization window (the declaration was missing from this copy)
  viz::Viz3d window("Coordinate Frame");
  window.setWindowSize(Size(500,500));
  window.setWindowPosition(Point(150,150));
  window.setBackgroundColor(); // default background color

  // Recover the point cloud
  cout << "Recovering points ... ";
  vector<Vec3f> point_cloud_est;
  for (size_t i = 0; i < points3d_estimated.size(); ++i)
    point_cloud_est.push_back(Vec3f(points3d_estimated[i]));
  cout << "[DONE]" << endl;

  // Recover the camera path
  cout << "Recovering cameras ... ";
  vector<Affine3d> path;
  for (size_t i = 0; i < Rs_est.size(); ++i)
    path.push_back(Affine3d(Rs_est[i],ts_est[i]));
  cout << "[DONE]" << endl;

  // Add the point cloud to the window
  if ( point_cloud_est.size() > 0 )
  {
    cout << "Rendering points ... ";
    viz::WCloud cloud_widget(point_cloud_est, viz::Color::green());
    window.showWidget("point_cloud", cloud_widget);
    cout << "[DONE]" << endl;
  }
  else
  {
    cout << "Cannot render points: Empty pointcloud" << endl;
  }

  // Add the camera trajectory to the window
  if ( path.size() > 0 )
  {
    cout << "Rendering Cameras ... ";
    window.showWidget("cameras_frames_and_lines",
                      viz::WTrajectory(path, viz::WTrajectory::BOTH, 0.1, viz::Color::green()));
    window.setViewerPose(path[0]);
    cout << "[DONE]" << endl;
  }
  else
  {
    cout << "Cannot render the cameras: Empty path" << endl;
  }

  // Wait for key 'q' to close the window
  cout << endl << "Press 'q' to close each window ... " << endl;
  window.spin();

  return 0;
}

Explanation

First, we need to load the file containing the list of image paths in order to feed the reconstruction API:

/home/eriba/software/opencv_contrib/modules/sfm/samples/data/images/resized_IMG_2889.jpg

/home/eriba/software/opencv_contrib/modules/sfm/samples/data/images/resized_IMG_2890.jpg

/home/eriba/software/opencv_contrib/modules/sfm/samples/data/images/resized_IMG_2891.jpg

/home/eriba/software/opencv_contrib/modules/sfm/samples/data/images/resized_IMG_2892.jpg

...

int getdir(const string _filename, vector<string> &files)
{
  ifstream myfile(_filename.c_str());
  if (!myfile.is_open()) {
    cout << "Unable to read file: " << _filename << endl;
    exit(0);
  } else {
    string line_str;
    while ( getline(myfile, line_str) )
      files.push_back(line_str);
  }
  return 1;
}

Second, the container we built is used to feed the reconstruction API. Note that the estimated results must be stored in a vector<Mat>. Here we call the overloaded signature for real images, which internally extracts sparse 2D features from the images, computes DAISY descriptors, matches them using FlannBasedMatcher, and builds the tracks structure.

bool is_projective = true;
vector<Mat> Rs_est, ts_est, points3d_estimated;
reconstruct(images_paths, Rs_est, ts_est, K, points3d_estimated, is_projective);

// Print output
cout << "\n----------------------------\n" << endl;
cout << "Reconstruction: " << endl;
cout << "============================" << endl;
cout << "Estimated 3D points: " << points3d_estimated.size() << endl;
cout << "Estimated cameras: " << Rs_est.size() << endl;
cout << "Refined intrinsics: " << endl << K << endl << endl;

Finally, the obtained results will be shown in Viz.

Usage and Results

To run this sample, we need to specify, in this order, the path to the file that lists the image paths, the focal length of the camera, and the principal point coordinates cx and cy (all in pixels).
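The sample converts these arguments with atof, which silently returns 0 for malformed input. As a hedged alternative, the sketch below validates the three values with std::strtod before building the 3x3 intrinsics matrix with f on the diagonal and (cx, cy) in the last column. The names `parseNumber`, `buildIntrinsics`, and `Mat3` are hypothetical, and rejecting a non-positive focal length is a design assumption rather than something the sample does.

```cpp
#include <array>
#include <cstdlib>
#include <string>

using Mat3 = std::array<std::array<double, 3>, 3>;

// Parses the whole of `text` as a number. Returns false when the string is
// empty or when any character is left unconsumed (for example "800px").
bool parseNumber(const std::string& text, double& out)
{
  if (text.empty())
    return false;
  char* end = nullptr;
  const double value = std::strtod(text.c_str(), &end);
  if (end != text.c_str() + text.size())
    return false;
  out = value;
  return true;
}

// Hypothetical helper: builds K = [f 0 cx; 0 f cy; 0 0 1] from the three
// command-line strings. Returns false on malformed input or when f <= 0.
bool buildIntrinsics(const std::string& f, const std::string& cx,
                     const std::string& cy, Mat3& K)
{
  double fv = 0.0, cxv = 0.0, cyv = 0.0;
  if (!parseNumber(f, fv) || !parseNumber(cx, cxv) || !parseNumber(cy, cyv))
    return false;
  if (!(fv > 0.0))
    return false;
  const Mat3 built = {{ {fv, 0.0, cxv}, {0.0, fv, cyv}, {0.0, 0.0, 1.0} }};
  K = built;
  return true;
}
```

In a program like the sample, `buildIntrinsics(argv[2], argv[3], argv[4], K)` could be called after the argument count check, printing the usage message when it returns false.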


[1] http://vision.middlebury.edu/mview/data

[2] Penate Sanchez, A. and Moreno-Noguer, F. and Andrade Cetto, J. and Fleuret, F. (2014). LETHA: Learning from High Quality Inputs for 3D Pose Estimation in Low Quality Images. Proceedings of the International Conference on 3D vision (3DV). URL