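Abstract

Among object detection algorithms, YOLOv3 is one of the easier ones to reproduce, and it is much simpler than R-CNN, Fast R-CNN, and Faster R-CNN. In my experience, the newer the detector, the easier it tends to be to reproduce. I previously read the Faster R-CNN source code, but its RoI pooling part was written in C and it also required torch 0.4, so I gave up on reproducing it. Understanding the code is still well worth the effort. So today I start studying YOLOv3, and the first step is to understand the code line by line.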

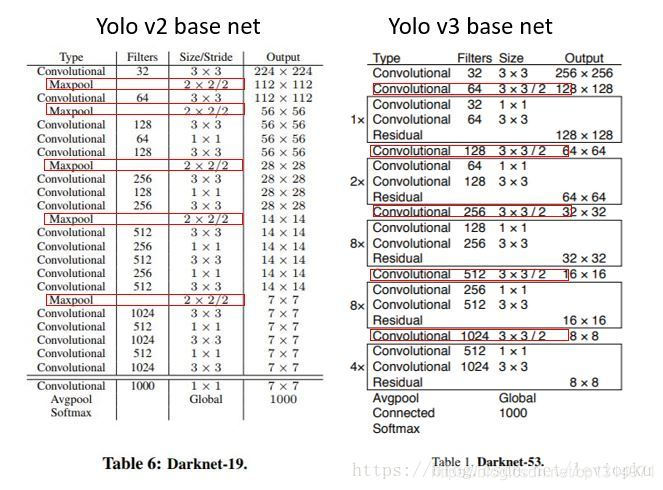

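The Darknet network in detail

First, look at the shape of the Darknet network. Unlike a typical network, it has no max pooling: Darknet changes the feature-map size with stride-2 convolutions. It also contains small residual blocks that, like the shortcut connections in ResNet, merge feature maps by adding a block's input to its output. Let's start with the code from the Git repository.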
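To make the stride-2 point concrete, the standard convolution output-size formula can be checked with plain arithmetic. This is a minimal sketch, not code from the repository: the helper name `conv_out_size` is made up for illustration, and the 416 input size is the one that appears in the cfg file.

```python
def conv_out_size(size, kernel, stride, pad):
    """Spatial output size of a convolution (standard formula, floor division)."""
    return (size + 2 * pad - kernel) // stride + 1


size = 416
sizes = [size]
# Five 3x3 convolutions with stride 2 and padding 1, the downsampling pattern.
for _ in range(5):
    size = conv_out_size(size, kernel=3, stride=2, pad=1)
    sizes.append(size)

print(sizes)  # [416, 208, 104, 52, 26, 13]

# A 3x3 convolution with stride 1 and padding 1 keeps the size unchanged,
# which is what lets a residual block add its input to its output.
same = conv_out_size(52, kernel=3, stride=1, pad=1)
print(same)  # 52
```

Each stride-2 convolution halves the feature map, so no pooling layer is needed to get from 416 down to 13.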

Understanding the code

To make testing easier, I copied parse_model_config directly into the script.

from __future__ import division

import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.autograd import Variable
import numpy as np


def parse_model_config(path):
    """Parses the yolo-v3 layer configuration file and returns module definitions"""
    with open(path, 'r') as file:  # close the file once it has been read
        lines = file.read().split('\n')
    lines = [x for x in lines if x and not x.startswith('#')]
    lines = [x.rstrip().lstrip() for x in lines]  # get rid of fringe whitespaces
    module_defs = []
    for line in lines:
        if line.startswith('['):  # This marks the start of a new block
            module_defs.append({})
            module_defs[-1]['type'] = line[1:-1].rstrip()
            if module_defs[-1]['type'] == 'convolutional':
                module_defs[-1]['batch_normalize'] = 0
        else:
            key, value = line.split("=")
            value = value.strip()
            module_defs[-1][key.rstrip()] = value.strip()
    return module_defs


class Upsample(nn.Module):
    def __init__(self, scale_factor, mode="nearest"):
        super(Upsample, self).__init__()
        self.scale_factor = scale_factor
        self.mode = mode

    def forward(self, x):
        x = F.interpolate(x, scale_factor=self.scale_factor, mode=self.mode)
        return x


class EmptyLayer(nn.Module):
    """Placeholder for 'route' and 'shortcut' layers"""

    def __init__(self):
        super(EmptyLayer, self).__init__()


# Raw string so the backslashes are not read as escape sequences;
# change this to wherever yolov3.cfg lives on your machine.
module_defs = parse_model_config(r"G:/3d\训练测试\PyTorch-YOLOv3-master\config/yolov3.cfg")
hyperparams = module_defs.pop(0)  # the [net] block
output_filters = [int(hyperparams["channels"])]  # channels of the input image
module_list = nn.ModuleList()

for module_i, module_def in enumerate(module_defs):
    modules = nn.Sequential()

    if module_def["type"] == "convolutional":
        bn = int(module_def["batch_normalize"])
        filters = int(module_def["filters"])
        kernel_size = int(module_def["size"])
        pad = (kernel_size - 1) // 2
        modules.add_module(
            f"conv_{module_i}",
            nn.Conv2d(
                in_channels=output_filters[-1],
                out_channels=filters,
                kernel_size=kernel_size,
                stride=int(module_def["stride"]),
                padding=pad,
                bias=not bn,  # BatchNorm has its own bias term
            ),
        )
        if bn:
            modules.add_module(f"batch_norm_{module_i}", nn.BatchNorm2d(filters, momentum=0.9, eps=1e-5))
        if module_def["activation"] == "leaky":
            modules.add_module(f"leaky_{module_i}", nn.LeakyReLU(0.1))

    elif module_def["type"] == "upsample":
        upsample = Upsample(scale_factor=int(module_def["stride"]), mode="nearest")
        modules.add_module(f"upsample_{module_i}", upsample)

    elif module_def["type"] == "route":
        layers = [int(x) for x in module_def["layers"].split(",")]
        # Concatenation along channels: the channel counts add up
        filters = sum([output_filters[1:][i] for i in layers])
        modules.add_module(f"route_{module_i}", EmptyLayer())

    elif module_def["type"] == "shortcut":
        # Element-wise addition: the channel count stays the same
        filters = output_filters[1:][int(module_def["from"])]
        modules.add_module(f"shortcut_{module_i}", EmptyLayer())

    # "yolo" blocks are not handled in this test script; they end up as an empty Sequential
    module_list.append(modules)
    output_filters.append(filters)

print(module_list)

parse_model_config(): taken as a whole, this function reads the yolov3.cfg file and turns it into a list of dictionaries, one per block:

[{'type': 'net', 'batch': '16', 'subdivisions': '1', 'width': '416', 'height': '416', 'channels': '3', 'momentum': '0.9', 'decay': '0.0005', 'angle': '0', 'saturation': '1.5', 'exposure':

The full output is too long, so only a small part is shown.
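To see the list-of-dictionaries structure without the real file, the same parsing idea can be run on a few lines of cfg text. This is a minimal sketch: `parse_cfg_text` is a hypothetical helper that takes a string instead of a path, and the sample text is a hand-written fragment in the cfg format, not the actual yolov3.cfg.

```python
def parse_cfg_text(text):
    """Parse darknet-style cfg text into a list of dicts (illustrative helper)."""
    blocks = []
    for raw in text.split("\n"):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.startswith("["):
            # "[convolutional]" starts a new block of that type
            blocks.append({"type": line[1:-1].strip()})
        else:
            key, value = line.split("=", 1)
            blocks[-1][key.strip()] = value.strip()
    return blocks


sample = """
[net]
width=416
height=416
channels=3

# first layer
[convolutional]
batch_normalize=1
filters=32
size=3
stride=1
pad=1
activation=leaky
"""

blocks = parse_cfg_text(sample)
print(blocks[0])  # {'type': 'net', 'width': '416', 'height': '416', 'channels': '3'}
print(blocks[1]["type"], blocks[1]["filters"])  # convolutional 32
```

Every value stays a string, which is why the network-building code wraps each one in int() before using it.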

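hyperparams = module_defs.pop(0)

pop(0) takes the first dictionary, the [net] block holding the hyperparameters, out of the list. After this, hyperparams is a plain dict and module_defs contains only layer definitions.

for module_i, module_def in enumerate(module_defs):

module_i is an index starting from 0, and module_def is the dictionary for one block of the cfg file.

A chain of if/elif branches then turns the parsed cfg data into the Darknet network, one branch per block type.

elif module_def["type"] == "route":

This branch is where feature maps are fused. The layers entry lists which earlier outputs to merge: negative values count back from the current layer, non-negative values are absolute layer indices. The selected feature maps are concatenated along the channel dimension, which is why the code sums their channel counts.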
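The index handling in the route branch is easy to check in isolation. The sketch below only reproduces the channel arithmetic; `route_channels` is a made-up helper name and the channel counts are invented for the example rather than read from yolov3.cfg.

```python
def route_channels(layers, channels_so_far):
    """Channel count of a route layer that concatenates the listed outputs.

    layers: indices from the cfg; negative values count back from the
            current layer, non-negative values are absolute layer indices.
    channels_so_far: output channels of every layer built so far.
    """
    return sum(channels_so_far[i] for i in layers)


# Invented channel counts for six earlier layers (indices 0..5).
channels_so_far = [32, 64, 128, 256, 512, 256]

# "layers = -1, 3": the previous layer (256) concatenated with layer 3 (256).
two_inputs = route_channels([-1, 3], channels_so_far)
print(two_inputs)  # 512

# "layers = -4": a single index just forwards that layer's output (128 here).
one_input = route_channels([-4], channels_so_far)
print(one_input)  # 128
```

Python's negative list indexing does the "count back from the current layer" part for free, because the list only contains the layers built so far.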

Now look at the output:

(0): Sequential(
  (conv_0): Conv2d(3, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
  (batch_norm_0): BatchNorm2d(32, eps=1e-05, momentum=0.9, affine=True, track_running_stats=True)
  (leaky_0): LeakyReLU(negative_slope=0.1)
)
(1): Sequential(
  (conv_1): Conv2d(32, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
  (batch_norm_1): BatchNorm2d(64, eps=1e-05, momentum=0.9, affine=True, track_running_stats=True)
  (leaky_1): LeakyReLU(negative_slope=0.1)
)

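The printed list runs through index 106, which means 107 entries for the standard yolov3.cfg.

output_filters likewise keeps a record of the feature map after each layer, specifically its number of channels, which becomes in_channels for the next convolution.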
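The bookkeeping can be traced by hand on a few blocks. The sketch below copies only the channel tracking from the loop, with no PyTorch layers built; the module definitions are hand-written for illustration and shaped like the opening of a YOLOv3 cfg (four convolutions followed by a shortcut).

```python
module_defs = [
    {"type": "convolutional", "filters": "32"},
    {"type": "convolutional", "filters": "64"},
    {"type": "convolutional", "filters": "32"},
    {"type": "convolutional", "filters": "64"},
    {"type": "shortcut", "from": "-3"},
]

output_filters = [3]  # channels of the input image
for module_def in module_defs:
    if module_def["type"] == "convolutional":
        filters = int(module_def["filters"])
    elif module_def["type"] == "shortcut":
        # Element-wise addition keeps the channel count of the source layer.
        filters = output_filters[1:][int(module_def["from"])]
    output_filters.append(filters)

print(output_filters)  # [3, 32, 64, 32, 64, 64]
```

The list holds channel counts rather than spatial sizes, and output_filters[-1] is always what the next convolution receives as in_channels.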

A plain PyTorch version of Darknet

import torch
import torch.nn as nn
import time


class Conv2d(nn.Module):
    """Convolution + BatchNorm + LeakyReLU."""

    def __init__(self, inc, ouc, k, s, p):
        super(Conv2d, self).__init__()
        self.conv = nn.Sequential(
            nn.Conv2d(inc, ouc, k, s, p),
            nn.BatchNorm2d(ouc),
            nn.LeakyReLU()
        )

    def forward(self, x):
        return self.conv(x)


class ConvSet(nn.Module):  # inc->ouc
    def __init__(self, inc, ouc):
        super(ConvSet, self).__init__()
        self.convset = nn.Sequential(
            Conv2d(inc, ouc, 1, 1, 0),
            Conv2d(ouc, ouc, 3, 1, 1),
            Conv2d(ouc, ouc * 2, 1, 1, 0),
            Conv2d(ouc * 2, ouc * 2, 3, 1, 1),
            Conv2d(ouc * 2, ouc, 1, 1, 0)
        )

    def forward(self, x):
        return self.convset(x)


class Upsampling(nn.Module):
    def __init__(self):
        super(Upsampling, self).__init__()

    def forward(self, x):
        return nn.functional.interpolate(x, scale_factor=2, mode='nearest')


class Downsampling(nn.Module):
    def __init__(self, inc, ouc):
        super(Downsampling, self).__init__()
        self.d = nn.Sequential(
            Conv2d(inc, ouc, 3, 2, 1)  # stride 2 halves the feature map
        )

    def forward(self, x):
        return self.d(x)


class Residual(nn.Module):  # inc->inc
    def __init__(self, inc):
        super(Residual, self).__init__()
        self.r = nn.Sequential(
            Conv2d(inc, inc // 2, 1, 1, 0),
            Conv2d(inc // 2, inc, 3, 1, 1)
        )

    def forward(self, x):
        return x + self.r(x)  # shortcut: add the input to the block output

class MainNet(nn.Module):
    def __init__(self):
        super(MainNet, self).__init__()
        self.d52 = nn.Sequential(
            Conv2d(3, 32, 3, 1, 1),  # 416
            Conv2d(32, 64, 3, 2, 1),  # 208
            # 1x
            Conv2d(64, 32, 1, 1, 0),
            Conv2d(32, 64, 3, 1, 1),
            Residual(64),
            Downsampling(64, 128),  # 104
            # 2x
            Conv2d(128, 64, 1, 1, 0),
            Conv2d(64, 128, 3, 1, 1),
            Residual(128),
            Conv2d(128, 64, 1, 1, 0),
            Conv2d(64, 128, 3, 1, 1),
            Residual(128),
            Downsampling(128, 256),  # 52
            # 8x
            Conv2d(256, 128, 1, 1, 0),
            Conv2d(128, 256, 3, 1, 1),
            Residual(256),
            Conv2d(256, 128, 1, 1, 0),
            Conv2d(128, 256, 3, 1, 1),
            Residual(256),
            Conv2d(256, 128, 1, 1, 0),
            Conv2d(128, 256, 3, 1, 1),
            Residual(256),
            Conv2d(256, 128, 1, 1, 0),
            Conv2d(128, 256, 3, 1, 1),
            Residual(256),
            Conv2d(256, 128, 1, 1, 0),
            Conv2d(128, 256, 3, 1, 1),
            Residual(256),
            Conv2d(256, 128, 1, 1, 0),
            Conv2d(128, 256, 3, 1, 1),
            Residual(256),
            Conv2d(256, 128, 1, 1, 0),
            Conv2d(128, 256, 3, 1, 1),
            Residual(256),
            Conv2d(256, 128, 1, 1, 0),
            Conv2d(128, 256, 3, 1, 1),
            Residual(256)
        )
        self.d26 = nn.Sequential(
            Downsampling(256, 512),  # 26
            # 8x
            Conv2d(512, 256, 1, 1, 0),
            Conv2d(256, 512, 3, 1, 1),
            Residual(512),
            Conv2d(512, 256, 1, 1, 0),
            Conv2d(256, 512, 3, 1, 1),
            Residual(512),
            Conv2d(512, 256, 1, 1, 0),
            Conv2d(256, 512, 3, 1, 1),
            Residual(512),
            Conv2d(512, 256, 1, 1, 0),
            Conv2d(256, 512, 3, 1, 1),
            Residual(512),
            Conv2d(512, 256, 1, 1, 0),
            Conv2d(256, 512, 3, 1, 1),
            Residual(512),
            Conv2d(512, 256, 1, 1, 0),
            Conv2d(256, 512, 3, 1, 1),
            Residual(512),
            Conv2d(512, 256, 1, 1, 0),
            Conv2d(256, 512, 3, 1, 1),
            Residual(512),
            Conv2d(512, 256, 1, 1, 0),
            Conv2d(256, 512, 3, 1, 1),
            Residual(512)
        )
        self.d13 = nn.Sequential(
            Downsampling(512, 1024),  # 13
            # 4x
            Conv2d(1024, 512, 1, 1, 0),
            Conv2d(512, 1024, 3, 1, 1),
            Residual(1024),
            Conv2d(1024, 512, 1, 1, 0),
            Conv2d(512, 1024, 3, 1, 1),
            Residual(1024),
            Conv2d(1024, 512, 1, 1, 0),
            Conv2d(512, 1024, 3, 1, 1),
            Residual(1024),
            Conv2d(1024, 512, 1, 1, 0),
            Conv2d(512, 1024, 3, 1, 1),
            Residual(1024)
        )
        # ---------------------------------------------------------
        self.convset_13 = nn.Sequential(
            ConvSet(1024, 512)
        )
        self.detection_13 = nn.Sequential(
            Conv2d(512, 512, 3, 1, 1),
            # 3 anchors * (5 + 80 classes) = 255, matching the other two heads
            # (was 266, a typo); it would be 18 for a single class
            nn.Conv2d(512, 255, 1, 1, 0)
        )
        self.conv_13 = nn.Sequential(
            Conv2d(512, 256, 1, 1, 0)
        )
        self.up_to_26 = nn.Sequential(
            Upsampling()
        )
        # ---------------------------------------------------------
        self.convset_26 = nn.Sequential(
            ConvSet(768, 512)  # after concat the channels add up: 512 + 256 = 768
        )
        self.detection_26 = nn.Sequential(
            Conv2d(512, 512, 3, 1, 1),
            nn.Conv2d(512, 255, 1, 1, 0)
        )
        self.conv_26 = nn.Sequential(
            Conv2d(512, 256, 1, 1, 0)
        )
        self.up_to_52 = nn.Sequential(
            Upsampling()
        )
        # ---------------------------------------------------------
        self.convset_52 = nn.Sequential(
            ConvSet(512, 512)  # after concat the channels add up: 256 + 256 = 512
        )
        self.detection_52 = nn.Sequential(
            Conv2d(512, 512, 3, 1, 1),
            nn.Conv2d(512, 255, 1, 1, 0)
        )

    def forward(self, x):
        x_52 = self.d52(x)
        x_26 = self.d26(x_52)
        x_13 = self.d13(x_26)
        x_13_ = self.convset_13(x_13)
        out_13 = self.detection_13(x_13_)  # 13*13 output
        y_13_ = self.conv_13(x_13_)
        y_26 = self.up_to_26(y_13_)
        # ----------------------------------------------------------
        y_26_cat = torch.cat((y_26, x_26), dim=1)  # 26*26 concatenation
        x_26_ = self.convset_26(y_26_cat)
        out_26 = self.detection_26(x_26_)
        y_26_ = self.conv_26(x_26_)
        y_52 = self.up_to_52(y_26_)
        # ----------------------------------------------------------
        y_52_cat = torch.cat((y_52, x_52), dim=1)  # 52*52 concatenation
        x_52_ = self.convset_52(y_52_cat)
        out_52 = self.detection_52(x_52_)
        return out_13, out_26, out_52

if __name__ == '__main__':
    trunk = MainNet()
    x = torch.rand((1, 3, 416, 416))
    y_13, y_26, y_52 = trunk(x)
    print(y_13.shape)
    print(y_26.shape)
    print(y_52.shape)

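Darknet outputs feature maps at three different sizes, and each of them is built by combining feature maps from different stages of the network. Writing the model out directly in PyTorch like this makes that much easier to see. The first version, which reads the cfg file, performs the same fusion, but only later: the route and shortcut entries are empty placeholders when the modules are built, and the actual merging happens afterwards.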
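The 255 channels used by the detection heads and the three grid sizes follow from simple arithmetic. The sketch below assumes the usual COCO setup of 80 classes and 3 anchor boxes per scale, which the post itself does not state; the variable names are illustrative.

```python
num_classes = 80        # assumption: COCO, as in the standard yolov3.cfg
anchors_per_scale = 3   # assumption: three anchor boxes per output scale

# Each anchor predicts 4 box coordinates, 1 objectness score, and the class scores.
head_channels = anchors_per_scale * (4 + 1 + num_classes)
print(head_channels)  # 255

input_size = 416
shapes = []
for stride in (32, 16, 8):  # total downsampling factor at each output scale
    grid = input_size // stride
    shapes.append((1, head_channels, grid, grid))

for shape in shapes:
    print(shape)
# (1, 255, 13, 13)
# (1, 255, 26, 26)
# (1, 255, 52, 52)
```

With a single class the same formula gives 3 * (4 + 1 + 1) = 18, so the head width has to change whenever the number of classes does.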