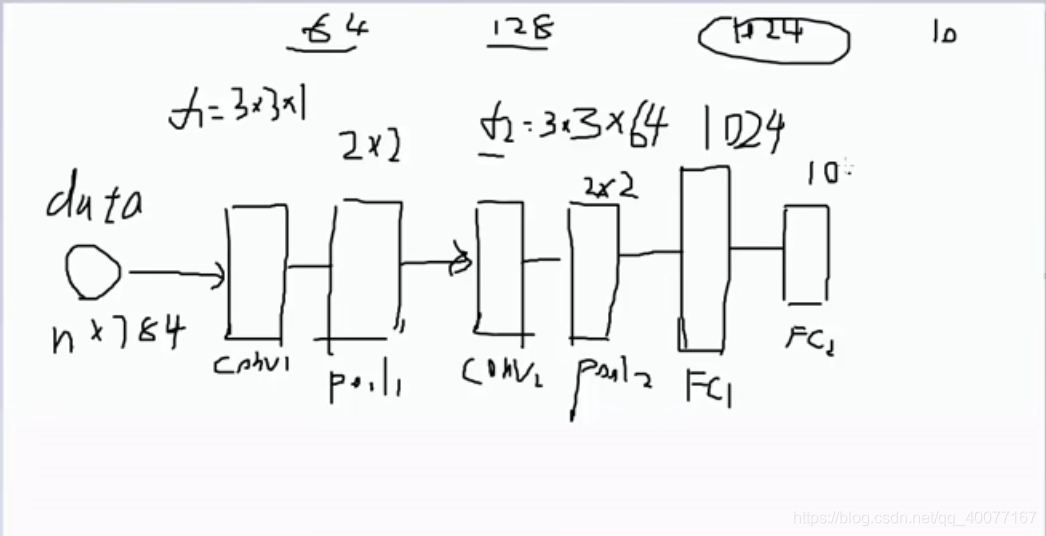

1. Schematic:

2. Code:

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

import tensorflow.examples.tutorials.mnist.input_data as input_data

mnist = input_data.read_data_sets('data/', one_hot=True)

trainimg = mnist.train.images

trainlabel = mnist.train.labels

testimg = mnist.test.images

testlabel = mnist.test.labels

print("MNIST ready")

n_input = 784

n_output = 10

weights = {

    'wc1': tf.Variable(tf.random_normal([3, 3, 1, 64], stddev=0.1)),

    'wc2': tf.Variable(tf.random_normal([3, 3, 64, 128], stddev=0.1)),

    'wd1': tf.Variable(tf.random_normal([7*7*128, 1024], stddev=0.1)),

    'wd2': tf.Variable(tf.random_normal([1024, n_output], stddev=0.1))

}

biases = {

    'bc1': tf.Variable(tf.random_normal([64], stddev=0.1)),

    'bc2': tf.Variable(tf.random_normal([128], stddev=0.1)),

    'bd1': tf.Variable(tf.random_normal([1024], stddev=0.1)),

    'bd2': tf.Variable(tf.random_normal([n_output], stddev=0.1))

}

"""Forward pass

Conv layer 1: 3*3*1 -> 64

Pooling layer 1: 2*2

Conv layer 2: 3*3*64 -> 128

Pooling layer 2: 2*2

Fully connected layer 1: 7*7*128 -> 1024

Fully connected layer 2: 1024 -> 10

"""

def conv_basic(_input, _w, _b, _keepratio):

    # INPUT

    _input_r = tf.reshape(_input, shape=[-1, 28, 28, 1])

    # CONV LAYER 1

    _conv1 = tf.nn.conv2d(_input_r, _w['wc1'], strides=[1, 1, 1, 1], padding='SAME')

    # _mean, _var = tf.nn.moments(_conv1, [0, 1, 2])

    # _conv1 = tf.nn.batch_normalization(_conv1, _mean, _var, 0, 1, 0.0001)

    _conv1 = tf.nn.relu(tf.nn.bias_add(_conv1, _b['bc1']))

    _pool1 = tf.nn.max_pool(_conv1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

    _pool_dr1 = tf.nn.dropout(_pool1, _keepratio)

    # CONV LAYER 2

    _conv2 = tf.nn.conv2d(_pool_dr1, _w['wc2'], strides=[1, 1, 1, 1], padding='SAME')

    # _mean, _var = tf.nn.moments(_conv2, [0, 1, 2])

    # _conv2 = tf.nn.batch_normalization(_conv2, _mean, _var, 0, 1, 0.0001)

    _conv2 = tf.nn.relu(tf.nn.bias_add(_conv2, _b['bc2']))

    _pool2 = tf.nn.max_pool(_conv2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

    _pool_dr2 = tf.nn.dropout(_pool2, _keepratio)

    # VECTORIZE

    _dense1 = tf.reshape(_pool_dr2, [-1, _w['wd1'].get_shape().as_list()[0]])

    # FULLY CONNECTED LAYER 1

    _fc1 = tf.nn.relu(tf.add(tf.matmul(_dense1, _w['wd1']), _b['bd1']))

    _fc_dr1 = tf.nn.dropout(_fc1, _keepratio)

    # FULLY CONNECTED LAYER 2

    _out = tf.add(tf.matmul(_fc_dr1, _w['wd2']), _b['bd2'])

    # RETURN

    out = {'input_r': _input_r, 'conv1': _conv1, 'pool1': _pool1, 'pool1_dr1': _pool_dr1,

           'conv2': _conv2, 'pool2': _pool2, 'pool_dr2': _pool_dr2, 'dense1': _dense1,

           'fc1': _fc1, 'fc_dr1': _fc_dr1, 'out': _out

           }

    return out

print("CNN READY")

x = tf.placeholder(tf.float32, [None, n_input])

y = tf.placeholder(tf.float32, [None, n_output])

keepratio = tf.placeholder(tf.float32)

# FUNCTIONS

_pred = conv_basic(x, weights, biases, keepratio)['out']

cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=_pred))

optm = tf.train.AdamOptimizer(learning_rate=0.001).minimize(cost)

_corr = tf.equal(tf.argmax(_pred, 1), tf.argmax(y, 1))

accr = tf.reduce_mean(tf.cast(_corr, tf.float32))

init = tf.global_variables_initializer()

# SAVER

save_step = 1

saver = tf.train.Saver(max_to_keep=3)

print("GRAPH READY")

do_train = 1

sess = tf.Session()

sess.run(init)

training_epochs = 15

batch_size = 16

display_step = 1

if do_train == 1:  # training

    for epoch in range(training_epochs):

        avg_cost = 0.

        # total_batch = int(mnist.train.num_examples/batch_size)

        total_batch = 10

        # Loop over all batches

        for i in range(total_batch):

            batch_xs, batch_ys = mnist.train.next_batch(batch_size)

            # Fit training using batch data

            sess.run(optm, feed_dict={x: batch_xs, y: batch_ys, keepratio: 0.7})

            # Compute average loss

            avg_cost += sess.run(cost, feed_dict={x: batch_xs, y: batch_ys, keepratio: 1.}) / total_batch

        # Display logs per epoch step

        if epoch % display_step == 0:

            print("Epoch: %03d/%03d cost: %.9f" % (epoch, training_epochs, avg_cost))

            # Accuracy is measured on the last mini-batch of this epoch only

            train_acc = sess.run(accr, feed_dict={x: batch_xs, y: batch_ys, keepratio: 1.})

            print(" Training accuracy: %.3f" % (train_acc))

            # test_acc = sess.run(accr, feed_dict={x: testimg, y: testlabel, keepratio:1.})

            # print (" Test accuracy: %.3f" % (test_acc))

        # Save Net (the save/nets/ directory may need to exist beforehand)

        if epoch % save_step == 0:

            saver.save(sess, "save/nets/cnn_mnist_basic.ckpt-" + str(epoch))

    print("OPTIMIZATION FINISHED")

if do_train == 0:  # testing

    # Restore the checkpoint written after the last training epoch

    epoch = training_epochs - 1

    saver.restore(sess, "save/nets/cnn_mnist_basic.ckpt-" + str(epoch))

    test_acc = sess.run(accr, feed_dict={x: testimg, y: testlabel, keepratio: 1.})

    print(" TEST ACCURACY: %.3f" % (test_acc))
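The `7*7*128` input size of `wd1` in the code above follows from the layer shapes: with `padding='SAME'`, each convolution or pooling step produces a spatial size of `ceil(input / stride)`. The small sketch below traces this arithmetic in plain Python, without TensorFlow. The helper names `same_out` and `trace_shapes` are made up for illustration and are not part of the original script.

```python
import math


def same_out(size, stride):
    """Spatial output size for padding='SAME': ceil(size / stride)."""
    return math.ceil(size / stride)


def trace_shapes(height=28, width=28):
    """Follow (H, W, C) through conv1 -> pool1 -> conv2 -> pool2."""
    shapes = [("input", (height, width, 1))]
    h, w = height, width
    # (layer name, stride, output channels), taken from the script above
    layers = [("conv1", 1, 64), ("pool1", 2, 64),
              ("conv2", 1, 128), ("pool2", 2, 128)]
    for name, stride, channels in layers:
        h, w = same_out(h, stride), same_out(w, stride)
        shapes.append((name, (h, w, channels)))
    return shapes


shapes = trace_shapes()
for name, shape in shapes:
    print(name, shape)

# Size of the vector fed into the first fully connected layer
h, w, c = shapes[-1][1]
flat = h * w * c
print("flattened size:", flat)
```

The spatial size goes 28 -> 28 -> 14 -> 14 -> 7, so the flattened vector has 7 * 7 * 128 = 6272 elements, which matches the first dimension of `wd1`.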

3. Test:

do_train=1

Future major versions of TensorFlow will allow gradients to flow

into the labels input on backprop by default.

See `tf.nn.softmax_cross_entropy_with_logits_v2`.

GRAPH READY

2019-05-13 21:08:46.535000: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2

Epoch: 000/015 cost: 6.706351614

Training accuracy: 0.375

Epoch: 001/015 cost: 3.045194244

Training accuracy: 0.375

Epoch: 002/015 cost: 1.572650748

Training accuracy: 0.750

Epoch: 003/015 cost: 1.190892065

Training accuracy: 0.750

WARNING:tensorflow:From F:\python\shi_jue\venv\lib\site-packages\tensorflow\python\training\saver.py:966: remove_checkpoint (from tensorflow.python.training.checkpoint_management) is deprecated and will be removed in a future version.

Instructions for updating:

Use standard file APIs to delete files with this prefix.

Epoch: 004/015 cost: 1.222349179

Training accuracy: 0.562

Epoch: 005/015 cost: 1.086833096

Training accuracy: 0.688

Epoch: 006/015 cost: 1.113742071

Training accuracy: 0.812

Epoch: 007/015 cost: 0.929461908

Training accuracy: 0.750

Epoch: 008/015 cost: 0.859379065

Training accuracy: 0.812

Epoch: 009/015 cost: 0.798095918

Training accuracy: 0.875

Epoch: 010/015 cost: 0.590675253

Training accuracy: 0.750

Epoch: 011/015 cost: 0.533954525

Training accuracy: 0.938

Epoch: 012/015 cost: 0.461822632

Training accuracy: 0.938

Epoch: 013/015 cost: 0.378245395

Training accuracy: 0.875

Epoch: 014/015 cost: 0.412136450

Training accuracy: 0.875

OPTIMIZATION FINISHED
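A note on reading this log: the training accuracy is computed on the last mini-batch of each epoch, and `batch_size` is 16, so every value is a multiple of 1/16. That is why the numbers jump around in coarse steps. The sketch below converts the logged values back to a count of correct predictions per batch; the list `logged` is copied from the output above, and the helper `correct_count` is a made-up name for illustration.

```python
batch_size = 16      # from the script
total_batch = 10     # batches per epoch in the script
training_epochs = 15

# Training accuracies copied from the log (printed with %.3f)
logged = [0.375, 0.375, 0.750, 0.750, 0.562, 0.688, 0.812, 0.750,
          0.812, 0.875, 0.750, 0.938, 0.938, 0.875, 0.875]


def correct_count(acc, n):
    """Number of correct predictions in a batch of n that gives accuracy acc."""
    return round(acc * n)


counts = [correct_count(a, batch_size) for a in logged]
print(counts)

# Every logged value should sit within rounding error of some k / 16
consistent = all(abs(a - k / batch_size) < 1e-3 for a, k in zip(logged, counts))
print("all values are multiples of 1/16:", consistent)

# Number of training samples drawn in the whole run
samples_seen = training_epochs * total_batch * batch_size
print("samples seen:", samples_seen)
```

With `total_batch = 10`, each epoch draws only 160 samples, 2400 over the whole run, so these figures are a rough smoke test rather than a measure of how well the network can do. The commented-out `total_batch` line in the script would loop over the full training set instead.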

do_train=0
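For the `do_train=0` run, the script feeds all 10,000 test images in a single `sess.run` call. The first convolution alone then produces 10000 x 28 x 28 x 64 float32 values, about 2 GB, which may not fit on every machine. One possible workaround, sketched here as an assumption rather than as part of the original code, is to evaluate in chunks and combine the per-chunk accuracies weighted by chunk length. The helper `batched_accuracy` and the toy evaluator `toy_eval` are hypothetical names; `toy_eval` only stands in for the TensorFlow call so that the sketch runs on its own.

```python
import random


def batched_accuracy(eval_fn, images, labels, batch_size=500):
    """Accuracy over the whole set, computed chunk by chunk.

    eval_fn(batch_images, batch_labels) must return the accuracy on that chunk.
    Each chunk is weighted by its length, so a shorter last chunk is handled.
    """
    n = len(images)
    total_correct = 0.0
    for start in range(0, n, batch_size):
        xb = images[start:start + batch_size]
        yb = labels[start:start + batch_size]
        total_correct += eval_fn(xb, yb) * len(xb)
    return total_correct / n


# Toy stand-in for sess.run(accr, ...): "images" are already predicted classes
def toy_eval(xb, yb):
    return sum(p == t for p, t in zip(xb, yb)) / len(xb)


random.seed(0)
toy_preds = [random.randrange(10) for _ in range(1050)]
toy_labels = [random.randrange(10) for _ in range(1050)]

full = toy_eval(toy_preds, toy_labels)
chunked = batched_accuracy(toy_eval, toy_preds, toy_labels, batch_size=500)
print(abs(full - chunked) < 1e-9)
```

In the script, `eval_fn` could be something like `lambda xb, yb: sess.run(accr, feed_dict={x: xb, y: yb, keepratio: 1.})`, called with `testimg` and `testlabel`.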