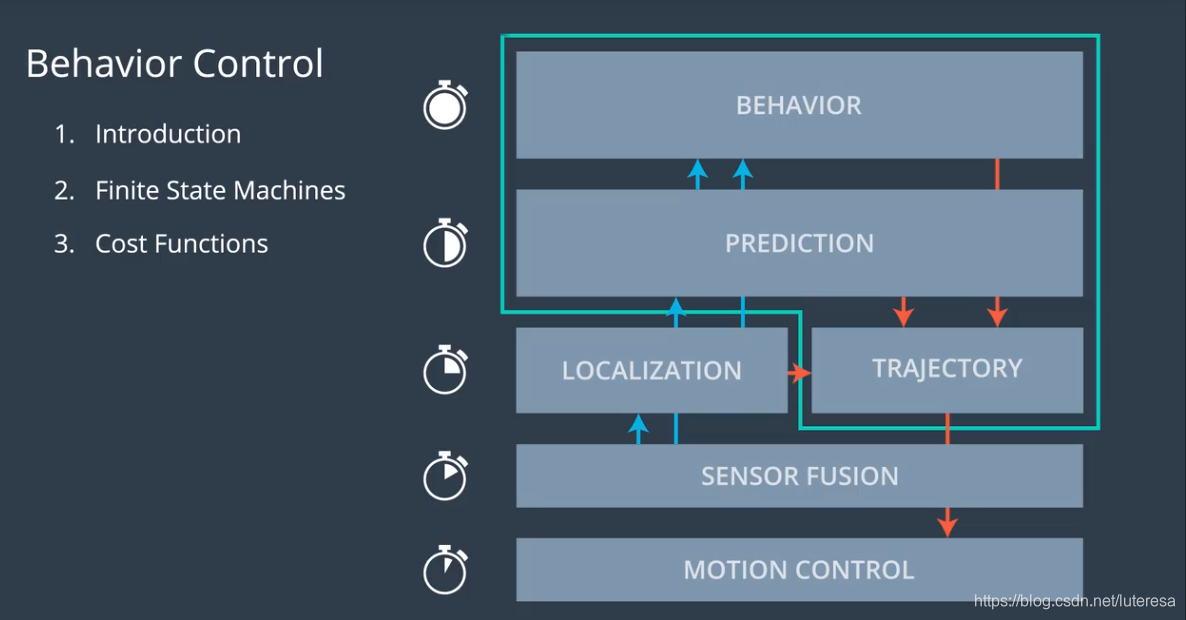

行为规划的内容包括车辆如何生成安全的、可行驶的轨迹以到达目的地:

我们会使用计算机视觉和传感器融合得到的数据,来理解我们周围的环境,也将使用从定位模块得到的数据来精确理解我们具体置身何处,路径规划使用所有这些数据来决定下一步执行何种动作,

然后,路径规划构造出一条轨迹,让控制器去执行。

无人驾驶系统的规划层包括三层结构:

算法在任务规划中应用非常广泛,作为离散空间搜索算法, 算法多用于离散空间最优路径搜索问题的解决方案;

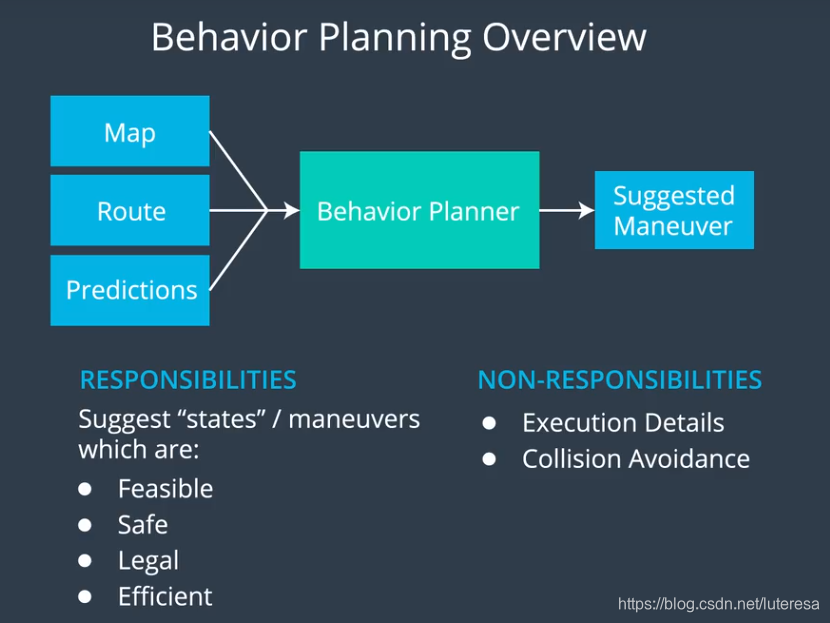

行为规划,是无人车系统决策的核心部分,通常使用有限状态机来设计一个行为决策模型。

行为规划器目前是个黑盒子,它把地图数据,目的地路线,作为输入,结合周围环境中静态或者动态障碍物的下一步可能动向,

生成无人车的下一个动作,对此动作,会有规划器负责平滑,安全,无碰撞的执行。

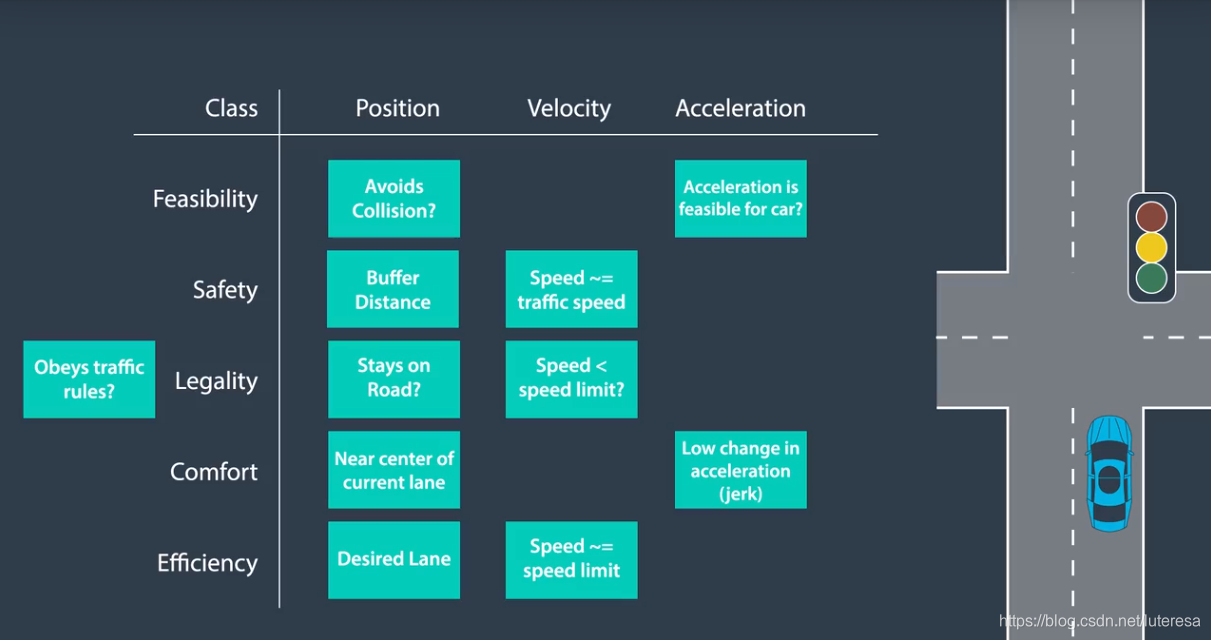

行为模块的职责,是提出一些建议动作,这些动作因该是可行的、最安全的、不会违规的,高效的,但不负责动作执行的细节。也不负责进行碰撞检测。

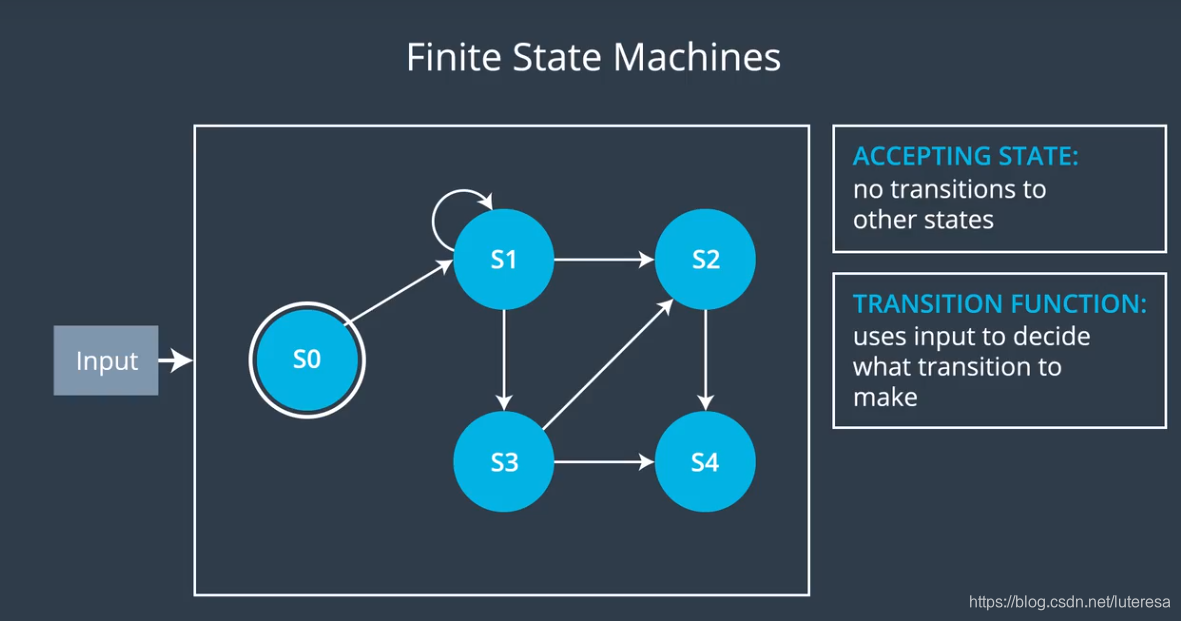

我们使用“有限状态机”来解决行为规划问题。

有限状态机

有限状态机基于有限的离散状态来做决策,

有限状态机是一个非常简单的抽象反应系统,因为它只针对特定的外界输入产生数量有限的相应。

其核心思想是,通过有限的状态描述定义,组合产生大量的复杂的逻辑行为。

一个有限状态机通常包括,输入集合,输出集合,转换逻辑;每个状态机根据是否有输出可以分为两类,接收器(Acceptor)和变换器(Transducer)。如上图中,S4是接收器,其他是转换器。

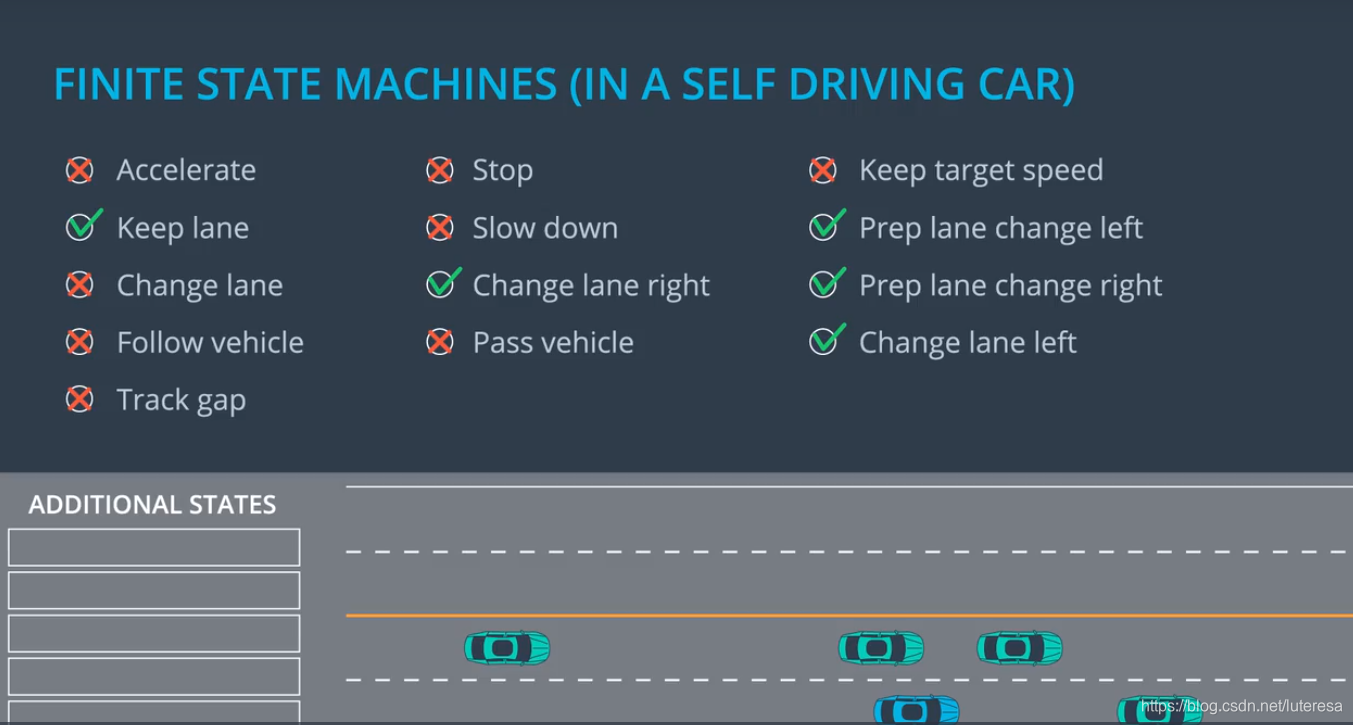

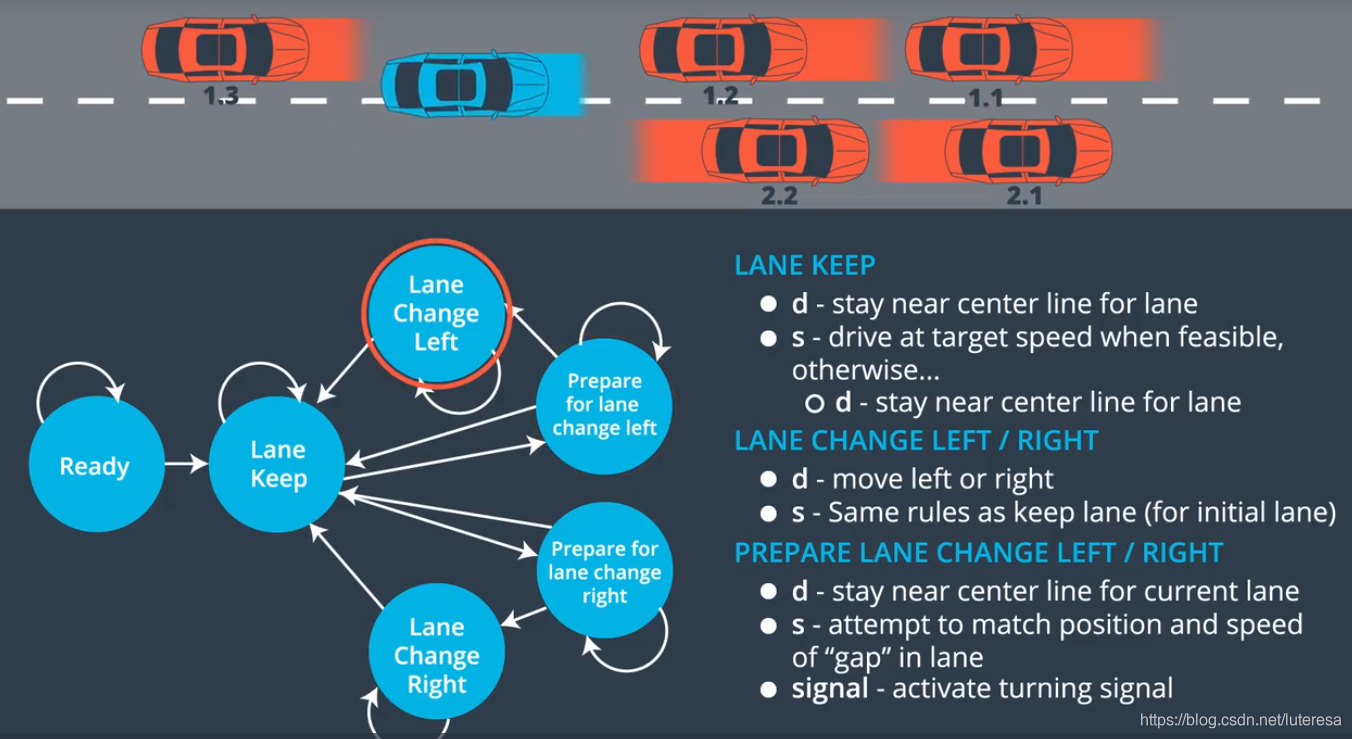

在道路行驶场景中,可以用5个状态来组合表达无人车的所有可能驾驶动作。

设计状态机如下图:

转换函数

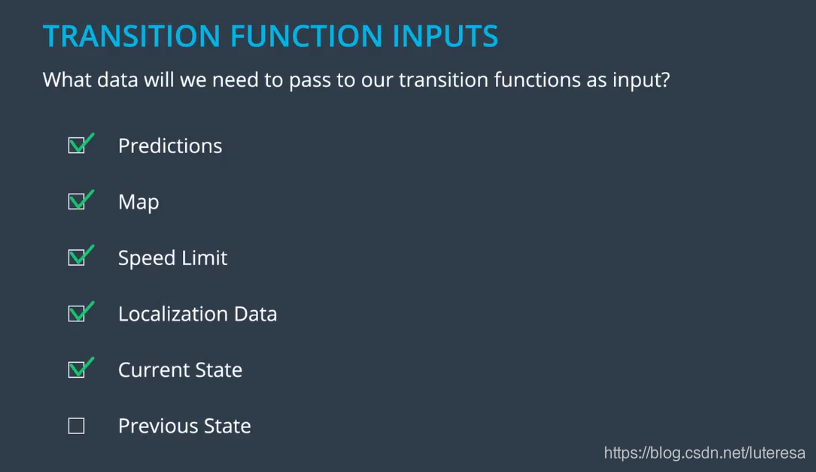

行为规划的状态机需要输入的数据是

路径规划实现伪代码:

def transition_function(predictions, current_fsm_state, current_pose, cost_functions, weights):

# only consider states which can be reached from current FSM state.

possible_successor_states = successor_states(current_fsm_state)

# keep track of the total cost of each state.

costs = []

for state in possible_successor_states:

# generate a rough idea of what trajectory we would

# follow IF we chose this state.

trajectory_for_state = generate_trajectory(state, current_pose, predictions)

# calculate the "cost" associated with that trajectory.

cost_for_state = 0

for i in range(len(cost_functions)) :

# apply each cost function to the generated trajectory

cost_function = cost_functions[i]

cost_for_cost_function = cost_function(trajectory_for_state, predictions)

# multiply the cost by the associated weight

weight = weights[i]

cost_for_state += weight * cost_for_cost_function

costs.append({'state' : state, 'cost' : cost_for_state})

# Find the minimum cost state.

best_next_state = None

min_cost = 9999999

for i in range(len(possible_successor_states)):

state = possible_successor_states[i]

cost = costs[i]

if cost < min_cost:

min_cost = cost

best_next_state = state

return best_next_state

成本函数

在状态机转换过程函数中,必须用到一个值,就是成本函数,即在可行的转换状态中,选择最优的状态。我们希望设计合理的成本函数,可以对事物作出正确评估。

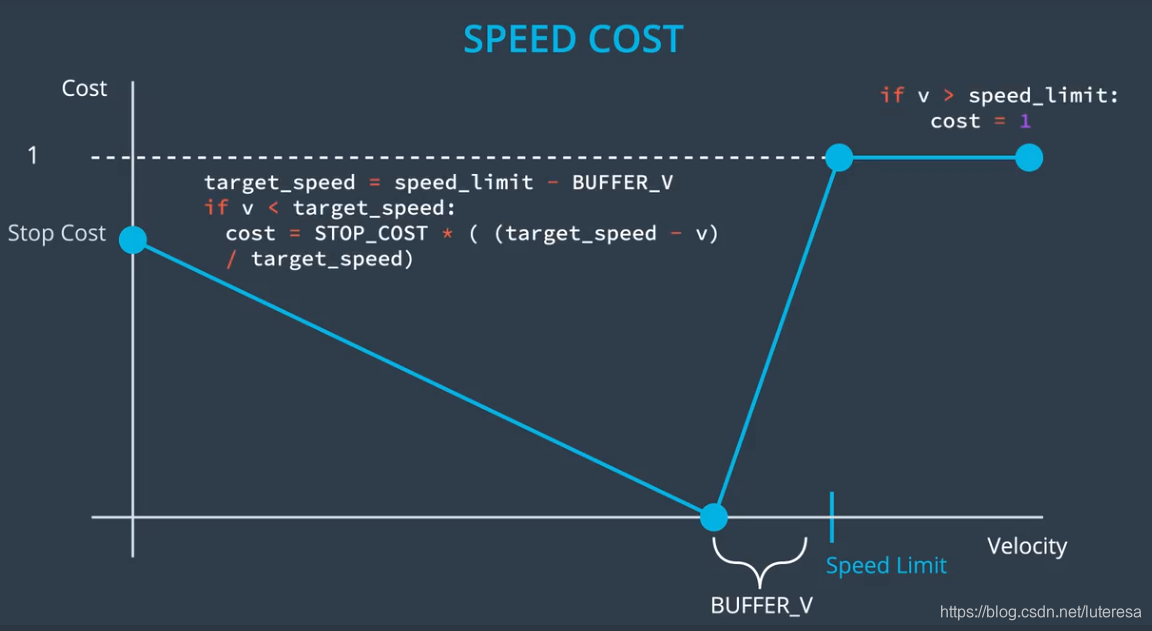

如何为车速设计成本函数

一方面想尽快到达目的地,一方面又不能超速,需要的控制量就是汽车的期望车速,假设成本函数值是(0~1)之间,且成本函数是线性的,通过调整成本函数的权重,从而调节成本函数的相对重要性.

设计一个车速和成本函数的关系图图下:

一个实例,假设无人车位于左下角,目的地是右下角的G点,无人车前方有一个车辆,但是速度非常慢;

这时无人车的可选项有两个,一是keep lane直到终点,二是先变道左侧,超过前车,再变道回目的车道,快速行驶至目的地。

这两个轨迹,哪个更优?我们用两个参数来建模:

:距离目的地纵向距离;

:距离目的地横向距离;

代码实现:

double goal_distance_cost(int goal_lane, int intended_lane, int final_lane,

double distance_to_goal) {

// The cost increases with both the distance of intended lane from the goal

// and the distance of the final lane from the goal. The cost of being out

// of the goal lane also becomes larger as the vehicle approaches the goal.

int delta_d = 2.0 * goal_lane - intended_lane - final_lane;

double cost = 1 - exp(-(std::abs(delta_d) / distance_to_goal));

return cost;

}

大部分情况下,一个简单的代价函数不能较好的获得复杂的车辆行为。代价函数可以有多个实现,比如针对以下需求设计代价函数

Your task in the implementation will be to create a cost function that satisifes:

The cost decreases as both intended lane and final lane are higher speed lanes.

The cost function provides different costs for each possible behavior: KL, PLCR/PLCL, LCR/LCL.

The values produced by the cost function are in the range 0 to 1.

double inefficiency_cost(int target_speed, int intended_lane, int final_lane,

const std::vector<int> &lane_speeds) {

// Cost becomes higher for trajectories with intended lane and final lane

// that have traffic slower than target_speed.

double speed_intended = lane_speeds[intended_lane];

double speed_final = lane_speeds[final_lane];

double cost = (2.0*target_speed - speed_intended - speed_final)/target_speed;

return cost;

}

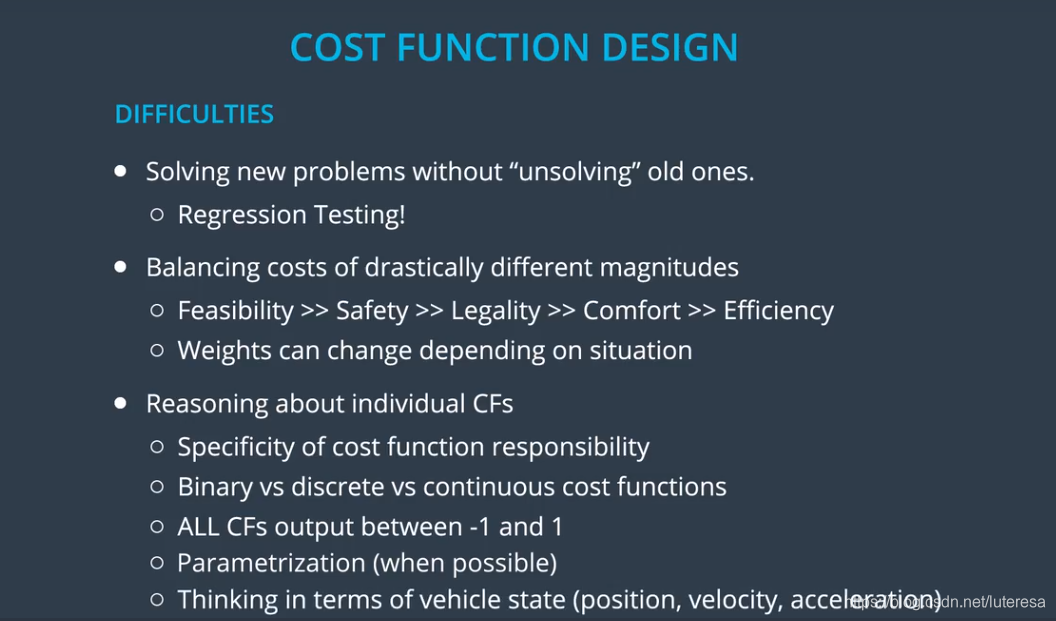

设计成本函数是一个困难的工作,而将它们组合起来以生成可用的车辆行为则是非常困难的工作,

其中一些困难与成本函数设计有关,既要解决新问题,又不会影响老问题;

针对不同场景,需调节不同参数

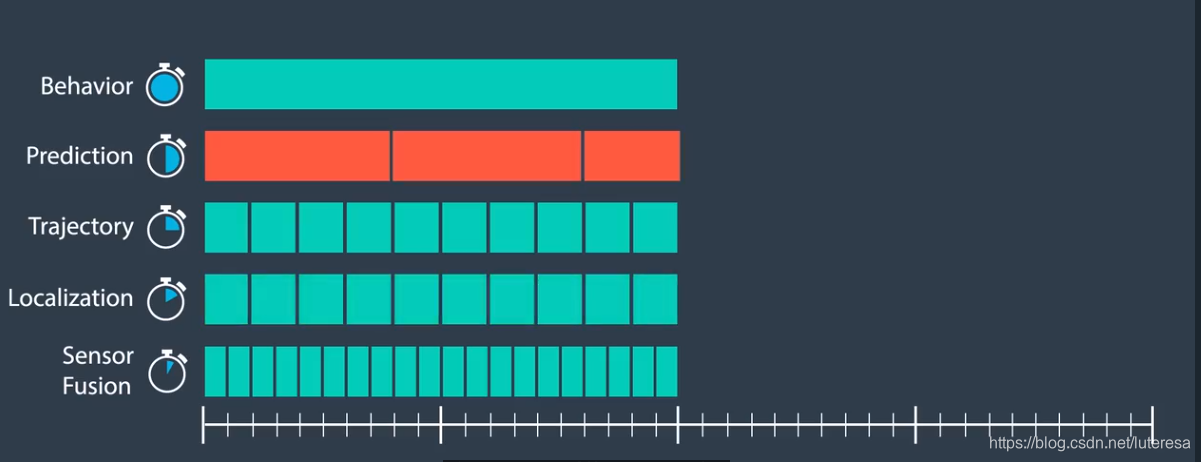

时序调度问题

由于行为模块相比其他模块,更新频率较低,而整个系统不允许存在某个特别慢的模块,这会阻止其他模块正确运行。

解决同步问题方法:比如行为模块周期更新时,预测模块循环尚未完成,

这时,如果行为模块选择等待,则会阻塞整个循环管线,影响下游模块,正确做法是使用现有数据,并接受这些数据并非最新数据的事实。

有限状态机是行为规划问题的一种解决方案,在状态空间较小情况下,优先状态机通常工作的很好。例如在高速公路上驾驶,

但对于更复杂的场景,比如城市里驾驶,其他方法可能更适用,

Additional Resources on Path Planning

Indoors

Intention-Net: Integrating Planning and Deep Learning for Goal-Directed Autonomous Navigation by S. W. Gao, et. al.

Abstract: How can a delivery robot navigate reliably to a destination in a new office building, with minimal prior information? To tackle this challenge, this paper introduces a two-level hierarchical approach, which integrates model-free deep learning and model-based path planning. At the low level, a neural-network motion controller, called the intention-net, is trained end-to-end to provide robust local navigation. The intention-net maps images from a single monocular camera and “intentions” directly to robot controls. At the high level, a path planner uses a crude map, e.g., a 2-D floor plan, to compute a path from the robot’s current location to the goal. The planned path provides intentions to the intention-net. Preliminary experiments suggest that the learned motion controller is robust against perceptual uncertainty and by integrating with a path planner, it generalizes effectively to new environments and goals.

City Navigation

Learning to Navigate in Cities Without a Map by P. Mirowski, et. al.

Abstract: Navigating through unstructured environments is a basic capability of intelligent creatures, and thus is of fundamental interest in the study and development of artificial intelligence. Long-range navigation is a complex cognitive task that relies on developing an internal representation of space, grounded by recognizable landmarks and robust visual processing, that can simultaneously support continuous self-localization (“I am here”) and a representation of the goal (“I am going there”). Building upon recent research that applies deep reinforcement learning to maze navigation problems, we present an end-to-end deep reinforcement learning approach that can be applied on a city scale. […] We present an interactive navigation environment that uses Google StreetView for its photographic content and worldwide coverage, and demonstrate that our learning method allows agents to learn to navigate multiple cities and to traverse to target destinations that may be kilometers away. […]

Intersections

A Look at Motion Planning for Autonomous Vehicles at an Intersection by S. Krishnan, et. al.

Abstract: Autonomous Vehicles are currently being tested in a variety of scenarios. As we move towards Autonomous Vehicles, how should intersections look? To answer that question, we break down an intersection management into the different conundrums and scenarios involved in the trajectory planning and current approaches to solve them. Then, a brief analysis of current works in autonomous intersection is conducted. With a critical eye, we try to delve into the discrepancies of existing solutions while presenting some critical and important factors that have been addressed. Furthermore, open issues that have to be addressed are also emphasized. We also try to answer the question of how to benchmark intersection management algorithms by providing some factors that impact autonomous navigation at intersection.

Planning in Traffic with Deep Reinforcement Learning

DeepTraffic: Crowdsourced Hyperparameter Tuning of Deep Reinforcement Learning Systems for Multi-Agent Dense Traffic Navigation by L. Fridman, J. Terwilliger and B. Jenik

Abstract: We present a traffic simulation named DeepTraffic where the planning systems for a subset of the vehicles are handled by a neural network as part of a model-free, off-policy reinforcement learning process. The primary goal of DeepTraffic is to make the hands-on study of deep reinforcement learning accessible to thousands of students, educators, and researchers in order to inspire and fuel the exploration and evaluation of deep Q-learning network variants and hyperparameter configurations through large-scale, open competition. This paper investigates the crowd-sourced hyperparameter tuning of the policy network that resulted from the first iteration of the DeepTraffic competition where thousands of participants actively searched through the hyperparameter space.