1 广度优先搜索算法(Breadth-First_Search)

核心思想是,从起始节点开始,将它的所有Neigbors加入到下一步要搜索的预备队列中;

然后从预备队列按一定规则选出一个节点,重复上一步骤;直到找到目的节点。

1.1涉及到的数据结构

Graph: 有向图,每个节点可以指向的下一个临近节点组成一个列表;

数组: 存放待遍历的节点,常用队列来实现;

Visited列表:存放已经遍历过的节点,避免遍历陷入死循环;

1.2如何生成路线

遍历扩展的过程中,每个节点都保存其来源,待搜索遍历完成,通过这些记录进行回溯,就可以得到完整的路径。

1.3有方向地进行搜索(启发式)

BFS的扩展朝着所有方向,呈现同心圆状从起点向外扩展,非常蛮力地遍历整张图,直到终点出现。

用启发式搜索,核心思想是,充分利用我们始终已知当前点和终点坐标这一信息,让扩展优先朝向终点方向进行,

也就是在数组中,按当前节点到终点距离排序,值越小,优先级越高。

2 Dijkstra算法

从起点到终点可能存在多条路径,BFS通过Visited来避免重复遍历,这也同时导致了默认最早遍历的路径当成最短路径。

Dijkstra算法的主要思想是, :

我们记录每个点从起点遍历到它所花费的当前最少长度,当我们通过另外一条路径再次遍历到这个点的时候,由于该点已经被遍历过了(已经加入了came_from数组),我们此时不再直接跳过该点,而是比较一下目前的路径是否比该点最初遍历的路径花费更少,如果是这样,那就将该点纳入到新的路径当中去(修改该点在came_from中的值)。

按保存的代价值,对数组排序,值越小,优先级越高。

3 算法

A start算法综合启发式算法和Dijkstra算法优点,既优先向目的点方向扩展,也尽可能最短路径。

将启发函数设计为:

g(n):从起点到当前节点的代价值;

h(n):从当前节点到终点的代价值;

f(n)值越小,优先级越高;

注:h(n)不需要很精确,一般估算值小于或等于实际值。

# ----------

# User Instructions:

#

# Define a function, search() that returns a list

# in the form of [optimal path length, row, col]. For

# the grid shown below, your function should output

# [11, 4, 5].

#

# If there is no valid path from the start point

# to the goal, your function should return the string

# 'fail'

# ----------

# Grid format:

# 0 = Navigable space

# 1 = Occupied space

grid = [[0, 1, 0, 0, 0, 0],

[0, 1, 0, 0, 0, 0],

[0, 1, 0, 0, 0, 0],

[0, 1, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0]]

init = [0, 0]

goal = [len(grid)-1, len(grid[0])-1]

cost = 1

delta = [[-1, 0], # go up

[ 0,-1], # go left

[ 1, 0], # go down

[ 0, 1]] # go right

delta_name = ['^', '<', 'v', '>']

print("goal:",goal)

print(len(grid),len(grid[0]))

# h的值全部为0,A star算法退回到上面普通搜索算法

#heuristic = [[0 for row in range(len(grid[0]))] for col in range(len(grid))]

def search_A_star(grid,init,goal,cost):

# ----------------------------------------

# modify the code below

# ----------------------------------------

closed = [[0 for col in range(len(grid[0]))] for row in range(len(grid))]

closed[init[0]][init[1]] = 1

expand = [[-1 for col in range(len(grid[0]))] for row in range(len(grid))]

action = [[-1 for col in range(len(grid[0]))] for row in range(len(grid))]

x = init[0]

y = init[1]

g = 0

h = heuristic[x][y]

f = g + h

open = [[f, g, h, x, y]]

found = False # flag that is set when search is complete

resign = False # flag set if we can't find expand

count = 0

while not found and not resign:

if len(open) == 0:

resign = True

return "Fail"

else:

open.sort()

open.reverse()

next = open.pop()

f = next[0]

g = next[1]

h = next[2]

x = next[3]

y = next[4]

expand[x][y] = count

count += 1

if x == goal[0] and y == goal[1]:

found = True

else:

for i in range(len(delta)):

x2 = x + delta[i][0]

y2 = y + delta[i][1]

if x2 >= 0 and x2 < len(grid) and y2 >=0 and y2 < len(grid[0]):

if closed[x2][y2] == 0 and grid[x2][y2] == 0:

g2 = g + cost

h2 = heuristic[x2][y2]

f2 = g2 + h2

open.append([f2, g2, h2, x2, y2])

closed[x2][y2] = 1

action[x2][y2] = i

for i in range(len(expand)):

print(expand[i])

policy = [['0' for row in range(len(grid[0]))] for col in range(len(grid))]

x = goal[0]

y = goal[1]

path = [[x,y]]

policy[x][y] = '*'

while x!= init[0] or y != init[1]:

x2 = x - delta[action[x][y]][0]

y2 = y - delta[action[x][y]][1]

policy[x2][y2] = delta_name[action[x][y]]

x = x2

y = y2

path.append((x,y))

for i in range(len(policy)):

print(policy[i])

path.reverse()

print(path) #打印最优路径

return expand

result = search_A_star(grid,init,goal,cost)

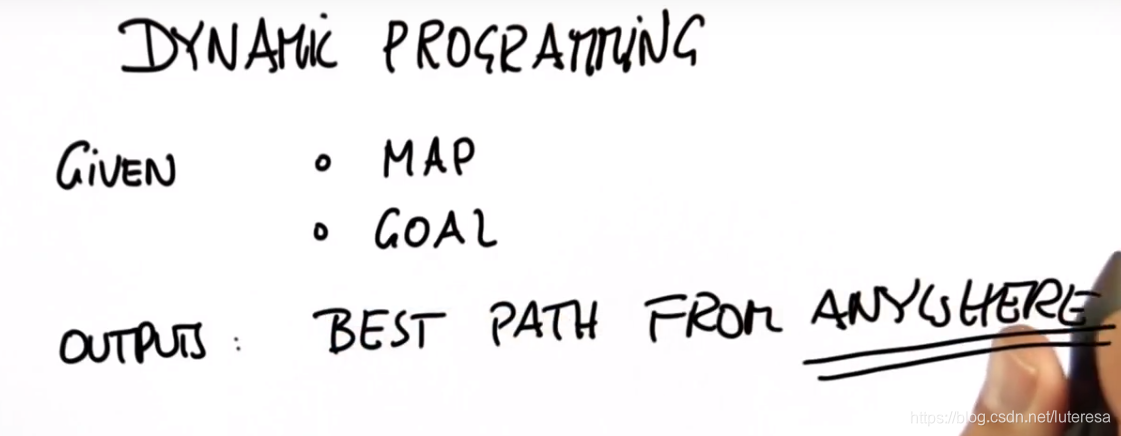

4 Dynamic Programming

动态规划算法:不限于单个起点,应用于任意起点位置,搜索到目的点的最短路径。

因为无人车行驶的是一个随机的环境。

# ----------

# User Instructions:

#

# Write a function optimum_policy that returns

# a grid which shows the optimum policy for robot

# motion. This means there should be an optimum

# direction associated with each navigable cell from

# which the goal can be reached.

#

# Unnavigable cells as well as cells from which

# the goal cannot be reached should have a string

# containing a single space (' '), as shown in the

# previous video. The goal cell should have '*'.

# ----------

grid = [[0, 1, 0, 0, 0, 0],

[0, 1, 0, 0, 0, 0],

[0, 1, 0, 0, 0, 0],

[0, 1, 0, 0, 0, 0],

[0, 0, 0, 0, 1, 0]]

init = [0, 0]

goal = [len(grid)-1, len(grid[0])-1]

cost = 1 # the cost associated with moving from a cell to an adjacent one

delta = [[-1, 0 ], # go up

[ 0, -1], # go left

[ 1, 0 ], # go down

[ 0, 1 ]] # go right

delta_name = ['^', '<', 'v', '>']

def optimum_policy(grid,goal,cost):

# ----------------------------------------

# modify code below

# ----------------------------------------

value = [[99 for row in range(len(grid[0]))] for col in range(len(grid))]

policy = [[' ' for row in range(len(grid[0]))] for col in range(len(grid))]

change = True

while change:

change = False

print("-"*88)

for item in value:

print(item)

for x in range(len(grid)):

for y in range(len(grid[0])):

if goal[0] == x and goal[1] == y:

if value[x][y] > 0:

value[x][y] = 0

policy[x][y] = '*'

print("policy end,***")

change = True

elif grid[x][y] == 0:

for a in range(len(delta)):

x2 = x + delta[a][0]

y2 = y + delta[a][1]

if x2 >= 0 and x2 < len(grid) and y2 >= 0 and y2 < len(grid[0]) and grid[x2][y2] == 0:

v2 = value[x2][y2] + cost

#print("v2={},value[{}][{}]={}".format(v2,x,y,value[x][y]))

if v2 < value[x][y]:

print("v2={},value[{}][{}]={}".format(v2,x,y,value[x][y]))

change = True

value[x][y] = v2

policy[x][y] = delta_name[a] #打印出任意点到目标点的最优方案

print("grid:")

for i in range(len(grid)):

print(grid[i])

print("value:")

for i in range(len(value)):

print(value[i])

print("policy")

for i in range(len(policy)):

print(policy[i])

return policy

ret = optimum_policy(grid, goal, cost)

----------------------------------------------------------------------------------------

[99, 99, 99, 99, 99, 99]

[99, 99, 99, 99, 99, 99]

[99, 99, 99, 99, 99, 99]

[99, 99, 99, 99, 99, 99]

[99, 99, 99, 99, 99, 99]

policy end,***

----------------------------------------------------------------------------------------

[99, 99, 99, 99, 99, 99]

[99, 99, 99, 99, 99, 99]

[99, 99, 99, 99, 99, 99]

[99, 99, 99, 99, 99, 99]

[99, 99, 99, 99, 99, 0]

v2=1,value[3][5]=99

----------------------------------------------------------------------------------------

[99, 99, 99, 99, 99, 99]

[99, 99, 99, 99, 99, 99]

[99, 99, 99, 99, 99, 99]

[99, 99, 99, 99, 99, 1]

[99, 99, 99, 99, 99, 0]

v2=2,value[2][5]=99

v2=2,value[3][4]=99

----------------------------------------------------------------------------------------

[99, 99, 99, 99, 99, 99]

[99, 99, 99, 99, 99, 99]

[99, 99, 99, 99, 99, 2]

[99, 99, 99, 99, 2, 1]

[99, 99, 99, 99, 99, 0]

v2=3,value[1][5]=99

v2=3,value[2][4]=99

v2=3,value[3][3]=99

v2=4,value[4][3]=99

----------------------------------------------------------------------------------------

[99, 99, 99, 99, 99, 99]

[99, 99, 99, 99, 99, 3]

[99, 99, 99, 99, 3, 2]

[99, 99, 99, 3, 2, 1]

[99, 99, 99, 4, 99, 0]

v2=4,value[0][5]=99

v2=4,value[1][4]=99

v2=4,value[2][3]=99

v2=4,value[3][2]=99

v2=5,value[4][2]=99

----------------------------------------------------------------------------------------

[99, 99, 99, 99, 99, 4]

[99, 99, 99, 99, 4, 3]

[99, 99, 99, 4, 3, 2]

[99, 99, 4, 3, 2, 1]

[99, 99, 5, 4, 99, 0]

v2=5,value[0][4]=99

v2=5,value[1][3]=99

v2=5,value[2][2]=99

v2=6,value[4][1]=99

----------------------------------------------------------------------------------------

[99, 99, 99, 99, 5, 4]

[99, 99, 99, 5, 4, 3]

[99, 99, 5, 4, 3, 2]

[99, 99, 4, 3, 2, 1]

[99, 6, 5, 4, 99, 0]

v2=6,value[0][3]=99

v2=6,value[1][2]=99

v2=7,value[4][0]=99

----------------------------------------------------------------------------------------

[99, 99, 99, 6, 5, 4]

[99, 99, 6, 5, 4, 3]

[99, 99, 5, 4, 3, 2]

[99, 99, 4, 3, 2, 1]

[7, 6, 5, 4, 99, 0]

v2=7,value[0][2]=99

v2=8,value[3][0]=99

----------------------------------------------------------------------------------------

[99, 99, 7, 6, 5, 4]

[99, 99, 6, 5, 4, 3]

[99, 99, 5, 4, 3, 2]

[8, 99, 4, 3, 2, 1]

[7, 6, 5, 4, 99, 0]

v2=9,value[2][0]=99

----------------------------------------------------------------------------------------

[99, 99, 7, 6, 5, 4]

[99, 99, 6, 5, 4, 3]

[9, 99, 5, 4, 3, 2]

[8, 99, 4, 3, 2, 1]

[7, 6, 5, 4, 99, 0]

v2=10,value[1][0]=99

----------------------------------------------------------------------------------------

[99, 99, 7, 6, 5, 4]

[10, 99, 6, 5, 4, 3]

[9, 99, 5, 4, 3, 2]

[8, 99, 4, 3, 2, 1]

[7, 6, 5, 4, 99, 0]

v2=11,value[0][0]=99

----------------------------------------------------------------------------------------

[11, 99, 7, 6, 5, 4]

[10, 99, 6, 5, 4, 3]

[9, 99, 5, 4, 3, 2]

[8, 99, 4, 3, 2, 1]

[7, 6, 5, 4, 99, 0]

grid:

[0, 1, 0, 0, 0, 0]

[0, 1, 0, 0, 0, 0]

[0, 1, 0, 0, 0, 0]

[0, 1, 0, 0, 0, 0]

[0, 0, 0, 0, 1, 0]

value:

[11, 99, 7, 6, 5, 4]

[10, 99, 6, 5, 4, 3]

[9, 99, 5, 4, 3, 2]

[8, 99, 4, 3, 2, 1]

[7, 6, 5, 4, 99, 0]

policy

['v', ' ', 'v', 'v', 'v', 'v']

['v', ' ', 'v', 'v', 'v', 'v']

['v', ' ', 'v', 'v', 'v', 'v']

['v', ' ', '>', '>', '>', 'v']

['>', '>', '^', '^', ' ', '*']

5.Left Trun Policy

在实际驾驶场景中,还可以对不同路径添加相应权重,比如左转一般比较费,可以加大代价函数值;

# ----------

# User Instructions:

#

# Implement the function optimum_policy2D below.

#

# You are given a car in grid with initial state

# init. Your task is to compute and return the car's

# optimal path to the position specified in goal;

# the costs for each motion are as defined in cost.

#

# There are four motion directions: up, left, down, and right.

# Increasing the index in this array corresponds to making a

# a left turn, and decreasing the index corresponds to making a

# right turn.

forward = [[-1, 0], # go up

[ 0, -1], # go left

[ 1, 0], # go down

[ 0, 1]] # go right

forward_name = ['up', 'left', 'down', 'right']

# action has 3 values: right turn, no turn, left turn

action = [-1, 0, 1]

action_name = ['R', '#', 'L']

# EXAMPLE INPUTS:

# grid format:

# 0 = navigable space

# 1 = unnavigable space

grid = [[1, 1, 1, 0, 0, 0],

[1, 1, 1, 0, 1, 0],

[0, 0, 0, 0, 0, 0],

[1, 1, 1, 0, 1, 1],

[1, 1, 1, 0, 1, 1]]

init = [4, 3, 0] # given in the form [row,col,direction]

# direction = 0: up

# 1: left

# 2: down

# 3: right

goal = [2, 0] # given in the form [row,col]

cost = [2, 1, 20] # cost has 3 values, corresponding to making

# a right turn, no turn, and a left turn

# EXAMPLE OUTPUT:

# calling optimum_policy2D with the given parameters should return

# [[' ', ' ', ' ', 'R', '#', 'R'],

# [' ', ' ', ' ', '#', ' ', '#'],

# ['*', '#', '#', '#', '#', 'R'],

# [' ', ' ', ' ', '#', ' ', ' '],

# [' ', ' ', ' ', '#', ' ', ' ']]

# ----------

# ----------------------------------------

# modify code below

# ----------------------------------------

def optimum_policy2D(grid,init,goal,cost):

#四组value值,表示四个不同起始方向

value = [[[999 for row in range(len(grid[0]))] for col in range(len(grid))],

[[999 for row in range(len(grid[0]))] for col in range(len(grid))],

[[999 for row in range(len(grid[0]))] for col in range(len(grid))],

[[999 for row in range(len(grid[0]))] for col in range(len(grid))]]

policy = [[[' ' for row in range(len(grid[0]))] for col in range(len(grid))],

[[' ' for row in range(len(grid[0]))] for col in range(len(grid))],

[[' ' for row in range(len(grid[0]))] for col in range(len(grid))],

[[' ' for row in range(len(grid[0]))] for col in range(len(grid))]]

policy2D = [[' ' for row in range(len(grid[0]))] for col in range(len(grid))]

change = True

while change:

change = False

for x in range(len(grid)):

for y in range(len(grid[0])):

for orientation in range(4):

if goal[0] == x and goal[1] == y:

if value[orientation][x][y] > 0:

value[orientation][x][y] = 0

policy[orientation][x][y] = '*'

print("policy end,***")

change = True

elif grid[x][y] == 0:

for i in range(3):

o2 = (orientation + action[i])%4 # -1%4=3

x2 =x + forward[o2][0]

y2 = y + forward[o2][1]

if x2 >= 0 and x2 < len(grid) and y2 >= 0 and y2 < len(grid[0]) and grid[x2][y2] == 0:

v2 = value[o2][x2][y2] + cost[i]

if v2 < value[orientation][x][y]:

change = True

value[orientation][x][y] = v2

policy[orientation][x][y] = action_name[i]

#policy存储任意起点到目标点的动作

for i in range(len(policy)):

print(forward_name[i])

for j in range(len(policy[0])):

print(policy[i][j])

print("-"*88)

print("="*88)

#print(policy)

x = init[0]

y = init[1]

orientation = init[2]

policy2D[x][y] = policy[orientation][x][y]

while policy[orientation][x][y] != '*':

if policy[orientation][x][y] == '#':

o2 = orientation

elif policy[orientation][x][y] == 'R':

o2 = (orientation -1)%4

elif policy[orientation][x][y] == 'L':

o2 = (orientation +1)%4

x = x + forward[o2][0]

y = y + forward[o2][1]

orientation = o2

policy2D[x][y] = policy[orientation][x][y]

return policy2D

optimum_policy2D(grid, init, goal, cost)

policy end,***

policy end,***

policy end,***

policy end,***

up

[' ', ' ', ' ', 'R', 'R', 'L']

[' ', ' ', ' ', '#', ' ', '#']

['*', 'L', 'L', '#', 'L', 'L']

[' ', ' ', ' ', '#', ' ', ' ']

[' ', ' ', ' ', '#', ' ', ' ']

----------------------------------------------------------------------------------------

left

[' ', ' ', ' ', 'L', '#', '#']

[' ', ' ', ' ', 'R', ' ', 'L']

['*', '#', '#', '#', '#', '#']

[' ', ' ', ' ', 'R', ' ', ' ']

[' ', ' ', ' ', 'R', ' ', ' ']

----------------------------------------------------------------------------------------

down

[' ', ' ', ' ', '#', 'R', '#']

[' ', ' ', ' ', '#', ' ', '#']

['*', 'R', 'R', 'R', 'R', 'R']

[' ', ' ', ' ', ' ', ' ', ' ']

[' ', ' ', ' ', ' ', ' ', ' ']

----------------------------------------------------------------------------------------

right

[' ', ' ', ' ', 'R', '#', 'R']

[' ', ' ', ' ', 'R', ' ', 'R']

['*', '#', '#', 'L', '#', 'L']

[' ', ' ', ' ', 'L', ' ', ' ']

[' ', ' ', ' ', 'L', ' ', ' ']

----------------------------------------------------------------------------------------

========================================================================================

[[' ', ' ', ' ', 'R', '#', 'R'],

[' ', ' ', ' ', '#', ' ', '#'],

['*', '#', '#', '#', '#', 'R'],

[' ', ' ', ' ', '#', ' ', ' '],

[' ', ' ', ' ', '#', ' ', ' ']]