    # author: "xian"
    # date: 2018/5/2
    import json
    import re

    import requests
    from requests.exceptions import RequestException


    def get_one_page(url):
        """Fetch a page and return its HTML, or None on any failure."""
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.139 Safari/537.36',
        }
        try:
            # A timeout keeps the script from hanging on a stalled connection.
            response = requests.get(url, headers=headers, timeout=10)
            if response.status_code == 200:
                return response.text
            return None
        except RequestException:
            return None


    def parse_one_page(html):
        """Yield one dict per film found in the board page HTML."""
        # Raw strings keep \d from being treated as a string escape.
        pattern = re.compile(
            r'<dd>.*?board-index.*?>(\d+)</i>.*?data-src="(.*?)"'
            r'.*?name"><a.*?>(.*?)</a>.*?star">(.*?)</p>.*?releasetime">(.*?)</p>'
            r'.*?integer">(.*?)</i>.*?fraction">(\d+)</i>.*?</dd>', re.S)

        for item in pattern.findall(html):
            yield {
                'index': item[0],
                'image': item[1],
                'title': item[2],
                # Drop the leading 3-character label (e.g. "主演：").
                'authors': item[3].strip()[3:],
                # Drop the leading 5-character label (e.g. "上映时间：").
                'time': item[4].strip()[5:],
                # Integer and fraction parts are separate elements on the page.
                'rating': item[5] + item[6],
            }


    def write_to_file(content):
        # Append one JSON object per line; the with block closes the file.
        with open('result.txt', 'a', encoding='utf8') as f:
            f.write(json.dumps(content, ensure_ascii=False) + '\n')


    def main():
        url = 'http://maoyan.com/board'
        html = get_one_page(url)
        if html is None:
            # get_one_page returns None on a non-200 status or a request error.
            print('Request failed:', url)
            return
        for item in parse_one_page(html):
            print(item)
            write_to_file(item)


    if __name__ == '__main__':
        main()
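
To see what the regular expression in `parse_one_page` captures without hitting the network, it can be run against a small hand-written snippet. The sketch below is only an illustration: `SAMPLE_HTML` is a made-up `<dd>` entry that imitates the structure the pattern expects (it is not copied from the real page), and `parse_sample` is a hypothetical helper that repeats the same pattern and field slicing.

```python
import re

# Hypothetical markup, shaped to match the pattern; the real page may differ.
SAMPLE_HTML = '''
<dd>
  <i class="board-index board-index-1">1</i>
  <a href="/films/1" class="image-link">
    <img data-src="http://example.com/poster.jpg" alt="Example Film" />
  </a>
  <p class="name"><a href="/films/1" title="Example Film">Example Film</a></p>
  <p class="star">
    主演：Actor A,Actor B
  </p>
  <p class="releasetime">上映时间：2018-05-02</p>
  <p class="score"><i class="integer">9.</i><i class="fraction">5</i></p>
</dd>
'''

# Same pattern as in the script, written with raw strings.
PATTERN = re.compile(
    r'<dd>.*?board-index.*?>(\d+)</i>.*?data-src="(.*?)"'
    r'.*?name"><a.*?>(.*?)</a>.*?star">(.*?)</p>.*?releasetime">(.*?)</p>'
    r'.*?integer">(.*?)</i>.*?fraction">(\d+)</i>.*?</dd>', re.S)


def parse_sample(html):
    """Return a list of dicts, one per <dd> entry matched by PATTERN."""
    results = []
    for item in PATTERN.findall(html):
        results.append({
            'index': item[0],
            'image': item[1],
            'title': item[2],
            'authors': item[3].strip()[3:],  # strip whitespace, drop the 3-char label
            'time': item[4].strip()[5:],     # drop the 5-char label
            'rating': item[5] + item[6],     # join integer and fraction parts
        })
    return results


if __name__ == '__main__':
    for film in parse_sample(SAMPLE_HTML):
        print(film)
```

Because `re.S` lets `.` match newlines, each `.*?` can skip over the tags and line breaks between two fields. The pattern is therefore tied to the order of the elements on the page and would need adjusting if the markup changes.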

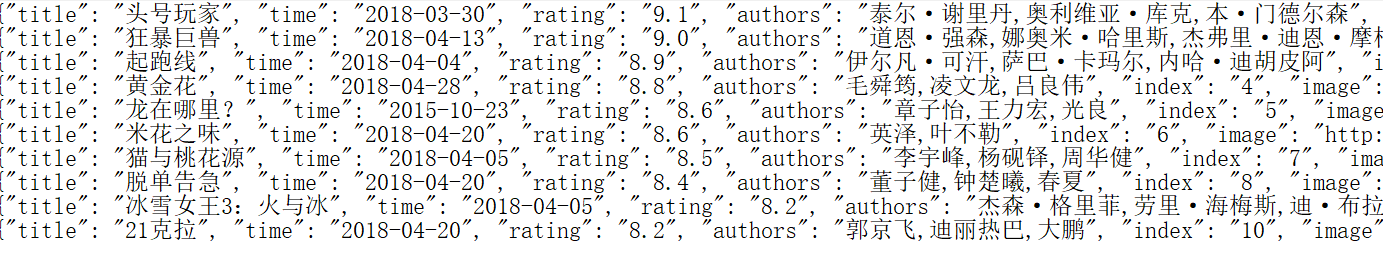

Partial screenshot of the output: