See the following two links for reference:

https://blog.csdn.net/weixin_42398658/article/details/84392845

https://blog.csdn.net/stdcoutzyx/article/details/41596663

import matplotlib as mpl
import matplotlib.pyplot as plt
%matplotlib inline
import numpy as np
import sklearn
import pandas as pd
import os
import sys
import time
import tensorflow as tf
from tensorflow import keras

print(tf.__version__)
print(sys.version_info)
for module in mpl, np, pd, sklearn, tf, keras:
    print(module.__name__, module.__version__)

2.1.0

sys.version_info(major=3, minor=7, micro=4, releaselevel='final', serial=0)

matplotlib 3.1.1

numpy 1.16.5

pandas 0.25.1

sklearn 0.21.3

tensorflow 2.1.0

tensorflow_core.python.keras.api._v2.keras 2.2.4-tf

fashion_mnist = keras.datasets.fashion_mnist
(x_train_all, y_train_all), (x_test, y_test) = fashion_mnist.load_data()
# Hold out the first 5,000 training images as the validation set.
x_valid, x_train = x_train_all[:5000], x_train_all[5000:]
y_valid, y_train = y_train_all[:5000], y_train_all[5000:]

print(x_valid.shape, y_valid.shape)
print(x_train.shape, y_train.shape)
print(x_test.shape, y_test.shape)

(5000, 28, 28) (5000,)

(55000, 28, 28) (55000,)

(10000, 28, 28) (10000,)

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()
# Flatten to a single column so one mean/std is fitted over all training pixels,
# then restore the image shape with an explicit channel axis for Conv2D.
x_train_scaled = scaler.fit_transform(
    x_train.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28, 1)
# Validation and test data reuse the statistics fitted on the training set.
x_valid_scaled = scaler.transform(
    x_valid.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28, 1)
x_test_scaled = scaler.transform(
    x_test.astype(np.float32).reshape(-1, 1)).reshape(-1, 28, 28, 1)
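
To see what the `reshape(-1, 1)` round trip does, the sketch below reproduces the same standardization with plain NumPy on a tiny made-up batch. It assumes the `StandardScaler` defaults (subtract the mean, divide by the population standard deviation); the helper name `standardize_like_scaler` and the sample arrays are illustrative and do not come from the original post.

```python
import numpy as np

def standardize_like_scaler(train, other):
    """Standardize images with one global mean/std fitted on `train` only.

    Hypothetical helper: mimics fit_transform/transform on arrays reshaped
    to (-1, 1), assuming StandardScaler defaults (population std, ddof=0).
    """
    train_f = train.astype(np.float32)
    mean = train_f.mean()
    std = train_f.std()  # ddof=0, i.e. population standard deviation

    def scale(x):
        # Scale every pixel with the same statistics, then add a channel axis.
        return ((x.astype(np.float32) - mean) / std)[..., np.newaxis]

    return scale(train), scale(other)

# Tiny made-up "images": 2 training images and 1 validation image, each 2x2.
train = np.array([[[0, 50], [100, 150]], [[200, 250], [25, 225]]], dtype=np.uint8)
valid = np.array([[[10, 20], [30, 40]]], dtype=np.uint8)

train_scaled, valid_scaled = standardize_like_scaler(train, valid)
print(train_scaled.shape, valid_scaled.shape)
print(float(train_scaled.mean()), float(train_scaled.std()))
```

The training batch ends up with mean close to 0 and standard deviation close to 1, while the validation batch is scaled with the training statistics and so generally does not.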

model = keras.models.Sequential()
# Block 1: two 3x3 convolutions, then 2x2 max pooling (28x28 -> 14x14).
model.add(keras.layers.Conv2D(filters=32, kernel_size=3,
                              padding='same',
                              activation="selu",
                              input_shape=(28, 28, 1)))
# Note: filters=3 here (not 32); the summary output reflects this value.
model.add(keras.layers.Conv2D(filters=3, kernel_size=3,
                              padding='same',
                              activation="selu"))
model.add(keras.layers.MaxPool2D(pool_size=2))
# Block 2: 64 filters (14x14 -> 7x7 after pooling).
model.add(keras.layers.Conv2D(filters=64, kernel_size=3,
                              padding='same',
                              activation="selu"))
model.add(keras.layers.Conv2D(filters=64, kernel_size=3,
                              padding='same',
                              activation="selu"))
model.add(keras.layers.MaxPool2D(pool_size=2))
# Block 3: 128 filters (7x7 -> 3x3 after pooling).
model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                              padding='same',
                              activation="selu"))
model.add(keras.layers.Conv2D(filters=128, kernel_size=3,
                              padding='same',
                              activation="selu"))
model.add(keras.layers.MaxPool2D(pool_size=2))
# Classifier head: flatten, one hidden layer, 10-way softmax.
model.add(keras.layers.Flatten())
model.add(keras.layers.Dense(128, activation="selu"))
model.add(keras.layers.Dense(10, activation="softmax"))

model.compile(loss="sparse_categorical_crossentropy",
              optimizer="sgd",
              metrics=["accuracy"])
model.summary()

Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d (Conv2D)              (None, 28, 28, 32)        320
_________________________________________________________________
conv2d_1 (Conv2D)            (None, 28, 28, 3)         867
_________________________________________________________________
max_pooling2d (MaxPooling2D) (None, 14, 14, 3)         0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 14, 14, 64)        1792
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 14, 14, 64)        36928
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 7, 7, 64)          0
_________________________________________________________________
conv2d_4 (Conv2D)            (None, 7, 7, 128)         73856
_________________________________________________________________
conv2d_5 (Conv2D)            (None, 7, 7, 128)         147584
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 3, 3, 128)         0
_________________________________________________________________
flatten (Flatten)            (None, 1152)              0
_________________________________________________________________
dense (Dense)                (None, 128)               147584
_________________________________________________________________
dense_1 (Dense)              (None, 10)                1290
=================================================================
Total params: 410,221
Trainable params: 410,221
Non-trainable params: 0
_________________________________________________________________
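
The output shapes and parameter counts in the summary can be checked by hand. The sketch below is a dependency-free recomputation, assuming 3x3 kernels with `padding='same'` and stride 1 for every convolution, and 2x2 pooling with stride 2 and no padding. The helper names `conv2d_params` and `dense_params` are made up for this illustration.

```python
def conv2d_params(kernel_size, in_channels, filters):
    # Each filter has kernel_size * kernel_size * in_channels weights plus one bias.
    return (kernel_size * kernel_size * in_channels + 1) * filters

def dense_params(in_units, out_units):
    # Each output unit has one weight per input plus one bias.
    return (in_units + 1) * out_units

# (kind, filters) for each layer, in the order printed by the summary.
layers = [("conv", 32), ("conv", 3), ("pool", None),
          ("conv", 64), ("conv", 64), ("pool", None),
          ("conv", 128), ("conv", 128), ("pool", None)]

size, channels, total = 28, 1, 0
for kind, filters in layers:
    if kind == "conv":
        # 'same' padding with stride 1 keeps the spatial size unchanged.
        total += conv2d_params(3, channels, filters)
        channels = filters
    else:
        # 2x2 pooling halves the size and floors odd values: 28 -> 14 -> 7 -> 3.
        size //= 2

flat = size * size * channels
total += dense_params(flat, 128) + dense_params(128, 10)
print(size, flat, total)  # 3 1152 410221
```

The result matches the `flatten` output of 1152 and the reported total of 410,221 parameters.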

logdir = os.path.join("cnn-selu-callbacks")
if not os.path.exists(logdir):
    os.mkdir(logdir)
output_model_file = os.path.join(logdir, "fashion_mnist_model.h5")

callbacks = [
    keras.callbacks.TensorBoard(log_dir=logdir),
    # Keep only the checkpoint with the best monitored value (val_loss by default).
    keras.callbacks.ModelCheckpoint(output_model_file,
                                    save_best_only=True),
    # Stop once val_loss has not improved by at least 1e-3 for 5 epochs in a row.
    keras.callbacks.EarlyStopping(patience=5, min_delta=1e-3),
]

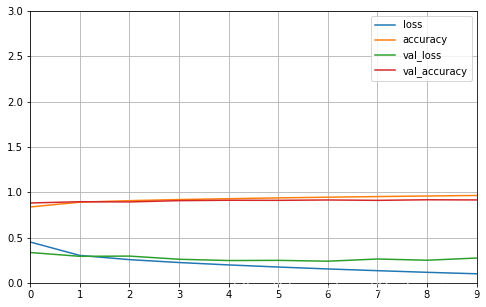

history = model.fit(x_train_scaled, y_train, epochs=10,
                    validation_data=(x_valid_scaled, y_valid),
                    callbacks=callbacks)

Train on 55000 samples, validate on 5000 samples

Epoch 1/10

55000/55000 [==============================] - 155s 3ms/sample - loss: 0.4501 - accuracy: 0.8370 - val_loss: 0.3343 - val_accuracy: 0.8812

Epoch 2/10

55000/55000 [==============================] - 168s 3ms/sample - loss: 0.3022 - accuracy: 0.8898 - val_loss: 0.2922 - val_accuracy: 0.8946

Epoch 3/10

55000/55000 [==============================] - 161s 3ms/sample - loss: 0.2558 - accuracy: 0.9066 - val_loss: 0.2948 - val_accuracy: 0.8926

Epoch 4/10

55000/55000 [==============================] - 156s 3ms/sample - loss: 0.2238 - accuracy: 0.9187 - val_loss: 0.2599 - val_accuracy: 0.9068

Epoch 5/10

55000/55000 [==============================] - 151s 3ms/sample - loss: 0.1973 - accuracy: 0.9283 - val_loss: 0.2458 - val_accuracy: 0.9112

Epoch 6/10

55000/55000 [==============================] - 158s 3ms/sample - loss: 0.1742 - accuracy: 0.9371 - val_loss: 0.2477 - val_accuracy: 0.9102

Epoch 7/10

55000/55000 [==============================] - 154s 3ms/sample - loss: 0.1529 - accuracy: 0.9448 - val_loss: 0.2383 - val_accuracy: 0.9142

Epoch 8/10

55000/55000 [==============================] - 147s 3ms/sample - loss: 0.1339 - accuracy: 0.9518 - val_loss: 0.2625 - val_accuracy: 0.9100

Epoch 9/10

55000/55000 [==============================] - 150s 3ms/sample - loss: 0.1157 - accuracy: 0.9580 - val_loss: 0.2491 - val_accuracy: 0.9164

Epoch 10/10

55000/55000 [==============================] - 152s 3ms/sample - loss: 0.0995 - accuracy: 0.9639 - val_loss: 0.2732 - val_accuracy: 0.9146
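
Training ran for all 10 epochs, so `EarlyStopping` never fired. The sketch below replays the logged `val_loss` values through a simplified version of the patience rule to show why. It is an approximation written for this post, not the Keras source, and the function name `simulate_early_stopping` is hypothetical.

```python
def simulate_early_stopping(val_losses, patience, min_delta):
    """Return (stop_epoch or None, best_epoch), using 1-based epoch numbers.

    Simplified rule: an epoch counts as an improvement only if its loss is
    more than min_delta below the best loss seen so far.
    """
    best, best_epoch, wait = float("inf"), None, 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return None, best_epoch

# val_loss values copied from the training log above.
val_losses = [0.3343, 0.2922, 0.2948, 0.2599, 0.2458,
              0.2477, 0.2383, 0.2625, 0.2491, 0.2732]

stop_epoch, best_epoch = simulate_early_stopping(
    val_losses, patience=5, min_delta=1e-3)
print(stop_epoch, best_epoch)  # None 7
```

The last improvement is at epoch 7, and only three epochs without improvement follow it, which is fewer than the patience of 5. Assuming `ModelCheckpoint` monitors `val_loss` (its default), epoch 7 is also the checkpoint that `save_best_only=True` would leave on disk.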

def plot_learning_curves(history):
    pd.DataFrame(history.history).plot(figsize=(8, 5))
    plt.grid(True)
    plt.gca().set_ylim(0, 3)
    plt.show()

plot_learning_curves(history)

model.evaluate(x_test_scaled, y_test, verbose=2)

10000/10000 - 8s - loss: 0.3132 - accuracy: 0.9030

[0.3131856933772564, 0.903]