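Study Notes | PyTorch Tutorial 17

These notes are mainly excerpted from the "深度之眼" course and summarized here for easy reference.

PyTorch version used: 1.2

- learning rate

- momentum

- torch.optim.SGD

- The ten optimizers in PyTorch

- Homework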

I. Learning rate

- Gradient descent:

… …

Test code:

```python
import torch
import numpy as np
import matplotlib.pyplot as plt

torch.manual_seed(1)


def func(x_t):
    """
    y = (2x)^2 = 4*x^2, dy/dx = 8x
    """
    return torch.pow(2 * x_t, 2)


# init: starting point for gradient descent
x = torch.tensor([2.], requires_grad=True)

# ------------------------------ plot data ------------------------------
# flag = 0
flag = 1
if flag:
    x_t = torch.linspace(-3, 3, 100)
    y = func(x_t)
    plt.plot(x_t.numpy(), y.numpy(), label="y = 4*x^2")
    plt.grid()
    plt.xlabel("x")
    plt.ylabel("y")
    plt.legend()
    plt.show()
```

Output: a plot of y = 4*x^2 on [-3, 3].

Testing gradient descent:

```python
# ------------------------------ gradient descent ------------------------------
# flag = 0
flag = 1
if flag:
    iter_rec, loss_rec, x_rec = list(), list(), list()

    lr = 1              # also try 0.5, 0.2, 0.1, 0.125
    max_iteration = 4   # 4 for lr = 1 and 0.5, 20 for lr = 0.2, 200 for smaller values

    for i in range(max_iteration):
        y = func(x)
        y.backward()

        print("Iter:{}, X:{:8}, X.grad:{:8}, loss:{:10}".format(
            i, x.detach().numpy()[0], x.grad.detach().numpy()[0], y.item()))

        x_rec.append(x.item())

        # gradient-descent step x = x - lr * x.grad, performed outside autograd
        with torch.no_grad():
            x -= lr * x.grad
        x.grad.zero_()

        iter_rec.append(i)
        loss_rec.append(y.item())  # store a float, not a tensor that requires grad

    ax1 = plt.subplot(121)
    ax1.plot(iter_rec, loss_rec, '-ro')
    ax1.set_xlabel("Iteration")
    ax1.set_ylabel("Loss value")

    x_t = torch.linspace(-3, 3, 100)
    y = func(x_t)
    ax2 = plt.subplot(122)
    ax2.plot(x_t.numpy(), y.numpy(), label="y = 4*x^2")
    ax2.grid()
    y_rec = [func(torch.tensor(i)).item() for i in x_rec]
    ax2.plot(x_rec, y_rec, '-ro')
    ax2.legend()
    plt.show()
```

Output:

Iter:0, X: 2.0, X.grad: 16.0, loss: 16.0

Iter:1, X: -14.0, X.grad: -112.0, loss: 784.0

Iter:2, X: 98.0, X.grad: 784.0, loss: 38416.0

Iter:3, X: -686.0, X.grad: -5488.0, loss: 1882384.0

The loss explodes (gradient explosion): x changes sign and grows in magnitude at every step.
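For this particular function the explosion can be explained in closed form. Since dy/dx = 8x, one step gives x <- x - lr * 8x = (1 - 8*lr) * x, so after k steps x_k = (1 - 8*lr)^k * x_0. The iterates shrink only when |1 - 8*lr| < 1, i.e. 0 < lr < 0.25. With lr = 1, x is multiplied by -7 each step. With lr = 0.2, the factor is -0.6, giving oscillating convergence. With lr = 0.125, x lands exactly on the minimum after one step. The plain-Python sketch below reproduces the X column of the logs without autograd. The helper names `gd_trajectory` and `is_stable` are hypothetical.

```python
def gd_trajectory(lr, num_steps, x0=2.0):
    """Iterates of gradient descent on y = 4x^2 (dy/dx = 8x), starting from x0.

    Each step is x <- x - lr * 8x, i.e. x is multiplied by (1 - 8*lr).
    """
    xs = [x0]
    for _ in range(num_steps):
        x = xs[-1]
        xs.append(x - lr * 8 * x)
    return xs


def is_stable(lr):
    # The iterates shrink toward 0 exactly when |1 - 8*lr| < 1, i.e. 0 < lr < 0.25.
    return abs(1 - 8 * lr) < 1


for lr in (1, 0.5, 0.2, 0.125, 0.01):
    print(lr, is_stable(lr), gd_trajectory(lr, 3))
```

The lr = 1 trajectory is 2, -14, 98, -686, matching the log above.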

Set the learning rate lr = 0.2 and the number of iterations max_iteration = 20:

Output:

Iter:0, X: 2.0, X.grad: 16.0, loss: 16.0

Iter:1, X:-1.2000000476837158, X.grad:-9.600000381469727, loss:5.760000228881836

Iter:2, X:0.7200000286102295, X.grad:5.760000228881836, loss:2.0736000537872314

Iter:3, X:-0.4320000410079956, X.grad:-3.456000328063965, loss:0.7464961409568787

Iter:4, X:0.2592000365257263, X.grad:2.0736002922058105, loss:0.26873862743377686

Iter:5, X:-0.1555200219154358, X.grad:-1.2441601753234863, loss:0.09674590826034546

Iter:6, X:0.09331201016902924, X.grad:0.7464960813522339, loss:0.03482852503657341

Iter:7, X:-0.05598720908164978, X.grad:-0.44789767265319824, loss:0.012538270093500614

Iter:8, X:0.03359232842922211, X.grad:0.26873862743377686, loss:0.004513778258115053

Iter:9, X:-0.020155396312475204, X.grad:-0.16124317049980164, loss:0.0016249599866569042

Iter:10, X:0.012093238532543182, X.grad:0.09674590826034546, loss:0.0005849856534041464

Iter:11, X:-0.007255943492054939, X.grad:-0.058047547936439514, loss:0.000210594866075553

Iter:12, X:0.0043535660952329636, X.grad:0.03482852876186371, loss:7.581415411550552e-05

Iter:13, X:-0.0026121395640075207, X.grad:-0.020897116512060165, loss:2.729309198912233e-05

Iter:14, X:0.001567283645272255, X.grad:0.01253826916217804, loss:9.825512279348914e-06

Iter:15, X:-0.0009403701405972242, X.grad:-0.007522961124777794, loss:3.537184056767728e-06

Iter:16, X:0.0005642221076413989, X.grad:0.004513776861131191, loss:1.2733863741232199e-06

Iter:17, X:-0.00033853325294330716, X.grad:-0.0027082660235464573, loss:4.584190662626497e-07

Iter:18, X:0.00020311994012445211, X.grad:0.001624959520995617, loss:1.6503084054875217e-07

Iter:19, X:-0.00012187196989543736, X.grad:-0.0009749757591634989, loss:5.941110714502429e-08

Set the learning rate lr = 0.01 (still 20 iterations):

Output:

Iter:0, X: 2.0, X.grad: 16.0, loss: 16.0

Iter:1, X:1.840000033378601, X.grad:14.720000267028809, loss:13.542400360107422

Iter:2, X:1.6928000450134277, X.grad:13.542400360107422, loss:11.462287902832031

Iter:3, X:1.5573760271072388, X.grad:12.45900821685791, loss:9.701680183410645

Iter:4, X:1.432785987854004, X.grad:11.462287902832031, loss:8.211503028869629

Iter:5, X:1.3181631565093994, X.grad:10.545305252075195, loss:6.950216293334961

Iter:6, X:1.2127101421356201, X.grad:9.701681137084961, loss:5.882663726806641

Iter:7, X:1.1156933307647705, X.grad:8.925546646118164, loss:4.979086399078369

Iter:8, X:1.0264378786087036, X.grad:8.211503028869629, loss:4.214298725128174

Iter:9, X:0.9443228244781494, X.grad:7.554582595825195, loss:3.5669822692871094

Iter:10, X:0.8687769770622253, X.grad:6.950215816497803, loss:3.0190937519073486

Iter:11, X:0.7992748022079468, X.grad:6.394198417663574, loss:2.555360794067383

Iter:12, X:0.7353328466415405, X.grad:5.882662773132324, loss:2.1628575325012207

Iter:13, X:0.6765062212944031, X.grad:5.412049770355225, loss:1.8306427001953125

Iter:14, X:0.6223857402801514, X.grad:4.979085922241211, loss:1.549456000328064

Iter:15, X:0.5725948810577393, X.grad:4.580759048461914, loss:1.3114595413208008

Iter:16, X:0.526787281036377, X.grad:4.214298248291016, loss:1.110019326210022

Iter:17, X:0.4846442937850952, X.grad:3.8771543502807617, loss:0.9395203590393066

Iter:18, X:0.4458727538585663, X.grad:3.5669820308685303, loss:0.795210063457489

Iter:19, X:0.41020292043685913, X.grad:3.281623363494873, loss:0.673065721988678

Testing multiple learning rates:

```python
# ------------------------------ multi learning rate ------------------------------
# flag = 0
flag = 1
if flag:
    iteration = 100
    num_lr = 10
    lr_min, lr_max = 0.01, 0.5  # also try lr_max = 0.3 or 0.2

    lr_list = np.linspace(lr_min, lr_max, num=num_lr).tolist()
    loss_rec = [[] for _ in range(len(lr_list))]

    for i, lr in enumerate(lr_list):
        x = torch.tensor([2.], requires_grad=True)  # restart from x = 2 for every learning rate
        for _ in range(iteration):
            y = func(x)
            y.backward()
            with torch.no_grad():
                x -= lr * x.grad  # x = x - lr * x.grad
            x.grad.zero_()
            loss_rec[i].append(y.item())

    for i, loss_r in enumerate(loss_rec):
        plt.plot(range(len(loss_r)), loss_r, label="LR: {}".format(lr_list[i]))
    plt.legend()
    plt.xlabel('Iterations')
    plt.ylabel('Loss value')
    plt.show()
```

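Output:

Some loss values shoot up. For this function each step multiplies x by (1 - 8*lr), so every learning rate in the list above 0.25 (from about 0.28 upward) makes the loss grow instead of shrink.

Reduce the maximum learning rate: lr_min, lr_max = 0.01, 0.2. All ten learning rates are then below 0.25, so every loss curve converges.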
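The same experiment can also be run for all learning rates at once by giving each run its own entry in a single tensor. This vectorized variant is only a sketch under a few assumptions. `final_losses` and `diverged` are hypothetical helper names, and `torch.linspace` stands in for `np.linspace`. A run is flagged as diverged when its final loss is non-finite or larger than the starting loss of 16.

```python
import torch


def func(x):
    # y = (2x)^2 = 4x^2, dy/dx = 8x, applied element-wise
    return torch.pow(2 * x, 2)


def final_losses(lr_min, lr_max, num_lr=10, iteration=100):
    """Run one gradient-descent trajectory per learning rate, all stored in one tensor.

    Every run starts from x = 2, and a single backward pass per iteration
    produces the gradients for all runs at once.
    """
    lrs = torch.linspace(lr_min, lr_max, num_lr)
    x = torch.full((num_lr,), 2.0, requires_grad=True)
    for _ in range(iteration):
        y = func(x)
        # y[i] depends only on x[i], so the gradient of the sum is 8 * x[i] per entry
        y.sum().backward()
        with torch.no_grad():
            x -= lrs * x.grad
        x.grad.zero_()
    return lrs, func(x).detach()


def diverged(losses, initial_loss=16.0):
    # Diverged = overflowed to inf/nan, or ended above the starting loss
    return ~torch.isfinite(losses) | (losses > initial_loss)


for lr_max in (0.5, 0.2):
    lrs, losses = final_losses(0.01, lr_max)
    print(lr_max, [round(v, 3) for v in lrs.tolist()], diverged(losses).tolist())
```

With lr_max = 0.5, the last five learning rates (about 0.28 to 0.5) are flagged. With lr_max = 0.2, none are flagged.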

II. Momentum

Momentum: combines the current gradient with information from previous updates to form the current update.

Exponentially weighted average:

Test code:

```python
import torch
import numpy as np
import torch.optim as optim
import matplotlib.pyplot as plt

torch.manual_seed(1)


def exp_w_func(beta, time_list):
    # weight of the value `exp` steps in the past: (1 - beta) * beta^exp
    return [(1 - beta) * np.power(beta, exp) for exp in time_list]


beta = 0.9
num_point = 100
time_list = np.arange(num_point).tolist()

# ------------------------------ exponential weight ------------------------------
# flag = 0
flag = 1
if flag:
    weights = exp_w_func(beta, time_list)
    plt.plot(time_list, weights, '-ro', label="Beta: {}\ny = B^t * (1-B)".format(beta))
    plt.xlabel("time")
    plt.ylabel("weight")
    plt.legend()
    plt.title("exponentially weighted average")
    plt.show()
    print(np.sum(weights))
```

Output: 0.9999734386011124
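The printed sum equals 1 - 0.9^100. The weights (1 - beta) * beta^t form a geometric series, which approaches 1 as the number of points grows. The usual recursive form of the exponentially weighted average is v_t = beta * v_{t-1} + (1 - beta) * theta_t, starting from v_0 = 0. The sketch below checks that this recursion gives the same value as weighting past observations explicitly. The newest value gets weight (1 - beta), and a value t steps back gets (1 - beta) * beta^t, as in `exp_w_func`. The helper names `ewa_recursive` and `ewa_explicit` are hypothetical.

```python
import numpy as np


def ewa_recursive(values, beta):
    """Exponentially weighted average via v_t = beta * v_{t-1} + (1 - beta) * theta_t, v_0 = 0."""
    v = 0.0
    for theta in values:
        v = beta * v + (1 - beta) * theta
    return v


def ewa_explicit(values, beta):
    """Same average as a weighted sum: the value t steps in the past gets (1 - beta) * beta**t."""
    values = np.asarray(values, dtype=float)
    ages = np.arange(len(values))[::-1]  # age 0 for the newest value
    weights = (1 - beta) * np.power(beta, ages)
    return float(np.sum(weights * values))


rng = np.random.default_rng(0)
data = rng.normal(size=100)
print(ewa_recursive(data, 0.9), ewa_explicit(data, 0.9))

# The weights alone sum to 1 - beta**N (geometric series); here N = 100, beta = 0.9
print(np.sum((1 - 0.9) * np.power(0.9, np.arange(100))), 1 - 0.9 ** 100)
```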

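Weight curves for different values of beta:

```python
# ------------------------------ multi weights ------------------------------
# flag = 0
flag = 1
if flag:
    beta_list = [0.98, 0.95, 0.9, 0.8]
    w_list = [exp_w_func(beta, time_list) for beta in beta_list]
    for i, w in enumerate(w_list):
        plt.plot(time_list, w, label="Beta: {}".format(beta_list[i]))
    plt.xlabel("time")
    plt.ylabel("weight")
    plt.legend()
    plt.show()
```

Output:

The hyperparameter beta controls the memory span: the larger beta is, the further back the average remembers. As a rough rule of thumb, it covers about the last 1/(1 - beta) steps: about 10 steps for beta = 0.9 and 50 for beta = 0.98.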

Comparing SGD and Momentum:

```python
# ------------------------------ SGD momentum ------------------------------
# flag = 0
flag = 1
if flag:
    def func(x):
        return torch.pow(2 * x, 2)  # y = (2x)^2 = 4*x^2, dy/dx = 8x

    iteration = 100
    m = 0.  # also try 0.9 and 0.63
    lr_list = [0.01, 0.03]

    momentum_list = list()
    loss_rec = [[] for _ in range(len(lr_list))]

    for i, lr in enumerate(lr_list):
        x = torch.tensor([2.], requires_grad=True)
        # lr = 0.03 is always plain SGD; lr = 0.01 uses momentum m
        momentum = 0. if lr == 0.03 else m
        momentum_list.append(momentum)

        optimizer = optim.SGD([x], lr=lr, momentum=momentum)
        for _ in range(iteration):
            y = func(x)
            y.backward()
            optimizer.step()
            optimizer.zero_grad()
            loss_rec[i].append(y.item())

    for i, loss_r in enumerate(loss_rec):
        plt.plot(range(len(loss_r)), loss_r, label="LR: {} M:{}".format(lr_list[i], momentum_list[i]))
    plt.legend()
    plt.xlabel('Iterations')
    plt.ylabel('Loss value')
    plt.show()
```

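Output:

Change the momentum: m = 0.9

Output:

With momentum, the loss reaches small values sooner than with plain SGD. However, the momentum coefficient is large, so each update is strongly influenced by the previous steps, and this causes oscillation.

Keep tuning toward a more suitable value: m = 0.63

Output: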

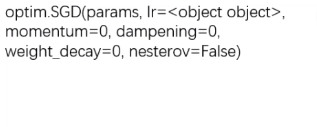
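Linear algebra offers one way to see why m = 0.9 oscillates while m = 0.63 settles more quietly. On y = 4x^2 the gradient is 8x. The momentum update used by optim.SGD (v <- m*v + g, x <- x - lr*v) is therefore a linear map on the pair (x, v). The sketch below builds that 2x2 matrix and inspects its eigenvalues; the helper name `momentum_matrix` is hypothetical. The largest eigenvalue magnitude is the asymptotic per-step shrink factor. A complex eigenvalue pair means x spirals around the minimum, so the loss curve oscillates. Plain SGD corresponds to m = 0.

```python
import numpy as np


def momentum_matrix(lr, m, curvature=8.0):
    """Linear map (x_t, v_t) -> (x_{t+1}, v_{t+1}) for SGD with momentum on y = 4x^2.

    v_{t+1} = m * v_t + curvature * x_t
    x_{t+1} = x_t - lr * v_{t+1} = (1 - lr * curvature) * x_t - lr * m * v_t
    """
    return np.array([[1 - lr * curvature, -lr * m],
                     [curvature, m]])


for lr, m in [(0.03, 0.0), (0.01, 0.0), (0.01, 0.9), (0.01, 0.63)]:
    eig = np.linalg.eigvals(momentum_matrix(lr, m))
    rate = np.max(np.abs(eig))                   # asymptotic per-step shrink factor
    complex_pair = np.any(np.abs(eig.imag) > 0)  # True -> x spirals (oscillates) toward 0
    print(f"lr={lr}, m={m}: rate={rate:.3f}, complex eigenvalues={complex_pair}")
```

For momentum the determinant of this matrix is exactly m, so a complex pair has magnitude sqrt(m). That is about 0.949 for m = 0.9 and about 0.794 for m = 0.63. The smaller magnitude means the oscillation dies out faster.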

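III. torch.optim.SGD

1. optim.SGD

Main parameters:

- params: the parameter groups to manage

- lr: initial learning rate

- momentum: momentum coefficient, beta

- weight_decay: L2 regularization coefficient

- nesterov: whether to use NAG (Nesterov accelerated gradient)

NAG reference: "On the importance of initialization and momentum in deep learning"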
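The PyTorch documentation describes the update that optim.SGD applies with these parameters, with dampening left at its default of 0, roughly as follows:

- g <- g + weight_decay * p.
- v <- momentum * v + g, where v starts as g on the first step.
- p <- p - lr * (g + momentum * v) if nesterov is set, otherwise p <- p - lr * v.

The sketch below re-implements that rule by hand for the scalar problem y = 4x^2 and compares it with optim.SGD. The helper names `manual_sgd` and `torch_sgd` are hypothetical, and the comparison is a sanity check, not a specification. Note that this form of momentum adds the raw gradient g, not (1 - beta) * g as in the exponentially weighted average.

```python
import torch
import torch.optim as optim


def manual_sgd(lr, momentum=0.0, weight_decay=0.0, nesterov=False, steps=20, x0=2.0):
    """Hand-written SGD (dampening = 0) on y = 4x^2, whose gradient is 8x."""
    x, buf = x0, None
    xs = []
    for _ in range(steps):
        g = 8 * x + weight_decay * x                     # L2 term: weight_decay * parameter
        buf = g if buf is None else momentum * buf + g   # momentum buffer v
        step = g + momentum * buf if nesterov else buf
        x = x - lr * step
        xs.append(x)
    return xs


def torch_sgd(lr, momentum=0.0, weight_decay=0.0, nesterov=False, steps=20, x0=2.0):
    """The same problem optimized with torch.optim.SGD."""
    x = torch.tensor([x0], requires_grad=True)
    optimizer = optim.SGD([x], lr=lr, momentum=momentum,
                          weight_decay=weight_decay, nesterov=nesterov)
    xs = []
    for _ in range(steps):
        optimizer.zero_grad()
        torch.pow(2 * x, 2).sum().backward()
        optimizer.step()
        xs.append(x.item())
    return xs


for kwargs in [dict(lr=0.01, momentum=0.9),
               dict(lr=0.01, momentum=0.9, nesterov=True),
               dict(lr=0.01, momentum=0.63, weight_decay=0.1)]:
    diff = max(abs(a - b) for a, b in zip(manual_sgd(**kwargs), torch_sgd(**kwargs)))
    print(kwargs, "max difference:", diff)
```

The small remaining differences come from float32 (PyTorch) versus float64 (plain Python) arithmetic.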

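IV. The ten optimizers in PyTorch

- optim.SGD: stochastic gradient descent

- optim.Adagrad: gradient descent with adaptive learning rates

- optim.RMSprop: an improvement on Adagrad

- optim.Adadelta: an improvement on Adagrad

- optim.Adam: RMSprop combined with Momentum

- optim.Adamax: a variant of Adam based on the infinity norm, which gives a simple upper bound on the step size

- optim.SparseAdam: a version of Adam for sparse gradients

- optim.ASGD: averaged stochastic gradient descent

- optim.Rprop: resilient backpropagation

- optim.LBFGS: limited-memory BFGS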
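All of these share one usage pattern. You construct the optimizer with the parameters, then call zero_grad(), backward() and step() on each iteration. As a rough illustration, the sketch below minimizes the same y = 4x^2 from x = 2 with most of them. The helper `run_optimizer` and the learning rates are assumptions chosen for this toy problem, not tuned recommendations. It passes a closure to step() for every optimizer. optim.LBFGS requires such a closure because it may re-evaluate the loss several times per step, and the other optimizers simply call it once. optim.SparseAdam is left out because it only supports sparse gradients, such as those produced by an nn.Embedding created with sparse=True.

```python
import torch
import torch.optim as optim


def func(x):
    return torch.pow(2 * x, 2)  # y = 4x^2, minimum at x = 0


def run_optimizer(make_optimizer, iteration=100, x0=2.0):
    """Minimize func from x0 with the optimizer returned by make_optimizer([x]); return the final loss."""
    x = torch.tensor([x0], requires_grad=True)
    optimizer = make_optimizer([x])

    def closure():
        # Recomputes loss and gradient; LBFGS may call this several times per step
        optimizer.zero_grad()
        loss = func(x).sum()
        loss.backward()
        return loss

    for _ in range(iteration):
        optimizer.step(closure)
    return func(x).item()


# Learning rates are illustrative choices for this toy problem only
optimizers = {
    "SGD": lambda p: optim.SGD(p, lr=0.01),
    "SGD + momentum": lambda p: optim.SGD(p, lr=0.01, momentum=0.9),
    "Adagrad": lambda p: optim.Adagrad(p, lr=0.1),
    "RMSprop": lambda p: optim.RMSprop(p, lr=0.01),
    "Adadelta": lambda p: optim.Adadelta(p, lr=1.0),
    "Adam": lambda p: optim.Adam(p, lr=0.1),
    "Adamax": lambda p: optim.Adamax(p, lr=0.1),
    "ASGD": lambda p: optim.ASGD(p, lr=0.01),
    "Rprop": lambda p: optim.Rprop(p, lr=0.01),
    "LBFGS": lambda p: optim.LBFGS(p, lr=1),
}

results = {name: run_optimizer(make) for name, make in optimizers.items()}
for name, loss in results.items():
    print("{:>15}: final loss {:.3e}".format(name, loss))
```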

V. Homework

An optimizer's job is to manage and update parameter groups. Build an SGD optimizer and use the add_param_group method to add three parameter groups. Give them learning rates of 0.01, 0.02 and 0.03 and momentum values of 0.9, 0.8 and 0.7, respectively. Then print the key and value of every element in the optimizer's param_groups attribute. (Hint: param_groups is a list, and each element is a dictionary.)

Test code:

```python
import torch
import torch.optim as optim

# The course code calls set_seed(1) from its own tools.common_tools module;
# torch.manual_seed(1) is assumed to be enough for the torch.randn calls below.
torch.manual_seed(1)

w1 = torch.randn((2, 2), requires_grad=True)
w2 = torch.randn((2, 2), requires_grad=True)
w3 = torch.randn((2, 2), requires_grad=True)

optimizer = optim.SGD([w1], lr=0.01, momentum=0.9)
optimizer.add_param_group({"params": w2, 'lr': 0.02, 'momentum': 0.8})
optimizer.add_param_group({"params": w3, 'lr': 0.03, 'momentum': 0.7})

for index, group in enumerate(optimizer.param_groups):
    params = group["params"]
    lr = group["lr"]
    momentum = group["momentum"]
    print("Group [{}] params:\n{}\nLearning rate lr: {}\nMomentum: {}".format(index, params, lr, momentum))
    print("==============================================")
```

Output:

```
Group [0] params:
[tensor([[0.6614, 0.2669],
        [0.0617, 0.6213]], requires_grad=True)]
Learning rate lr: 0.01
Momentum: 0.9
==============================================
Group [1] params:
[tensor([[-0.4519, -0.1661],
        [-1.5228, 0.3817]], requires_grad=True)]
Learning rate lr: 0.02
Momentum: 0.8
==============================================
Group [2] params:
[tensor([[-1.0276, -0.5631],
        [-0.8923, -0.0583]], requires_grad=True)]
Learning rate lr: 0.03
Momentum: 0.7
==============================================
```
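The task asks for every key and value in each element of param_groups, but the code above prints only params, lr and momentum. Below is a minimal variant that loops over each group dictionary. It uses torch.manual_seed(1) in place of the course's set_seed helper, on the assumption that the helper is not available outside the course code. The exact set of keys depends on the PyTorch version. Besides the keys passed explicitly, add_param_group fills in SGD's defaults such as dampening, weight_decay and nesterov, and newer releases add further flags.

```python
import torch
import torch.optim as optim

torch.manual_seed(1)

w1 = torch.randn((2, 2), requires_grad=True)
w2 = torch.randn((2, 2), requires_grad=True)
w3 = torch.randn((2, 2), requires_grad=True)

optimizer = optim.SGD([w1], lr=0.01, momentum=0.9)
optimizer.add_param_group({"params": w2, "lr": 0.02, "momentum": 0.8})
optimizer.add_param_group({"params": w3, "lr": 0.03, "momentum": 0.7})

# param_groups is a list; each element is a dict holding one group's settings
for index, group in enumerate(optimizer.param_groups):
    print("Group {}:".format(index))
    for key, value in group.items():
        print("  {}: {}".format(key, value))
```

A single tensor passed as "params" is wrapped into a one-element list, which is why each group prints its params as a list.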