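Study Notes | PyTorch Tutorial 15

These notes are mainly excerpted from "深度之眼" and summarized here for easy reference.

PyTorch version used: 1.2

- Other loss functions

I. Other Loss Functions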

1.nn.L1Loss

Function: computes the absolute value of the difference between inputs and target, |x_i - y_i|

2.nn.MSELoss

Function: computes the square of the difference between inputs and target, (x_i - y_i)^2

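Main parameters:

- reduction: computation mode, one of none/sum/mean

none - compute element-wise, returning a tensor with the same shape as the inputs

sum - sum all elements, returning a scalar

mean - average over all elements, returning a scalar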
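To make the three reduction modes concrete, here is a minimal plain-Python sketch (no PyTorch) of the element-wise L1 and squared errors followed by a none/sum/mean reduction on a flattened list. The helper names `elementwise_l1`, `elementwise_mse` and `reduce_loss` are hypothetical; they only mirror the behavior described above.

```python
from typing import List, Sequence, Union


def elementwise_l1(inputs: Sequence[float], target: Sequence[float]) -> List[float]:
    """|x_i - y_i| for each pair of elements (L1Loss with reduction='none')."""
    return [abs(x - y) for x, y in zip(inputs, target)]


def elementwise_mse(inputs: Sequence[float], target: Sequence[float]) -> List[float]:
    """(x_i - y_i)^2 for each pair of elements (MSELoss with reduction='none')."""
    return [(x - y) ** 2 for x, y in zip(inputs, target)]


def reduce_loss(values: List[float], reduction: str = "mean") -> Union[List[float], float]:
    """Apply a none/sum/mean reduction to a list of element-wise losses."""
    if reduction == "none":
        return values
    if reduction == "sum":
        return sum(values)
    if reduction == "mean":
        return sum(values) / len(values)
    raise ValueError(f"unknown reduction: {reduction}")


# The 2x2 example from these notes, flattened: inputs are all 1, targets are all 3
inputs = [1.0, 1.0, 1.0, 1.0]
target = [3.0, 3.0, 3.0, 3.0]

l1 = elementwise_l1(inputs, target)
mse = elementwise_mse(inputs, target)
print(reduce_loss(l1, "none"))   # [2.0, 2.0, 2.0, 2.0]
print(reduce_loss(l1, "sum"))    # 8.0
print(reduce_loss(mse, "mean"))  # 4.0
```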

Code to test L1Loss and MSELoss:

import torch
import torch.nn as nn
import torch.nn.functional as F
import matplotlib.pyplot as plt
import numpy as np

# set the random seed (the course uses set_seed(1) from its helper module tools/common_tools.py)
torch.manual_seed(1)

# ------------------------------------------------- 5 L1 loss ----------------------------------------------
# flag = 0
flag = 1
if flag:
    inputs = torch.ones((2, 2))
    target = torch.ones((2, 2)) * 3

    loss_f = nn.L1Loss(reduction='none')
    loss = loss_f(inputs, target)
    print("input:{}\ntarget:{}\nL1 loss:{}".format(inputs, target, loss))

    # MSE loss on the same inputs
    loss_f_mse = nn.MSELoss(reduction='none')
    loss_mse = loss_f_mse(inputs, target)
    print("MSE loss:{}".format(loss_mse))

Output:

input:tensor([[1., 1.],
        [1., 1.]])
target:tensor([[3., 3.],
        [3., 3.]])
L1 loss:tensor([[2., 2.],
        [2., 2.]])
MSE loss:tensor([[4., 4.],
        [4., 4.]])

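3.nn.SmoothL1Loss

Function: a smoothed version of L1Loss: 0.5 * (x_i - y_i)^2 when |x_i - y_i| < 1, and |x_i - y_i| - 0.5 otherwise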

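Main parameters:

- reduction: computation mode, one of none/sum/mean

none - compute element-wise

sum - sum all elements, returning a scalar

mean - average over all elements, returning a scalar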
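The element-wise Smooth L1 formula can be sketched in plain Python as follows. This assumes the fixed threshold of 1 used by PyTorch 1.2 (later releases expose it as a `beta` parameter); `smooth_l1` is a hypothetical helper name, not a PyTorch function.

```python
def smooth_l1(diff: float) -> float:
    """Smooth L1 for one element, where diff = x_i - y_i (threshold fixed at 1)."""
    if abs(diff) < 1.0:
        return 0.5 * diff * diff  # quadratic near zero: the gradient shrinks as diff -> 0
    return abs(diff) - 0.5        # linear elsewhere: same slope as L1, so outliers are not squared


for d in (-3.0, -1.0, -0.5, 0.0, 0.5, 1.0, 3.0):
    print(f"diff={d:+.1f}  smooth_l1={smooth_l1(d):.3f}  l1={abs(d):.3f}")
```

The two branches meet at |diff| = 1 (both give 0.5), and the smoothed curve never exceeds the plain L1 curve.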

Test code:

# ------------------------------------------------- 7 Smooth L1 loss ----------------------------------------------
# flag = 0
flag = 1
if flag:
    inputs = torch.linspace(-3, 3, steps=500)
    target = torch.zeros_like(inputs)

    loss_f = nn.SmoothL1Loss(reduction='none')
    loss_smooth = loss_f(inputs, target)
    loss_l1 = np.abs(inputs.numpy())  # plain L1 loss for comparison

    plt.plot(inputs.numpy(), loss_smooth.numpy(), label='Smooth L1 Loss')
    plt.plot(inputs.numpy(), loss_l1, label='L1 loss')
    plt.xlabel('x_i - y_i')
    plt.ylabel('loss value')
    plt.legend()
    plt.grid()
    plt.show()

Output: a plot comparing the Smooth L1 loss curve with the L1 loss curve.

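4.nn.PoissonNLLLoss

Function: negative log-likelihood loss for a target that follows a Poisson distribution

Main parameters:

- log_input: whether the input is given in log form; this decides the formula (True: exp(input) - target * input; False: input - target * log(input + eps))

- full: whether to compute the full loss by adding the Stirling approximation term; default False

- eps: small correction term (default 1e-8) that keeps log(input) from producing an invalid value when log_input=False and the input is 0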
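The two formulas selected by `log_input` can be written out as a small plain-Python sketch. It assumes `full=False` (no Stirling term) and works on single elements; `poisson_nll` is a hypothetical helper, not the PyTorch API.

```python
import math


def poisson_nll(inp: float, target: float, log_input: bool = True, eps: float = 1e-8) -> float:
    """Element-wise Poisson NLL with full=False (the Stirling term is not added)."""
    if log_input:
        # input is log(rate): loss = exp(input) - target * input
        return math.exp(inp) - target * inp
    # input is the rate itself: eps keeps log() away from log(0)
    return inp - target * math.log(inp + eps)


# First element of the example in these notes (values copied from the printed, rounded tensors)
print(round(poisson_nll(0.6614, -0.4519), 4))  # close to the 2.2363 reported by PyTorch
print(poisson_nll(1.0, 0.0, log_input=False))  # 1.0: the target term vanishes
```

Because the printed inputs are rounded to four decimals, the result can differ from PyTorch's value in the last digit.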

Test code:

# ------------------------------------------------- 8 Poisson NLL Loss ----------------------------------------------
# flag = 0
flag = 1
if flag:
    inputs = torch.randn((2, 2))
    # random normal targets are for demonstration only; real Poisson targets are non-negative counts
    target = torch.randn((2, 2))

    loss_f = nn.PoissonNLLLoss(log_input=True, full=False, reduction='none')
    loss = loss_f(inputs, target)
    print("input:{}\ntarget:{}\nPoisson NLL loss:{}".format(inputs, target, loss))

Output:

input:tensor([[0.6614, 0.2669],
        [0.0617, 0.6213]])
target:tensor([[-0.4519, -0.1661],
        [-1.5228, 0.3817]])
Poisson NLL loss:tensor([[2.2363, 1.3503],
        [1.1575, 1.6242]])

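Manual verification:

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    idx = 0
    # log_input=True, full=False: loss = exp(input) - target * input
    loss_1 = torch.exp(inputs[idx, idx]) - target[idx, idx] * inputs[idx, idx]
    print("Loss of the first element:", loss_1)

Output: Loss of the first element: tensor(2.2363)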

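5.nn.KLDivLoss

Function: computes the KLD (Kullback-Leibler divergence), i.e., the KL divergence or relative entropy; the element-wise loss is target * (log(target) - input)

Note: the input must already be log-probabilities, e.g., computed with nn.LogSoftmax() (or F.log_softmax), while the target is given as probabilities

Main parameters:

- reduction: none/sum/mean/batchmean

none - compute element-wise

sum - sum all elements, returning a scalar

mean - average over all elements, returning a scalar

batchmean - sum all elements and divide by the batch size, returning a scalar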
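A plain-Python sketch of the element-wise formula and the mean/batchmean reductions helps show why the input should be log-probabilities. The helpers `kl_div_elementwise` and `kl_div` are hypothetical, and skipping terms with a zero target (treating 0 * log 0 as 0) is an assumption of this sketch.

```python
import math
from typing import List


def kl_div_elementwise(log_q: List[float], p: List[float]) -> List[float]:
    """p_i * (log p_i - log_q_i): log_q holds log-probabilities, p holds probabilities."""
    return [pi * (math.log(pi) - lqi) if pi > 0 else 0.0 for lqi, pi in zip(log_q, p)]


def kl_div(log_q_batch: List[List[float]], p_batch: List[List[float]], reduction: str = "batchmean") -> float:
    per_elem = [v for lq, p in zip(log_q_batch, p_batch) for v in kl_div_elementwise(lq, p)]
    if reduction == "sum":
        return sum(per_elem)
    if reduction == "mean":       # divide by the number of elements
        return sum(per_elem) / len(per_elem)
    if reduction == "batchmean":  # divide by the batch size
        return sum(per_elem) / len(p_batch)
    raise ValueError(f"unsupported reduction: {reduction}")


inputs = [[0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
target = [[0.9, 0.05, 0.05], [0.1, 0.7, 0.2]]
inputs_log = [[math.log(v) for v in row] for row in inputs]

print(kl_div(inputs_log, target, "batchmean"))  # about 0.3553: log-probabilities as input
print(kl_div(inputs, target, "batchmean"))      # about -1.0006: raw probabilities, as in the demo code
```

With log-probabilities the result is non-negative, as a KL divergence should be; feeding raw probabilities gives the negative values seen in the demo output.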

Test code:

# ------------------------------------------------- 9 KL Divergence Loss ----------------------------------------------
# flag = 0
flag = 1
if flag:
    inputs = torch.tensor([[0.5, 0.3, 0.2], [0.2, 0.3, 0.5]])
    inputs_log = torch.log(inputs)
    target = torch.tensor([[0.9, 0.05, 0.05], [0.1, 0.7, 0.2]], dtype=torch.float)

    loss_f_none = nn.KLDivLoss(reduction='none')
    loss_f_mean = nn.KLDivLoss(reduction='mean')
    loss_f_bs_mean = nn.KLDivLoss(reduction='batchmean')

    # The demo passes the raw probabilities `inputs`, not `inputs_log`; the outputs below come from
    # this call. In real use, pass log-probabilities (e.g. inputs_log) as required by the note above.
    loss_none = loss_f_none(inputs, target)
    loss_mean = loss_f_mean(inputs, target)
    loss_bs_mean = loss_f_bs_mean(inputs, target)

    print("loss_none:\n{}\nloss_mean:\n{}\nloss_bs_mean:\n{}".format(loss_none, loss_mean, loss_bs_mean))

Output:

loss_none:
tensor([[-0.5448, -0.1648, -0.1598],
        [-0.2503, -0.4597, -0.4219]])
loss_mean:
-0.3335360288619995
loss_bs_mean:
-1.0006080865859985

mean divides the sum of all values by the number of elements (6).

batchmean divides the sum of all values by the batch size (2).

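Manual verification:

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    idx = 0
    # element-wise KLDivLoss: target * (log(target) - input), using the same `inputs` as the demo
    loss_1 = target[idx, idx] * (torch.log(target[idx, idx]) - inputs[idx, idx])
    print("Loss of the first element:", loss_1)

Output: Loss of the first element: tensor(-0.5448)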

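6.nn.MarginRankingLoss

Function: computes a margin-based ranking loss between two sets of scores, used for ranking tasks; the loss is max(0, -y * (x1 - x2) + margin)

- When y = 1, x1 is expected to be larger than x2; with margin = 0, no loss is produced when x1 > x2

- When y = -1, x2 is expected to be larger than x1; with margin = 0, no loss is produced when x2 > x1

Special note: the loss is computed element-wise. In the example below, x1 and x2 have shape (3, 1) while target has shape (3,), so broadcasting yields a 3×3 loss matrix; with inputs of shape (N,) the result has N elements. Newer PyTorch versions may reject inputs whose number of dimensions differs from the target's.

Main parameters:

- margin: the boundary value, i.e., the gap required between x1 and x2

- reduction: computation mode, one of none/sum/mean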
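A minimal plain-Python sketch of the formula on 1-D inputs of equal length, where no broadcasting happens. `margin_ranking` is a hypothetical helper; with the demo's numbers its three values equal the diagonal of the 3×3 matrix produced by the broadcast demo code.

```python
from typing import List


def margin_ranking(x1: List[float], x2: List[float], y: List[float], margin: float = 0.0) -> List[float]:
    """Element-wise max(0, -y * (x1 - x2) + margin) for 1-D inputs of equal length."""
    return [max(0.0, -yi * (a - b) + margin) for a, b, yi in zip(x1, x2, y)]


print(margin_ranking([1, 2, 3], [2, 2, 2], [1, 1, -1]))              # [1.0, 0.0, 1.0]
print(margin_ranking([1, 2, 3], [2, 2, 2], [1, 1, -1], margin=0.5))  # a larger margin demands a larger gap
```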

Test code:

# ---------------------------------------------- 10 Margin Ranking Loss --------------------------------------------
# flag = 0
flag = 1
if flag:
    x1 = torch.tensor([[1], [2], [3]], dtype=torch.float)  # shape (3, 1)
    x2 = torch.tensor([[2], [2], [2]], dtype=torch.float)  # shape (3, 1)
    target = torch.tensor([1, 1, -1], dtype=torch.float)   # shape (3,), broadcast against (3, 1)

    loss_f_none = nn.MarginRankingLoss(margin=0, reduction='none')
    loss = loss_f_none(x1, x2, target)
    print(loss)

Output:

tensor([[1., 1., 0.],
        [0., 0., 0.],
        [0., 0., 1.]])

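7.nn.MultiLabelMarginLoss

Function: multi-label margin (hinge) loss

Example: in a 4-class task where sample x belongs to classes 0 and 3, the label is [0, 3, -1, -1], not [1, 0, 0, 1]. The target lists the indices of the positive classes, and the first -1 marks the end of that list.

Main parameters:

- reduction: computation mode, one of none/sum/mean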
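The loss compares every positive class with every negative class. A plain-Python sketch of one sample follows; `multilabel_margin` is a hypothetical helper that assumes the index-list label format described above.

```python
from typing import List


def multilabel_margin(x: List[float], target: List[int]) -> float:
    """Hinge loss between every positive class and every negative class, averaged over len(x)."""
    positives = []
    for t in target:
        if t < 0:  # the first negative index ends the label list
            break
        positives.append(t)
    negatives = [i for i in range(len(x)) if i not in positives]
    total = sum(max(0.0, 1.0 - (x[j] - x[i])) for j in positives for i in negatives)
    return total / len(x)


print(multilabel_margin([0.1, 0.2, 0.4, 0.8], [0, 3, -1, -1]))  # about 0.85
```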

Test code:

# ---------------------------------------------- 11 Multi Label Margin Loss -----------------------------------------
# flag = 0
flag = 1
if flag:
    x = torch.tensor([[0.1, 0.2, 0.4, 0.8]])
    y = torch.tensor([[0, 3, -1, -1]], dtype=torch.long)

    loss_f = nn.MultiLabelMarginLoss(reduction='none')
    loss = loss_f(x, y)
    print(loss)

Output:

tensor([0.8500])

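Manual verification:

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    x = x[0]
    # positive class 0 against negative classes 1 and 2
    item_1 = (1 - (x[0] - x[1])) + (1 - (x[0] - x[2]))
    # positive class 3 against negative classes 1 and 2
    item_2 = (1 - (x[3] - x[1])) + (1 - (x[3] - x[2]))
    # every term is positive here, so the max(0, .) of the hinge can be omitted
    loss_h = (item_1 + item_2) / x.shape[0]
    print(loss_h)

Output: tensor(0.8500)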

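8.nn.SoftMarginLoss

Function: computes the logistic loss for binary classification, log(1 + exp(-y * x)) per element, with labels y in {1, -1}

Main parameters:

- reduction: computation mode, one of none/sum/mean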
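The per-element logistic loss is easy to reproduce without PyTorch. This plain-Python sketch uses a hypothetical helper `soft_margin`; `math.log1p` is used as a numerically convenient form of log(1 + ...).

```python
import math
from typing import List


def soft_margin(x: List[float], y: List[float]) -> List[float]:
    """Element-wise log(1 + exp(-y_i * x_i)) with labels y_i in {1, -1}."""
    return [math.log1p(math.exp(-yi * xi)) for xi, yi in zip(x, y)]


# The 2x2 example from these notes, flattened row by row
losses = soft_margin([0.3, 0.7, 0.5, 0.5], [-1, 1, 1, -1])
print([round(v, 4) for v in losses])  # [0.8544, 0.4032, 0.4741, 0.9741]
```

A correctly signed, larger score (y * x > 0) drives the loss toward 0, while a wrongly signed score grows it roughly linearly.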

Test code:

# ---------------------------------------------- 12 SoftMargin Loss -----------------------------------------
# flag = 0
flag = 1
if flag:
    inputs = torch.tensor([[0.3, 0.7], [0.5, 0.5]])
    target = torch.tensor([[-1, 1], [1, -1]], dtype=torch.float)

    loss_f = nn.SoftMarginLoss(reduction='none')
    loss = loss_f(inputs, target)
    print("SoftMargin: ", loss)

Output:

SoftMargin: tensor([[0.8544, 0.4032],
        [0.4741, 0.9741]])

Manual verification:

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    idx = 0
    inputs_i = inputs[idx, idx]
    target_i = target[idx, idx]
    # log(1 + exp(-y * x)) for the first element
    loss_h = torch.log(1 + torch.exp(-target_i * inputs_i))
    print(loss_h)

Output: tensor(0.8544)

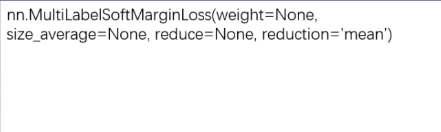

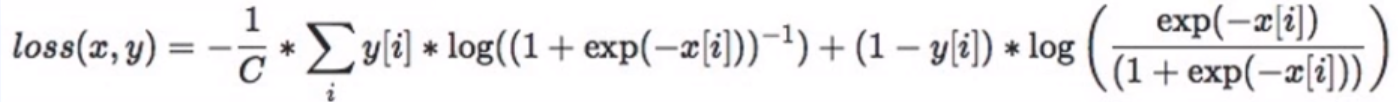

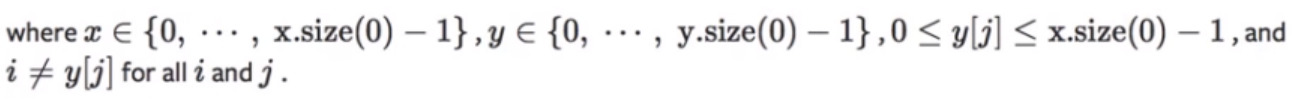

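9.nn.MultiLabelSoftMarginLoss

Function: the multi-label version of SoftMarginLoss; each class is treated as an independent binary problem with target 0 or 1, and the loss is the negative mean over classes of y * log(sigmoid(x)) + (1 - y) * log(1 - sigmoid(x))

Main parameters:

- weight: per-class weights for the loss

- reduction: computation mode, one of none/sum/mean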
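A plain-Python sketch of the per-sample loss, including the optional per-class weights. `multilabel_soft_margin` and `sigmoid` are hypothetical helpers; log(1 - sigmoid(x)) is written as log(sigmoid(-x)), which is the same quantity.

```python
import math
from typing import List, Optional


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def multilabel_soft_margin(x: List[float], y: List[int], weight: Optional[List[float]] = None) -> float:
    """-(1/C) * sum_i w_i * [y_i * log(sigmoid(x_i)) + (1 - y_i) * log(sigmoid(-x_i))]."""
    if weight is None:
        weight = [1.0] * len(x)
    terms = [
        w * (yi * math.log(sigmoid(xi)) + (1 - yi) * math.log(sigmoid(-xi)))
        for xi, yi, w in zip(x, y, weight)
    ]
    return -sum(terms) / len(x)


print(round(multilabel_soft_margin([0.3, 0.7, 0.8], [0, 1, 1]), 4))  # 0.5429
```

Note that the sum is still divided by the number of classes even when some weights are zero.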

Test code:

# ---------------------------------------------- 13 MultiLabel SoftMargin Loss -----------------------------------------
# flag = 0
flag = 1
if flag:
    inputs = torch.tensor([[0.3, 0.7, 0.8]])
    target = torch.tensor([[0, 1, 1]], dtype=torch.float)

    loss_f = nn.MultiLabelSoftMarginLoss(reduction='none')
    loss = loss_f(inputs, target)
    print("MultiLabel SoftMargin: ", loss)

Output: MultiLabel SoftMargin: tensor([0.5429])

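Manual verification:

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    # class 0 is negative (y = 0): log(1 - sigmoid(x)) = log(exp(-x) / (1 + exp(-x)))
    i_0 = torch.log(torch.exp(-inputs[0, 0]) / (1 + torch.exp(-inputs[0, 0])))
    # classes 1 and 2 are positive (y = 1): log(sigmoid(x)) = log(1 / (1 + exp(-x)))
    i_1 = torch.log(1 / (1 + torch.exp(-inputs[0, 1])))
    i_2 = torch.log(1 / (1 + torch.exp(-inputs[0, 2])))
    loss_h = (i_0 + i_1 + i_2) / -3  # negative mean over the 3 classes
    print(loss_h)

Output: tensor(0.5429)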

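10.nn.MultiMarginLoss

Function: computes the multi-class hinge loss

Main parameters:

- p: the exponent, 1 or 2 (default 1)

- weight: per-class weights for the loss

- margin: the boundary value (default 1)

- reduction: computation mode, one of none/sum/mean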
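For each sample, every wrong class i is compared with the true class y through max(0, margin - x[y] + x[i]). A plain-Python sketch of one sample, with a hypothetical helper `multi_margin` that applies the weight of the true class:

```python
from typing import List, Optional


def multi_margin(x: List[float], y: int, p: int = 1, margin: float = 1.0,
                 weight: Optional[List[float]] = None) -> float:
    """Sum over i != y of w[y] * max(0, margin - x[y] + x[i]) ** p, divided by the number of classes."""
    w = 1.0 if weight is None else weight[y]
    total = 0.0
    for i, xi in enumerate(x):
        if i == y:
            continue  # the true class itself is skipped
        total += w * max(0.0, margin - x[y] + xi) ** p
    return total / len(x)


batch = [[0.1, 0.2, 0.7], [0.2, 0.5, 0.3]]
labels = [1, 2]
print([round(multi_margin(x, y), 4) for x, y in zip(batch, labels)])  # [0.8, 0.7]
```

With p=2 the same first sample gives (0.9^2 + 1.5^2) / 3 = 1.02, so larger violations are penalized more heavily.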

Test code:

# ---------------------------------------------- 14 Multi Margin Loss -----------------------------------------
# flag = 0
flag = 1
if flag:
    x = torch.tensor([[0.1, 0.2, 0.7], [0.2, 0.5, 0.3]])
    y = torch.tensor([1, 2], dtype=torch.long)

    loss_f = nn.MultiMarginLoss(reduction='none')
    loss = loss_f(x, y)
    print("Multi Margin Loss: ", loss)

Output: Multi Margin Loss: tensor([0.8000, 0.7000])

Manual verification:

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    x = x[0]  # first sample, whose label is y = 1
    margin = 1
    i_0 = margin - (x[1] - x[0])
    # i_1 = margin - (x[1] - x[1])  # the true class itself is skipped
    i_2 = margin - (x[1] - x[2])
    loss_h = (i_0 + i_2) / x.shape[0]
    print(loss_h)

Output: tensor(0.8000)

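11.nn.TripletMarginLoss

Function: computes the triplet loss, commonly used in face verification: max(d(a, p) - d(a, n) + margin, 0), where a, p and n are the anchor, positive and negative samples

Main parameters:

- p: order of the norm used for the distance, default 2

- margin: the boundary value

- reduction: computation mode, one of none/sum/mean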
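A plain-Python sketch of the triplet formula with an L_p distance. The helpers `lp_distance` and `triplet_margin` are hypothetical; PyTorch's version also adds a small eps inside the distance for numerical stability, which is omitted here.

```python
from typing import List


def lp_distance(a: List[float], b: List[float], p: float = 2.0) -> float:
    return sum(abs(ai - bi) ** p for ai, bi in zip(a, b)) ** (1.0 / p)


def triplet_margin(anchor: List[float], pos: List[float], neg: List[float],
                   margin: float = 1.0, p: float = 2.0) -> float:
    """max(d(anchor, pos) - d(anchor, neg) + margin, 0) for one triplet."""
    return max(lp_distance(anchor, pos, p) - lp_distance(anchor, neg, p) + margin, 0.0)


print(triplet_margin([1.0], [2.0], [0.5], margin=1.0, p=1))  # 1.5
print(triplet_margin([0.0, 0.0], [3.0, 4.0], [6.0, 8.0]))    # 0.0: the negative is already far enough
```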

Test code:

# ---------------------------------------------- 15 Triplet Margin Loss -----------------------------------------
# flag = 0
flag = 1
if flag:
    anchor = torch.tensor([[1.]])
    pos = torch.tensor([[2.]])
    neg = torch.tensor([[0.5]])

    loss_f = nn.TripletMarginLoss(margin=1.0, p=1)
    loss = loss_f(anchor, pos, neg)
    print("Triplet Margin Loss", loss)

Output: Triplet Margin Loss tensor(1.5000)

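Manual verification:

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    margin = 1
    a, p, n = anchor[0], pos[0], neg[0]
    d_ap = torch.abs(a - p)  # L1 distance (p=1) between anchor and positive
    d_an = torch.abs(a - n)  # L1 distance between anchor and negative
    loss = torch.clamp(d_ap - d_an + margin, min=0)  # max(d_ap - d_an + margin, 0)
    print(loss)

Output: tensor([1.5000])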

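12.nn.HingeEmbeddingLoss

Function: measures the similarity of two inputs; commonly used for learning nonlinear embeddings and for semi-supervised learning. The loss is x for y = 1 and max(0, margin - x) for y = -1.

Important: the input x should be the absolute difference (e.g., a distance) between the two inputs

Main parameters:

- margin: the boundary value

- reduction: computation mode, one of none/sum/mean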
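The two cases of the loss fit in one line of plain Python. `hinge_embedding` is a hypothetical helper that assumes labels are exactly 1 or -1 and that x already holds distances.

```python
from typing import List


def hinge_embedding(x: List[float], y: List[int], margin: float = 1.0) -> List[float]:
    """x_i where y_i == 1 (pull similar pairs together), max(0, margin - x_i) where y_i == -1."""
    return [xi if yi == 1 else max(0.0, margin - xi) for xi, yi in zip(x, y)]


print(hinge_embedding([1.0, 0.8, 0.5], [1, 1, -1]))  # [1.0, 0.8, 0.5]
print(hinge_embedding([2.0], [-1]))                  # [0.0]: a dissimilar pair beyond the margin costs nothing
```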

Test code:

# ---------------------------------------------- 16 Hinge Embedding Loss -----------------------------------------
# flag = 0
flag = 1
if flag:
    inputs = torch.tensor([[1., 0.8, 0.5]])
    target = torch.tensor([[1, 1, -1]])

    loss_f = nn.HingeEmbeddingLoss(margin=1, reduction='none')
    loss = loss_f(inputs, target)
    print("Hinge Embedding Loss", loss)

Output: Hinge Embedding Loss tensor([[1.0000, 0.8000, 0.5000]])

Manual verification:

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    margin = 1.
    # third element has y = -1: loss = max(0, margin - x)
    loss = max(0, margin - inputs.numpy()[0, 2])
    print(loss)

Output: 0.5

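13.nn.CosineEmbeddingLoss

Function: measures the similarity of two inputs using cosine similarity: the loss is 1 - cos(x1, x2) for y = 1 and max(0, cos(x1, x2) - margin) for y = -1

Main parameters:

- margin: ranges over [-1, 1]; values in [0, 0.5] are recommended

- reduction: computation mode, one of none/sum/mean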
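A plain-Python sketch of the two cases, using the vectors from the example. `cosine` and `cosine_embedding` are hypothetical helpers; PyTorch additionally guards the norms with a tiny epsilon, which this sketch omits.

```python
import math
from typing import List


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(ai * bi for ai, bi in zip(a, b))
    return dot / (math.sqrt(sum(ai * ai for ai in a)) * math.sqrt(sum(bi * bi for bi in b)))


def cosine_embedding(x1: List[float], x2: List[float], y: int, margin: float = 0.0) -> float:
    """1 - cos(x1, x2) for y == 1; max(0, cos(x1, x2) - margin) for y == -1."""
    c = cosine(x1, x2)
    return 1.0 - c if y == 1 else max(0.0, c - margin)


a, b = [0.3, 0.5, 0.7], [0.1, 0.3, 0.5]
print(round(cosine_embedding(a, b, 1), 4))   # 0.0167
print(round(cosine_embedding(a, b, -1), 4))  # 0.9833
```

Raising the margin for y = -1 tolerates some similarity between dissimilar pairs before any loss is produced.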

Test code:

# ---------------------------------------------- 17 Cosine Embedding Loss -----------------------------------------
# flag = 0
flag = 1
if flag:
    x1 = torch.tensor([[0.3, 0.5, 0.7], [0.3, 0.5, 0.7]])
    x2 = torch.tensor([[0.1, 0.3, 0.5], [0.1, 0.3, 0.5]])
    target = torch.tensor([1, -1], dtype=torch.float)  # one label per pair, shape (N,)

    loss_f = nn.CosineEmbeddingLoss(margin=0., reduction='none')
    loss = loss_f(x1, x2, target)
    print("Cosine Embedding Loss", loss)

Output: Cosine Embedding Loss tensor([0.0167, 0.9833])

Manual verification:

# --------------------------------- compute by hand
# flag = 0
flag = 1
if flag:
    margin = 0.

    def cosine(a, b):
        numerator = torch.dot(a, b)
        denominator = torch.norm(a, 2) * torch.norm(b, 2)
        return float(numerator / denominator)

    l_1 = 1 - (cosine(x1[0], x2[0]))              # first pair, y = 1
    l_2 = max(0, cosine(x1[1], x2[1]) - margin)  # second pair, y = -1
    print(l_1, l_2)

Output: 0.016662120819091797 0.9833378791809082

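14.nn.CTCLoss

Function: computes the CTC (Connectionist Temporal Classification) loss, used for classifying sequential data whose alignment to the label sequence is unknown

Main parameters:

- blank: the blank label (index, default 0)

- zero_infinity: whether to set infinite losses and the associated gradients to zero (default False)

- reduction: computation mode, one of none/sum/mean; with mean, each loss is divided by its target length before averaging over the batch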
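CTC sums the probabilities of every frame-level alignment that collapses to the target (merge repeats, then drop blanks). A minimal plain-Python sketch of the standard forward (alpha) recursion in log space for a single, unbatched sequence is shown below; `ctc_nll` and `log_add` are hypothetical helpers, and no reduction is applied.

```python
import math
from typing import List, Sequence

NEG_INF = float("-inf")


def log_add(a: float, b: float) -> float:
    """Numerically stable log(exp(a) + exp(b))."""
    if a == NEG_INF:
        return b
    if b == NEG_INF:
        return a
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))


def ctc_nll(log_probs: Sequence[Sequence[float]], target: Sequence[int], blank: int = 0) -> float:
    """-log p(target | input) for one sequence; log_probs is T x C (log-softmax output)."""
    ext: List[int] = [blank]  # extended labels: blank, l1, blank, l2, ..., blank
    for label in target:
        ext += [label, blank]
    S = len(ext)
    # alpha[s]: log-probability of all alignments of the frames so far that end at ext[s]
    alpha = [NEG_INF] * S
    alpha[0] = log_probs[0][ext[0]]
    if S > 1:
        alpha[1] = log_probs[0][ext[1]]
    for t in range(1, len(log_probs)):
        new_alpha = [NEG_INF] * S
        for s in range(S):
            acc = alpha[s]                            # stay on the same symbol
            if s >= 1:
                acc = log_add(acc, alpha[s - 1])      # advance by one symbol
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                acc = log_add(acc, alpha[s - 2])      # skip the blank between two different labels
            new_alpha[s] = acc + log_probs[t][ext[s]]
        alpha = new_alpha
    # A valid alignment ends on the last label or on the trailing blank
    total = alpha[-1] if S == 1 else log_add(alpha[-1], alpha[-2])
    return -total  # inf when no alignment fits (the case zero_infinity=True resets to 0)


half = math.log(0.5)
uniform = [[half, half], [half, half]]  # T=2 frames, C=2 classes (0 = blank, 1 = label)
print(ctc_nll(uniform, [1]))            # -log(0.75): alignments "1 1", "- 1" and "1 -"
```

Repeated labels need a blank between them, which is why a target like [1, 1] cannot be aligned to a single frame and yields an infinite loss.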

Test code:

# ---------------------------------------------- 18 CTC Loss -----------------------------------------
# flag = 0
flag = 1
if flag:
    T = 50      # Input sequence length
    C = 20      # Number of classes (including blank)
    N = 16      # Batch size
    S = 30      # Target sequence length of longest target in batch
    S_min = 10  # Minimum target length, for demonstration purposes

    # Initialize random batch of input vectors, for *size = (T,N,C)
    inputs = torch.randn(T, N, C).log_softmax(2).detach().requires_grad_()

    # Initialize random batch of targets (0 = blank, 1:C = classes)
    target = torch.randint(low=1, high=C, size=(N, S), dtype=torch.long)

    input_lengths = torch.full(size=(N,), fill_value=T, dtype=torch.long)
    target_lengths = torch.randint(low=S_min, high=S, size=(N,), dtype=torch.long)

    ctc_loss = nn.CTCLoss()
    loss = ctc_loss(inputs, target, input_lengths, target_lengths)
    print("CTC loss: ", loss)

Output: CTC loss: tensor(7.5385, grad_fn=<MeanBackward0>)