Study Notes | PyTorch Tutorial 20

These notes are drawn mainly from the "深度之眼" course and summarize it for quick reference.

PyTorch version used: 1.2

- SummaryWriter

- add_scalar and add_histogram

- Monitoring model metrics

I. SummaryWriter

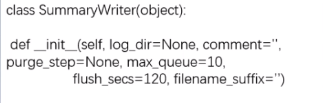

SummaryWriter

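Purpose: provides a high-level interface for creating event files.

Main attributes:

- log_dir: the output folder for the event file

- comment: the folder-name suffix, used only when log_dir is not specified

- filename_suffix: the suffix appended to the event file name

Test code: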

    import numpy as np
    import matplotlib.pyplot as plt
    from torch.utils.tensorboard import SummaryWriter
    from tools.common_tools import set_seed

    set_seed(1)  # set the random seed

    # ----------------------------------- 0 SummaryWriter -----------------------------------
    # flag = 0
    flag = 1
    if flag:
        log_dir = "./train_log/test_log_dir"
        # log_dir is given, so comment has no effect here
        writer = SummaryWriter(log_dir=log_dir, comment='_scalars', filename_suffix="12345678")
        # writer = SummaryWriter(comment='_scalars', filename_suffix="12345678")

        for x in range(100):
            writer.add_scalar('y=pow_2_x', 2 ** x, x)

        writer.close()

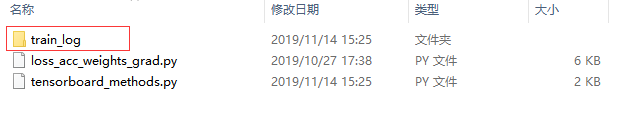

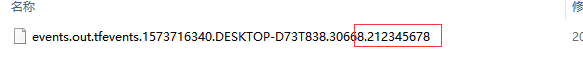

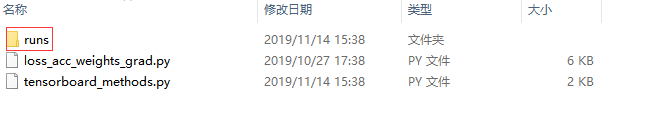

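A new folder and event file appear under the current directory:

The file name suffix matches the setting.

Note that comment='_scalars' does not appear anywhere in the output path. Because log_dir=log_dir was set, comment has no effect. To use comment, create the writer without log_dir: writer = SummaryWriter(comment='_scalars', filename_suffix="12345678")

In that case the event file is written under the default runs folder, and the run folder name ends with _scalars.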
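The interaction between log_dir and comment can be sketched in plain Python. This is only an illustration of the naming rule, not the library's code: the helper name resolve_log_dir is hypothetical, and the default pattern (runs/ followed by the current time, the hostname, and then comment) is assumed from the PyTorch documentation of SummaryWriter.

```python
import os
import socket
from datetime import datetime


def resolve_log_dir(log_dir=None, comment=""):
    """Hypothetical helper that mimics how the output folder is chosen."""
    if log_dir:
        # An explicit log_dir wins; comment is ignored.
        return log_dir
    # Assumed default pattern: runs/<time>_<hostname><comment>
    current_time = datetime.now().strftime("%b%d_%H-%M-%S")
    return os.path.join("runs", current_time + "_" + socket.gethostname() + comment)


explicit = resolve_log_dir("./train_log/test_log_dir", comment="_scalars")
default = resolve_log_dir(comment="_scalars")
print(explicit)  # the folder passed in, without the comment
print(default)   # a folder under runs whose name ends with the comment
```

The default folder name contains a timestamp, so each run without log_dir gets its own folder, whereas a fixed log_dir accumulates event files from repeated runs.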

II. add_scalar and add_histogram

1. add_scalar()

Purpose: records a scalar.

- tag: the name of the chart, which is also its unique identifier

- scalar_value: the scalar to record

- global_step: the x-axis value

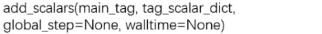

2. add_scalars()

- main_tag: the tag of the chart

- tag_scalar_dict: a dict whose keys are the tags of the individual curves and whose values are the values to record

Test code:

    # ----------------------------------- 1 scalar and scalars -----------------------------------
    # flag = 0
    flag = 1
    if flag:
        max_epoch = 100

        writer = SummaryWriter(comment='test_comment', filename_suffix="test_suffix")

        for x in range(max_epoch):
            # one curve per chart
            writer.add_scalar('y=2x', x * 2, x)
            writer.add_scalar('y=pow_2_x', 2 ** x, x)

            # several curves in the same chart
            writer.add_scalars('data/scalar_group', {"xsinx": x * np.sin(x),
                                                     "xcosx": x * np.cos(x)}, x)

        writer.close()

Output:

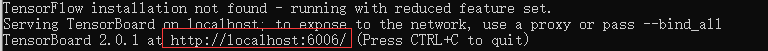

In the current directory, run: tensorboard --logdir=./runs

Then open this address in a browser: http://localhost:6006/

The charts correspond to the following calls:

- writer.add_scalars('data/scalar_group', {"xsinx": x * np.sin(x), "xcosx": x * np.cos(x)}, x)

- writer.add_scalar('y=2x', x * 2, x)

- writer.add_scalar('y=pow_2_x', 2 ** x, x)

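3. add_histogram()

Purpose: records a histogram and a multi-quantile line chart.

- tag: the name of the chart, which is also its unique identifier

- values: the values to build the histogram from

- global_step: the y-axis (the step at which the histogram is recorded)

- bins: how the histogram bins are chosen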
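To make values and bins concrete, the kind of summary a histogram holds can be previewed with numpy.histogram. This is a sketch for intuition only: bins=10 is an illustrative choice rather than SummaryWriter's default, and the variable names are my own. According to the PyTorch documentation, the bins options of add_histogram follow those of numpy.histogram.

```python
import numpy as np

np.random.seed(0)
data_uniform = np.arange(100)              # evenly spread values 0..99
data_normal = np.random.normal(size=1000)  # bell-shaped sample

# bins=10 is an illustrative choice, not SummaryWriter's default.
uniform_counts, uniform_edges = np.histogram(data_uniform, bins=10)
normal_counts, normal_edges = np.histogram(data_normal, bins=10)

print("uniform counts:", uniform_counts)  # flat: every bin holds the same number of values
print("normal counts: ", normal_counts)   # peaked: the middle bins hold the most values
```

A flat set of counts and a peaked set of counts are what the two distributions look like once binned, which is the shape TensorBoard draws for each recorded step.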

Test code:

    # ----------------------------------- 2 histogram -----------------------------------
    # flag = 0
    flag = 1
    if flag:
        writer = SummaryWriter(comment='test_comment', filename_suffix="test_suffix")

        for x in range(2):
            np.random.seed(x)

            data_union = np.arange(100)                # evenly spread values
            data_normal = np.random.normal(size=1000)  # normally distributed values

            # record one histogram per step x
            writer.add_histogram('distribution union', data_union, x)
            writer.add_histogram('distribution normal', data_normal, x)

            # draw the same data with matplotlib for comparison
            plt.subplot(121).hist(data_union, label="union")
            plt.subplot(122).hist(data_normal, label="normal")
            plt.legend()
            plt.show()

        writer.close()

Output:

III. Monitoring model metrics

The methods above are now used to monitor a model during training.

Complete code:

    import os
    import numpy as np
    import torch
    import torch.nn as nn
    from torch.utils.data import DataLoader
    import torchvision.transforms as transforms
    from torch.utils.tensorboard import SummaryWriter
    import torch.optim as optim
    from matplotlib import pyplot as plt
    from model.lenet import LeNet
    from tools.my_dataset import RMBDataset
    from tools.common_tools import set_seed

    set_seed()  # set the random seed
    rmb_label = {"1": 0, "100": 1}

    # hyperparameters
    MAX_EPOCH = 10
    BATCH_SIZE = 16
    LR = 0.01
    log_interval = 10
    val_interval = 1

    # ============================ step 1/5 data ============================
    split_dir = os.path.join("..", "..", "data", "rmb_split")
    train_dir = os.path.join(split_dir, "train")
    valid_dir = os.path.join(split_dir, "valid")

    norm_mean = [0.485, 0.456, 0.406]
    norm_std = [0.229, 0.224, 0.225]

    train_transform = transforms.Compose([
        transforms.Resize((32, 32)),
        transforms.RandomCrop(32, padding=4),
        transforms.RandomGrayscale(p=0.8),
        transforms.ToTensor(),
        transforms.Normalize(norm_mean, norm_std),
    ])

    valid_transform = transforms.Compose([
        transforms.Resize((32, 32)),
        transforms.ToTensor(),
        transforms.Normalize(norm_mean, norm_std),
    ])

    # build the dataset instances
    train_data = RMBDataset(data_dir=train_dir, transform=train_transform)
    valid_data = RMBDataset(data_dir=valid_dir, transform=valid_transform)

    # build the DataLoaders
    train_loader = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)
    valid_loader = DataLoader(dataset=valid_data, batch_size=BATCH_SIZE)

    # ============================ step 2/5 model ============================
    net = LeNet(classes=2)
    net.initialize_weights()

    # ============================ step 3/5 loss function ============================
    criterion = nn.CrossEntropyLoss()  # choose the loss function

    # ============================ step 4/5 optimizer ============================
    optimizer = optim.SGD(net.parameters(), lr=LR, momentum=0.9)  # choose the optimizer
    scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1)  # learning rate decay policy

    # ============================ step 5/5 training ============================
    train_curve = list()
    valid_curve = list()

    iter_count = 0

    # build the SummaryWriter
    writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

    for epoch in range(MAX_EPOCH):

        loss_mean = 0.
        correct = 0.
        total = 0.

        net.train()
        for i, data in enumerate(train_loader):

            iter_count += 1

            # forward
            inputs, labels = data
            outputs = net(inputs)

            # backward
            optimizer.zero_grad()
            loss = criterion(outputs, labels)
            loss.backward()

            # update weights
            optimizer.step()

            # count correct predictions
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()

            # print training information
            loss_mean += loss.item()
            train_curve.append(loss.item())
            if (i+1) % log_interval == 0:
                loss_mean = loss_mean / log_interval
                print("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(
                    epoch, MAX_EPOCH, i+1, len(train_loader), loss_mean, correct / total))
                loss_mean = 0.

            # record the data in the event file
            writer.add_scalars("Loss", {"Train": loss.item()}, iter_count)
            writer.add_scalars("Accuracy", {"Train": correct / total}, iter_count)

        # once per epoch, record the gradients and the weights
        for name, param in net.named_parameters():
            writer.add_histogram(name + '_grad', param.grad, epoch)
            writer.add_histogram(name + '_data', param, epoch)

        scheduler.step()  # update the learning rate

        # validate the model
        if (epoch+1) % val_interval == 0:

            correct_val = 0.
            total_val = 0.
            loss_val = 0.
            net.eval()
            with torch.no_grad():
                for j, data in enumerate(valid_loader):
                    inputs, labels = data
                    outputs = net(inputs)
                    loss = criterion(outputs, labels)

                    _, predicted = torch.max(outputs.data, 1)
                    total_val += labels.size(0)
                    correct_val += (predicted == labels).sum().item()

                    loss_val += loss.item()

            # mean validation loss of this epoch
            loss_val_mean = loss_val / len(valid_loader)
            valid_curve.append(loss_val_mean)
            # report the validation counters (correct_val / total_val), not the training ones
            print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(
                epoch, MAX_EPOCH, j+1, len(valid_loader), loss_val_mean, correct_val / total_val))

            # record the data in the event file
            writer.add_scalars("Loss", {"Valid": loss_val_mean}, iter_count)
            writer.add_scalars("Accuracy", {"Valid": correct_val / total_val}, iter_count)

    writer.close()  # flush the pending events to disk

    train_x = range(len(train_curve))
    train_y = train_curve

    train_iters = len(train_loader)
    # valid_curve holds one loss per validated epoch, so convert its x positions to iterations
    valid_x = np.arange(1, len(valid_curve)+1) * train_iters*val_interval
    valid_y = valid_curve

    plt.plot(train_x, train_y, label='Train')
    plt.plot(valid_x, valid_y, label='Valid')

    plt.legend(loc='upper right')
    plt.ylabel('loss value')
    plt.xlabel('Iteration')
    plt.show()

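Output of the run recorded in these notes. On each Valid line, Acc repeats the training accuracy (that run formatted correct / total there) and Loss is the validation loss summed over the batches: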

Training:Epoch[000/010] Iteration[010/010] Loss: 0.6846 Acc:53.75%

Valid: Epoch[000/010] Iteration[002/002] Loss: 0.9805 Acc:53.75%

Training:Epoch[001/010] Iteration[010/010] Loss: 0.4099 Acc:85.00%

Valid: Epoch[001/010] Iteration[002/002] Loss: 0.0829 Acc:85.00%

Training:Epoch[002/010] Iteration[010/010] Loss: 0.1470 Acc:94.38%

Valid: Epoch[002/010] Iteration[002/002] Loss: 0.0035 Acc:94.38%

Training:Epoch[003/010] Iteration[010/010] Loss: 0.4276 Acc:88.12%

Valid: Epoch[003/010] Iteration[002/002] Loss: 0.2250 Acc:88.12%

Training:Epoch[004/010] Iteration[010/010] Loss: 0.3169 Acc:87.50%

Valid: Epoch[004/010] Iteration[002/002] Loss: 0.1232 Acc:87.50%

Training:Epoch[005/010] Iteration[010/010] Loss: 0.2026 Acc:91.88%

Valid: Epoch[005/010] Iteration[002/002] Loss: 0.0132 Acc:91.88%

Training:Epoch[006/010] Iteration[010/010] Loss: 0.1064 Acc:95.62%

Valid: Epoch[006/010] Iteration[002/002] Loss: 0.0002 Acc:95.62%

Training:Epoch[007/010] Iteration[010/010] Loss: 0.0482 Acc:99.38%

Valid: Epoch[007/010] Iteration[002/002] Loss: 0.0006 Acc:99.38%

Training:Epoch[008/010] Iteration[010/010] Loss: 0.0069 Acc:100.00%

Valid: Epoch[008/010] Iteration[002/002] Loss: 0.0000 Acc:100.00%

Training:Epoch[009/010] Iteration[010/010] Loss: 0.0133 Acc:99.38%

Valid: Epoch[009/010] Iteration[002/002] Loss: 0.0000 Acc:99.38%
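The ten epochs logged above all ran at the initial learning rate. With step_size=10 and gamma=0.1, a step decay only takes effect from epoch index 10 onward, and MAX_EPOCH is 10. The sketch below uses the closed form of a step decay, base_lr * gamma ** (epoch // step_size). The helper name step_lr is hypothetical and this is plain arithmetic, not a call into torch.optim.lr_scheduler.

```python
def step_lr(base_lr, epoch, step_size, gamma):
    """Closed form of a step decay: multiply by gamma every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)


LR, STEP_SIZE, GAMMA, MAX_EPOCH = 0.01, 10, 0.1, 10

# learning rate in effect during each of the epochs 0..9
lrs = [step_lr(LR, epoch, STEP_SIZE, GAMMA) for epoch in range(MAX_EPOCH)]
print(lrs)

# the rate an additional epoch (index 10) would use
print(step_lr(LR, MAX_EPOCH, STEP_SIZE, GAMMA))
```

To see the decay within a 10-epoch run, a smaller step_size would be needed.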

Visualization:

The loss curves in TensorBoard are consistent with the matplotlib plot.
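One reason the validation points line up in both plots is that each is placed on the iteration axis. The matplotlib plot converts epoch indices with train_iters * val_interval, while the TensorBoard curve uses iter_count as global_step. A small sketch, assuming 10 iterations per epoch as shown in the log (Iteration[010/010]):

```python
train_iters = 10   # iterations per epoch, taken from the log
val_interval = 1
max_epoch = 10

# x positions of the validation curve in the matplotlib plot
n_valid = max_epoch // val_interval
valid_x = [k * train_iters * val_interval for k in range(1, n_valid + 1)]

# global_step values of the "Valid" curve: iter_count after each validated epoch
iter_count = 0
logged_steps = []
for epoch in range(max_epoch):
    iter_count += train_iters  # iter_count grows by one per training iteration
    if (epoch + 1) % val_interval == 0:
        logged_steps.append(iter_count)

print(valid_x)
print(logged_steps)
```

Both lists contain the same positions, so the validation points fall at the same places in either view.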

When the loss is small, the gradients of the weights w are small as well, and the same holds for the fully connected layers.
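One way to see why a small loss goes together with small gradients: for softmax followed by cross-entropy (what nn.CrossEntropyLoss computes), the gradient of the loss with respect to the logits is softmax(z) minus the one-hot target. As the prediction becomes confident and correct, both the loss and this gradient shrink, and the weight gradients, which are built from it by the chain rule, shrink too. The sketch below checks this on two hand-picked logit vectors. The function names and the numbers are illustrative and not taken from the training run.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def loss_and_grad_norm(logits, target):
    """Cross-entropy loss and the L2 norm of its gradient w.r.t. the logits."""
    probs = softmax(logits)
    loss = -math.log(probs[target])
    # d(loss)/d(logit_i) = p_i - 1 for the target class, p_i otherwise
    grad = [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]
    return loss, math.sqrt(sum(g * g for g in grad))


unsure = loss_and_grad_norm([0.2, 0.0], target=0)     # barely prefers the right class
confident = loss_and_grad_norm([6.0, 0.0], target=0)  # strongly prefers the right class

print("unsure:    loss=%.4f grad_norm=%.4f" % unsure)
print("confident: loss=%.4f grad_norm=%.4f" % confident)
```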